AMD CPU speculation... and expert conjecture

Page 363


Status: Not open for further replies.

juanrga :

Ranth :

Juan, we do agree that Richland and Piledriver are basically the same, except for power management and the like, right? What does the Richland APU have that the FX doesn't? And specifically, what is it that makes the APU the only one receiving performance increases?

Of the six factors, except for the Piledriver -> Steamroller/HSA one (which won't help in single-threaded code), doesn't everything else apply to FX and older APUs too?


The APU lacks L3 cache. I don't know if there are other differences that AMD has not disclosed. What I do know is the following.

The BF4 benchmark given at the October talk to OEMs shows a Richland APU performing as well as the FX-6350 and FX-8350: the APU delivers 98% and 96% of the performance of each FX, respectively.

The argument that the Suez map is single-player and not well multithreaded doesn't mean anything, because an FX-4350 will not be 30-50% faster than the FX-6350 and FX-8350.

meaningless ... really ...

Ya... multiplayer really favors the APU core in the 750K, doesn't it?

Just to clarify:

750K = Piledriver without L3 cache and no IGP.

4350 = Piledriver FX

6300 = Piledriver FX

8350 = Piledriver FX

Let's compare the 4.5 GHz Piledriver 750K to the 4.7 GHz FX. That only accounts for a roughly 4.5% difference in clock speed; assuming perfect scaling (which it's not), subtract about 4.5% from the results.

4350 = 18% faster than the L3-cacheless APU core

6300 = 47% faster than the L3-cacheless APU core

8350 = 76% faster than the L3-cacheless APU core
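As a rough check on the clock-speed adjustment above, here is a minimal Python sketch. It assumes, as the post does, perfectly linear scaling with clock. Strictly, removing a 4.7 vs. 4.5 GHz gap means dividing by 4.7/4.5 ≈ 1.044 (about 4.4%) rather than subtracting points, although the two give nearly the same answer here. The function name is hypothetical.

```python
def clock_adjusted_advantage(pct_faster: float, fast_clock_ghz: float, slow_clock_ghz: float) -> float:
    """Per-clock advantage (in %) left after dividing out a clock-speed gap.

    Assumes performance scales perfectly linearly with clock speed,
    which (as the post notes) real games do not quite do.
    """
    clock_ratio = fast_clock_ghz / slow_clock_ghz  # 4.7 / 4.5 ≈ 1.044
    return ((1 + pct_faster / 100) / clock_ratio - 1) * 100


# Figures quoted in the post: FX chips at 4.7 GHz vs. the 750K at 4.5 GHz.
for chip, pct in [("FX-4350", 18), ("FX-6300", 47), ("FX-8350", 76)]:
    adjusted = clock_adjusted_advantage(pct, 4.7, 4.5)
    print(f"{chip}: {pct}% raw -> {adjusted:.1f}% after removing the clock gap")
```

With the post's figures this prints 13.0%, 40.7% and 68.5%, so the clock gap explains only a small part of the differences quoted.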

How many times do you have to be proven wrong? The single-player benchmark is meaningless because it doesn't stress the CPU AT ALL. It's strictly a GPU benchmark until you drop below 3.0 GHz.
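The "GPU benchmark until you drop below 3.0 GHz" point can be illustrated with a simple bottleneck model: the reported frame rate is whichever is lower, what the CPU can prepare or what the GPU can render. The numbers below (20 fps per GHz, a 60 fps GPU cap) are hypothetical, chosen only so the crossover lands at 3.0 GHz; they are not measurements from the linked charts.

```python
def observed_fps(cpu_clock_ghz: float, fps_per_ghz: float, gpu_fps_cap: float) -> float:
    """Frame rate under a simple 'slowest stage wins' bottleneck model."""
    # Hypothetical assumption: CPU-side frame preparation scales linearly with clock.
    cpu_fps = cpu_clock_ghz * fps_per_ghz
    # The GPU can never render faster than its own cap, so the lower limit wins.
    return min(cpu_fps, gpu_fps_cap)


# Hypothetical numbers: 20 fps per GHz on the CPU side, 60 fps GPU limit.
for clock in (2.5, 3.0, 3.5, 4.0, 4.5):
    fps = observed_fps(clock, fps_per_ghz=20.0, gpu_fps_cap=60.0)
    bound = "GPU-bound" if clock * 20.0 >= 60.0 else "CPU-bound"
    print(f"{clock:.1f} GHz -> {fps:.0f} fps ({bound})")
```

Every clock at or above 3.0 GHz reports the same 60 fps in this model, so a run in that regime cannot tell a fast CPU from a slow one.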

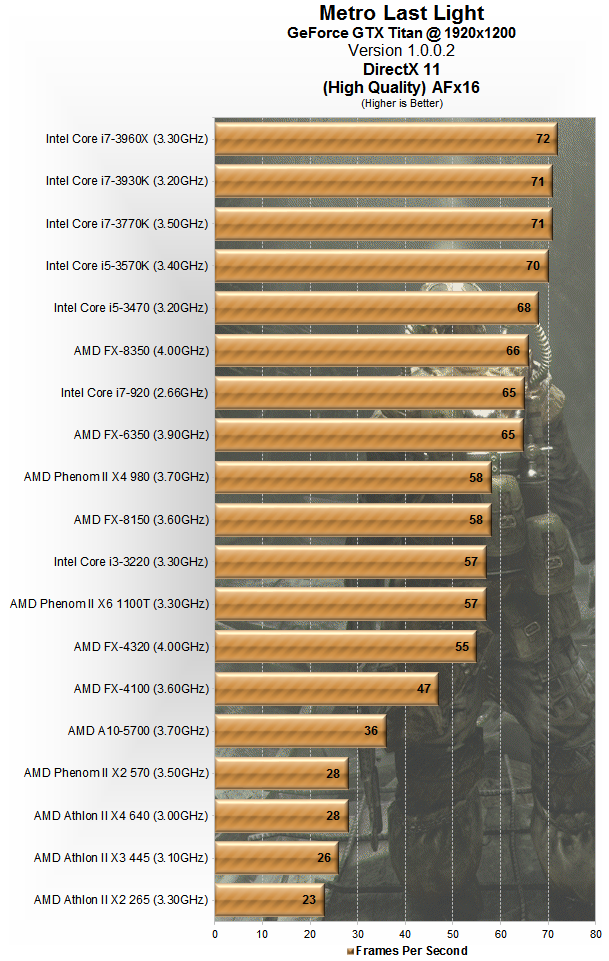

http://static.techspot.com/articles-info/734/bench/CPU_01.png

Anyone attempting to argue the new APUs will be remotely close to a high-end dCPU is deluded. That's just not how CPUs work. The highest-end APU will be about equal to the low-end dCPUs, but with a moderately good GPU bolted on. The entire purpose of an APU isn't to replace dCPUs; it's for budget/compact computing: SFF and similar uses where power and space are limited and an all-inclusive solution works out best.

gamerk316


juanrga :

Interesting data about MANTLE disclosed at APU 13.

http://translate.googleusercontent.com/translate_c?depth=2&hl=es&ie=UTF8&prev=_t&rurl=translate.google.com&sl=fr&tl=en&u=http://www.hardware.fr/news/13445/apu13-amd-mantle-premiers-details.html&usg=ALkJrhgnY37aJJ61I7lorqV5I0j-_HjSSw

The part that caught my attention was how AMD would pair an APU with a dGPU. Previously in this thread two options were discussed. In one, the iGPU was devoted entirely to compute and the dGPU to graphics. In the other, the iGPU and the dGPU would work in tandem on compute, graphics, or both. This is from the talk:


The application also gains the ability to take control of multi-GPU setups and to decide where each command it issues runs. That is why AMD has provided access in Mantle to the CrossFire compositing engine, data transfers between GPUs, etc. This will allow multi-GPU modes that go beyond AFR and are better suited, for example, to using GPU compute in games, or to asymmetric multi-GPU systems such as an APU combined with a GPU. For example, one can imagine the GPU handling rendering while the APU handles post-processing.

Which is exactly where I've been saying things were moving toward, and how I see this all being applied: The APU handles Physics/Compute/OpenCL, while the dGPU focuses on graphics.
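To make the asymmetric split concrete, here is a toy Python sketch of an application-side scheduler that sends each kind of work to a chosen device, following the talk's example (dGPU renders, APU does post-processing) and the physics/compute split described above. This is not the Mantle API; the device names, work categories and routing table are all hypothetical.

```python
from enum import Enum, auto


class Device(Enum):
    IGPU = auto()  # the APU's integrated GPU
    DGPU = auto()  # the discrete graphics card


class Work(Enum):
    RENDER = auto()
    POST_PROCESS = auto()
    PHYSICS = auto()
    COMPUTE = auto()


# Hypothetical policy: the dGPU only renders; everything else goes to the iGPU.
ROUTING = {
    Work.RENDER: Device.DGPU,
    Work.POST_PROCESS: Device.IGPU,
    Work.PHYSICS: Device.IGPU,
    Work.COMPUTE: Device.IGPU,
}


def dispatch(frame_work: list[Work]) -> dict[Device, list[Work]]:
    """Split one frame's work items into per-device queues, keeping their order."""
    queues: dict[Device, list[Work]] = {device: [] for device in Device}
    for item in frame_work:
        queues[ROUTING[item]].append(item)
    return queues


frame = [Work.PHYSICS, Work.RENDER, Work.COMPUTE, Work.POST_PROCESS]
for device, items in dispatch(frame).items():
    print(device.name, [w.name for w in items])
```

A real engine would also have to synchronize the two queues and move data between the GPUs; the sketch only shows the kind of placement decision the quoted talk says Mantle hands to the application.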

8350rocks


palladin9479 :

Anyone attempting to argue the new APUs will be remotely close to a high-end dCPU is deluded. That's just not how CPUs work. The highest-end APU will be about equal to the low-end dCPUs, but with a moderately good GPU bolted on. The entire purpose of an APU isn't to replace dCPUs; it's for budget/compact computing: SFF and similar uses where power and space are limited and an all-inclusive solution works out best.

+1

juanrga :

The APU lacks L3 cache. I don't know if there are other differences that AMD has not disclosed. What I do know is the following.

The BF4 benchmark given at the October talk to OEMs shows a Richland APU performing as well as the FX-6350 and FX-8350: the APU delivers 98% and 96% of the performance of each FX, respectively.

The 'old' argument that FX is 30-50% faster because it has L3 cache doesn't apply here.

The argument that the Suez map is single-player and not well multithreaded doesn't mean anything, because an FX-4350 will not be 30-50% faster than the FX-6350 and FX-8350.


The argument that the optimizations for APUs also work for FX is not reflected in the BF4 benchmark. If the performance of the APU increased enough to match the FX-6350 and the FX-8350, why didn't the performance of the FX chips increase too? The FX chips also ran W8.1 and the same drivers, and used the same 2133 MHz memory and HDD as the APU.

You are quite literally twisting the facts here. It's not the APU's (the 6790K's in that promo slide) performance that increases; it's the FX CPUs' performance that is reduced. Go take a look at a 4-core APU vs. a 6+ core FX in a 7-Zip benchmark for a real CPU performance comparison in mainstream software.

You completely stonewalled the software factor (BF4 single player), even though you incessantly keep mentioning that one benchmark. The game was benched in a way that keeps the GPU busy rendering while the CPU stays mostly idle. That's mainly why the 6+ core FX chips do not show much higher fps. If something CPU-intensive were happening, like multiple enemies, explosions, or a high-action scene (or all of the above), the extra resources in the FX CPUs would kick into action, as they do in multiplayer mode. In single-player mode, the main render thread does most of the work and barely scales to other cores. I hope I explained it properly... people with more in-depth knowledge would be able to explain it better.

In that benchmark, it is a scripted run, to make the APUs look capable and to keep runs consistent. When it's scripted, the 4 cores and the GPU are doing all the work, and the extra cores in the CPUs are idling.
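The single-thread argument above is essentially Amdahl's law. A minimal Python sketch, assuming (hypothetically) that only 30% of the per-frame CPU work parallelizes and the rest stays on the main render thread:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only part of the work runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    # The serial part (e.g. the main render thread) takes the same time on any core count.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workload: 30% of each frame's CPU work can be spread across cores.
PARALLEL = 0.30
for cores in (4, 6, 8):
    print(f"{cores} cores: {amdahl_speedup(PARALLEL, cores):.2f}x")
```

This prints 1.29x, 1.33x and 1.36x: under that assumption going from 4 to 8 cores buys only about 5%, which is how a light, scripted single-player run can make a 4-core APU look close to 6- and 8-core FX chips. A heavier multiplayer load with a larger parallel fraction would separate them.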

noob2222 :

meaningless ... really ...

http://pclab.pl/zdjecia/artykuly/chaostheory/2013/10/bf4/charts/bf4_cpu_geforce.png

Ya... multiplayer really favors the APU core in the 750K, doesn't it?

Just to clarify:

750K = Piledriver without L3 cache and no IGP.

4350 = Piledriver FX

6300 = Piledriver FX

8350 = Piledriver FX

Let's compare the 4.5 GHz Piledriver 750K to the 4.7 GHz FX. That only accounts for a roughly 4.5% difference in clock speed; assuming perfect scaling (which it's not), subtract about 4.5% from the results.

4350 = 18% faster than the L3-cacheless APU core

6300 = 47% faster than the L3-cacheless APU core

8350 = 76% faster than the L3-cacheless APU core

How many times do you have to be proven wrong? The single-player benchmark is meaningless because it doesn't stress the CPU AT ALL. It's strictly a GPU benchmark until you drop below 3.0 GHz.

http://static.techspot.com/articles-info/734/bench/CPU_01.png


You are quite literally twisting the facts here. It's not the APU's (the 6790K's in that promo slide) performance that increases.

Is that what he's been going on about? The fact that they benched the new APU vs. a dCPU with a 630 in it? All that did was show that the APU's iGPU is better than a 630, which I would hope it is, considering how cheap those 630s are.

I should do a bench of my 8350 @ 4.8 and dual 780 Hydros, then point at it and say the APU is useless because it can't keep up.

^^

Is that what he's been going on about? The fact that they benched the new APU vs. a dCPU with a 630 in it? All that did was show that the APU's iGPU is better than a 630, which I would hope it is, considering how cheap those 630s are.

I should do a bench of my 8350 @ 4.8 and dual 780 Hydros, then point at it and say the APU is useless because it can't keep up.

No, not that one, with Kaveri vs. the 4770K + GT 630.

juanrga keeps bringing up slide #13 from the Richland A10-6790K introduction event, where a BF4 single-player benchmark showed the A10-6790K performing similarly to 6+ core FX CPUs. Then he kept bringing it up repeatedly, saying that benchmark is the reason AMD cancelled higher-core-count SR FX CPUs, and that's apparently what AMD 'told' OEM representatives at that event (even though he failed to provide an audio transcript after repeated requests).

Edit: ugh. I don't know why my post ended up in the middle of the page when it should be at the end... the thread display is messy on my end.


de5_Roy :

^^

Is that what he's been going on about? The fact that they benched the new APU vs. a dCPU with a 630 in it? All that did was show that the APU's iGPU is better than a 630, which I would hope it is, considering how cheap those 630s are.

I should do a bench of my 8350 @ 4.8 and dual 780 Hydros, then point at it and say the APU is useless because it can't keep up.

No, not that one, with Kaveri vs. the 4770K + GT 630.

juanrga keeps bringing up slide #13 from the Richland A10-6790K introduction event, where a BF4 single-player benchmark showed the A10-6790K performing similarly to 6+ core FX CPUs. Then he kept bringing it up repeatedly, saying that benchmark is the reason AMD cancelled higher-core-count SR FX CPUs, and that's apparently what AMD 'told' OEM representatives at that event (even though he failed to provide an audio transcript after repeated requests).

Edit: ugh. I don't know why my post ended up in the middle of the page when it should be at the end... the thread display is messy on my end.


What dGPU were they using with the dCPUs? I ask because it wasn't mentioned, and without it the benchmarks are useless. I can make pretty graphs that show a TI-86 beating an i7-3770K; that doesn't mean they'll be worth a damn.

8350rocks


con635 :

Are we not supposed to be getting Sandy Bridge i5 performance???

It should come in approximately where a locked Core i5 SB CPU is in single-threaded tasks.

Overclockability on these new APUs is open to skepticism at this point. I have a feeling we will see Intel-esque variability on a wide scale (i.e., the odds of winning the silicon lottery with the new APUs will be significantly lower than they are with Richland).

Anyone watch the Mantle talk at APU13 just now?

It's open to 'other vendors', e.g. Nvidia, in the future; it removes the CPU bottleneck; it's closer to the PS4 than to DX; and it has better CrossFire/multi-GPU support. TBH most of it went over my head, but it all seemed positive lol

Oh, and it seems it will be on Linux/SteamOS as well.


8350rocks


con635 :

Anyone watch the Mantle talk at APU13 just now?

It's open to 'other vendors', e.g. Nvidia, in the future; it removes the CPU bottleneck; it's closer to the PS4 than to DX; and it has better CrossFire/multi-GPU support. TBH most of it went over my head, but it all seemed positive lol

Oh, and it seems it will be on Linux/SteamOS as well.


The fact that it is Linux/OSX native is the biggest boon. Now you could do AAA titles on all 3 major OS platforms... breaking the monopoly Windows has on high-end PC gaming.

griptwister


Speaking of removing CPU bottlenecks...

I just finished a round of Crysis 3 multiplayer on high settings at 1440p. And yes, I did it with the Phenom II X4 840 and 4GB of RAM, and it ran at a better frame rate than it did at 1080p. But, to be fair, I am using a newer driver than the last time I played that game. I'm about to give BF3 a go. It's absolutely insane how smooth everything runs. Can't imagine what it'll be like once I get the FX-8350 in here (later this month).


tracker45 :

Oh! So Mantle doesn't actually improve game performance (fps, etc.); it just makes games easier for developers to make.

Still good though.


It actually looked like it increased performance nicely across the board: CPU/GPU/VRAM/RAM usage. The other bits are a bonus.

con635 :

tracker45 :

Oh! So Mantle doesn't actually improve game performance (fps, etc.); it just makes games easier for developers to make.

Still good though.


It actually looked like it nicely increased performance,

When did it?

AMD has not shown it yet.

tracker45 :

con635 :

tracker45 :

Oh! So Mantle doesn't actually improve game performance (fps, etc.); it just makes games easier for developers to make.

Still good though.


It actually looked like it nicely increased performance,

When did it?

AMD has not shown it yet.

There was a talk about it at APU13, with no demo or numbers, just how it works. He was talking like it's a WIP, though; maybe we'll only see the start of it with BF4.

Ranth :

But Juan, that is a single case out of many. Okay, so in BF4 it won't be 50%, but what about the million other applications? (Which I, btw, don't think is right either; 10-25% is more likely.)

"It's not reflected" in a canned benchmark by AMD, who is trying to sell APUs. Would it not be odd if AMD chose a benchmark where Richland would perform poorly? That would be terrible marketing. And before you go claiming that "this is how it's going to be", you need more of the picture, meaning more than one benchmark.

Of course it is only a single case, but recall that when I started this discussion, I clearly said that AMD was taking it as a "prototype" for "next gen" games.

Yes, it is a benchmark picked by AMD, who is trying to sell APUs at the expense of the FX chips. They could have compared Richland to an Intel chip, but they compared it to their own FX chips. The FX-4350 was not even mentioned.

noob2222 :

juanrga :

noob2222 :

juanrga :

Disagreeing is fine, but him writing "to save their ass", "a crappy cpu", "and an idle IGP", "weak ass cores"... clearly denotes hate.

Especially when he is plain wrong. As shown in my article about Kaveri, a 3.7 GHz SR CPU performs like an SB/IB i5 in ordinary CPU workloads, losing in the FP-intensive ones but winning in the integer ones. There I assumed a 20% IPC gain over PD, but some late leaks suggest that the final improvement is >30%. Therefore, if the leak is true, scale up the scores I published accordingly (1.30/1.20 ≈ 1.08, i.e. roughly 8% more).

You want to claim I am wrong, yet you only offer your opinion. APU cores are weak; they always have been.

The lowly FX-4100 is 46% faster than the A10-5700 while clocked slower. The FX-4320 is 58% faster, and the FX-8350 and the i5-3470 are 73% faster. Good luck catching that i5.

This is the same architecture. I'd call that a weak ass core any day.

Again: 4320 = 40% faster, 8350 = 56% faster, i5-3470 = 73% faster.

4320 = 52%, 8320 = 83%, i5-3470 = 89%

4320 = 33%, 8320 = 50%, i5-3470 = 62%

... there is plenty more where these came from.

How is Kaveri going to catch the i5? The 8350? ... heck, even the 4320...

Kaveri is not going to be the answer you're hoping it will be. It will not catch the i5-3470 very often (if ever); it may here and there, but that will take some luck "in ordinary CPU workloads".

Tell me exactly how I am "just plain wrong"?

I'd say the A10-5700 is a pretty good starting point for Kaveri figures, since they both clock at 3.7 GHz. In the "ordinary CPU workloads", as you put it, shown above, it already needs a 40% improvement just to reach the 4320.
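Since the thread keeps mixing "X% faster" and "X% behind", note that the two are not symmetric. A minimal Python sketch (the helper name is hypothetical) converting the "faster than the A10-5700" figures quoted above into how far the A10-5700 trails:

```python
def deficit_pct(pct_faster: float) -> float:
    """If chip B is pct_faster % faster than chip A, return how far A trails B, in %."""
    return (1.0 - 1.0 / (1.0 + pct_faster / 100.0)) * 100.0


# "Faster than the A10-5700" figures from the second set quoted above.
for chip, pct in [("FX-4320", 40), ("FX-8350", 56), ("i5-3470", 73)]:
    print(f"{chip}: {pct}% faster -> A10-5700 trails by {deficit_pct(pct):.1f}%")
```

So a 40% lead means the A10-5700 is about 28.6% behind, yet a same-clock successor still needs a full 40% gain to draw level, which is the sense in which the "40%" above should be read.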

Kaveri is not going to be the answer your hoping it will be. It will not catch the i5 3470 very often (if ever,) it may here and there, but that will take some luck "in ordinary cpu workloads"

Tell me just exactly how I am "just plain wrong"?

I already said this to you. Also, you keep using essentially the same logic as in your previous attack on AMD's ARM line.

You miss the estimate of Kaveri CPU performance in the BSN* article, you miss the leaked benchmarks comparing Kaveri to Bulldozer and Piledriver FX, and you miss the BF4 benchmark given by AMD during the October talk, where a Richland APU got 98% of the performance of an FX-6350 and 96% of the performance of the FX-8350 (all three using an R9 280X and playing @ 1080p ultra). I think you also keep missing the subsequent discussion on multiplayer BF4. And you miss that AMD is in the consoles now, with a CPU based on Jaguar cores.

Can you compare the performance of the PS4 CPU to the Kaveri CPU? I can.

Maybe you still don't understand this, but game developers will be offloading the consoles' CPUs and running the heavy computations on the consoles' GPUs. That is why both consoles have GPGPU abilities and HSA support.

You also miss that MANTLE aims to remove some CPU bottlenecks that exist in current gaming technology. This is from the Oxide talk at APU13:

Mantle Unleashed: How Mantle changes the fundamentals of what is possible on a PC. Over the last 5 years, GPUs have become so fast that it has become increasingly difficult for the CPU to utilize them. Developers expend considerable effort reducing CPU overhead and often are forced to make compromises to fully utilize the GPU. This talk will discuss real-world results on how Mantle enables game engines to fully and efficiently utilize all the cores on the CPU, and how its efficient architecture can eliminate the problems of being CPU bound once and for all.
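The idea in the Oxide abstract, spreading the cost of preparing GPU work over all CPU cores, is usually described as recording command lists on several threads and submitting them from one place. The Python sketch below only illustrates that structure: the function names and "command" strings are hypothetical, nothing here is Mantle's actual API, and CPython's GIL means these threads would not truly record in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def record_command_list(worker_id: int, draw_calls: list[str]) -> list[str]:
    """Hypothetical per-thread recording: each worker builds its own list, no shared lock."""
    return [f"worker{worker_id}:{call}" for call in draw_calls]


def build_frame(draw_calls: list[str], workers: int = 4) -> list[str]:
    """Record a frame's commands on several threads, then submit them in a fixed order."""
    # Split the draw calls into one interleaved chunk per worker thread.
    chunks = [draw_calls[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in submission order, so the final order is deterministic.
        command_lists = list(pool.map(record_command_list, range(workers), chunks))
    # Single submission point: concatenate the per-thread lists for the GPU queue.
    submission: list[str] = []
    for command_list in command_lists:
        submission.extend(command_list)
    return submission


frame = build_frame([f"draw{i}" for i in range(8)])
print(frame)
```

The contrast is with an API where all of this recording funnels through one thread, which is the kind of CPU-bound situation the abstract says Mantle aims to eliminate.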

Get over yourself. Very old benchmarks? I specifically looked for games released in 2013... ermago... that's soo friggin old.

A10-5700 = Piledriver without L3 cache

4320 = Piledriver FX

8350 = Piledriver FX

What's soo old about the A10-5700? All Richland brought over Trinity was higher clock speeds; Kaveri brings lower clock speeds. You can't compare the 4.2 GHz Richland to the 3.7 GHz Kaveri and say it's going to be 30% faster on top of the lower clock. 3.7 GHz Piledriver vs. 3.7 GHz Kaveri is a fair comparison.
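The clock-speed objection can be made concrete with the usual first-order model, single-thread performance ≈ IPC × clock. A Python sketch using the 20% and 30% IPC figures from the discussion and the 3.7 GHz / 4.2 GHz clocks; it deliberately ignores turbo, memory and everything else:

```python
def relative_performance(ipc_gain_pct: float, new_clock_ghz: float, old_clock_ghz: float) -> float:
    """First-order estimate: performance ratio = IPC ratio x clock ratio."""
    return (1.0 + ipc_gain_pct / 100.0) * (new_clock_ghz / old_clock_ghz)


for ipc_gain in (20, 30):
    vs_same_clock = relative_performance(ipc_gain, 3.7, 3.7)  # vs. a 3.7 GHz Piledriver APU
    vs_richland = relative_performance(ipc_gain, 3.7, 4.2)    # vs. a 4.2 GHz Richland APU
    print(f"+{ipc_gain}% IPC: {vs_same_clock:.2f}x vs 3.7 GHz PD, "
          f"{vs_richland:.2f}x vs 4.2 GHz Richland")
```

Under this model even a 30% IPC gain leaves a 3.7 GHz Kaveri only about 15% ahead of a 4.2 GHz Richland, which is why the post insists on comparing at the same 3.7 GHz.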

If anything, I'd describe AMD's APU as a GPU with an integrated CPU, while Intel is making CPUs with an integrated GPU.

The reason you're stuck on this "BF4 ERMAGO BENCHMARK" is that it doesn't stress the CPU AT ALL. It's a GPU-bound benchmark. That's why it was chosen; it's a tactic called marketing.

As for the BSN article, it was pulled from your website.

I agree that "very old" was an exaggeration on my part, but it was needed to counterbalance your exaggerated attack on the Kaveri APU (I mean the one where you wrote "ass", "crappy", and "ass").

In the same paragraph I also wrote what I meant by "old" (= "not optimized for AMD architecture"). Sorry, but no, I was not referring to whether something was released in early 2013 or in late 2009.

I am not discussing marketing; you are. I am discussing the technical and economic details behind a company's marketing and execution plans. I am explaining to you that the whole master plan (of which I have given you some elements) is aimed at offloading the CPU more and more. Since you don't get it: offloading the CPU means you push more work onto the GPU. Therefore a GPU-bound benchmark is more representative of how next-gen games will behave than old Intel+Nvidia games are.

I note how you avoided my question about the Jaguar-based CPU in the consoles...

noob2222 :

juanrga :

Ranth :

Juan, we do agree that Richland and Piledriver are basically the same, except for power management and the like, right? What does the Richland APU have that the FX doesn't? And specifically, what is it that makes the APU the only one receiving performance increases?

Of the six factors, except for the Piledriver -> Steamroller/HSA one (which won't help in single-threaded code), doesn't everything else apply to FX and older APUs too?


The APU lacks L3 cache. I don't know if there are other differences that AMD has not disclosed. What I do know is the following.

The BF4 benchmark given at the October talk to OEMs shows a Richland APU performing as well as the FX-6350 and FX-8350: the APU delivers 98% and 96% of the performance of each FX, respectively.

The argument that the Suez map is single-player and not well multithreaded doesn't mean anything, because an FX-4350 will not be 30-50% faster than the FX-6350 and FX-8350.

meaningless ... really ...

Ya... multiplayer really favors the APU core in the 750K, doesn't it?

Just to clarify:

750K = Piledriver without L3 cache and no IGP.

4350 = Piledriver FX

6300 = Piledriver FX

8350 = Piledriver FX

Let's compare the 4.5 GHz Piledriver 750K to the 4.7 GHz FX. That only accounts for a roughly 4.5% difference in clock speed; assuming perfect scaling (which it's not), subtract about 4.5% from the results.

4350 = 18% faster than the L3-cacheless APU core

6300 = 47% faster than the L3-cacheless APU core

8350 = 76% faster than the L3-cacheless APU core

http://static.techspot.com/articles-info/734/bench/CPU_01.png

More of the same. Here you again pick the same benchmark that I commented on before in a reply to you, when you linked it for the first time. And then you repeat the same thing without reading what was said to you. So typical.

blackkstar


What happened to GloFo's 22nm SOI process? There was talk of it back in 2009, but I haven't heard a single thing about it recently.

http://www.advancedsubstratenews.com/2013/08/pcmags-michael-miller-called-ibms-22nm-soi-power8-the-most-fascinating-of-the-high-end-processors/

Did GloFo bail on 22nm SOI and just give it to IBM? Is it possible for IBM to make chips for AMD then?


8350rocks


juanrga :

Ranth :

But Juan, that is a single case out of many. Okay, so in BF4 it won't be 50%, but what about the million other applications? (Which I, btw, don't think is right either; 10-25% is more likely.)

"It's not reflected" in a canned benchmark by AMD, who is trying to sell APUs. Would it not be odd if AMD chose a benchmark where Richland would perform poorly? That would be terrible marketing. And before you go claiming that "this is how it's going to be", you need more of the picture, meaning more than one benchmark.

Of course it is only a single case, but recall that when I started this discussion, I clearly said that AMD was taking it as a "prototype" for "next gen" games.

Yes, it is a benchmark picked by AMD, who is trying to sell APUs at the expense of the FX chips. They could have compared Richland to an Intel chip, but they compared it to their own FX chips. The FX-4350 was not even mentioned.

Of course, look at the die sizes of the APUs... where do you think the margins are better? That 315 mm² die on the FX series makes them money, but APUs selling for ~70% of the price with 60% of the die size make tons of sense. They can get better yields out of the product because they don't need big dies like the ones in FX.
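The margin argument can be sketched with the standard dies-per-wafer approximation and a simple Poisson yield model. Only the 315 mm² FX die, the 60% die-size ratio and the ~70% price ratio come from the post; the 300 mm wafer and the 0.2 defects/cm² density are hypothetical inputs, and the model ignores packaging, test and every other cost.

```python
import math

WAFER_DIAMETER_MM = 300.0   # hypothetical: standard 300 mm wafer
DEFECTS_PER_MM2 = 0.002     # hypothetical defect density (0.2 defects per cm^2)

FX_DIE_MM2 = 315.0                # from the post
APU_DIE_MM2 = 0.6 * FX_DIE_MM2    # "60% of the die size"
APU_PRICE_RATIO = 0.7             # "~70%" of the FX price; FX normalized to 1.0


def dies_per_wafer(die_mm2: float, wafer_mm: float = WAFER_DIAMETER_MM) -> int:
    """Common approximation: gross wafer area / die area, minus dies lost at the round edge."""
    gross = math.pi * (wafer_mm / 2) ** 2 / die_mm2
    edge_loss = math.pi * wafer_mm / math.sqrt(2 * die_mm2)
    return int(gross - edge_loss)


def poisson_yield(die_mm2: float, defects_per_mm2: float = DEFECTS_PER_MM2) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-defects_per_mm2 * die_mm2)


def revenue_per_wafer(die_mm2: float, price: float) -> float:
    """Good dies per wafer times the (relative) selling price of each die."""
    return dies_per_wafer(die_mm2) * poisson_yield(die_mm2) * price


fx = revenue_per_wafer(FX_DIE_MM2, 1.0)
apu = revenue_per_wafer(APU_DIE_MM2, APU_PRICE_RATIO)
print(f"dies/wafer: FX {dies_per_wafer(FX_DIE_MM2)}, APU {dies_per_wafer(APU_DIE_MM2)}")
print(f"APU wafer revenue = {apu / fx:.2f}x FX wafer revenue")
```

With these inputs the smaller die gives about 325 candidate dies per wafer versus 186, plus a higher yield, so even at 70% of the price the APU wafer brings in roughly 1.6x the revenue. A different defect density changes the ratio but, since the smaller die always yields at least as well, not its direction.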

Now, that of course doesn't change the fact that FX is still the better CPU when you need raw horsepower, so I anticipate they will still sell them by the truckload to boutique builders and DIY PC builders.

You talked about 8-core FX chips being 0.4% of the Steam hardware survey, I think it was. When you consider that's out of 50 million people on Steam, most of them on mobile systems, I would expect to see something along those lines... that's still 200k machines with 8-core FX chips using Steam. Considering that many people do not have Steam (myself included), I think the number those figures predict is likely only 10-15% of the actual number of people running such systems in the US. You are also neglecting the very prevalent productivity users with that figure, and I think that plays a large part in their small representation in that sample.
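The Steam-survey arithmetic in that paragraph, worked through in Python. The 50 million users, the 0.4% share and the 10-15% coverage guess are all the post's own estimates (which it then applies to the US), not survey data; integers are used to avoid floating-point rounding.

```python
STEAM_USERS = 50_000_000   # "50 mil people on Steam" (the post's estimate)
FX8_SHARE_BP = 40          # 0.4% of the survey, in basis points (1 bp = 0.01%)
COVERAGE_PCT = (10, 15)    # the post's guess: Steam sees only 10-15% of such systems

steam_fx8 = STEAM_USERS * FX8_SHARE_BP // 10_000
print(f"8-core FX machines on Steam: {steam_fx8:,}")

# If Steam captures only COVERAGE_PCT% of these machines, scale the count back up.
for pct in COVERAGE_PCT:
    print(f"at {pct}% coverage: ~{steam_fx8 * 100 // pct:,} machines in total")
```

That gives 200,000 machines on Steam and, under the 10-15% guess, roughly 1.3 to 2 million in total.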

de5_Roy :

You are quite literally twisting the facts here. It's not the APU's (the 6790K's in that promo slide) performance that increases; it's the FX CPUs' performance that is reduced. Go take a look at a 4-core APU vs. a 6+ core FX in a 7-Zip benchmark for a real CPU performance comparison in mainstream software.

Your "real CPU performance" benchmark would not show how the APU really performs if I want to play BF4 Suez @ 1080p ultra using an R9 280X.

de5_Roy :

Then he kept bringing it up repeatedly, saying that benchmark is the reason AMD cancelled higher-core-count SR FX CPUs, and that's apparently what AMD 'told' OEM representatives at that event (even though he failed to provide an audio transcript after repeated requests).

This is purely and simply false (*). AMD's reasons for not releasing an SR FX line are multiple, and I explained them here before: the transition to APUs, the reorganization of server plans, and lack of demand. I gave details and further explanations for each one.

What I said about that October talk slide was that it clearly reflects AMD's plans to migrate to an APU strategy, and guess what: Lisa Su (AMD vice president) confirmed my thoughts this week during the opening keynote:

Lisa Su, Senior VP & GM of Global Business Units at AMD, delivered the opening keynote and the message was clear: AMD is positioning its Accelerated Processing Units (APUs) -- which combine traditional multi-core CPUs and a discrete multi-core graphics processing unit on a single chip -- to dominate the market from smartphones to servers.

(*) Like when you said that I never wrote out the PFs for the slide, but I did in one of my posts in this thread.

8350rocks


juanrga :

de5_Roy :

Then he kept bringing it up repeatedly, saying that benchmark is the reason AMD cancelled higher-core-count SR FX CPUs, and that's apparently what AMD 'told' OEM representatives at that event (even though he failed to provide an audio transcript after repeated requests).

This is purely and simply false (*). AMD's reasons for not releasing an SR FX line are multiple, and I explained them here before: the transition to APUs, the reorganization of server plans, and lack of demand. I gave details and further explanations for each one.

What I said about that October talk slide was that it clearly reflects AMD's plans to migrate to an APU strategy, and guess what: Lisa Su (AMD vice president) confirmed my thoughts this week during the opening keynote:

Lisa Su, Senior VP & GM of Global Business Units at AMD, delivered the opening keynote and the message was clear: AMD is positioning its Accelerated Processing Units (APUs) -- which combine traditional multi-core CPUs and a discrete multi-core graphics processing unit on a single chip -- to dominate the market from smartphones to servers.

(*) Like when you said that I never wrote out the PFs for the slide, but I did in one of my posts in this thread.

LOL... When did AMD say, specifically, that they were not putting out a new FX successor? Without you reading between the lines and interpreting...

I will have word from AMD about a future FX successor within the next week. So don't go counting your chickens before they hatch again...


Space.com is part of Future plc, an international media group and leading digital publisher.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.