de5_Roy :

juanrga :

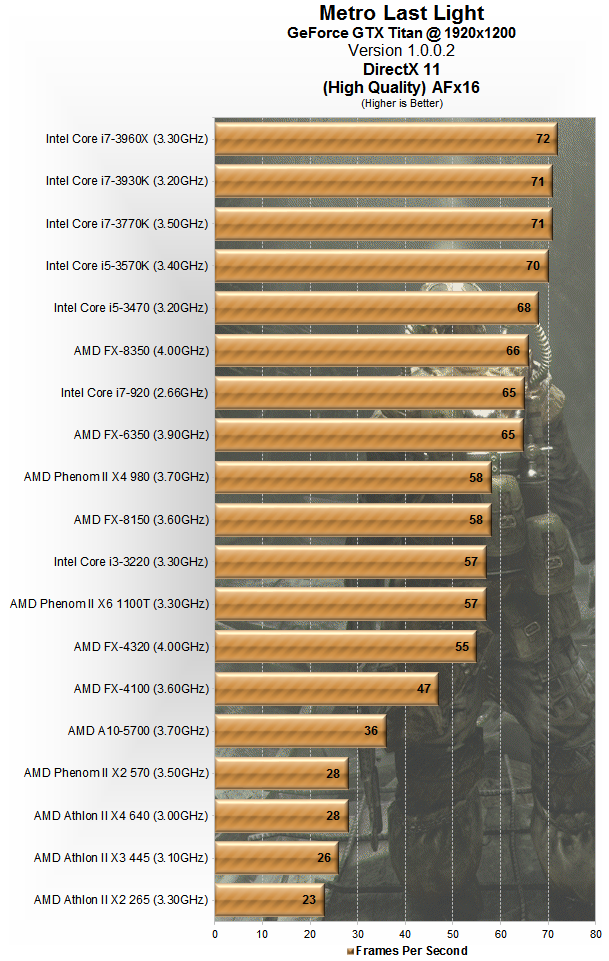

In their talk they were telling OEMs that the new APU gives >90% of the FX-8350 performance at one fraction of the cost. That is what slide #13 says. That doesn't look like promoting the FX line. The FX-4350 wasn't even mentioned by AMD.

talk? there's an audio version? i didn't listen to that. if amd said that the new apu(6790k) gives >90% of fx8350's performance, they were intentionally being vague (>90% of what? what tasks?) to pitch 6790k. benchmarketing at play - largely irrelevant, since independent reviews will reflect the real world perf/price.

^^ bolded the relevant part.

de5_Roy :

i just mentioned a couple of posts ago that they were promoting 6790k (i.e. not fx). that's what the apu part of the slideshow says, filled more with advertising than with facts.

And my reply was that one usually doesn't promote one product at the expense of another from the same company, unless the former is going to replace the latter.

de5_Roy :

the slide never mentioned exact fps, how did you deduce the numbers needed to calculate the percentage? those just show 'greater than' bars.

By measuring the bar lengths and reading them against the axis scale.

de5_Roy :

how did you measure this 'fraction of the cost' from slide #13? the apu has $130 msrp iirc, fx8350 sells for $200 - that's way more than a "fraction". the bf4 bench was done with a discrete card, so the apu's igpu is not a factor.

Evidently the cost does not come from slide #13. Spending $70 less while losing only 8% of the performance @ 1080p agrees with my concept of a 'fraction of the cost'.

I was assuming the iGPU was used together with the dGPU. If it was not used, then this moves the performance per $ further to the APU's side.
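For anyone who wants to check the arithmetic behind 'fraction of the cost', here is a minimal sketch. It only uses the figures quoted in this thread ($130 and $200, and the 8% gap read off the bars for that single BF4 @ 1080p result); the function and variable names are my own, and none of the inputs are independently measured.

```python
# Inputs are the figures quoted in this thread, not independent measurements.
APU_PRICE_USD = 130.0      # APU MSRP as recalled above ("iirc")
FX_PRICE_USD = 200.0       # FX-8350 selling price quoted above
APU_RELATIVE_PERF = 0.92   # "only 8%" behind the FX-8350 in the one BF4 result


def perf_per_dollar(relative_perf, price_usd):
    """Relative performance (FX-8350 = 1.0) divided by price in USD."""
    return relative_perf / price_usd


def summarize():
    """Return price ratio, savings, and perf-per-dollar ratio (APU vs FX)."""
    apu = perf_per_dollar(APU_RELATIVE_PERF, APU_PRICE_USD)
    fx = perf_per_dollar(1.0, FX_PRICE_USD)
    return {
        "price_ratio": APU_PRICE_USD / FX_PRICE_USD,
        "savings_usd": FX_PRICE_USD - APU_PRICE_USD,
        "perf_per_dollar_ratio": apu / fx,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value:.3f}")
```

With those inputs the APU costs 65% of the FX-8350's price and comes out at roughly 1.4 times the performance per $, but only for that one GPU-bound test.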

de5_Roy :

above all, since when is a single, very gpu bound, bf4 single player bench representative of "new APU gives >90% of the FX-8350 performance at one fraction of the cost"(even though it's clearly miscalculated and misinterpreted)?

you have misinterpreted 'slide #13'. you're reading too much into it exacerbated by your own misinterpretation.

In the first place, when I presented the slide for the first time, I said that the point was not to discuss whether the slide was representative or not, because the fact that AMD had made that slide was much more important. We can discuss whether the situation represented in that slide is the general case or only a special case, but this is unimportant. What is important is that the slide reflects AMD's intentions.

Nobody makes a slide like that and presents it to OEMs attending a talk if the goal is to sell lots of FX-6350s and FX-8350s. The slide was clearly made with the goal of emphasizing the APU and deemphasizing the FX line. Now form your own opinion about that.

de5_Roy :

juanrga :

I see two options: either (i) AMD will abandon the FX-4000 and refresh the FX-6000/8000/9000 a la Warsaw, or (ii) AMD will drop the entire FX line and focus on an APU line only.

I am not assuming anything, I asked you a specific question.

you see those options based on a single promo slide from an apu promo slideshow? even if the cpus get phased out, your base is just wrong.

as for answer to your question: you don't know what i believe.

No. I didn't get that from the slide. I already discussed options (i) and (ii) before the slide appeared. That slide agrees with the options.