"I think the biggest issue AMD faces right now in GPUs is game developers supporting nVidia's proprietary technology. For instance, in Fallout 4, if you turn off nVidia god rays on AMD systems, your performance jumps up."

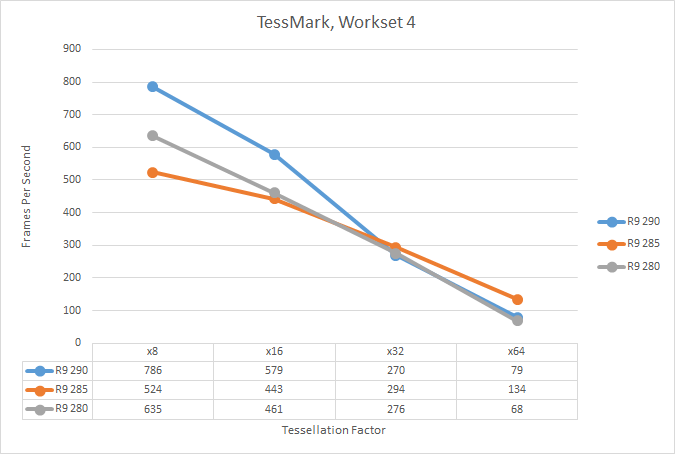

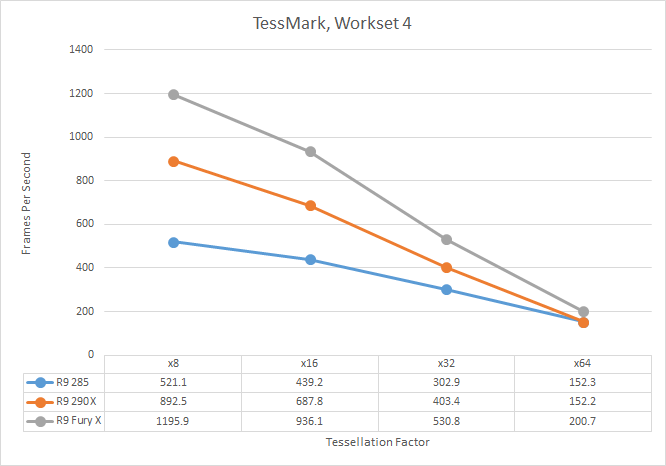

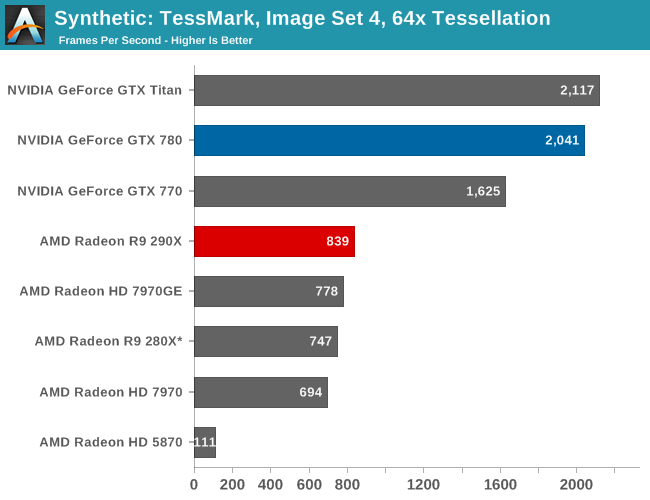

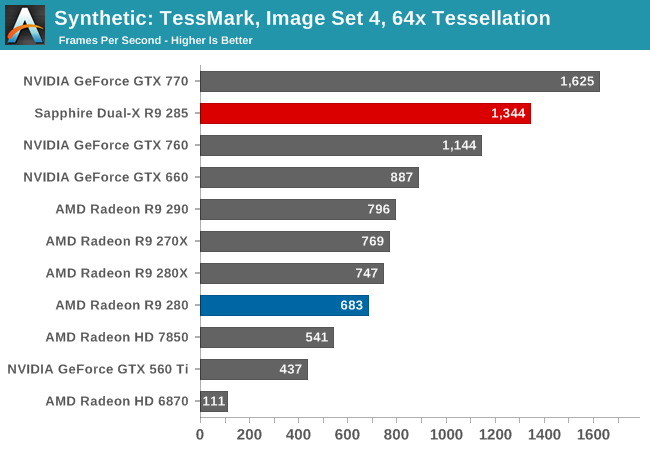

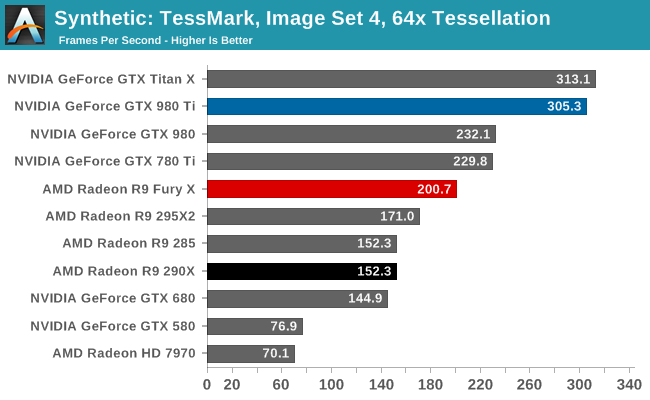

Yes, the Gameworks libraries are proprietary, but there is probably little reason AMD can't optimize its drivers to compensate for heavy tessellation, which is basically the only issue I'm aware of that causes such dramatic performance hits on AMD vs Nvidia. FO4 was released almost 2 years before Vega hit the streets, and AMD still can't get the drivers right for the tessellation-heavy Gameworks libraries. They've known this was coming for 2 years now, and Nvidia wasn't going to stop pushing the tech all of a sudden.

I personally gave them (AMD) some leeway in the early days of GW AAA releases like TW3 and FO4, but it's been long enough for them to have come up with some solution, whether hardware-related or software-related, knowing that more AAA releases were certainly going to use this tessellation-saturating tech in the future.
