"Honestly, I went with the 9 7950X3D after reading the guide here for best gaming CPU. Not sure what to do now!"

I assume you had your reasons to get the 7950X3D over a 7800X3D. If you need MT performance, maybe return the CPU and wait for the 9950X3D, but that might be a while yet.

Review AMD Ryzen 7 9800X3D Review: Devastating Gaming Performance

JarredWaltonGPU

Splendid

"Is there any chance of putting this into an ITX case with a tiny Pure Rock LP type of cooler, or does the increased power draw make this a no-go? Kinda thinking of pulling the trigger."

If you want to do mini-ITX, I'm sure this will work. The main thing is total power use, and while the 9800X3D can pull 120W (maybe a bit more in certain workloads), a decent cooler should be able to handle that. Moreover, the CPU will simply clock down a bit if it's running too hot. The boost clocks aren't guaranteed, but the base clock should be doable even in mini-ITX.

JarredWaltonGPU

Splendid

"Have we ever seen such a gaming performance uplift from one gen to the next in the past? I don't recall myself. This is one impressive CPU.

"So AMD does a +30% gaming performance improvement from one gen to the next, while Intel does a -5%. How times have changed."

No, AMD did a ~15% gen-on-gen gaming performance improvement. The 7800X3D was already ~15% faster than the 14900K, and the 9800X3D is ~15% faster than the 7800X3D. But yes, it's weird to see Intel tank gaming performance so badly with Arrow Lake. I still don't understand why it put the memory controller on a separate tile / chiplet, which increased latency and hurt performance.

JarredWaltonGPU

Splendid

If you're only doing MSFS, there's little reason to have the 7950X3D. I'd try to get the 9800X3D, but I suspect they'll sell out for the next month or two. If you do stuff like application work (video editing?), keeping the 7950X3D is probably fine. I'm really, REALLY curious to see what AMD does with 9950X3D. I hope it doubles down on the 3D V-Cache this time and puts it on both chiplets. I'd be more than happy to take a 300 MHz hit to performance for double the cache, even if it costs $50 more.Appreciate the review. I literally just bought a Ryzen 9 7950X3D. I will be using it primarily for flight simulation at a flight school, with a secondary use as an office PC. Did I make a mistake? Should I return the 9 7950X3D for the 7 9800X3D?

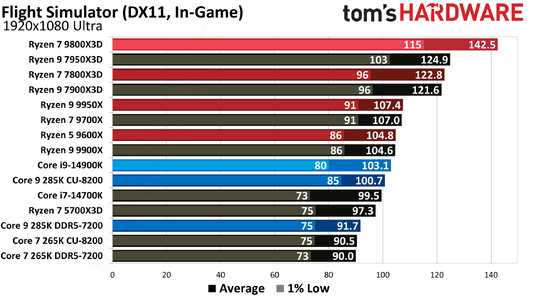

Or are you doing a different simulator than MSFS? Obviously, we don't know how MSFS 2024 will run just yet, though I don't expect it to be less CPU limited than the existing 2020 version. We'll find out in a couple of weeks. But here are Paul's results for MSFS 2020 on these chips:

That's a 14% gain for the 9800X3D, but at 1080p with a 4090. If you're using a lesser GPU, and/or running a higher resolution, the results will of course be different.
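For what it's worth, the 14% figure lines up with the FPS numbers quoted elsewhere in this thread (125 vs. 142 at 1080p). Here is a rough sketch of that arithmetic in Python. It assumes 125 FPS is the 7950X3D result and 142 FPS is the 9800X3D result, which the thread does not label explicitly, and the function names are only illustrative:

```python
def percent_gain(baseline_fps: float, new_fps: float) -> float:
    """Relative speedup of new_fps over baseline_fps, in percent."""
    return (new_fps / baseline_fps - 1.0) * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


# Assumed mapping: 125 FPS = 7950X3D, 142 FPS = 9800X3D (1080p, RTX 4090).
baseline_fps, faster_fps = 125.0, 142.0

gain = percent_gain(baseline_fps, faster_fps)
saved_ms = frame_time_ms(baseline_fps) - frame_time_ms(faster_fps)

print(f"{gain:.1f}% higher frame rate, {saved_ms:.2f} ms less per frame")
```

Seen as frame time, the gap is a little under one millisecond per frame, which is one way to judge whether the difference matters for a given use.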

Elusive Ruse

Estimable

"Honestly, I went with the 9 7950X3D after reading the guide here for best gaming CPU. Not sure what to do now!"

Well, before this release both the 7800X3D and 7950X3D were the best gaming CPUs. If your primary use is gaming, then you should just return your CPU and buy the 9800X3D.

"If you're only doing MSFS, there's little reason to have the 7950X3D. I'd try to get the 9800X3D, but I suspect they'll sell out for the next month or two. If you do stuff like application work (video editing?), keeping the 7950X3D is probably fine. I'm really, REALLY curious to see what AMD does with 9950X3D. I hope it doubles down on the 3D V-Cache this time and puts it on both chiplets. I'd be more than happy to take a 300 MHz hit to performance for double the cache, even if it costs $50 more."

Thank you. The system we put together is as follows:

9 7950X3D

GIGABYTE X870E AORUS Master

ASUS ROG Ryujin III 360 ARGB All-in-one Liquid CPU Cooler with 360mm Radiator

G.SKILL Trident Z5 Neo RGB Series (AMD Expo) DDR5 RAM 64GB

MSI 4090 GPU

Sabrent Rocket 5

JarredWaltonGPU

Splendid

"Thank you. The system we put together is as follows: 9 7950X3D; GIGABYTE X870E AORUS Master; ASUS ROG Ryujin III 360 ARGB All-in-one Liquid CPU Cooler with 360mm Radiator; G.SKILL Trident Z5 Neo RGB Series (AMD Expo) DDR5 RAM 64GB; MSI 4090 GPU; Sabrent Rocket 5"

Do you run MSFS at 1080p ultra, or 1440p ultra, 4K ultra, ultrawide 3440x1440? Those will all impact performance as well. I suspect at 3440x1440 and 4K, there will be less than a ~5% difference between the various top CPUs.

"Do you run MSFS at 1080p ultra, or 1440p ultra, 4K ultra, ultrawide 3440x1440? Those will all impact performance as well. I suspect at 3440x1440 and 4K, there will be less than a ~5% difference between the various top CPUs."

Thank you for all the help! We will be running it at 3840x1600 on an Alienware AW3821DW.

"Honestly, I went with the 9 7950X3D after reading the guide here for best gaming CPU. Not sure what to do now!"

There's always a better processor down the road. You've got an excellent processor now, just use it!

I'm starting to see some "ideal" spec recommendations calling for more than 8 cores.

MSFS 2024 is one of them - the ideal config is supposedly a 7900X instead of a 7800X3D.

It will be interesting to see if a 9900X does better than a 9800X3D when MSFS 2024 comes out.

gggplaya

Splendid

"No, AMD did a ~15% gen-on-gen gaming performance improvement. The 7800X3D was already ~15% faster than the 14900K, and the 9800X3D is ~15% faster than the 7800X3D. But yes, it's weird to see Intel tank gaming performance so badly with Arrow Lake. I still don't understand why it put the memory controller on a separate tile / chiplet, which increased latency and hurt performance."

They did it for power efficiency. I think if you look at Intel's endgame with this architecture, they want to be more like AMD with their chiplet designs. Moving more things off-die and focusing on power efficiency will allow them to make their server processors more like Epyc, where they can use regular desktop dies to make server chips. Separating the memory controller and other functions into tiles will also make this transition easier.

AMD is killing Intel on price in the server market right now. Last quarter AMD brought in more server revenue than Intel, which has Intel panicking. If Intel can use its desktop dies to make server chips, it could lower prices enough to compete with AMD again. The current Intel generation gave zero f's about gaming. They are positioning themselves for their next server products. Mark my words: we will see Intel server chips made from desktop dies in the future.

A 15% performance uplift over the 7800X3D is basically a GPU tier of uplift. That's incredible for a CPU. Well done, AMD.

Intel, please don't quit. We the consumer need you to compete!

RemmRun

Reputable

There certainly are reasons Intel is doing badly economically, and this is one of those reasons, a big one.

"There certainly are reasons Intel is doing badly, economically wise, and this is one of those reasons, a big reason"

This isn't even in the top 100, lol.

JarredWaltonGPU

Splendid

"Thank you for all the help! We will be running it at 3840x1600 on an Alienware AW3821DW"

Yeah, as I said above, for 4K I doubt you'll see much of a delta between the various CPUs. I mean, you could run high settings instead of ultra and get some benefit. There's also DLSS 3 frame generation to smooth things out, which works decently in MSFS since it's not as sensitive to a slight increase in input latency.

I really can't wait to see what the gains are with the 9950X3D. There should theoretically be more to gain from the generational update to the cores AND improving cross-CCD operation, than from the generational update alone.

"Appreciate the review. I literally just bought a Ryzen 9 7950X3D. I will be using it primarily for flight simulation at a flight school, with a secondary use as an office PC. Did I make a mistake? Should I return the 9 7950X3D for the 7 9800X3D?"

I think you'll be fine with the 7950X3D. Don't sweat it. You need a good enough frame rate to run the simulator; you're not competing in a benchmarking contest.

KraakBal

Honorable

Congratulations, AMD. I thought you were trash my whole life until Zen 2 in 2019, and since then I've been rooting for you hard.

With you taking the server market even more by storm, you will be the go-to choice. A lot of software will finally make AMD a priority when it comes to optimisations in general.

JarredWaltonGPU

Splendid

"They did it for power efficiency. I think if you look at Intel's endgame with this architecture, they want to be more like AMD with their chiplet designs. Moving more things off-die and focusing on power efficiency will allow them to make their server processors more like Epyc where they can use regular desktop dies to make server chips. Also separating the memory controller and other functions into tiles again will make this transition easier."

I don't think this has anything to do with power efficiency. It was really just a cost saving measure IMO. The IO tile uses TSMC N6, and since external interfaces (read: memory controllers) don't scale well with smaller process nodes, putting that on the most expensive compute tile (N3B) would have increased costs quite a bit. I mean, not like MASSIVELY, but for bean counters it might have been 5% more expensive overall. That's a big change.

So, really, I do know why (I think) Intel put the memory controller on the IO tile. I just think it was a very odd and shortsighted decision. And in a similar vein, and I've talked with Paul about this as well, it seems absolutely crazy that Intel hasn't opted to put some sort of large cache tile into the mix here. The engineers at Intel have known about the gaming benefits of large caches for about ten years: Broadwell i7-5775C. That thing rocked for gaming, often beating the "superior" Skylake chips. But it was clocked lower and never really intended to be the next big thing.

AMD took the idea and ran with it on Zen 3 X3D, with major benefits. Intel could easily (relatively) do the same thing with the current tiled architectures. Link up one more chip somewhere, a big fat L3 cache of 64MB, and suddenly you have a gaming contender again. My bet? Intel will do this with Nova Lake. It would be foolish not to do it by then, so maybe we could even see an Arrow Lake Refresh that adds a cache tile.

In the grand scheme of CPU architectural design, this should be pretty trivial to do compared to all the stuff that's happened with cores and threads and decode/execute instruction widths. Lion Cove is 8-wide compared to the 6-wide used for the past eight years. I seriously don't get how no one felt adding a bigger cache was justifiable.

"AMD is killing Intel on price in the server market right now. Last quarter they sold more server revenue than Intel, which has Intel panicking. If Intel can use their desktop dies to make server chips, they could lower their prices enough to compete again with AMD. The current Intel generation gave zero f's about gaming. They are positioning themselves for their next server products coming in the future. Mark my words. We will see Intel server chips made from desktop dies in the future."

I agree with the rest, though. Intel needed tiled architecture server chips three years ago. Again, crazy that it hasn't happened already! Not that Ponte Vecchio didn't try (and have plenty of other issues... but that's a GPU, not a CPU).

"Do you run MSFS at 1080p ultra, or 1440p ultra, 4K ultra, ultrawide 3440x1440? Those will all impact performance as well. I suspect at 3440x1440 and 4K, there will be less than a ~5% difference between the various top CPUs."

Even at 1080p, based on your graphic, does anyone think it's worth worrying over whether it gets 125 FPS or 142? Both frame rates are more than good enough and buttery smooth. And as mentioned, it will be nearly impossible to get a 9800X3D within the return window of his current CPU.

thestryker

Judicious

"And in a similar vein, and I've talked with Paul about this as well, it seems absolutely crazy that Intel hasn't opted to put some sort of large cache tile into the mix here. The engineers at Intel have known about the gaming benefits of large caches for about ten years: Broadwell i7-5775C. That thing rocked for gaming, often beating the "superior" Skylake chips. But it was clocked lower and never really intended to be the next big thing."

I think what it tells you is that, from a business perspective, it simply doesn't matter to Intel more than the cost. Adding cache is a non-trivial matter and would require its own run (an even more important issue pre-tiles). The only reason it even happened for AMD is the combination of enterprise (the only reason they were testing stacked cache) and using the same CCDs for both enterprise and client. Now, with Intel going all in on tiles, I do think it's a missed opportunity if they don't explore something along these lines, but it does make sense from a cost standpoint why it hasn't happened yet.

thestryker

Judicious

Glad to see the switch on the cache is opening up higher clocks, as it allows X3D to deliver better general performance than ever before. I will say I'm a bit surprised Turbo Mode doesn't seem to be part of AMD's push along with the launch, but I'm very much looking forward to an examination of it.

If I work for 8 hours, then spend 2-3 browsing or watching some movie, that adds up to around 250-275Wh more energy used on AMD. Then if I find some time to play some game, it may balance out. Not sure about AMD, but Intel can also be power limited to get slightly worse results at much lower power draw.

Idle power draw simply sucks.

gggplaya

Splendid

"I don't think this has anything to do with power efficiency. It was really just a cost saving measure IMO."

Power efficiency is important for thermals and actual wattage usage. If they want to add multiple dies together to make 96+ core server chips, they'll need to get everything down to reasonable levels while still performing great in workloads and keeping clock speeds as high as possible. So for a single chip, I don't see any real world benefits at the moment. But in a multi-die package, I can see the advantages of improving efficiency, which is what makes me think they're doing all this for their server market in the future.

I plan on buying Intel stock when I think they're going to launch their next-gen server chips, contingent on them using desktop chips for their servers. If they do, they'll be price competitive with AMD while offering great performance, which will allow them to claw back the market share AMD has taken.

"Glad to see the switch on the cache is opening up higher clocks as it allows X3D to be better general performance than ever before. I will say I'm a bit surprised Turbo Mode doesn't seem to be part of AMD's push along with the launch, but very much looking forward to an examination of it."

It just disables SMT. I don't know, I don't think it's incredibly useful on an 8-core chip.
