1) Client CPUs, not GPUs, can do vector FP64...

Oops. I'm terribly sorry. That was a simple typo on my part. I meant to type "client GPUs", as in gaming GPUs. I'm well aware that x86 CPUs have supported vector fp64 ever since SSE2.

Just look at the VFMADD132PD instruction in AVX512...

Yes, and thank you for even citing a specific example.

Client GPUs can do FP64 as well,

This is the part where I was trying to say they only do scalar fp64. The way to see this for Nvidia is slightly convoluted. First, you have to find which CUDA Compute Capability a given GPU supports. Then, you can look up the features and properties of that Compute Capability. Let's take the example of their latest Blackwell client and server GPUs, but the same pattern holds for all of the ones they've shipped in the past decade.

According to this, the RTX 50-series (i.e. Blackwell client GPUs) supports CUDA Compute Capability 12.0, while the server Blackwells support 10.0:

In section 17.9.1 of their CUDA Programming Guide, they say that a 10.x SM (Streaming Multiprocessor) contains 128x fp32 pipelines, 64x fp64 pipelines, and 64x int32 pipelines. A warp is their unit of SIMD and is 32-wide. So, that corresponds to an ability for concurrent dispatch of up to 4 warp-level fp32 operations, 2 warp-level fp64 operations, or 2 warp-level int32 operations. They don't say how you can mix and match, but I assume they have only enough bandwidth to/from the vector register file to sustain 4x 32-bit warp ops per cycle.
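To make that arithmetic explicit, here is a minimal sketch that divides each per-SM pipeline count by the 32-wide warp to get the peak number of warp-level instructions started per cycle. It assumes each pipeline completes one lane-operation per cycle and ignores issue-slot and register-file limits; the names are illustrative only.

```python
WARP_SIZE = 32  # Nvidia's SIMD width: threads per warp

# Per-SM pipeline counts for compute capability 10.x, as quoted above.
CC_10X_PIPELINES = {"fp32": 128, "fp64": 64, "int32": 64}


def warp_ops_per_cycle(pipelines: int, warp_size: int = WARP_SIZE) -> float:
    """Peak warp-wide instructions per cycle for one pipeline type.

    Assumes every pipeline completes one lane-operation per cycle and that
    nothing else (issue slots, register-file ports) is the bottleneck.
    """
    return pipelines / warp_size


if __name__ == "__main__":
    for kind, count in CC_10X_PIPELINES.items():
        print(f"{kind}: {warp_ops_per_cycle(count)} warp ops/cycle")
```

Under those assumptions this gives 4.0 for fp32 and 2.0 each for fp64 and int32, matching the figures above.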

Contrast this with what 12.0 supports, in section 17.10.1. There, the number of fp64 pipelines per SM drops to just 2. That's their way of saying there are only two scalar fp64 pipelines per SM and no vector fp64 capability.
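Turning the calculation around shows what 2 fp64 pipelines mean in practice: one warp-wide fp64 instruction has to be fed through them over many cycles. The sketch below assumes 128 fp32 pipelines per SM for compute capability 12.0 (only the fp64 counts come from the text) and, again, one lane-operation per pipeline per cycle.

```python
WARP_SIZE = 32  # threads per warp

# Per-SM pipeline counts. The fp64 figures are the ones quoted in the text;
# the fp32 count for 12.0 is an ASSUMPTION made for this illustration.
SM_CONFIGS = {
    "10.x (server Blackwell)": {"fp32": 128, "fp64": 64},
    "12.0 (client Blackwell)": {"fp32": 128, "fp64": 2},
}


def cycles_per_warp_instruction(pipelines: int, warp_size: int = WARP_SIZE) -> float:
    """Cycles to push all lanes of one warp instruction through `pipelines` lanes."""
    return warp_size / pipelines


def fp32_to_fp64_ratio(cfg: dict) -> float:
    """Peak fp32:fp64 throughput ratio implied by the pipeline counts."""
    return cfg["fp32"] / cfg["fp64"]


if __name__ == "__main__":
    for name, cfg in SM_CONFIGS.items():
        print(
            f"{name}: {cycles_per_warp_instruction(cfg['fp64'])} cycles per fp64 warp op, "
            f"fp32:fp64 = {fp32_to_fp64_ratio(cfg)}:1"
        )
```

Under these assumptions, a client SM needs 16 cycles per fp64 warp instruction (a 64:1 fp32:fp64 ratio), whereas a 10.x SM can start two such instructions per cycle.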

Going through the other Compute Capabilities, you can see a similar pattern when comparing their client and server GPUs.

just that they cut alot of the throughput because CLIENT workloads don't need much of it... but HPC sure does...

Yeah, funnily enough, Intel's Alchemist-generation dGPUs dropped all hardware fp64 capabilities and support it only through emulation. In the Battlemage generation, they brought back true hardware scalar fp64 support.

If you look at their GPU architecture slides from about 4+ years ago, they list fp64 support as being optional for the Xe cores used in both the "Xe-HPG" (High-Performance Gaming) GPUs and Xe-HP (general-purpose cloud GPU). They subsequently cancelled Xe-HP, which was probably the version of the core that actually had scalar hardware fp64, leaving only Xe-HPG and Xe-HPC (AKA Ponte Vecchio, which has full vector fp64). I'm having trouble locating a copy of the slide...

In AMD's case, at least RDNA4 seems to take a different approach. I'm not sure if this is true for all generations of RDNA, as I only checked the latest, but you do find a full contingent of V_..._F64 instructions in their ISA manual:

The specs indicate a 64:1 ratio of fp32 to fp64 TFLOPS. I think they might microcode those Vector F64 instructions to run on a single scalar port per WGP, but I haven't found direct confirmation.

3) 15FLOPs/Byte may not be hard to hit... but what about over 245 FLOPs per Byte which is what you'd need to hit for fully saturating FP16 Matrix on the MI300X... that WILL be a lot harder to hit...

A couple of points about that. The first is that you're often convolving multiple data items against the same weights. The weights can be held in registers, leaving only the data items to be streamed in/out.
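As a minimal sketch of that reuse, assume a 1D FIR-style convolution: the weights stay resident (a local list standing in for registers), a sliding window holds the most recent inputs, and each input sample is read once and each output written once. Counting a multiply plus an add as 2 FLOPs, the FLOPs per streamed byte work out to taps / bytes_per_elem, so intensity grows with the number of weights applied to each data item. The function names are hypothetical.

```python
from collections import deque


def fir_stream(samples, weights):
    """Sliding-window 1D convolution that reads each input sample exactly once.

    `weights` plays the role of values held in registers; weights[0] multiplies
    the newest sample. `window` holds the most recent len(weights) samples,
    zero-padded at the start. Returns (outputs, flop_count), counting each
    multiply-add as 2 FLOPs.
    """
    taps = len(weights)
    window = deque([0.0] * taps, maxlen=taps)
    outputs = []
    flops = 0
    for x in samples:
        window.appendleft(x)  # newest sample at index 0; oldest falls off the end
        acc = 0.0
        for w, v in zip(weights, window):
            acc += w * v
            flops += 2
        outputs.append(acc)
    return outputs, flops


def streamed_intensity(n_samples, taps, bytes_per_elem):
    """FLOPs per byte when only inputs (read) and outputs (written) touch memory."""
    flops = 2 * taps * n_samples
    bytes_moved = 2 * n_samples * bytes_per_elem  # one read + one write per sample
    return flops / bytes_moved
```

For example, `streamed_intensity(1_000_000, 9, 2)` gives 4.5 FLOPs/byte for a 9-tap filter on fp16 data. In this deliberately simple model, reaching 245 FLOPs/byte with fp16 would take 490 taps per item; real kernels also reuse each input across many filters, which raises intensity further.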

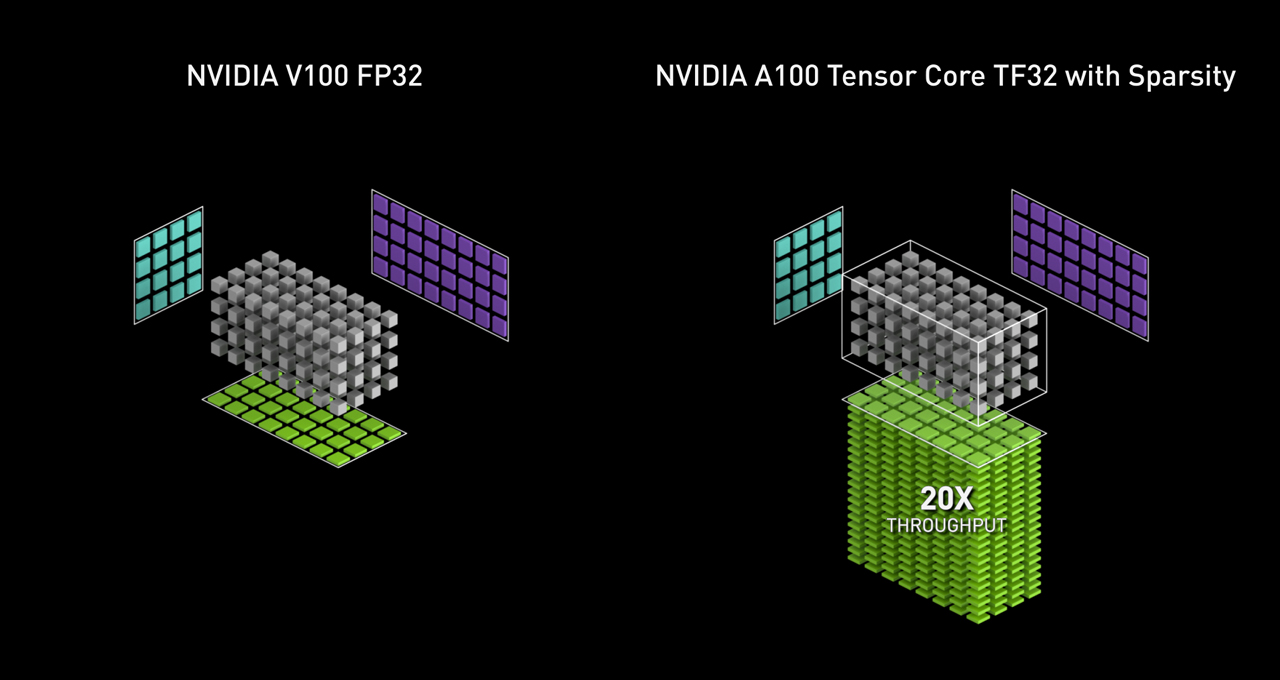

Next, if we're talking about matrix multiply, there's an inherent data efficiency in matrix multiply hardware. For N×N matrices, the amount of compute scales as N³, while the input/output data scales only as N², so the FLOPs available per byte grow with the matrix dimension. This is how "AI" GPUs and NPUs can tout such eye-watering numbers of TOPS and why it was such a game-changer when Nvidia first added Tensor cores.
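A back-of-the-envelope way to see that scaling: multiplying two N×N matrices takes 2N³ FLOPs (one multiply and one add per inner-product term), while, assuming perfect on-chip reuse, each of the 3N² elements of A, B, and C needs to cross the memory interface only once. The sketch below computes the resulting FLOPs per byte; real kernels tile the problem and move somewhat more data than this ideal.

```python
def gemm_flops(n: int) -> int:
    """FLOPs for C = A @ B with N x N matrices (multiply + add per term)."""
    return 2 * n**3


def gemm_min_bytes(n: int, bytes_per_elem: int) -> int:
    """Idealized minimum memory traffic: read A and B once, write C once."""
    return 3 * n**2 * bytes_per_elem


def gemm_intensity(n: int, bytes_per_elem: int) -> float:
    """Ideal arithmetic intensity in FLOPs per byte; grows linearly with n."""
    return gemm_flops(n) / gemm_min_bytes(n, bytes_per_elem)


if __name__ == "__main__":
    for n in (64, 256, 735, 1024):
        print(n, round(gemm_intensity(n, bytes_per_elem=2), 1))
```

With fp16 (2-byte) elements this reduces to N/3 FLOPs per byte, so under these idealized assumptions a matrix of about 735×735 already reaches the 245 FLOPs/byte figure quoted above.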

Silicon area is expensive, which is why I'm rather confident there's no over-capacity of compute power for the key workloads they're targeting.