> As a layman, it's not obvious to me why this is different/better than the current situation of using a pagefile for arbitrary amounts of virtual memory, and having peripherals access memory via DMA. Can you elaborate?

If you wanted a PCIe device to have direct access to the pagefile, the device would need detailed knowledge of the file system, would have to synchronize its accesses with the OS to avoid file system corruption, and would need some way of handling access control lists (and you'd have to trust it to actually follow them). Otherwise, the CPU/OS has to manage all DMA activity. In a coherent CXL environment, everything becomes memory pages, and all the CPU/OS has to do is decide which devices can access which memory pages. The devices can sort the rest out between themselves, with no further CPU/OS intervention, since there is no file system getting in the way.

News AMD Working to Bring CXL Technology to Consumer CPUs


I do 3d art - anything that can get my render engine more memory is a fine thing, and something that I am very interested in.

At the hobbyist level, I was running out of RAM (128GB) a decade ago. The ability to add a TB of RAM to my render engine would really be helpful, since we have too many idiot vendors that believe that 8K texture maps are a good thing.

I don't think that's the problem which CXL aims to solve. If you really need more than 128GB RAM, then you can have that already (8TB on 2-way Epyc/Xeon, 4TB on Xeon W, 2TB on Threadripper Pro, etc). It is true that you need to spend more on the CPU to get that kind of memory, but the small price difference shouldn't be a problem when the cost of RAM is already hovering at $5k/TB. I believe CXL is here for the very few workloads which require more memory than the ceiling (currently sitting at 4TB). There are a few workloads which slow down greatly when you only have 4TB of RAM (compared to 8TB), and CXL could boost their speed by providing the additional 4TB of RAM needed.

> Because NAND isn't as slow as you claim... the main reason NAND SSDs are that slow today are the controllers and housekeeping they do. The wide fast low latency part you can GET is always better than the single supplier technically better part that you cannot get. If you spend more money on the NAND controller in enterprise parts you start approaching Optane performance... that is just all there is to it. Then all Optane has going for it is durability.

Nothing about this is true.

NAND isn't slow simply because SSD controllers are rubbish. I don't know where you got the idea that high-end NVMe controllers are a bottleneck, simply for lack of investment or due to cost savings. Try asking that in an AMA with Phison, Samsung, or Silicon Motion and they'll probably laugh you out of the thread.

NAND is slow (compared with Optane) for multiple reasons. One is that NAND requires an entire block to be read & written at a time, and error correction must be performed. Any advantage that high-performance enterprise SSDs have over consumer models is likely that they run in pseudo-MLC or pseudo-SLC mode, where they use NAND with higher-density cells and simply pack in fewer bits. This lower density makes them faster and also more expensive. And where a few enterprise SSDs have been able to get in the same order of magnitude latency as Optane, a lot of that is because the NVMe/PCIe protocol overhead is simply dominating its latency. Optane DIMMs show just how well it can theoretically perform.

Most likely, enterprise drives use the same controllers and NAND as high-end consumer drives, but with different cell densities, different firmware features enabled, and in different form factors, like U.2.

Anyway, SSDs on CXL will be able to shave off some PCIe overhead, but that still won't make them anywhere near as fast as CXL memory devices[1]. The rumor behind Optane's demise is that it failed to retain a cost advantage over DRAM (not that it wasn't still much faster than NAND[2]). And that opens the door for battery-backed and NAND-backed alternatives, especially since CXL unchains us from the DIMM form factor, for memory devices.

[1] https://www.servethehome.com/compute-express-link-cxl-latency-how-much-is-added-at-hc34/

[2] Intel's P5800X maintains < 6 µs latency @ 99th percentile. No NAND-based SSD can touch that. See: https://www.storagereview.com/review/intel-optane-ssd-p5800x-review

> So a bleeding edge, proprietary packaging RAM module further from the CPU would help the mainstream user how?

Because most of your accesses would go to in-package DRAM stacks, which are potentially much faster.

According to this, CXL might add < 2x the latency of existing DIMMs, which isn't a bad tradeoff if most of your accesses stay in-package.
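To put a rough number on that tradeoff, here is a minimal sketch of the usual weighted-average latency estimate for a two-tier setup. All latency figures are hypothetical placeholders chosen for illustration, not measurements; the only thing taken from the discussion is the "less than 2x a DIMM" upper bound for CXL-attached memory.

```python
def effective_latency_ns(hit_fraction, near_ns, far_ns):
    """Average latency when `hit_fraction` of accesses are served by the near tier."""
    if not 0.0 <= hit_fraction <= 1.0:
        raise ValueError("hit_fraction must be between 0 and 1")
    return hit_fraction * near_ns + (1.0 - hit_fraction) * far_ns


# Hypothetical numbers, for illustration only.
NEAR_NS = 50.0         # assumed in-package DRAM latency
DIMM_NS = 100.0        # assumed conventional DIMM latency
CXL_NS = 2 * DIMM_NS   # worst case implied by "< 2x the latency of existing DIMMs"

for hit in (0.5, 0.9, 0.99):
    avg = effective_latency_ns(hit, NEAR_NS, CXL_NS)
    print(f"{hit:.0%} of accesses in-package: about {avg:.1f} ns on average")
```

With these made-up inputs, the average drops below the assumed DIMM latency once most accesses stay in-package, which is the point of the argument. Real figures depend heavily on the workload and the hardware.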

> Could you imagine if a company that made Optane could put that controller tech into a NAND SSD?

As I explained above, it's not the controller that makes Optane SSDs special. What will replace Optane SSDs, in the CXL-dominated world of tomorrow, is battery/NAND-backed DRAM. You'll get all the speed of DRAM, but with persistence. And if you don't need persistence, then you can simply use regular DRAM modules.

> Guess what? Intel could, and even mixed the two on the same SSD, and NAND was still the same latency.

I didn't follow that product line, but my guess is that they primarily used the Optane memory as a fast write-buffer, whereas most drives use NAND in pseudo-SLC or pseudo-MLC mode. If you dig into write-oriented benchmarks, you'll probably see the benefit of this approach. I'm not saying it's worth the added cost, but to properly assess them, you must understand where they're trying to add value.

> As a layman, it's not obvious to me why this is different/better than the current situation of using a pagefile for arbitrary amounts of virtual memory, and having peripherals access memory via DMA. Can you elaborate?

Memory tiering scales way better. Just look at who is putting TBs of DRAM in servers, and ask why they don't simply use pagefiles instead.

> I don't think that's the problem which CXL aims to solve. If you really need more than 128GB RAM, then you can have that already (8TB on 2-way Epyc/Xeon, 4TB on Xeon W, 2TB on Threadripper Pro, etc).

The problem with today's approach to scaling memory capacity is that you reach a point where you have to add more CPUs, just so they can host more DRAM (because DRAM is directly coupled to a CPU, currently). CXL enables "disaggregation", where you can scale out memory independently from the number of CPUs. It can also be shared by multiple CPUs, to the extent that people are even talking about having separate physical boxes of DRAM in a rack.

For AMD, the "add a CPU" approach becomes a problem, since they only scale up to dual-CPU configurations. Also, with the number of cores their CPUs are now having (soon: 96 and 128 cores, more to follow), there's probably not another good reason for customers to go beyond dual-CPU configurations.

> I believe CXL is here for the very few workloads which require more memory than the ceiling (currently sitting at 4TB).

CXL gives other devices equal access to memory, such as network controllers (think: 100+ Gigabit) and compute accelerators. Because CXL is cache-coherent, they'll no longer need to route memory requests through a CPU, which will reduce latency and use interconnect bandwidth more efficiently.

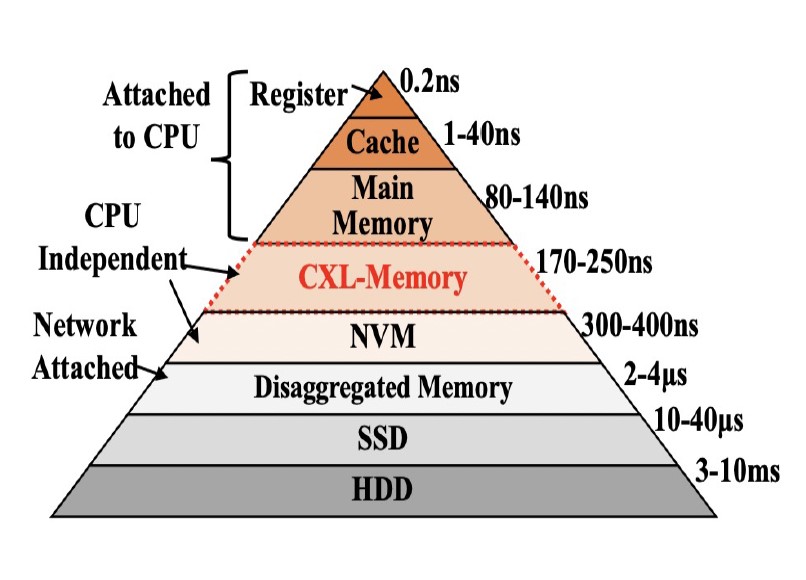

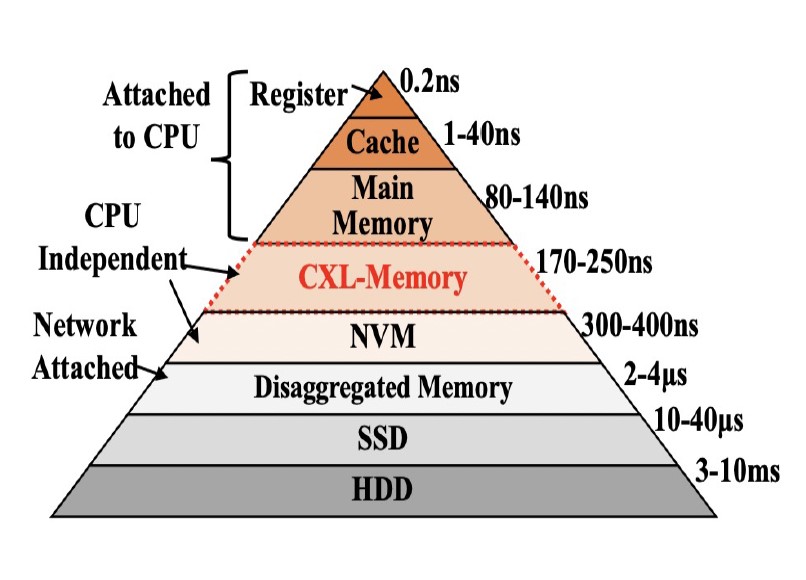

> Because most of your accesses would go to in-package DRAM stacks, which are potentially much faster.

That pyramid doesn't factor in any hardware latency for the NVM. It just shows DRAM in CXL running at slightly better latency than Optane. And some magical unnamed NVM that isn't the lower SSD or HDD.


How long do these CXL RAM modules stay persistent?

And are they cheaper than regular RAM?

Also, that Optane/SSD hybrid used crappy QLC, but that was in response to someone else.

> One is that NAND requires an entire block to be read & written at a time, and error correction must be performed.

Not quite. You have to erase one block at a time, but each block is sub-divided into pages and you can write one page at a time.

You can also program one cell at a time as long as the changes only affect cells that were still in the 'erased' (highest charge) state, which is handy for the SSD's internal book-keeping for things like marking used pages, deleted and defective pages, write/erase counting for write leveling, etc.
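The erase-per-block, program-per-page behavior can be sketched as a toy model. This is an illustration under stated assumptions, not how any real controller works: the class name, the page and block sizes, and the convention that an erased cell reads back as a 1 bit (so programming can only clear bits) are all choices made for the example.

```python
class NandBlock:
    """Toy model of one NAND block: erase the whole block, program one page at a time."""

    ERASED = 0xFF  # assumption: erased cells read back as 1 bits

    def __init__(self, pages=4, page_size=8):
        self.pages = [bytearray([self.ERASED] * page_size) for _ in range(pages)]

    def program(self, page_index, data):
        """Program one page. Bits may only go from 1 (erased) to 0."""
        page = self.pages[page_index]
        if len(data) != len(page):
            raise ValueError("data must be exactly one page long")
        for old, new in zip(page, data):
            if new & ~old:  # a bit that is 0 in the page but 1 in the new data
                raise ValueError("cannot set a bit back to 1 without erasing the block")
        page[:] = data

    def erase(self):
        """Erase is only possible for the whole block, never a single page."""
        for page in self.pages:
            page[:] = bytes([self.ERASED]) * len(page)


block = NandBlock()
block.program(0, bytes([0xF0] * 8))      # first write to an erased page
block.program(0, bytes([0x30] * 8))      # allowed: only clears more bits
try:
    block.program(0, bytes([0xFF] * 8))  # would need bits to go back to 1
except ValueError as err:
    print("rejected:", err)
block.erase()                            # resets every page in the block
```

The second `program` call mirrors the book-keeping trick described above: a page can be updated in place as long as the update only touches cells that are still in the erased state.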

> I don't think that's the problem which CXL aims to solve. If you really need more than 128GB RAM, then you can have that already (8TB on 2-way Epyc/Xeon, 4TB on Xeon W, 2TB on Threadripper Pro, etc).

I take it you missed the "hobbyist" portion of my statement. Going used, Epyc is the cheapest way (7001-based systems can be had for 2K used). If I wasn't taking care of my mother, this is the route I would go, and my current system (5700/RTX 3060) would just be my gaming boxen.

The latest version of the Cycles fork in Poser will use multiple GPUs. Right now I am watching the prices of the 3060 (12GB). Two of those would get me over 7,000 CUDA cores and 24GB of VRAM for less than an additional $300 on top of my current system, and cut my render time by about a third, according to the developers. Going the dual RTX 3080 route would get me over double the CUDA cores (just over 17,000), but I'd also need to factor in the cost of a 1200 watt power supply.

> As a layman, it's not obvious to me why this is different/better than the current situation of using a pagefile for arbitrary amounts of virtual memory, and having peripherals access memory via DMA. Can you elaborate?

CXL is at the hardware bus level and the pagefile is at the OS level. Because the OS has to go through all of the steps necessary to move things around, there is a pretty significant amount of latency in the process. CXL creates about as close to a direct hardware link as you can get, and each of the devices can talk not only to its single interface point, but to each other.

In addition to this, the protocol provisions the ability to have accelerators in the mix as well. So rather than the OS having to decide what should be in RAM and what should be in the pagefile, in theory a dedicated hardware accelerator could offload most of this operation without direct CPU interaction, and it all just works as a self-maintaining memory pool, even when mixing RAM and SSDs. You are still going to have a cache routine, but it would be offloaded.

Throw an FPGA in the mix as the accelerator and you set yourself up for the ability to software-define and tweak the interaction on a programmable level at hardware speeds. So depending on your application's needs, the pool and accelerator could be optimized differently on the same hardware on the fly.

On consumer machines this isn't that big of a deal. But in server applications running specific loads, the ability to optimize the memory structure from software and define multi-tiered pools on the fly would be a huge performance benefit. Taken a step further, it could also allow hot-swapping in and out of the memory pool. Most of this stuff is pretty much a moot point for a normal consumer, but for a server that needs a high nine count it could reduce some of the redundancy necessary today, or add 9s in the same setup.
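The "cache routine" behind such a pool can be sketched in a few lines. This is a software toy under loud assumptions: a real implementation would live in hardware or firmware, and the class name, the two-tier layout, and the least-recently-used policy are all hypothetical choices for illustration, not anything defined by CXL.

```python
from collections import OrderedDict


class TieredPool:
    """Toy two-tier page pool: a small fast tier in front of a larger slow tier."""

    def __init__(self, fast_capacity):
        if fast_capacity < 1:
            raise ValueError("fast_capacity must be at least 1")
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # page id -> data, least recently used first
        self.slow = {}             # page id -> data

    def write(self, page_id, data):
        """Writes always land in the fast tier."""
        self.slow.pop(page_id, None)
        self.fast[page_id] = data
        self.fast.move_to_end(page_id)
        self._demote_if_full()

    def read(self, page_id):
        """Reads promote a slow-tier page into the fast tier."""
        if page_id in self.fast:
            self.fast.move_to_end(page_id)
            return self.fast[page_id]
        data = self.slow.pop(page_id)  # raises KeyError for an unknown page
        self.fast[page_id] = data
        self._demote_if_full()
        return data

    def _demote_if_full(self):
        """Push the least recently used pages down to the slow tier."""
        while len(self.fast) > self.fast_capacity:
            victim, data = self.fast.popitem(last=False)
            self.slow[victim] = data


pool = TieredPool(fast_capacity=2)
for page in ("a", "b", "c"):
    pool.write(page, f"data-{page}")   # "a" gets demoted when "c" arrives
pool.read("a")                         # "a" is promoted, "b" is demoted
print("fast tier:", list(pool.fast), "slow tier:", list(pool.slow))
```

Swapping the policy in `_demote_if_full` is the software analogue of re-tuning the accelerator for a different workload on the same hardware.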

> They are obviously trying to find or create a market among people by selling things that 90+% of people have no real need for. The truth is, we all know this, that people are trained to buy with excess.

Or, it could be they're expecting CXL to eventually replace PCIe. CXL being the more versatile standard, it's a little hard to see why you'd need both.

> Or, it could be they're expecting CXL to eventually replace PCIe. CXL being the more versatile standard, it's a little hard to see why you'd need both.

Kind of hard to get rid of PCIe when CXL's hardware enumeration, initialization and register-based accesses still need PCIe. CXL is basically a low-latency alt-mode and PCIe is the "PCIe-PD" used to set up and manage the alt-mode.

George³

> Or, it could be they're expecting CXL to eventually replace PCIe. CXL being the more versatile standard, it's a little hard to see why you'd need both.

How? They used a physical interface, controllers and slots patented by other companies.

> How? They used a physical interface, controllers and slots patented by other companies.

Who did? CXL? Its patents should belong to a pool managed by the CXL consortium, and not be hard or too costly for implementers to license.

George³

> Who did? CXL? Its patents should belong to a pool managed by the CXL consortium, and not be hard or too costly for implementers to license.

I think I have sufficiently expressed exactly what I meant. And maybe I'm wrong.
