TerryLaze

Titan

Yes, that's what AMD is targeting... What was your point here, even? That AMD makes CPUs for 20 years ago?

That is just 100% false... What about the literal 30 years of games from 1970-2000, when there was literally only 1 core in PCs, so that was all that was programmed for? There were very few games that used more than 1 thread from 2000-2010ish.

The 7950X has a max single-core boost of 5.7 GHz according to AMD; in games it runs at 5-5.1 GHz, so you are the one that has no clue what you are talking about.

As far as the comment on AMD and how their CPUs clock themselves, you also have not a clue what you are talking about.

Yeah, when they can keep that single-core speed going while the whole CPU is doing work. That has been my argument the whole time.

Dude, you are really reaching here.

What everyone is saying, and what you are trying very hard not to hear, is that CPUs that scored highly in single-threaded benchmarks have historically been better at gaming.

How many do I have to look at for you to make up your mind? Just asking to know how long this will go on for.

While games may use multiple cores, most of the work is done on just a couple; check out your core loads next time you are gaming and you'll see what I mean.

Here is the most single-thread-biased modern game I could find, and it runs 16 threads that carry a decent amount of load in addition to the main thread.

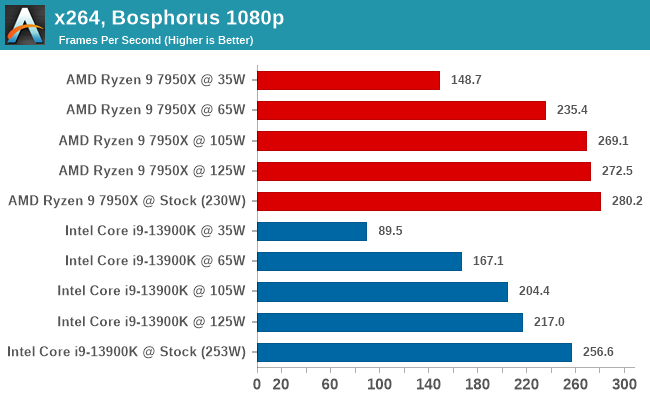

This will keep all cores busy enough that they do not go to sleep, which forces the CPU into its all-core clock speed.
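
If you want to put a number on "busy enough" from your own core-load readout, here is a rough sketch. It only counts how many threads sit at or above a load threshold. The function name `count_busy_threads`, the 10% threshold, and the sample loads are all made up for illustration; none of them come from the screenshot or from AMD's actual boost logic, which looks at far more than load.

```python
def count_busy_threads(loads_percent, busy_threshold=10.0):
    """Count logical threads whose load is at or above busy_threshold.

    loads_percent: per-thread utilization values in percent (0-100),
    e.g. typed in from a monitoring tool's per-core readout.
    busy_threshold: hypothetical cutoff for "too busy to go to sleep".
    """
    return sum(1 for load in loads_percent if load >= busy_threshold)


if __name__ == "__main__":
    # Hypothetical readout: one heavy main thread plus many lighter ones.
    sample_loads = [85, 30, 25, 22, 20, 18, 18, 15, 15, 14, 12, 12, 11, 11, 10, 10, 4]
    busy = count_busy_threads(sample_loads)
    print(f"{busy} of {len(sample_loads)} threads are at or above the threshold")
```

The more threads that clear the cutoff, the less likely the CPU is sitting in a state where only one or two cores are awake. Treat it as a quick sanity check on your own readings, nothing more.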