First of all, my question is: do you guys know any programs that show actual VRAM usage? I'm not talking about programs like MSI Afterburner, Aorus Engine, or even the Task Manager, because they show allocation, not usage.

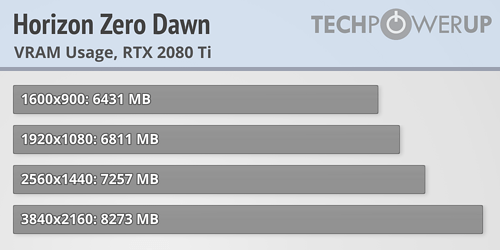

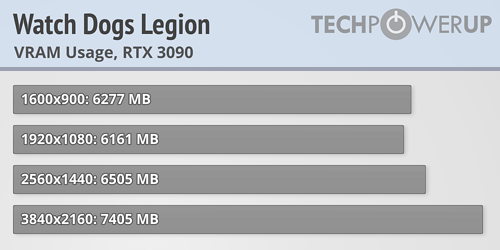

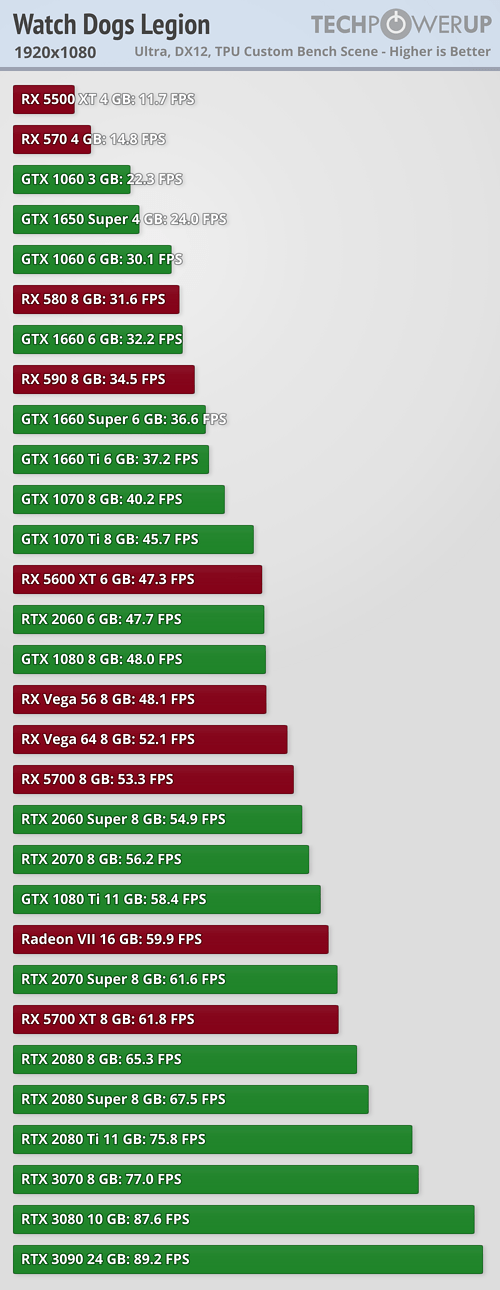

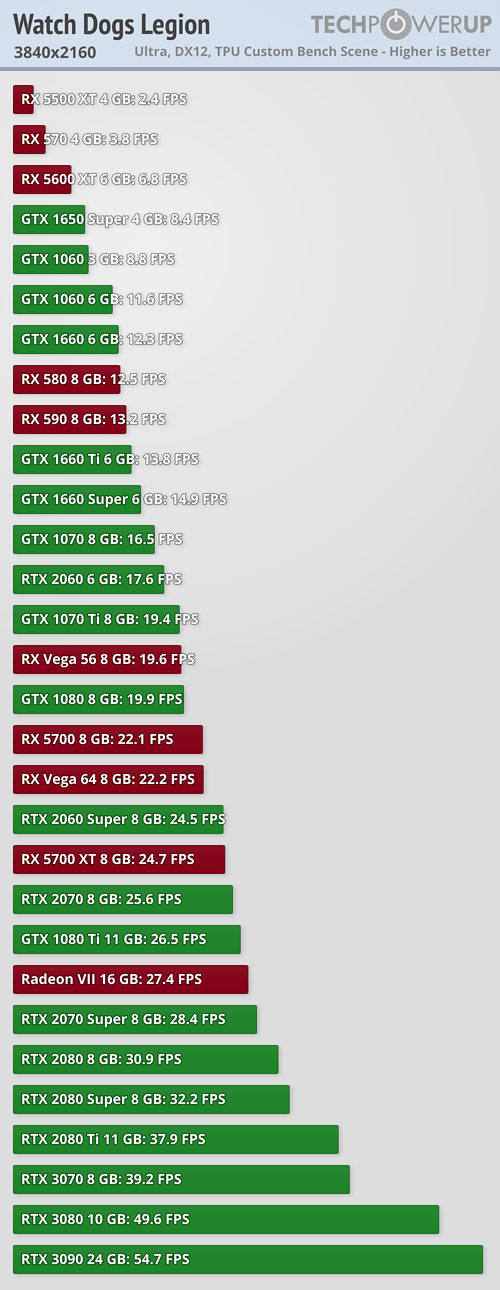

On a side note, a lot of people are worried that the 8GB or 10GB of VRAM that the 3070 and 3080 come with is not enough for 1440p or 4K, because others keep repeating things like "8GB of VRAM is not enough even at 1440p, Doom Eternal uses 9GB of VRAM at 1440p and 11GB at 4K", which is just crazy. They scare people with VRAM allocation numbers, maybe without even knowing themselves that VRAM allocation is NOT VRAM usage. A game can allocate 8GB of VRAM on startup but only use around 5 to 6GB while playing.
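To make the allocation point concrete, here is a rough Python sketch for NVIDIA cards that reads the same kind of number those overlays report. It assumes `nvidia-smi` is installed and on your PATH. The function names are just ones I made up for illustration. As far as I know, the `memory.used` field is memory allocated on the card, not what a game is actively touching, so treat the result as an upper bound rather than real usage.

```python
import subprocess

# Standard nvidia-smi query flags; values come back in MiB without units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_line(line):
    """Parse one CSV line such as '8123, 10240' into (allocated_mib, total_mib)."""
    used, total = (int(part.strip()) for part in line.split(","))
    return used, total


def allocated_fraction(line):
    """Fraction of the card's VRAM that is allocated (not necessarily in use)."""
    used, total = parse_memory_line(line)
    return used / total


def read_gpu_memory():
    """Run nvidia-smi and return one (allocated_mib, total_mib) tuple per GPU.

    Hypothetical helper: requires an NVIDIA driver with nvidia-smi available.
    """
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return [parse_memory_line(l) for l in result.stdout.splitlines() if l.strip()]
```

Calling `read_gpu_memory()` while a game is running gives you the allocated figure per GPU. It still does not answer the original question of what the game really needs, which is exactly the problem with quoting these numbers.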

People need to chill out.
