Achoo22:

That is a very generous offer, thank you. I'm running an HD 6850 with an i5-2500. Upgrading to the beta drivers you mentioned in the article, dropping everything to the lowest possible settings at 720p, and shutting down every nonessential background task made the game playable, but I'm still seeing severe hitching. Especially while driving, the action periodically grinds almost to a standstill. When this happens, my hard drive activity light is glowing like the sun, and Resource Monitor shows a ton of hard page faults. I decided to bite the bullet and pick up more RAM to see if it helps. I wish I had seen your message before placing my order, but maybe the extra headroom is still a good idea; I had originally planned to hold off on any upgrades until building my next system.

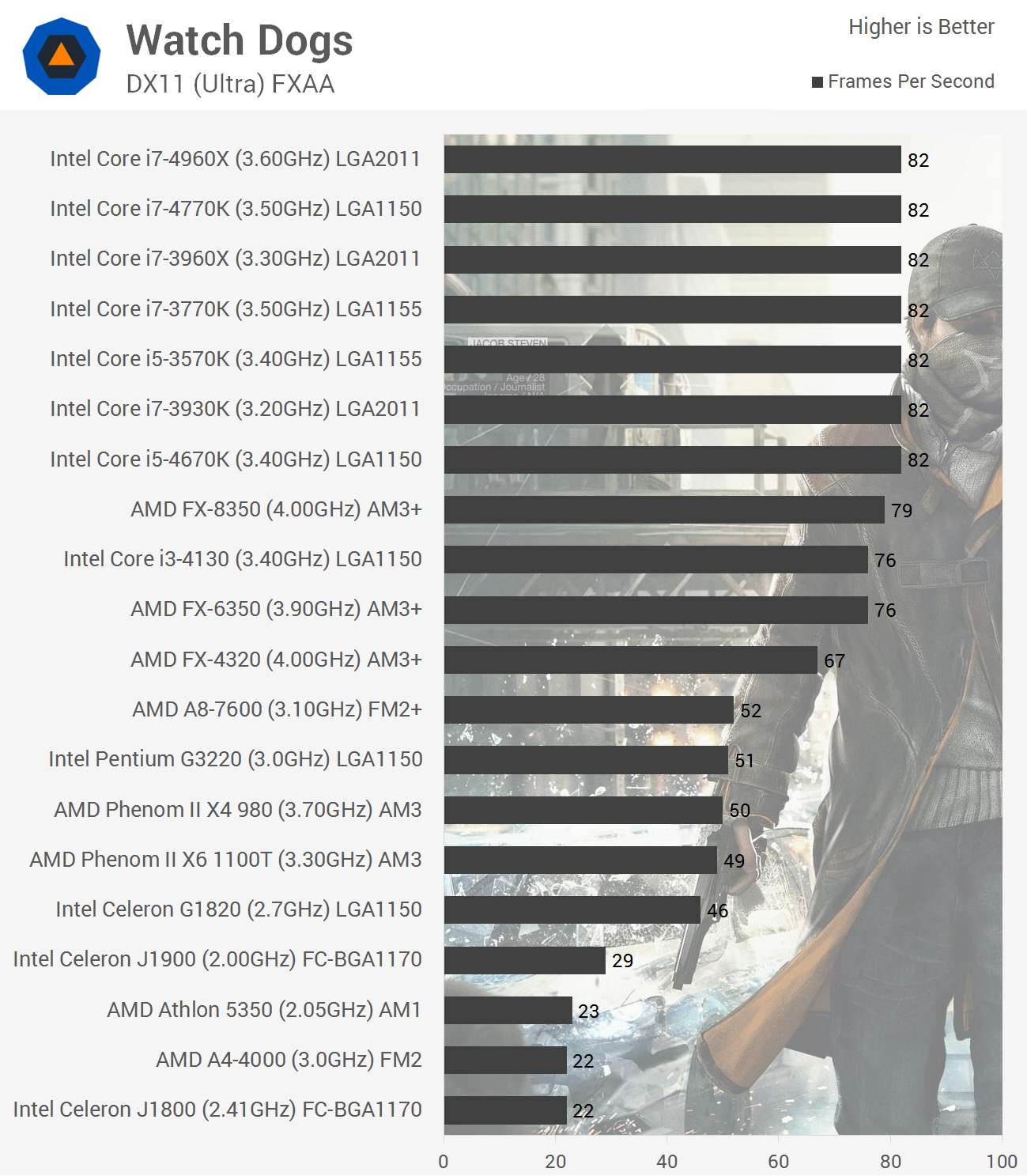

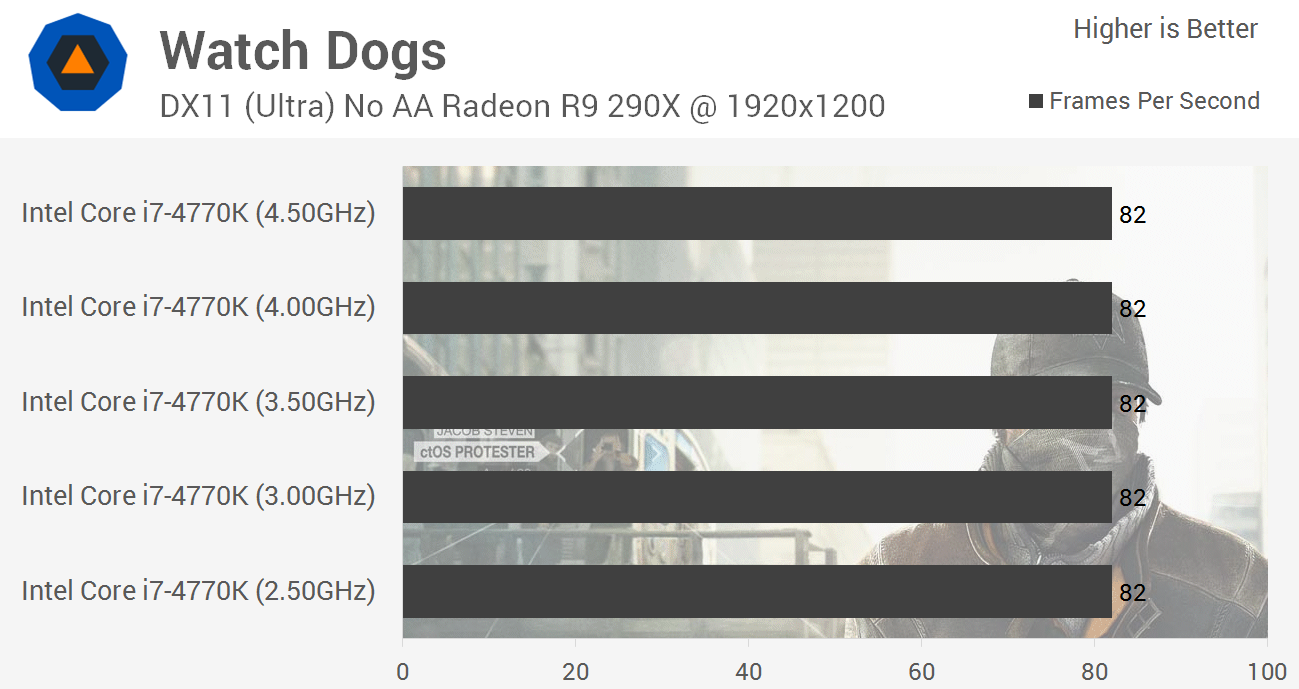

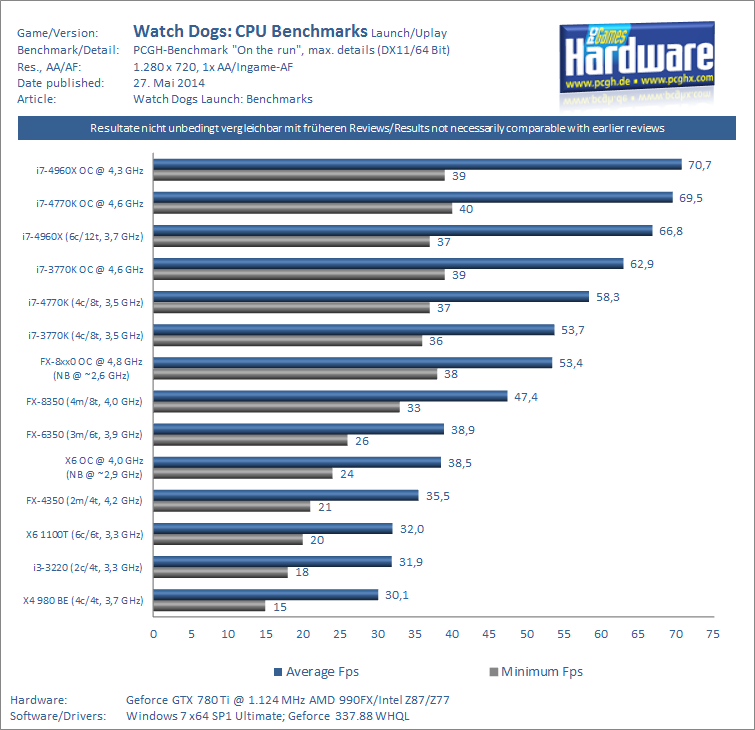

1. Well, your CPU is great. From a gaming perspective, an i5-2500 is practically indistinguishable from a new i5-3650 in real-world use.

2. Your graphics card is clearly the weak link, but...

3. The fact that you can't run it smoothly at 720p on low settings is really concerning. Even a Radeon HD 7770 / R7 250X could handle 1080p at low detail in our tests, and your 6850 should be at least on par with that. Something seems off.

I'll be surprised if the RAM solves your problem, but please let us know if it does. I would have prescribed a better GPU, but I never ran the game with less than 8GB of system RAM, so the extra memory might do it. If not, I'd say something is wrong with your system, and I'd try swapping in a different graphics card (even if only temporarily, as a test) to see whether something is not quite right with your Radeon.