bit_user :

DDWill :

I don't remember ever saying that NVLink or SLI is to communicate with the CPU??

The latest IBM POWER CPUs actually do support that. But that just goes to show what a strategic feature it is.

DDWill :

there is no sign of an integrated PCIe 4/5 interface in any CPU specs coming out in the next year.

As I mentioned, POWER CPUs support PCIe 4.0 today. For some months, in fact.

According to this, EPYC 7 nm will be sampling with PCIe 4.0 support, by the end of the year:

https://wccftech.com/amd-zen-2-design-complete-7nm-epyc-2018/

DDWill :

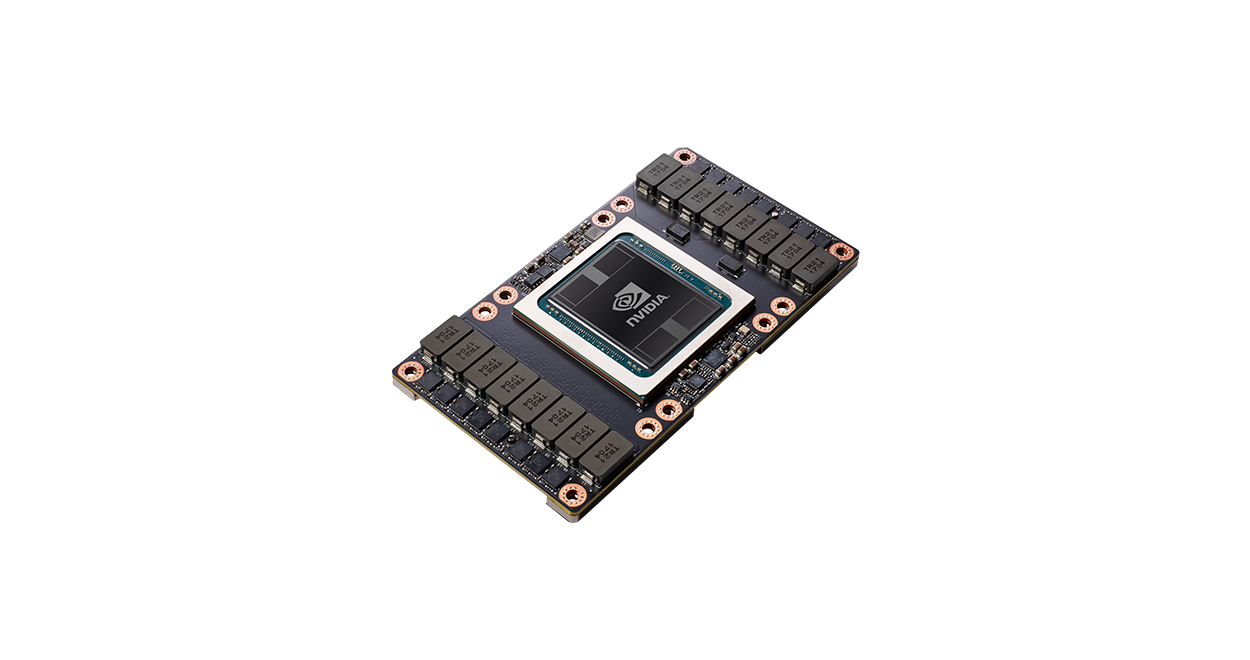

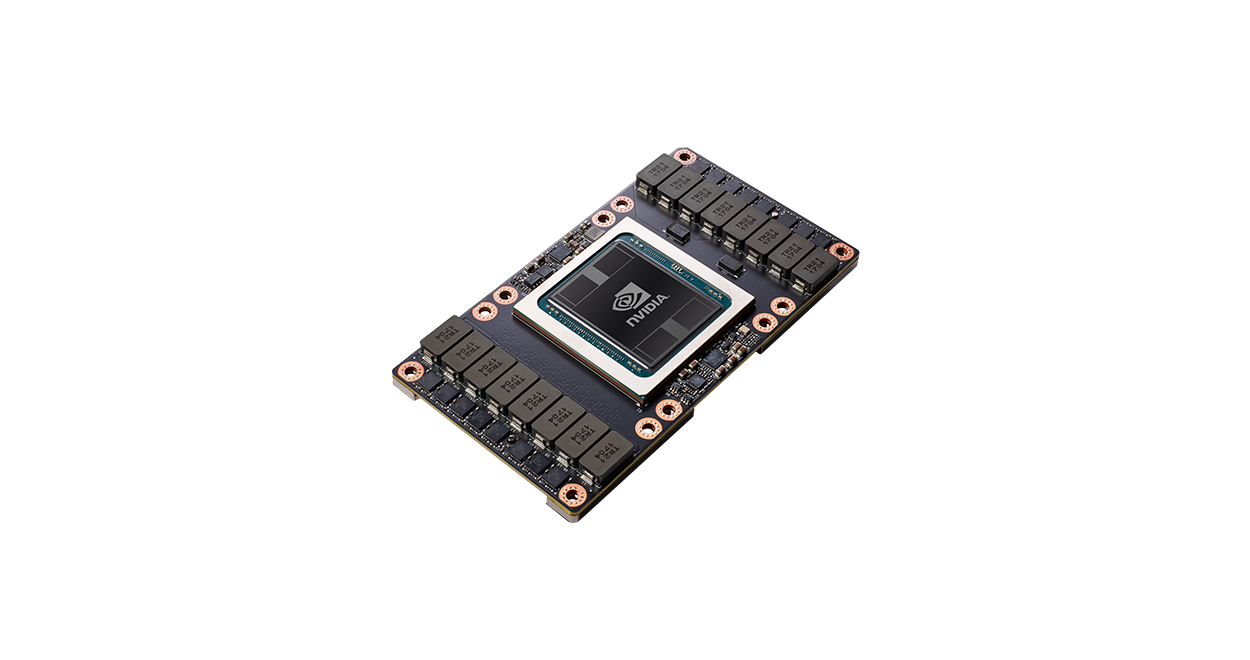

my bandwidth claims were regarding NVSwitch, not NVLink, which currently allows 16 GPUs in the DGX-2 to speak to each other simultaneously, not just share memory, and the bandwidth claims are astonishing!

It takes a bank of NVSwitches to accomplish that, burning a substantial amount of power in the process. DGX-2 only makes sense for cases where people need fast communication between > 8 GPUs. Otherwise, they're better off buying two DGX-1's.

NVSwitch is not fundamentally different from PCIe switches, BTW.

DDWill :

Obviously Intel are in on the NVSwitch design; the new DGX-2 motherboard has a pair of Xeon Platinums on it, so Intel are already catering for NVSwitch designs,

No, because the NVSwitch is only used for GPU <-> GPU communication. It has nothing to do with the CPUs. Just because it sits on the same motherboard doesn't mean Intel has to know anything about it.

DDWill :

still not readying their up-and-coming Xeons, i9s, i7s, i5s or i3s, due later this year/next year, with PCIe 4

How do you know that?

DDWill :

Please watch Jensen Huang's keynote at Computex a few days back, especially on DGX-2 and NVSwitch,

No, I watched exactly one product launch (Pascal GPUs) by that windbag and that's quite enough for me. I did read all of the specs and coverage of the DGX-2 and NVSwitch, when it was announced several months ago. The important part is the details, not his hyperbolic presentations.

DDWill :

the CPU may just become nothing more than a caretaker in the future, while the GPUs chat amongst each other and do all the hard work. Yes, both are needed now, and both will be needed in the future, but currently it's looking as though GPGPU will be the dominant force and do all the talking, freeing up the CPU just to keep the house in order.

The problem with that is that the GPUs need to be fed. So, they need fast access to main memory and storage. AMD has taken the approach of equipping their EPYC with 128 lanes of PCIe, enabling not only fast GPU <-> GPU communication, but also fast GPU <-> CPU/RAM/Storage.

DDWill :

I will come back in 2 years just to say I told you so lol.

Sure, come back in 2 years.

I still don't see why AMD or Intel would adopt NVLink, instead of continuing with PCIe. Aside from all the other arguments in favor of PCIe that I've mentioned, it also has backward compatibility stretching back 15 years. Why throw all that away, do something hugely disruptive to their ecosystem, and give Nvidia the strategic high ground?

The latest IBM POWER CPUs actually do support that. But that just goes to show what a strategic feature it is.

Although IBM may be adopting this, and although I am talking about enterprise technology that reaches the consumer market once production costs come down (which always happens), I don't think the majority of users here can relate to using IBM POWER servers. They are much more interested in seeing compatibility with mainstream and enthusiast CPUs and, for some (3D artists etc.), Xeon / Threadripper / EPYC CPUs.

As I mentioned POWER CPUs support PCIe 4.0, today. For some months, in fact.

According to this, EPYC 7 nm will be sampling with PCIe 4.0 support, by the end of the year:

Same answer as above... plus "sampling" is just another way of saying it is in the testing stages, and not actually ready for launch...

It takes a bank of NVSwitches to accomplish that, burning a substantial amount of power in the process. DGX-2 only makes sense for cases where people need fast communication between > 8 GPUs. Otherwise, they're better off buying two DGX-1's.

If you look at benchmarks like the Vray benchmark, you can see at the top of the leaderboard people using 8x 1080 Tis on the same motherboard and CPU as someone using just 4x 1080 Tis, yet getting worse results and throwing away a huge amount of cash because they didn't account for the huge amount of bottlenecking.

NVSwitch solves this, because the NVSwitch fabric in the DGX-2 basically turns 16 GPUs into one huge GPU with 0.5 TB of GPU RAM and over 80,000 CUDA cores, all communicating with low latency as one GPU. For most tasks, especially something like production rendering, the majority of scenes won't even need to access system RAM once the scene is transferred to the GPUs. The GPUs will do all the work while the CPU(s) just manage the system and storage, which was the point of NVLink/NVSwitch in the first place: to take away most of the involvement of the CPU, speeding up the process and cutting out the middle man.

Of course the majority cannot afford a DGX-2, and would need to sell off a few houses to get one, but if I won one in a lottery, I certainly wouldn't be grumbling about it, although I might when I see the electric bill...

A scaled-down version, once it reaches consumers, would probably connect 4 to 6 GPUs at most, maybe up to 8 on server boards, where it will have definite advantages over existing PCIe 3. The main focus of NVLink/NVSwitch is that it takes away the need to access the CPU as regularly. With the huge onboard memory coming with pro Volta cards now, which will be seen in consumer cards eventually, most people, even 3D artists, won't need access to system RAM once everything's loaded to the GPUs for final production renders. Even the new Quadro GV100 uses a pair of NVLink 2 connectors, doubling the bandwidth and creating a 64 GB, 10,240 CUDA core monster, which would definitely satisfy my need for 3D production work, but ouch, yes, very pricey.
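To put rough numbers on the pooled totals quoted above, here is a minimal Python sketch. The per-GPU figures (32 GB and 5,120 CUDA cores for a V100-class card) are assumptions taken from public spec sheets, not from this thread, and the `pooled` helper is purely illustrative.

```python
# Per-GPU figures are assumptions from public V100 / Quadro GV100 spec sheets.
V100_MEMORY_GB = 32
V100_CUDA_CORES = 5120


def pooled(gpu_count, memory_gb=V100_MEMORY_GB, cuda_cores=V100_CUDA_CORES):
    """Sum memory and CUDA cores across identical GPUs treated as one pool."""
    return {
        "memory_gb": gpu_count * memory_gb,
        "cuda_cores": gpu_count * cuda_cores,
    }


dgx2 = pooled(16)        # 16 GPUs behind the NVSwitch fabric
gv100_pair = pooled(2)   # two Quadro GV100 cards bridged with NVLink

print(f"DGX-2:      {dgx2['memory_gb']} GB, {dgx2['cuda_cores']:,} CUDA cores")
print(f"GV100 pair: {gv100_pair['memory_gb']} GB, {gv100_pair['cuda_cores']:,} CUDA cores")
```

Under those assumptions the totals come to 512 GB and 81,920 cores for 16 GPUs, and 64 GB and 10,240 cores for the pair, which lines up with the figures above. Whether software actually sees that pool as "one GPU" depends on the application and driver support, which this arithmetic says nothing about.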

No, because the NVSwitch is only used for GPU <-> GPU communication. It has nothing to do with the CPUs. Just because it sits on the same motherboard doesn't mean Intel has to know anything about it.

Are you seriously saying that Nvidia and Intel didn't work together on this, and that Nvidia didn't need any kind of permissions or guidance in using Intel's proprietary technology? Of course they did. Nvidia have to work closely with their partners all the time, whether that's software, drivers or hardware integration.

Because I regularly read the latest information on upcoming CPUs, mainly for the enthusiast and pro markets, as my main use is production rendering for industrial design and arch viz, and I like to evaluate the best time to upgrade to a new system: performance over the last generation, whether it's worth the cost of a new build, or whether to wait. Whitepapers are regularly available even for products that have not yet launched, a short time before release, and tech sites regularly publish specs showing the capabilities of the next CPU refresh. The next batch of i9s and Xeons still only support PCIe 3.

The problem with that is that the GPUs need to be fed. So, they need fast access to main memory and storage. AMD has taken the approach of equipping their EPYC with 128 lanes of PCIe, enabling not only fast GPU <-> GPU communication, but also fast GPU <-> CPU/RAM/Storage.

I think you are presuming, from your AMD-sided arguments so far, that I am some kind of Nvidia fanboy, but I consider these red vs green arguments a little immature. My next build may be Xeon or i9, or it may be Threadripper / EPYC. It all depends on what has the best performance for my budget at the time, and on the software I use, so unfortunately on the GPU side that currently means Nvidia's CUDA technology for VrayRT / Iray rendering. The motherboard is still undecided, as I probably won't build a new system until next summer, so there is plenty of time to evaluate. I may upgrade my GPUs in the next few months, though, and due to the industry I work in, would prefer Volta with RTX compatibility.

I am not a huge fan of Nvidia. I think their enthusiast and pro card prices are now seriously out of control, but they do dominate the industry I work in, so unfortunately I have to tolerate the very bitter taste in my mouth every time I have to upgrade my GPUs. However, my aging 4x Titans can now be replaced by just one 250 W card for GPU production rendering, the Titan V, so for me that's a tempting investment even at that price point. Maybe two, to double my speeds. With more than two, I would be running into the bottleneck problem on my aging system, since two Titan Vs come close to maxing out the aging (launched in November 2010) PCIe 3 bandwidth.

You say the PCIe designers haven't been dragging their heels, but it was obvious that both GPU and storage needs were nearing the limits of PCIe 3, yet they are still not ready. They have taken so long that PCIe 4 probably won't see the light of day, since PCIe 5 has already superseded it, and neither has made it onto a single consumer motherboard or consumer CPU integration list, nearly 8 years after the launch of PCIe 3. So it's no wonder Nvidia took the initiative and designed their own interface, ready and in use now, which I believe will make its way into the consumer markets eventually...
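For a rough sense of the bandwidth gap being argued about here, the sketch below computes theoretical per-direction x16 throughput for each PCIe generation from its transfer rate and 128b/130b encoding, and sets it next to NVLink 2.0. The transfer rates (8, 16 and 32 GT/s) and the NVLink figures (25 GB/s per direction per link, 6 links on a V100) are assumptions taken from published specifications rather than from this thread, and the function names are illustrative only.

```python
# Transfer rates in GT/s per lane (published PCIe figures, not from the thread).
PCIE_GT_PER_S = {"PCIe 3.0": 8, "PCIe 4.0": 16, "PCIe 5.0": 32}


def pcie_gb_per_s(gt_per_s, lanes=16):
    """Theoretical one-direction bandwidth in GB/s with 128b/130b encoding."""
    payload_gbit = gt_per_s * 128 / 130  # usable Gbit/s per lane after encoding
    return payload_gbit / 8 * lanes      # bits -> bytes, times lane count


def nvlink2_gb_per_s(links=6, per_link_gb_per_s=25):
    """Theoretical one-direction NVLink 2.0 bandwidth (assumed V100 figures)."""
    return links * per_link_gb_per_s


for name, rate in PCIE_GT_PER_S.items():
    print(f"{name} x16: {pcie_gb_per_s(rate):.1f} GB/s per direction")
print(f"NVLink 2.0, 6 links: {nvlink2_gb_per_s()} GB/s per direction")
```

These are link-level ceilings: roughly 15.8 GB/s for a PCIe 3.0 x16 slot, doubling with each generation, against 150 GB/s for six NVLink 2.0 links. Real transfers come in lower once protocol overhead is counted, and cards sharing lanes through a switch or running at x8 get proportionally less.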

As I said above, with low-latency sharing of both processing and memory between multiple GPUs, creating one huge pool of VRAM, most data models will not even need system RAM once all data is moved to the GPUs for final computation. One of the main reasons Threadripper and EPYC are still on my radar is the 64/128 lanes, but doing everything on board the GPUs speeds things up enormously, so a pair of NVLinks for 2 cards, or NVSwitch tech for multiple cards, makes this a reality for the first time, thanks to the cores and huge VRAM on board now.

Ask any CG artist what the most annoying part of a project is, and it's usually waiting to see the end results. With everything on board the GPUs, that's now a lot faster, almost instantaneous, compared to CPUs, which are now a lot better than in the past but nowhere near the speed of GPUs for light tracing. Software like Arnold is impressive but still nowhere near the speed of having multiple GPUs. Yes, GPUs generally burn more watts, but for a lot less time per frame, making them more efficient.

I still don't see why AMD or Intel would adopt NVLink, instead of continuing with PCIe. Aside from all the other arguments in favor of PCIe that I've mentioned, it also has backward compatibility stretching back 15 years. Why throw all that away, do something hugely disruptive to their ecosystem, and give Nvidia the strategic high ground?

It's called progress. I think backward compatibility, although a good thing, can also be a bad thing in that it holds back, again, progress, and makes each new launch of software, firmware and hardware a nightmare to program for and to iron out all the bugs so that everyone's happy. On my aging system I am glad for backward compatibility, but the side of me that wants to see progress happening a lot faster, to speed up my business, thinks some of the old needs to be thrown away to make way for new technologies that could be implemented now.

Again I ask, why is it so hard to believe that one day soon an onboard GPU will replace PCIe with Nvidia's NV fabric, superseding PCIe? Or that the next gen of GTX/RTX cards may use NVLink 2: add two, double the bandwidth and create a monster GPU. I think most enthusiasts would love to see this, that is, if the cost of NVLink 2 comes down a great deal. But I think anyone hoping for prices to get lower will be sorely disappointed, as R&D on these multibillion-transistor chips is only going to escalate.

I find it ridiculous in some ways. Yes, they throw in billions of dollars to design and test these things, which needs to be recouped, but at the end of the day you are left with a piece of sand, metal and plastic with a scrap value of $5 but the retail value of a new small car, which has a much higher scrap value??

Anyway, time will tell..