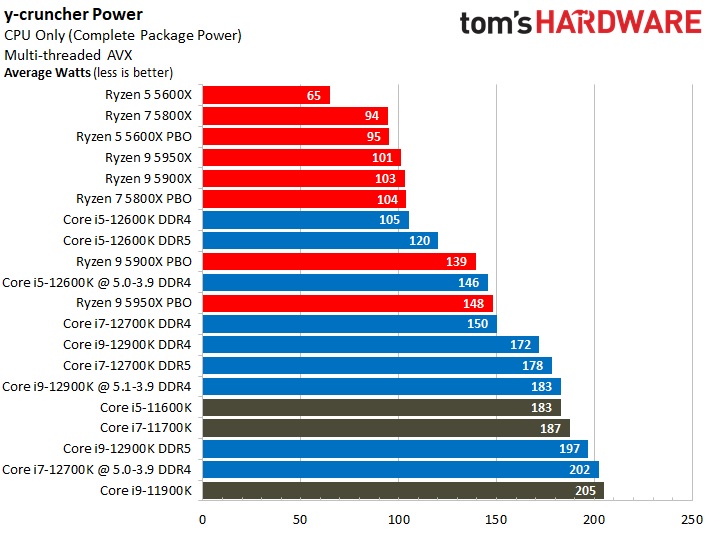

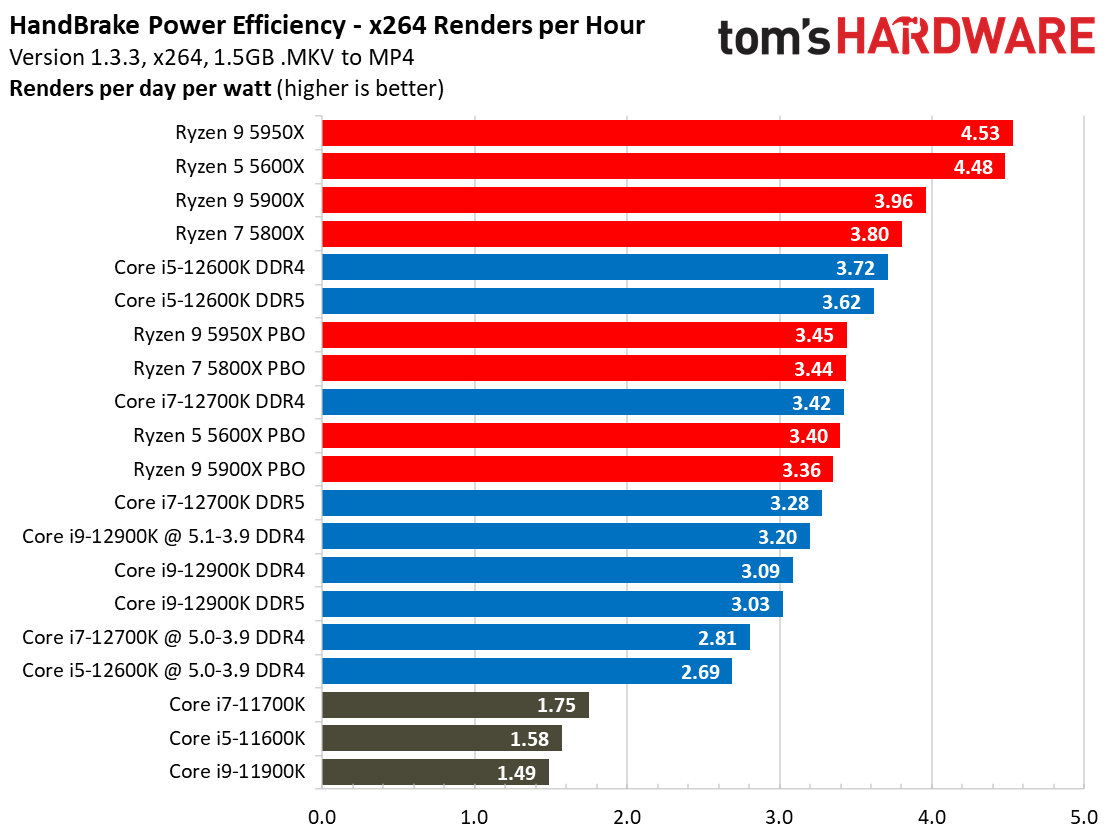

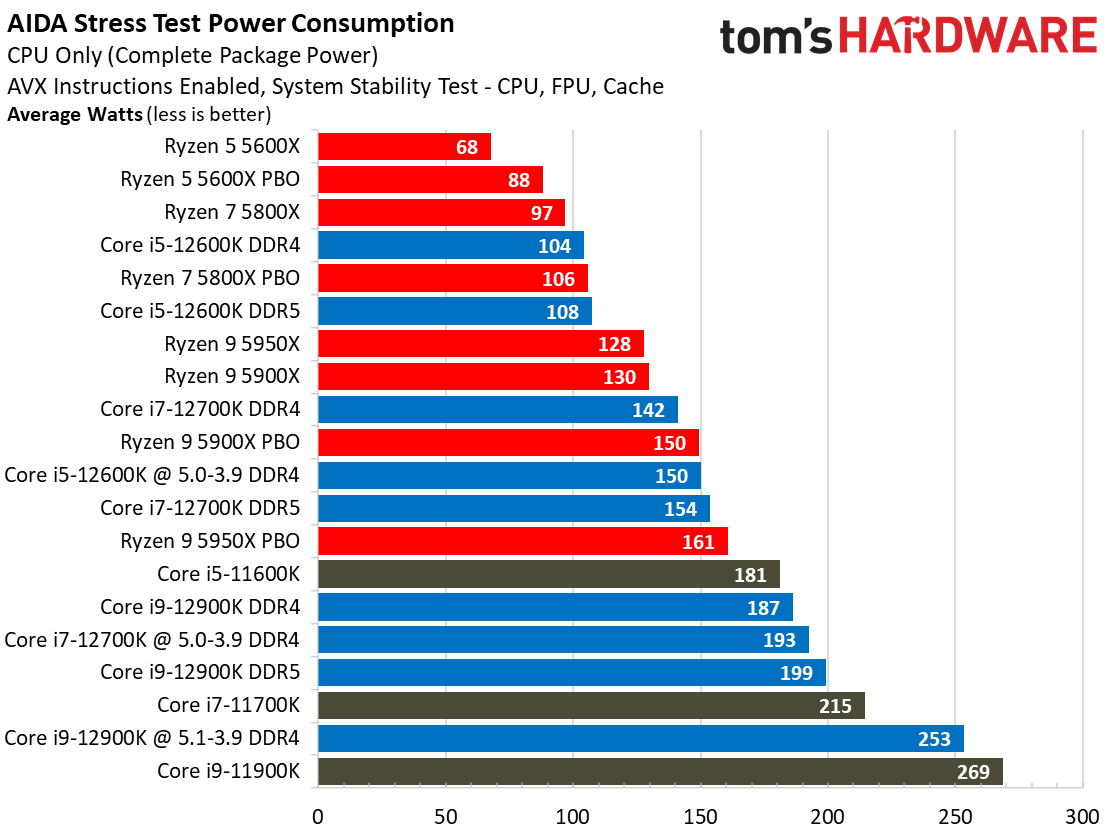

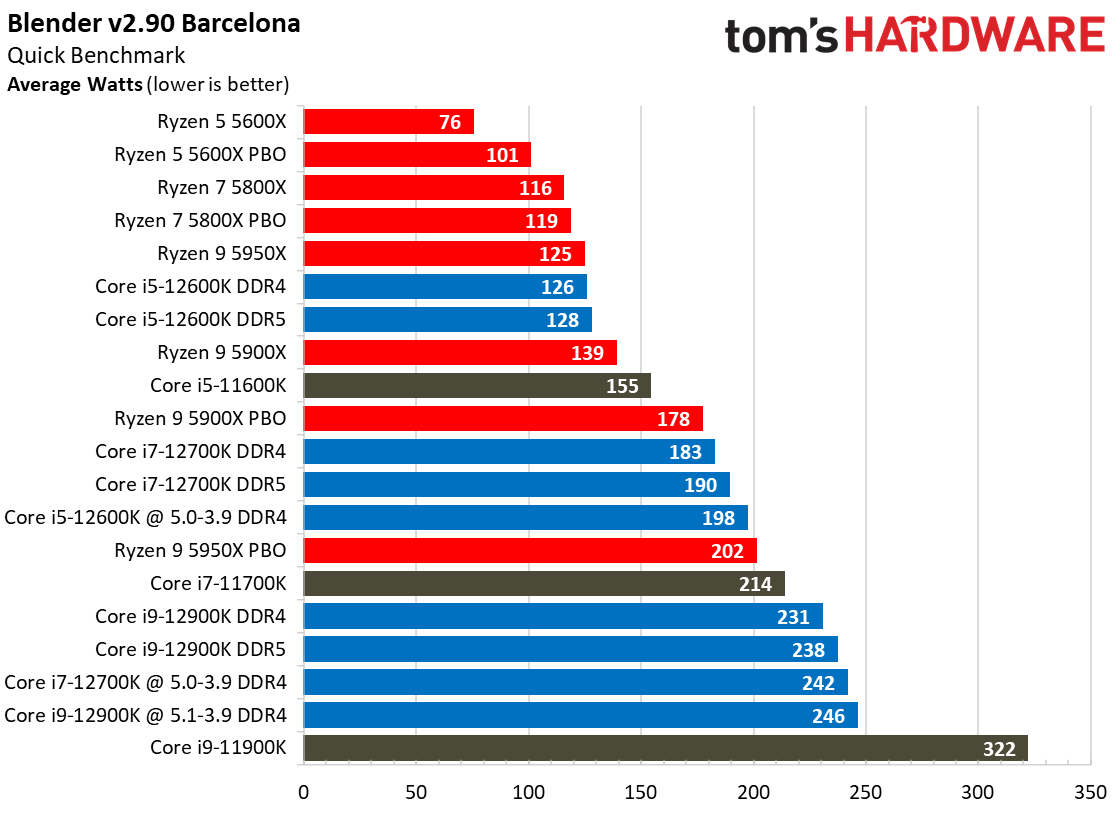

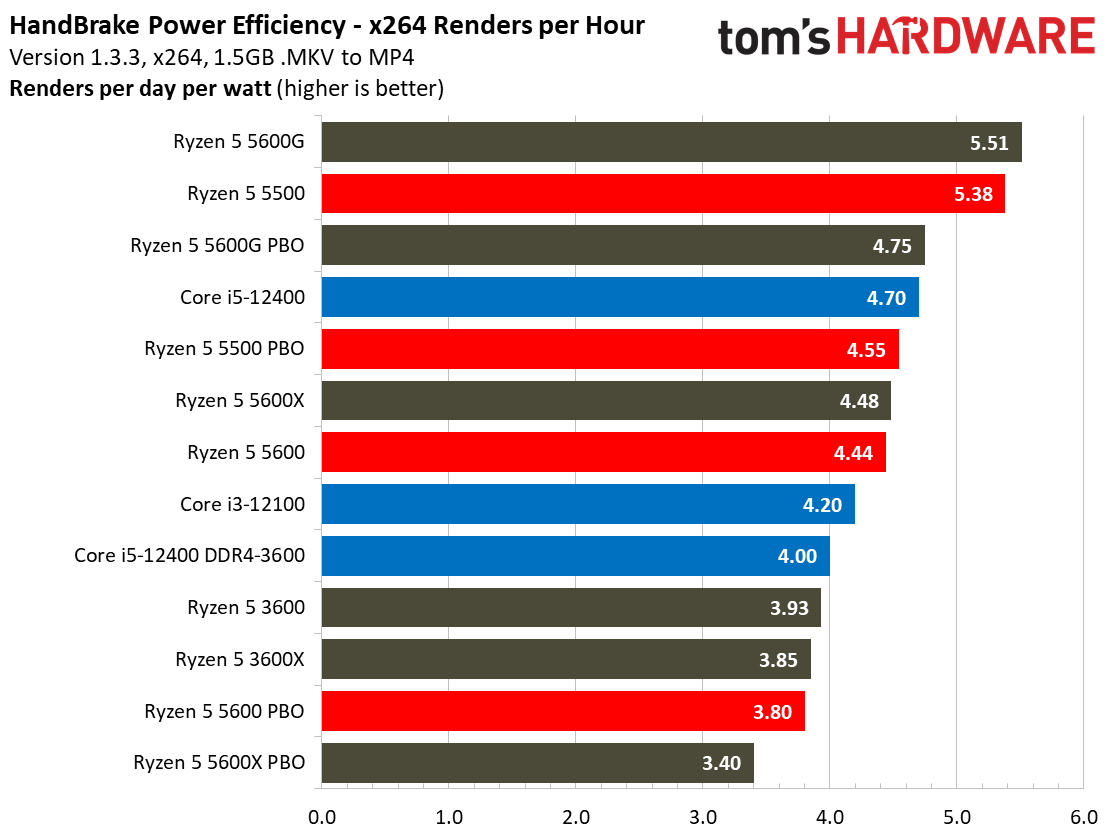

They only included an efficiency chart for the Handbrake results, but it's possible to determine the others based on each processor's performance in those tests.

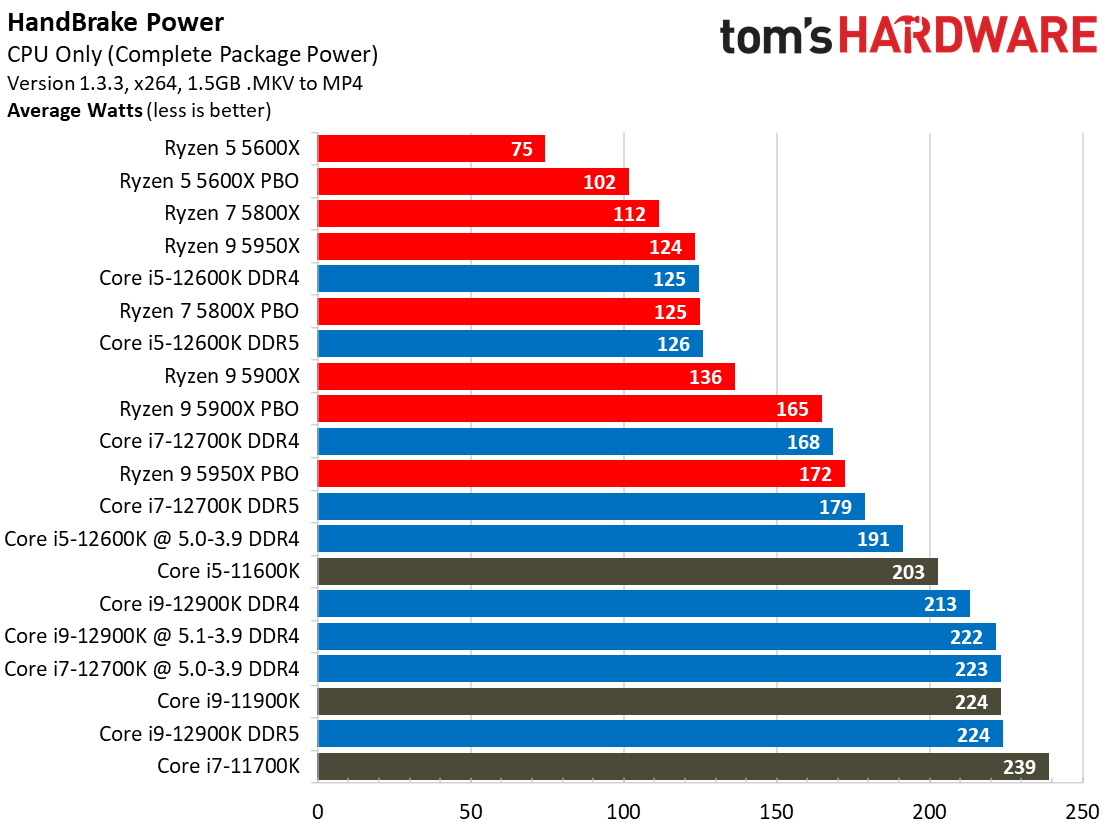

But let's take another look at that chart. If you eliminate the PBO entries, it clearly shows the 5800X is the least efficient Ryzen 5000 model they reviewed, and the i5-12600K is the most efficient Alder Lake CPU! You're comparing an outlier on the low end of Ryzen 5k (efficiency-wise) to an outlier on the high end of Alder Lake. Thus, your chosen matchup is not at all characteristic of how the respective CPU families compare.

it's not really cherry-picking when it's arguably the 12th-gen part with the most mass-market appeal among those represented in the charts you posted.

It is absolutely cherry-picking when you don't pick samples representative of their respective product lines. Your original statement was not limited to those two models, but applied across the entire product stacks of both brands. The most accurate way to do it would be to use geomean efficiency of Alder Lake models vs. Ryzen 5k.

it makes sense to compare parts with as similar performance as possible.

Depends on the goal. If the objective is to inform buyers deciding between CPUs at that specific price point, then yes, it makes sense to compare them. However, you're confusing the best methodology for one purpose (and that's the main purpose of reviews on sites like these) with the best methodology for the purpose of comparing the entire product lines.

The way to test how good your generalization is would be to look at how well it applies to any other matchup in the range.

| Matchup | Relative Efficiency (Handbrake) |
|---|---|
| 5900X vs i7-12700K (DDR4) | 15.8% |
| 5900X vs i7-12700K (DDR5) | 20.7% |
| 5950X vs i9-12900K (DDR4) | 46.6% |
| 5950X vs i9-12900K (DDR5) | 49.5% |
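To put a single number on those matchups, here's a rough sketch of the geomean approach mentioned earlier. It assumes each percentage is the Ryzen part's efficiency advantage over the Intel part in that row, and it treats the DDR4 and DDR5 runs as separate samples, which is debatable. The function name is just something I made up for illustration.

```python
import math

# Relative efficiency figures from the table above (Handbrake), in percent.
# Assumption: each value is the Ryzen part's advantage over the Intel part.
matchups = {
    "5900X vs i7-12700K (DDR4)": 15.8,
    "5900X vs i7-12700K (DDR5)": 20.7,
    "5950X vs i9-12900K (DDR4)": 46.6,
    "5950X vs i9-12900K (DDR5)": 49.5,
}


def geomean_advantage(percentages):
    """Geometric mean of percentage advantages, returned as a percentage."""
    # Convert each percentage to a ratio (15.8% -> 1.158) before averaging.
    ratios = [1 + p / 100 for p in percentages]
    # Average in log space, which is equivalent to the n-th root of the product.
    log_mean = sum(math.log(r) for r in ratios) / len(ratios)
    return (math.exp(log_mean) - 1) * 100


overall = geomean_advantage(matchups.values())
print(f"Geomean advantage across matchups: {overall:.1f}%")
```

With those four rows it comes out to roughly 32%. A proper version would use per-model efficiency figures for the whole stack on each side rather than hand-picked pairs, but it shows why the geomean is the right kind of average for ratios.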

Though seeing as the 12600K is the faster of the two, it should naturally be at a disadvantage in terms of efficiency.

That isn't a given, unless you're admitting the only way the i5-12600K pulled a win on performance is by Intel juicing its power limits. It's only a given that increasing performance comes at the expense of efficiency within a single architecture!

Consider this: Zen 3 is more efficient than the Zen 2 XT-series, even though the two CPUs were made on the exact same process node. And guess which one is faster?

If one were to reduce the clock rates a bit to provide more comparable performance to the 5800X, the efficiency would likely improve.

Yes, because guess what? It's the highest W/core across the entire Zen 3 range, both rated & actual! Worse, the 5800X has only 32 MB of L3, while the next higher models are both 64 MB. So, that puts it at a performance disadvantage. Comparing with the 5600X, the 5800X has less L3 per core.

| Model | W/core (rated) | W/core (Y-cruncher) | W/core (AIDA) | W/core (Handbrake) | W/core (Blender) |
|---|---|---|---|---|---|
| 5600X | 10.8 | 10.8 | 11.3 | 12.5 | 12.7 |
| 5800X | 13.1 | 11.8 | 12.1 | 14.0 | 14.5 |
| 5900X | 8.8 | 8.6 | 10.8 | 11.3 | 11.6 |
| 5950X | 6.6 | 6.3 | 8.0 | 7.8 | 7.8 |

The only conclusion to draw from this is: don't get a 5800X if you care about power-efficiency!
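For anyone who wants to check the "rated" column and the L3-per-core point, here's a minimal sketch. The TDP, core count, and L3 figures are AMD's published specs as I remember them, not numbers from the review, so treat them as my assumption. The helper name is made up.

```python
# Published specs (assumed, not taken from the review):
# (TDP in watts, core count, L3 cache in MB).
specs = {
    "5600X": (65, 6, 32),
    "5800X": (105, 8, 32),
    "5900X": (105, 12, 64),
    "5950X": (105, 16, 64),
}


def per_core(tdp_w, cores, l3_mb):
    """Return (rated W per core, MB of L3 per core), each rounded to 0.1."""
    return round(tdp_w / cores, 1), round(l3_mb / cores, 1)


table = {model: per_core(*spec) for model, spec in specs.items()}

for model, (watts, l3) in table.items():
    print(f"{model}: {watts} W/core rated, {l3} MB L3/core")
```

The 5800X ends up with the highest rated W/core of the four, and less L3 per core than the 5600X, which is the point being made above.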

I suppose I could have compared the 12400 against the 5600X to keep the core configurations more equivalent

But the number of cores & threads is the only thing equivalent about them! On most of the multi-threaded benches, the i5-12400 gets absolutely stomped by the 5600X. The two CPUs are in different leagues, as indicated by their price disparities.

I guess another point is that the i5-12400 has a base power of 65 W, which makes it seem equivalent, but it has a turbo power of 117 W, which is 54% more than we actually saw from a 5600X. Unfortunately, the i5-12400 review article on this site doesn't seem to have any power measurements.
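In case the 54% looks like it came out of nowhere, here's the arithmetic as a quick sketch. It assumes the 5600X figure is the Blender entry from the W/core table (12.7 W/core), which is the highest of the measured columns. The variable names are mine.

```python
I5_12400_TURBO_W = 117       # turbo power quoted above
R5_5600X_CORES = 6
R5_5600X_W_PER_CORE = 12.7   # Blender column of the W/core table (assumed source)

# Package power implied by the per-core figure.
measured_5600x_w = R5_5600X_W_PER_CORE * R5_5600X_CORES

# How much higher the 12400's turbo limit is than that measurement.
excess_pct = (I5_12400_TURBO_W / measured_5600x_w - 1) * 100

print(f"5600X measured: ~{measured_5600x_w:.0f} W")
print(f"i5-12400 turbo limit is {excess_pct:.0f}% higher")
```

Note that this compares a power limit against a measurement, so it only bounds what the i5-12400 could draw. It doesn't say what it actually draws in any given test.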

Interesting, that.

the 7000 series might improve efficiency further.

It's on a whole generation newer fab node, so we're in trouble if it's not.

Or maybe not, if pushing clock rates higher happens to have more of an adverse effect on efficiency than the architectural/process gains.

Could happen, but I sure hope not. At least, not at stock. I don't really care what happens with PBO, because most people don't use it.

Though that goes for Raptor Lake too.

Not really. Raptor Lake is made on the same node and has the same microarchitecture for both sets of cores. They're already rumored to be increasing power limits for it. Since I don't believe in miracles, I don't believe it'll be any more efficient than Alder Lake.