Eh, it doesn't really matter whether it matches a Threadripper 3000 or not, since Apple will never provide that chip to any third-party companies to work with, and the 3000 series is old hat at this point anyway. It's just a dude saying "look at how big my d***k is" while pointing at his crotch in good lighting. Honestly, these chips don't provide a useful option to anyone who isn't already on Apple-based infrastructure, unless you want to change your entire infrastructure to be beholden to the fruit god, a much more costly, less flexible, and generally less appealing proposition.

News First Apple M1 Ultra Benchmark Posted, Nearly Matches Threadripper 3990X

Page 2 - Seeking answers? Join the Tom's Hardware community: where nearly two million members share solutions and discuss the latest tech.


AgentLozen

Distinguished

Something about Geekbench 5 scoring is really strange. The Core i9 12900K soundly beats the M1 Ultra in single-threaded performance, but it loses by a wide margin in the multithreaded benchmark. The M1 Ultra only has a few more cores than the 12900K, so it's strange that its multicore performance is so strong.

Additionally, the Threadripper 3990X has way more multithreaded assets than the M1 Ultra or the 12900K, but it just barely pulls ahead. These numbers don't add up.


Geekbench is not a great benchmark and most likely doesn't scale to 64 cores.


hotaru.hino

Glorious

Yeah, there are too many variables that make the tests dubious at best. My questions are:

- Did the Intel computer run Windows or macOS?

- What cooler did Apple use on the Intel PC?

- How fair is Geekbench? Does it give every CPU the same workload, or does it use different code paths to run an "optimized" workload? (Plus, Linus Torvalds has criticized Geekbench for potentially dubious scoring methods.)


None of this is strange, it's an expected outcome of M1's better power numbers. But it's going to take a bit of explaining to connect the dots.

Core i9 single-threaded scores require a lot of power. I've seen measured figures like 29W package power when running a single-threaded floating-point benchmark.

That much power per core is not sustainable with all the cores on. This is why Intel always has such a massive difference between base clocks (AKA the minimum speed they guarantee with all cores running) and max turbo clocks (AKA single-core speed). The i9-12900K has a base P-core frequency of 3.20 GHz and a max turbo of 5.1 GHz (or 5.2 GHz with Turbo Boost Max 3.0). 3.2 / 5.2 ≈ 0.62, or 62%.
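To check the arithmetic, here is a minimal Python sketch. `clock_ratio` is a hypothetical helper name, and it assumes base clock stands in for the all-core clock, which makes this a worst case: a well-cooled chip usually sustains more than base.

```python
def clock_ratio(all_core_ghz: float, single_core_ghz: float) -> float:
    """Fraction of peak single-core frequency available with all cores loaded."""
    return all_core_ghz / single_core_ghz

# i9-12900K P cores: 3.20 GHz base vs. 5.2 GHz Turbo Boost Max 3.0 peak.
ratio_12900k = clock_ratio(3.20, 5.20)
print(f"i9-12900K: {ratio_12900k:.0%}")  # i9-12900K: 62%
```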

AMD does similar stuff. With Intel and AMD CPUs, there's a huge frequency penalty for all-core loads. An 8-core x86 CPU never offers anything particularly close to 8x the throughput of a single core running alone.

Apple M1 Pro and M1 Max have a single-core frequency of 3.228 GHz and an all-core frequency of 3.036 GHz. Unlike Intel and AMD, these aren't really influenced by instruction mix: it's simply 3.228 GHz for ST and 3.036 GHz if you fill up a cluster of 4 cores with 4 threads. (There's an intermediate frequency for 2 active cores in a cluster which I've forgotten.)

I just realized the cluster thing might need a brief explainer. Apple groups performance cores into clusters of four, and each cluster is clocked independently of the others. If you run a 5-thread load on an M1 Max (two clusters of four cores each), it will run four of the threads in one cluster at 3.036 GHz and one by itself in a second cluster at 3.228 GHz.
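Here is a toy model of the cluster behavior just described. It only encodes the placement and the two clock speeds given above; the 2- and 3-active-core clocks weren't given, so they come back as `None`. It does not model macOS's real scheduler, and `place_threads` / `cluster_clocks` are hypothetical names.

```python
from typing import Optional

CLUSTER_SIZE = 4  # P cores per cluster on M1 Pro/Max, as described above

# Per-cluster P-core clock (GHz) keyed by active cores in that cluster.
# Only the 1-core and 4-core values are known; others are left out on purpose.
KNOWN_CLOCKS_GHZ = {1: 3.228, 4: 3.036}

def place_threads(threads: int, clusters: int) -> list[int]:
    """Fill clusters one at a time; threads beyond total capacity are ignored."""
    active = []
    for _ in range(clusters):
        n = min(CLUSTER_SIZE, threads)
        active.append(n)
        threads -= n
    return active

def cluster_clocks(threads: int, clusters: int = 2) -> list[tuple[int, Optional[float]]]:
    """(active cores, clock in GHz or None if unknown) for each busy cluster."""
    return [(n, KNOWN_CLOCKS_GHZ.get(n)) for n in place_threads(threads, clusters) if n > 0]

print(cluster_clocks(5))  # [(4, 3.036), (1, 3.228)]
```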

3.036 / 3.228 ≈ 0.94, or 94%. That means an M1 Ultra with 16 performance cores delivers about 15x the MT throughput of a single performance core in the same chip (16 x 0.94 ≈ 15). x86 chips are simply incapable of a ratio like that.
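The same reasoning as a naive estimate: cores times the all-core/single-core clock ratio. This assumes throughput scales linearly with clock and ignores memory and cache contention. For the i9 it uses base clock for all-core, which is a floor rather than what the chip actually sustains, so that line is pessimistic; E cores are also left out.

```python
def mt_throughput_multiple(p_cores: int, all_core_ghz: float, single_core_ghz: float) -> float:
    """Naive estimate of MT throughput as a multiple of one core running alone.

    Assumes throughput is proportional to clock and that cores don't contend
    for shared resources, which real workloads violate to some degree.
    """
    return p_cores * (all_core_ghz / single_core_ghz)

m1_ultra = mt_throughput_multiple(16, 3.036, 3.228)
i9_pcores = mt_throughput_multiple(8, 3.20, 5.20)
print(f"M1 Ultra, 16 P cores: {m1_ultra:.1f}x one core")              # 15.0x
print(f"i9-12900K, 8 P cores at base: {i9_pcores:.1f}x one core")     # 4.9x
```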

How is Apple able to do this? Power efficiency. Measurements put the M1 Firestorm performance core at about 5 watts at its maximum frequency running worst-case code. Remember that the i9 P cores are over 25W. This is a profound advantage.

In a lot of ways, Intel ST scores are a bit unrealistic: they evaporate as soon as a few threads are running. AMD isn't as bad, since they've never pushed ST turbo frequencies as hard as Intel, but there's still a pretty substantial difference between their ST and all-core frequencies.

I just looked up 3990X Geekbench scores and there are numerous results above 38000... The M1 isn't even close. Why would you report that the 3990X only got 25000? Is this supposed to be a paid Apple article or something?

The Geekbench database is built on public submissions. Lots of them are overclocked, and some are even faked. If you look at the scores, there actually are an awful lot of 3990X scores clustered around 25000; my guess is that scores in this range are correct for stock clocks.
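If you want to cut through that noise yourself, one minimal approach is to look at the median and the most crowded score bin rather than the top results. This assumes you've already collected a list of scores for one CPU model; `typical_score` and the 1000-point bin width are arbitrary choices for illustration.

```python
from collections import Counter
from statistics import median

def typical_score(scores: list[int], bin_width: int = 1000) -> tuple[float, int]:
    """Return (median score, start of the most populated score bin).

    The median isn't dragged upward by a minority of overclocked or faked
    uploads, and the busiest bin approximates where stock results cluster.
    """
    bins = Counter((s // bin_width) * bin_width for s in scores)
    mode_bin, _count = bins.most_common(1)[0]
    return median(scores), mode_bin
```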

No one credible uses Geekbench, at least as a multiplatform benchmark. It was created by the owner of a Mac review website, so any multiplatform comparisons may be skewed in favor of Apple.

Can you support this accusation? I don't know of any Mac review websites owned, run by, or affiliated with John Poole or his company, Primate Labs.

I can't think of any legitimate PC hardware review sites that use Geekbench as part of their test suite. It's also a synthetic workload, not directly based on real-world software, so it's not necessarily representative of real-world workloads either.

Geekbench contains no synthetic benchmarks, it's a collection of real world compute intensive tasks. Lots of its tests are packaged up open source programs, such as compression/decompression and a C compiler.

https://www.geekbench.com/doc/geekbench5-cpu-workloads.pdf

hotaru.hino

Glorious

This largely depends on the cooling capacity of the part. While I don't doubt that Apple has an efficiency advantage, the drop in frequency need not be as pronounced. For instance, my Ryzen 5600X can maintain 4.4GHz running an all-core workload. That isn't much of a penalty from the 4.6GHz it reaches in single-core workloads, and all I did was add a $50 (at the time) aftermarket air cooler.

EDIT: I would also argue that a lot of desktop parts have an aggressive V-F curve. I undervolted both my Ryzen 5600X and RTX 2070 Super with minimal, if any, performance loss and a gain in efficiency. The RTX 2070 Super never reaches the 215W it's rated for, despite performing basically the same as if it were allowed to. I've also noticed that the RTX 3060 in my laptop can perform as well as a GTX 1080 (at least in 3DMark) while hovering around 70W, or a third of the power. Compared to the desktop RTX 3060, it's about 85% as performant (in 3DMark) but consumes a little over half as much power (the desktop version is rated for 130W).

Again, I'm not discounting Apple's efficiency here, but desktop parts also aren't shipped to be as efficient as they could be, for whatever reason.
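A quick perf-per-watt check on the laptop RTX 3060 figures above, treating the desktop card's 130W rating as its actual draw (an assumption; real draw varies by card and load). `relative_perf_per_watt` is a hypothetical helper.

```python
def relative_perf_per_watt(rel_perf: float, watts: float, ref_watts: float) -> float:
    """Perf/W of a part relative to a reference part whose performance is 1.0."""
    return (rel_perf / watts) / (1.0 / ref_watts)

# Laptop RTX 3060: ~85% of desktop performance at ~70 W vs. a 130 W desktop rating.
laptop_vs_desktop = relative_perf_per_watt(0.85, 70, 130)
print(f"Laptop RTX 3060: {laptop_vs_desktop:.2f}x the perf/W of the desktop card")  # 1.58x
```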


Funny that you also made no mention of the price: $3999. That's a HELL of a lot of coin, specs be damned. And the boast of supposed 3090-level graphics performance? Guess we'll believe that when we see it actually tested in the wild, given that it sounds an awful lot like Tarzan-level chest thumping from Apple with absolutely no actual substance to back it. Then again, this is coming from the manufacturer, so that kind of thing is pretty par for the course.

Funny that you made no mention of the price of a 3990x, which appears to be at least $4K, or the price of assembled 3990x workstations with 3090 graphics cards and 64GB RAM, which are much more than that.

JamesJones44

Reputable

Is this really that impactful, or is it just a benchmark?

I have an M1 Max MBP and a 12900K for gaming. They are pretty close. I do in-memory database work and can execute a relatively expensive query (4 selects with 10 joins over 8 tables; the query is about 62 lines long) in 300 ms on the M1 Max and in 210 ms on the 12900K. When I run a load test with 50 clients, the times rise a bit: 700 ms on the M1 Max and 500 ms on the 12900K. If you look at general benchmarks of the M1 Max vs. the 12900K, they are roughly in line with those numbers (single core vs. multi core). The 12900K test runs on Alpine Linux, and the M1 Max obviously runs macOS. Both have 64 GB of RAM. I doubt my use case is high on Apple's accelerator list, since it currently isn't GPU-enabled.
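Putting those timings side by side gives the relative gap in each test. These are just the numbers from the post; the dictionary layout is an arbitrary choice.

```python
# Query latencies in milliseconds, as reported above (lower is better).
timings_ms = {
    "single query": {"M1 Max": 300, "i9-12900K": 210},
    "50-client load test": {"M1 Max": 700, "i9-12900K": 500},
}

for test, t in timings_ms.items():
    speedup = t["M1 Max"] / t["i9-12900K"]
    print(f"{test}: 12900K is {speedup:.2f}x faster")
# single query: 12900K is 1.43x faster
# 50-client load test: 12900K is 1.40x faster
```

The gap stays at roughly 1.4x under load, which fits the post's point that the results track general single-core vs. multi-core benchmarks.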

JamesJones44

Reputable


I'm not a fan of Geekbench, but the 12900K has 16 cores (8P + 8E) vs. 20 cores on the M1 Ultra (16P + 4E). That's a pretty big difference. SMT is closer to half a core than a full core, so even if you take it into account, it's still lopsided when it comes to raw specs. Some basic math: 8 x 1997 = 15976 and 16 x 1793 = 28688. You can't just add scores like that, because it doesn't account for throttling, downclocking, SMT, E cores, etc., but it gives you an idea. If anything, you could say the M1 Ultra's multi-core performance is disappointing given its single-core score.
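The naive math above, spelled out. This assumes 1997 and 1793 are the single-core scores of the 12900K and M1 Ultra respectively, and counts only P cores, exactly the kind of oversimplification the post warns about.

```python
def naive_mt_estimate(cores: int, single_core_score: int) -> int:
    """Single-core score times core count.

    Deliberately naive: ignores all-core clock drops, SMT, E cores,
    and memory contention.
    """
    return cores * single_core_score

print(naive_mt_estimate(8, 1997))   # 12900K, P cores only: 15976
print(naive_mt_estimate(16, 1793))  # M1 Ultra, P cores only: 28688
```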



The 5600X is only a 6-core CPU. These effects are always small at low core counts; they get worse the more cores you put in a single die or package. It's a scaling problem: package power can't scale to keep pace with core count.

Your 5600X has a TDP of 65W for 6 cores, or about 11W per core. IIRC AMD only needs 15W or so for max boost clocks, so 6 core parts like this don't have that bad an all-core penalty.

But when you try to put 16 cores in a single chip or package, as in the 12900K, or 64 as in the 3990x, that's when things get very real and multi-core clocks drop a ton.

Apple is subject to these scaling issues too, it's just that with their cores having such low power consumption they are still in the nice part of the scaling curve (where they don't have to cut multicore freqs in any significant way) at 16 performance cores in 1 package.
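A rough way to see the scaling problem is to compare the package power budget with what all cores would draw at max boost. This sketch uses only figures quoted in this thread (the "IIRC" 15W per core, the 65W TDP, and the stock 88W PPT for the 5600X, plus the ~5W Firestorm and 25W+ i9 P-core figures). `boost_power_coverage` is a hypothetical name, and uncore/I/O-die power is ignored.

```python
def boost_power_coverage(package_watts: float, cores: int, per_core_boost_watts: float) -> float:
    """Package power budget divided by what all cores would draw at max boost.

    >= 1.0: every core could hold max boost at once (little all-core penalty).
    <  1.0: the chip must lower all-core clocks and voltage to stay in budget.
    Ignores uncore/I/O-die power, so real headroom is somewhat smaller.
    """
    return package_watts / (cores * per_core_boost_watts)

# Ryzen 5 5600X: 6 cores at ~15 W each for max boost.
print(f"5600X, 65 W TDP: {boost_power_coverage(65, 6, 15):.0%}")  # 72%
print(f"5600X, 88 W PPT: {boost_power_coverage(88, 6, 15):.0%}")  # 98%

# All-core demand at max frequency from the per-core figures quoted earlier.
print("M1 Ultra, 16 P cores x ~5 W:", 16 * 5, "W")    # 80 W
print("i9-12900K, 8 P cores x 25+ W:", 8 * 25, "W+")  # 200 W+
```

Six cores at max boost fit almost entirely inside the stock PPT, while eight 25W+ cores already need 200W+ before the E cores are counted.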

hotaru.hino

Glorious

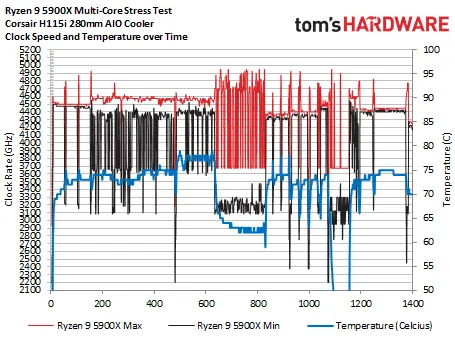

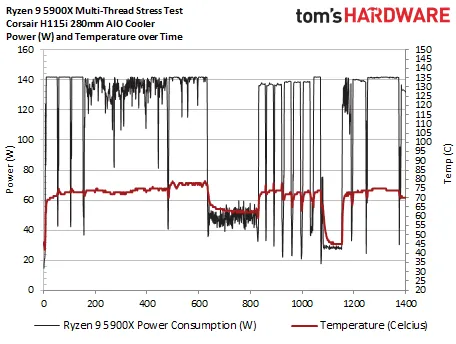

Admittedly yes, my example wasn't the greatest. So I'll grab another, the 5950X. This is from Tom's Hardware's reviewIt's only a 6-core CPU. These effects always are small at low core counts; they get worse the more cores you put in a single die or package. It's a scaling problem - package power can't scale to keep pace with core count.

Your 5600X has a TDP of 65W for 6 cores, or about 11W per core. IIRC AMD only needs 15W or so for max boost clocks, so 6 core parts like this don't have that bad an all-core penalty.

But when you try to put 16 cores in a single chip or package, as in the 12900K, or 64 as in the 3990x, that's when things get very real and multi-core clocks drop a ton.

Apple is subject to these scaling issues too, it's just that with their cores having such low power consumption they are still in the nice part of the scaling curve (where they don't have to cut multicore freqs in any significant way) at 16 performance cores in 1 package.

For the sake of argument, the processor still maintains about 4.6GHz with a multi-core workload. AMD advertises the maximum boost speed to be 4.9GHz.

Also keep in mind that Ryzen CPUs since Zen 2 have an I/O die, so you can't use package power consumption to derive per-core power consumption. I'd have to wait a while to verify, but in my setup the cores rarely draw more than 6W each, even at peak. They do draw 10-11W each at stock, but the stock PPT value is 88W.

But again, at the end of the day, desktop parts tend to have an aggressive V-F curve.


One thing to be aware of is that Alder Lake Performance/Efficiency means something a lot different than M1 P/E. For M1, an E core is a tiny ultra low power thing whose main role is running low intensity background jobs (Time Machine backup, check your mail, etc) at almost no power (sub 1W). It's not really part of the performance story. All four Apple E cores put together provide maybe 1.2x the compute throughput of a single Apple P core.

Alder Lake's Gracemont E cores are not that. They're actually quite important to AL multicore performance. Gracemont can't hit Golden Cove's ST performance peaks, or anywhere close to them, but they're decent enough and, more importantly, have a power/performance curve much friendlier to MT performance scaling.

Apple also does not have SMT, fyi. M1 Ultra is 20c, 20t.

JamesJones44

Reputable

Not sure what happened here, but even wccftech got it right, and that is a low bar to pass...

Apple M1 Ultra SOC Benchmark Leaks Out, Slower Than Intel Alder Lake In Single-Thread But Close To 32 Core AMD Threadripper In Multi-Thread

The first benchmarks of Apple's M1 Ultra SOC have leaked out, showcasing huge performance gains versus Intel & AMD Desktop CPUs. (wccftech.com)

Those benchmarks from wccftech are very suspect. Unless they are the best Hackintosh users on the planet, I've never seen an iMac Pro with a 12900K or a Mac Pro with a 3970X.


JamesJones44

Reputable


Yes, that was my point: even if you add SMT, you're still talking 16 performance cores on the M1 Ultra vs. 8 + maybe 4 for SMT on the 12900K (SMT doesn't scale linearly, so how much it adds depends heavily on the workload).
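The "core equivalents" reasoning can be written as a tiny function. The 0.5 SMT uplift and the 1.2 P-core equivalents for Apple's four E cores are the rough guesses from this discussion, not measurements, and the 12900K's E cores are left out because no figure for them was given. `core_equivalents` is a hypothetical name.

```python
def core_equivalents(p_cores: int, smt_uplift: float = 0.0, e_core_equiv: float = 0.0) -> float:
    """Rough 'P-core equivalents' for a chip.

    smt_uplift: extra throughput SMT adds per P core (0.5 = "half a core").
    e_core_equiv: total P-core equivalents contributed by all E cores.
    Both are workload-dependent guesses, not constants.
    """
    return p_cores * (1.0 + smt_uplift) + e_core_equiv

print(core_equivalents(8, smt_uplift=0.5))     # 12900K P cores with SMT: 12.0
print(core_equivalents(16, e_core_equiv=1.2))  # M1 Ultra, no SMT: about 17.2
```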

The M1 Ultra gets absolutely crushed in Cinebench R23; it's way down the list. Geekbench is what companies use to sucker inexperienced people.

SkyBill40

Distinguished


Funny how this isn't my story though, right? Not my website, either. If either were, I would have definitely disclosed all the pricing and been completely forthcoming on ALL of the details with regard to testing criteria, which would also account for all of the pieces and parts used for the purpose of the test. You Apple mad, bro? So aggravated that you created an account today just to post?


Some of the scores faked? Provide your verifiable citation to support your claim. Burden of proof is on you for that and no one else.


I love how everyone compares x86 CPUs built to accept a wide array of user-determined memory configurations to an entire system-on-chip design tuned to get maximum performance out of predefined secondary components. I wonder how much faster Zen 3 or Alder Lake would be compared to the M1 Ultra if they too had dedicated 800GB/s memory, or if the M1 Ultra were limited to 3200MHz DDR4.

Once AMD or Intel makes an SoC like you describe, then we can talk. Until then, the only ones we can buy, install software on, and use for work/play run macOS.

These Apple SoCs have their disadvantages and they have their advantages. x86 systems also have their pros/cons. You can't really criticize people for comparing the systems just because they have vastly different architectures. That's not how the real world works. The person with a $2000 x86 system isn't going to feel better because the $2000 Mac Studio is "only faster because it's an SoC". They don't care how it's made, they just care how fast it is and how much it costs.


The i9 has 8 performance cores and 8 efficiency cores. The Ultra has 16 performance cores and 4 efficiency cores. Seems about right to me, especially considering we've known that Apple SoCs start seeing diminishing returns beyond 8 performance cores. Still, considering the power usage, it's amazingly efficient.


You PC mad, bro? My emotion is amusement, not anger. I'll be honest here: I created an account to lightly troll you insecure PCMR types (which is not everyone posting in this thread, there's some cool people here) who are so emotionally threatened by the idea that an Apple product might be fast. But I promise you this: unlike a real troll I will never knowingly say something false. I'm trolling you with the facts as I understand them.


https://browser.geekbench.com/v5/cpu/2433952

If you think 5441 single core / 16245 multi is a legit score for a Snapdragon phone SoC, you are living in a different world than me. This is a very obvious fake, which is why it got flagged as inaccurate. There are almost certainly lots of less obvious unflagged fakes in the database, they're just harder to spot.

Unlike a bunch of the disinfo that's been posted in this thread, this is a legitimate issue with Geekbench. If you want to really trust a GB5 score, you have to run it yourself, get one from a source you trust, or figure out how to cut through all the noise in the public database. (It's not just fakes which are a problem. People upload tons of bad scores because they didn't quit everything using CPU before running the benchmark, and the unpaid version of GB always uploads every run to the database.)
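One hedged way to catch the obvious fakes in a batch of downloaded results is a robust outlier test. The sketch below uses the modified z-score based on the median absolute deviation (a standard statistical technique, not something Geekbench itself is known to do); `flag_outliers` and the common 3.5 cutoff are illustrative choices.

```python
from statistics import median

def flag_outliers(scores: list[float], threshold: float = 3.5) -> list[float]:
    """Return scores whose modified z-score exceeds `threshold`.

    Uses the median absolute deviation (MAD), which a handful of fakes or
    overclocked runs can't drag around the way they drag a mean.
    """
    med = median(scores)
    mad = median([abs(s - med) for s in scores])
    if mad == 0:
        # Most scores are identical; anything different stands out.
        return [s for s in scores if s != med]
    return [s for s in scores if 0.6745 * abs(s - med) / mad > threshold]
```

This only catches results that are wildly out of line with the rest. A plausible-looking fake, or a run spoiled by background load that lands near the pack, will pass, which is exactly why the less obvious fakes are hard to spot.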

If I were you, this is the thing I would be hanging my hat on. Until the general public gets access to Mac Studio M1 Ultra hardware, nobody should treat that single score from an unknown source as legit. There's a good chance it's real, it's plausible given what we know about M1 family chips, but it needs to be independently verified.

By the way, it's funny to me that this, of all things, was what got you to throw down the PROVE IT BRUH gauntlet. Would you say that to me if I said there's a cheating problem in online video games? Probably not, right? Everyone knows it's true.

SkyBill40

Distinguished

I didn't throw down the gauntlet of "prove it bruh" as it was already your responsibility to do so and no one else. People shouldn't have to ask or demand citation to back a claim as the person making the claim should provide it in the first place.

PC mad? LOL. Good lord, you actually bit on that.

I have no issue with Apple making their own products and doing well in their specific niche market. Fast is subjective, relative, and is varied as there isn't some golden standard to judge. Assume all you want though as that's your prerogative. Insecure? Nah, man. Not at all. Believe whatever suits you. Not one of those PCMR types either.

As for Geekbench scores, that's been covered ad nauseam here. It's seemingly not reliable by any means, given the false scores you speak of. Seeing that, why does Apple use it? Why not use some of the far more reliable benchmarking software? Or does that other software not shine as favorable a light on them? I'm legit asking here. If you're an Apple guy, feel free to elaborate.

To your final point, there's no need to have you provide any proof for your non sequitur angle. Computer gaming has been overrun with cheating and the evidence is well documented. I think we've all felt the pain from that and it's not going away.



Reasonable people having ordinary conversations don't instantly bark about YOUR RESPONSIBILITY TO PROVE blah blah blah when they doubt something, especially if it's not an outlandish idea. They just politely ask for more info, and only get your level of rude if the person in my position is evasive and refuses to provide any support. You went nuclear from the word go. It's almost as if you're really angry about something. I wonder what that could be?


Good lord you actually wrote "Apple mad", and now you mad it got turned back on you, eh?


One can make a distinction between the Geekbench results database, which is not great, and Geekbench the benchmarking tool, which is decent.

It wasn't always that way. Someone in this thread linked to the thing where Linus Torvalds was bashing it. Without even clicking through the link I'm guessing it was one of his rants about its scores treating AES cryptography as a CPU test. He didn't like this because most CPU tests in GB are genuinely testing the CPU core itself, but the AES test will use an accelerator on most platforms.

Modern GB still does AES the same way, but weights it so low in the composite scores that it doesn't influence them much. Not perfect, but that objection was mostly dealt with.


I think you're a little confused here. The Tom's article was about an anonymous Geekbench score showing up in the database. Nobody knows who put it there. It probably wasn't Apple.

One possibility is that a journalist got their review unit and ran the free version of GB5, which auto-uploads an anonymized report after every run. (One feature you unlock by paying for Geekbench is the ability to control these uploads, including not doing them at all.) Another possibility is that some troll decided to have some fun.

The benchmarks Apple uses to promote the Mac Studio are mostly professional creative apps - Final Cut Pro, Affinity Photo, Photoshop, and so on. They don't use Geekbench.


My point was that this isn't any different. If you were as familiar with the merits and demerits of Geekbench as you pretended to be, you wouldn't find it a controversial idea that its database has a lot of fake and low quality scores.

cryoburner

Judicious

John Poole ran a site called "Geek Patrol" for a number of years that was focused on Macs, though I don't think it's been online for a while. You can still find it on archive.org, though newer entries appear to be from squatting sites after the domain was either sold or allowed to expire.

That's not to say they are necessarily purposely giving Apple hardware an edge, but with the developer having an Apple-centric background, there's definitely room for some amount of bias to enter there.

Right off the bat, I can see they are compiling the benchmark software using the native Xcode compiler for macOS and iOS, developed by Apple, while for the other platforms they use the Clang compiler... which was also originally developed by Apple, even if it has been maintained by the open source community for a while. Still, Mac hardware could potentially be getting an edge in the results from that, considering Apple's close ties to the compilers used. It's not like they are using the Intel compiler for Intel systems in the same way. And the majority of software for Windows has not been compiled with Clang, so it's not necessarily going to be representative of the compiler optimizations found in most software. That's not necessarily a fault of the benchmark software, as they are probably trying to keep the compiler as similar as possible across devices, but it could potentially benefit Apple.


Then I see they arbitrarily weight the categories of tests, with the cryptography test amounting to 5% of the total score, integer tests amounting to 65% and floating point tests 30%. How they decided on those arbitrary weighting values is anyone's guess, and it sounds like all tests within those categories are given the same weight. Needless to say, any benchmark that tries to represent overall CPU performance by a single score in a one-size-fits-all approach is not going to represent the different ways people use computers. Who cares if a given processor is faster at certain tasks that push it into the lead over another, if those are not the tasks you will be using it for?
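To make the weighting concrete, here is a sketch under two assumptions: that the composite is a weighted arithmetic mean of section scores, and that each section score is a geometric mean of its workload scores. That is my reading of how Geekbench 5 is described, not a verified reimplementation of its scoring code. With every section at 1000, doubling the crypto score (as a hardware AES accelerator might) moves the composite by only 5%.

```python
from math import prod

# Section weights as described above for Geekbench 5 CPU.
WEIGHTS = {"crypto": 0.05, "integer": 0.65, "float": 0.30}

def geometric_mean(values: list[float]) -> float:
    """Equal-weight geometric mean of workload scores within one section."""
    return prod(values) ** (1.0 / len(values))

def composite(workload_scores: dict[str, list[float]]) -> float:
    """Weighted arithmetic mean of per-section geometric means (assumed scheme)."""
    return sum(WEIGHTS[section] * geometric_mean(v) for section, v in workload_scores.items())

base = {"crypto": [1000.0], "integer": [1000.0, 1000.0], "float": [1000.0, 1000.0]}
boosted = {**base, "crypto": [2000.0]}  # crypto score doubled, everything else unchanged
print(f"{composite(base):.0f}")     # 1000
print(f"{composite(boosted):.0f}")  # 1050
```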

And I would describe these tests as "semi-synthetic". They do utilize some open-source routines to perform real-world tasks, but not necessarily in ways that will be entirely representative of how actual software will handle the same routines. For that, one would need to run tests on actual, complete software, rather than simply running a quick test that spits out a single number derived from an arbitrary collection of tests.

And of course, any prior x86 software for Macs not optimized for the M1 processors will be getting emulated, which can in many cases result in significant slowdowns, but would not be something represented by these benchmarks.

The benchmark itself might potentially be okay for making rough comparisons of similar hardware architectures with similar operating systems, but when you are changing up everything, there are far too many variables to derive meaningful results. The new Apple chips seem fine for what they are, but one shouldn't put too much weight on these numbers.


Space.com is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.