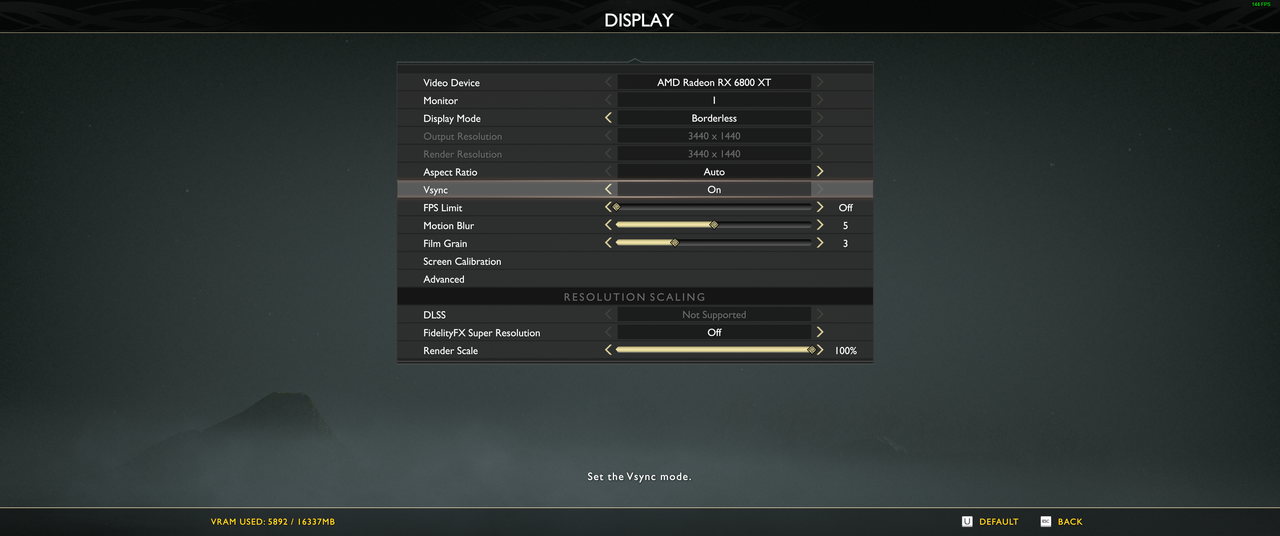

DLSS and FSR testing basically overlaps with testing at 1080p and 1440p. No, it's not exactly the same, but at some point I have to draw the line and get the testing and the article done. As noted, God of War doesn't support exclusive fullscreen mode, so it always runs at the desktop resolution. To switch from 4K in borderless window mode to 1440p, I have to quit the game, change the desktop resolution, and relaunch the game, then repeat the whole process for 1080p. Testing all three resolutions would roughly triple the testing time. The alternative was to drop much of the DLSS and FSR testing and only cover the different resolutions. Either way something was going to get left out, and I decided to focus on DLSS and FSR.

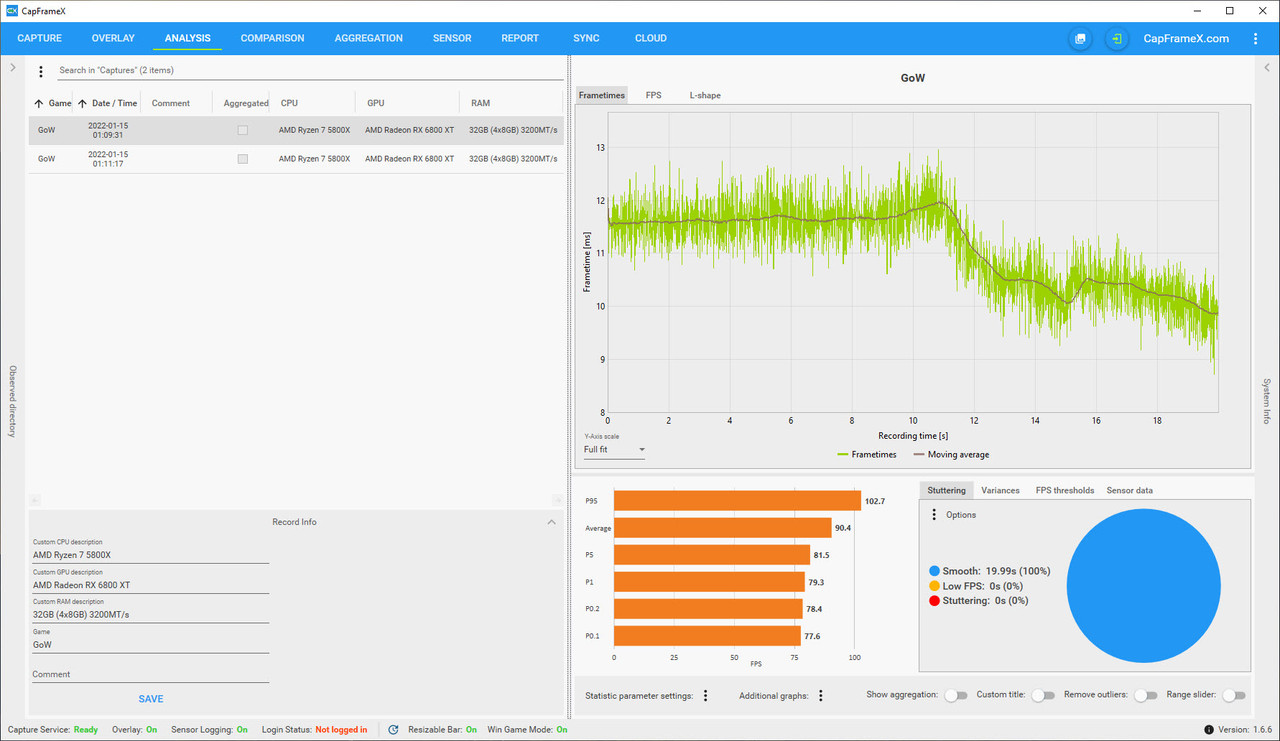

As pointed out in the text, an RX 5600 XT at 4K with original quality settings and FSR quality mode averaged 64 fps. That means any faster GPU should also be playable at 4K, which includes the entire RTX 20-series. FSR quality mode renders at 1440p and upscales the result to 4K, and because the upscaling pass adds some overhead, FSR doesn't exactly match native-resolution performance. Running at native 1440p with original quality would therefore be even faster, so 1440p is easily within reach of previous-generation midrange GPUs, and 1080p lowers the requirements even further.
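To make the resolution relationship concrete, here is a minimal sketch that computes FSR's internal render resolution from the output resolution. It assumes the game uses FSR 1.0 with AMD's published per-axis scale factors (1.3x, 1.5x, 1.7x, and 2.0x for Ultra Quality, Quality, Balanced, and Performance); the `FSR1_SCALE` table and `render_resolution` helper are hypothetical names used only for illustration, not part of any AMD API.

```python
# Assumed per-axis scale factors for AMD FSR 1.0 quality modes.
# Each mode renders at (output resolution / scale) on both axes, then upscales.
FSR1_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Return the approximate internal render resolution for an FSR 1.0 mode."""
    scale = FSR1_SCALE[mode]
    # Divide each axis by the scale factor and round to whole pixels.
    return round(out_w / scale), round(out_h / scale)


if __name__ == "__main__":
    # Print the internal resolution each mode uses when targeting 4K output.
    for mode in FSR1_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        print(f"4K {mode}: renders at {w}x{h}")
```

With a 4K target, Quality mode works out to 2560x1440 and Performance mode to 1920x1080. This is why FSR quality results at 4K serve as a rough (slightly pessimistic) stand-in for native 1440p performance.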