Ugh. "This video is not one of those BS clickbait videos!" Proceeds to deliver a BS clickbait video titled "I just found out the RX 6700 Performs the SAME as the RTX 4070..." Um, no. Not even close. Maybe in one particular game that is known to be a poor port, but that's not at all representative, so a title focusing on that aspect is by definition clickbait.

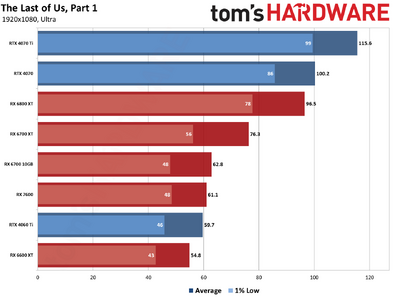

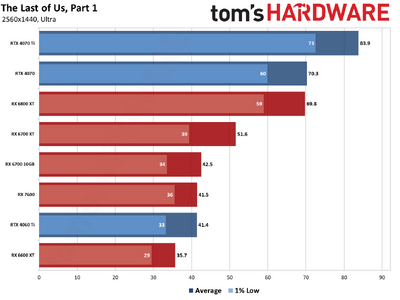

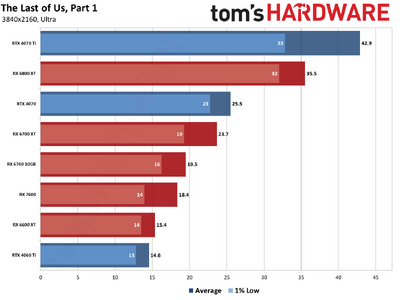

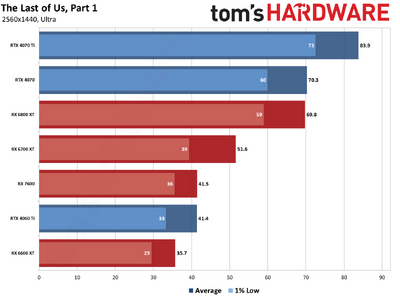

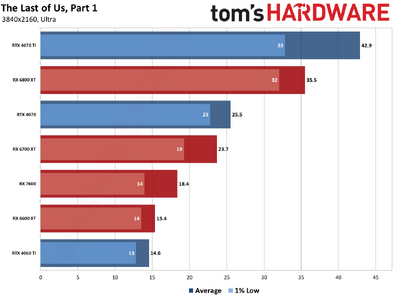

For the record, in a larger test suite, I found the RX 6700 10GB is about as fast as an RTX 2080 Super, and slower than the RTX 3060 Ti. That's in rasterization performance. In ray tracing, it's below the RTX 2060 Super. Relative to the RX 6700, the RX 6950 XT is about 60% faster and the RTX 4070 is 43% faster (sticking with rasterization performance). Any result that falls well outside those margins is going to be due to whack coding or some other factor.
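To put rough numbers on that, here's a minimal sketch of the sanity check I'm describing. The speedup figures are the ones from my test suite above; the function names and the 15% tolerance are arbitrary assumptions for illustration, and the 100 fps baseline in the usage lines is a made-up placeholder, not a measured result.

```python
# Rasterization speedup relative to the RX 6700 10GB (figures from the post above).
EXPECTED_SPEEDUP = {
    "RX 6950 XT": 1.60,  # about 60% faster
    "RTX 4070": 1.43,    # 43% faster
}


def expected_fps(rx6700_fps, card):
    """Scale an RX 6700 result by the suite-wide average speedup for `card`."""
    return rx6700_fps * EXPECTED_SPEEDUP[card]


def is_outlier(rx6700_fps, card, measured_fps, tolerance=0.15):
    """Flag a result that deviates from the expected value by more than `tolerance`.

    The 15% default is an arbitrary threshold chosen for this sketch.
    """
    expected = expected_fps(rx6700_fps, card)
    return abs(measured_fps - expected) / expected > tolerance


# Hypothetical example: the RX 6700 gets 100 fps in some game.
# An RTX 4070 "matching" it at 100 fps is far below the ~143 fps you'd expect.
print(is_outlier(100, "RTX 4070", 100))
print(is_outlier(100, "RTX 4070", 140))
```

A single game tripping that check doesn't mean the cards are equal. It means that game is behaving oddly and deserves a closer look.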

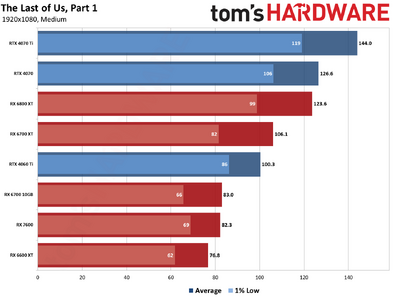

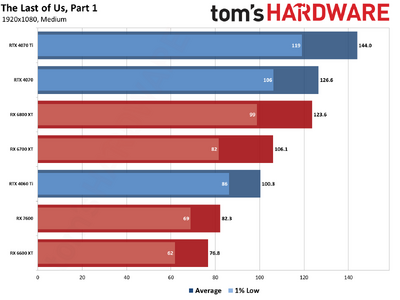

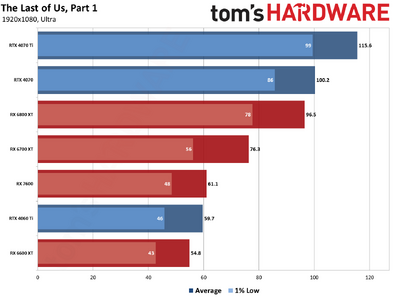

It looks like The Last of Us is hitting CPU limits. Also, the "OC + SAM" testing should really just be dropped and replaced with stock + SAM/ReBAR. It's making the charts messy and muddying the waters. Overclocking is variable and it's not usually representative of the end-user experience IMO. Anyway, there are lots of questions raised, and that video doesn't provide much in the way of answers.

What happens at 1440p or 4K? Because that would tell you if there's a CPU limit: if the frame rate barely drops as the resolution goes up, the GPU isn't the bottleneck. There's very little reasonable explanation for why the RX 6700 would otherwise match an RX 6950 XT.
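The resolution check can be sketched in a few lines. This is only a rule of thumb under my own assumptions: the function name, the 10% cutoff, and the fps numbers in the usage lines are all hypothetical, not data from the video or from my testing.

```python
def looks_cpu_limited(fps_1080p, fps_1440p, max_drop=0.10):
    """Heuristic: 1440p pushes roughly 78% more pixels than 1080p, so a
    GPU-bound game should lose a clear chunk of its frame rate. If the drop
    is under `max_drop` (an assumed 10% cutoff), something other than the
    GPU, most likely the CPU, is holding performance back.
    """
    drop = (fps_1080p - fps_1440p) / fps_1080p
    return drop < max_drop


# Hypothetical numbers for illustration only.
print(looks_cpu_limited(90, 88))  # barely moves: likely CPU limited
print(looks_cpu_limited(90, 62))  # scales with resolution: GPU bound
```

If the faster card only pulls ahead once you move to 1440p or 4K, that points to a CPU bottleneck at 1080p rather than the two GPUs actually being equal.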

[Disclaimer: I couldn't handle listening to him after about 15 seconds, so I stopped and just skipped forward and looked at the charts. What I saw in the first bit was enough to make me question the rest.]

I started searching for info about it and found the rumor that it performed really well on the 6700, so it was interesting to find out what was really going on. I'll be fine anyway because my monitor is only 1080p. Thanks again! Cheers!
