I asked the same question earlier. I mean, he even said he killed all the enemies so he could go back to the start of the map to record FPS.

That's the biggest fail ever as a reviewer. How is removing 50+ enemies on the map indicative of GPU performance numbers?

How is running the same path but with enemies in different places, with varying explosions and other effects, representative of performance? You could have good runs and bad runs and a margin of error of 5% or more. My testing was <1% deviation between runs. If you could run the exact same test every time but with enemies, performance would be lower but should scale in the same way.
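For anyone who wants to check run-to-run consistency on their own system, here's a minimal sketch of one way to quantify it. It assumes "deviation" means the gap between the fastest and slowest run as a percentage of the mean, which is only one reasonable definition. The function name and the FPS numbers are made up for illustration; they are not my measured results.

```python
from statistics import mean

def run_to_run_spread(avg_fps_per_run):
    """Gap between the fastest and slowest run, as a percent of the mean.

    Assumed definition of "deviation between runs"; other definitions
    (standard deviation, coefficient of variation) are just as valid.
    """
    if len(avg_fps_per_run) < 2:
        raise ValueError("need at least two runs to measure spread")
    m = mean(avg_fps_per_run)
    return (max(avg_fps_per_run) - min(avg_fps_per_run)) / m * 100.0

# Hypothetical average FPS from three passes over the same path.
controlled_runs = [100.0, 100.5, 99.8]  # same path, nothing else going on
chaotic_runs = [100.0, 95.0, 103.0]     # same path, different fights each time

print(f"controlled spread: {run_to_run_spread(controlled_runs):.2f}%")
print(f"chaotic spread:    {run_to_run_spread(chaotic_runs):.2f}%")
```

If the spread between repeated runs is larger than the gap between two GPUs you're comparing, the test can't tell those GPUs apart.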

I list the specifics of how I test so that people can understand what was done. There are infinite ways to conduct tests, and no one single way is perfect. This is not a failure on the part of my testing, it’s just the way things are.

I can tell you that I went into this with no expectations and I didn’t try to make AMD look good or bad, nor did I try to make Nvidia look good or bad. I found an area with typically lower performance than some of the early zones, then confirmed results were repeatable, and then commenced testing the eight GPUs. It’s what I do with any game, including those with a built-in benchmark. Check your own bias at the door.

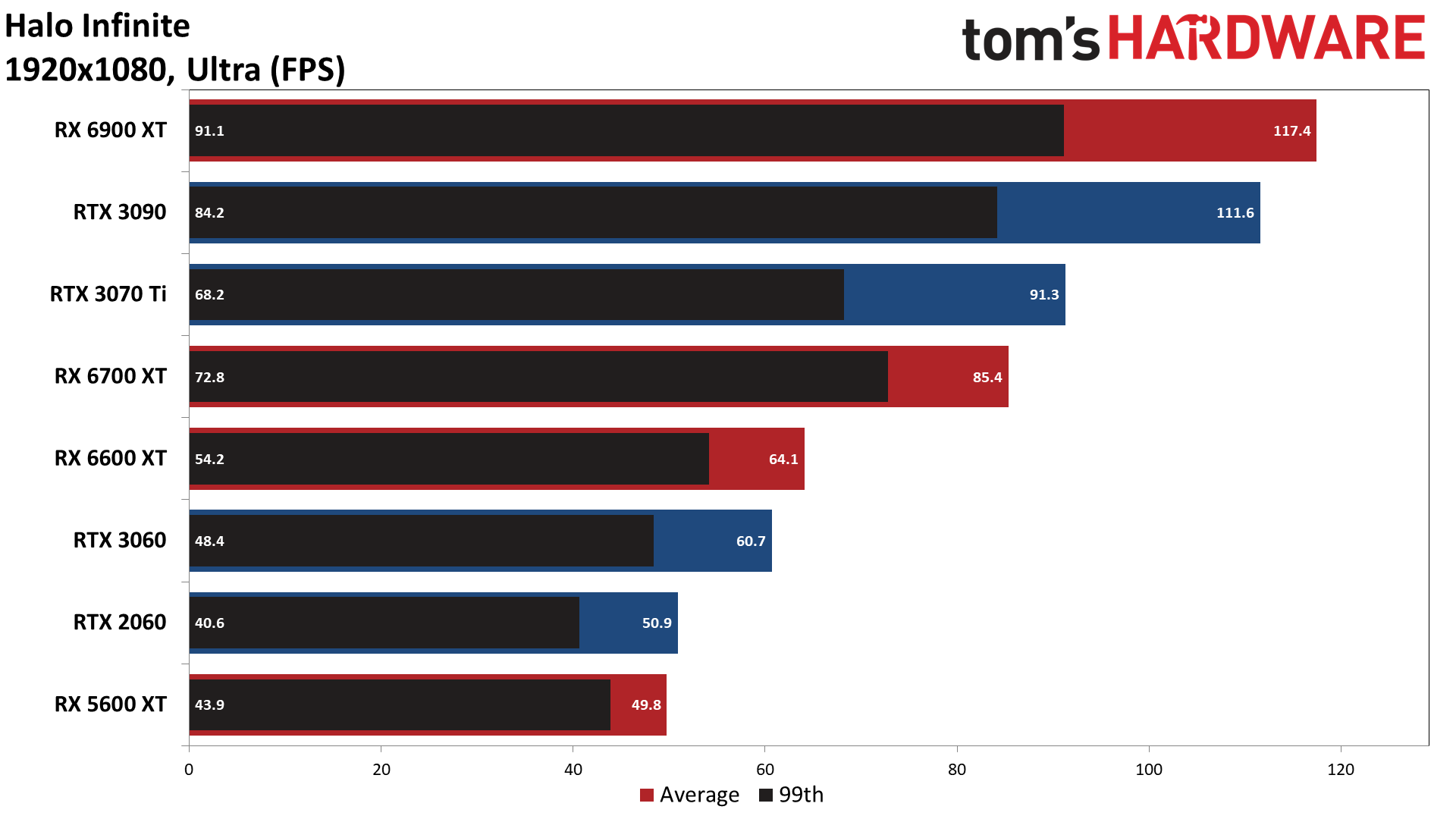

EDIT: For the record, I just ran a longer test sequence, running around the test area WITH and WITHOUT enemies. (I had actually checked this before, but didn't keep the results.) This was all at 1080p ultra on an RTX 3090. Here are the full results, including percentiles:

You'll note that the 99th percentile FPS on the first test run is lower than in my original testing, but that's because it was the first run of the level, so caching of data comes into play. The second test (Clear 2) matches closely on 99th percentile FPS, but both runs have a higher average FPS because I ran through some less complex areas over the course of 90 seconds (vs. 30 seconds of only the more demanding area). Now look at the battle runs. I died on the second and had to cut that short, but instead of a <1% variation in average FPS, it's now 3%, and there's a 15% spread in 99th percentile FPS. Lots of active enemies and fighting to not die means things are less controlled. However, the average FPS of the "battle" runs is within 3% of my original data if I exclude the second run, the "death run".
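In case the terms aren't clear, here's a rough sketch of how average FPS and 99th percentile FPS can be derived from a frametime log. It assumes frametimes in milliseconds and a nearest-rank percentile. Capture tools differ on the exact percentile method, so treat this as one common convention and not necessarily the exact math behind the numbers above. The function name and sample data are hypothetical.

```python
import math

def summarize_frametimes(frametimes_ms):
    """Return (average FPS, 99th percentile FPS) for a list of frametimes in ms.

    Average FPS is total frames divided by total time. The 99th percentile
    FPS here is the FPS equivalent of the 99th percentile frametime, using
    the nearest-rank method (an assumed convention).
    """
    n = len(frametimes_ms)
    if n == 0:
        raise ValueError("no frames recorded")
    total_seconds = sum(frametimes_ms) / 1000.0
    avg_fps = n / total_seconds

    ordered = sorted(frametimes_ms)      # fastest frames first
    rank = math.ceil(99 * n / 100)       # nearest-rank position, 1-based
    p99_frametime_ms = ordered[rank - 1]
    return avg_fps, 1000.0 / p99_frametime_ms

# Hypothetical log: 98 frames at 10 ms plus 2 slow frames at 20 ms.
sample = [10.0] * 98 + [20.0] * 2
avg_fps, p99_fps = summarize_frametimes(sample)
print(f"average: {avg_fps:.1f} FPS, 99th percentile: {p99_fps:.1f} FPS")
```

A couple of slow frames barely move the average but pull the 99th percentile down hard, which is why that number swings so much more between chaotic runs.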

Bottom line is that if you think the testing I did is a total fail because there weren't enemies on the level, this proves how little you know about the way games work. 90% of the graphics workload tends to be pretty much static: rendering the level and all the other stuff. The last 10% can include rendering other NPCs and such, but it usually doesn't change things by more than a few percent. So why did I test without enemies? And why did I fully disclose the way I test? Just to make sure people understood how benchmarking works. As one final example, I did a test on the first part of the game, running through the doomed starship. The average FPS for that sequence was 172.3, with a 99th percentile FPS of 113.0. That area is far less demanding, but testing there would be equally valid because it still represents part of the game. I use areas that tend to be lower in FPS just so people don't get their hopes up.