Hi, I currently own an MSI Z390 Pro Carbon AC motherboard with an i5-9600K (4.7 GHz Turbo Boost) and its integrated Intel UHD 630 iGPU.

Today I bought an NVIDIA GTX 1660 Super OC (1875 MHz), model "ROG-STRIX-GTX1660S-O6G-GAMING" (it hasn't arrived yet), for 2 reasons:

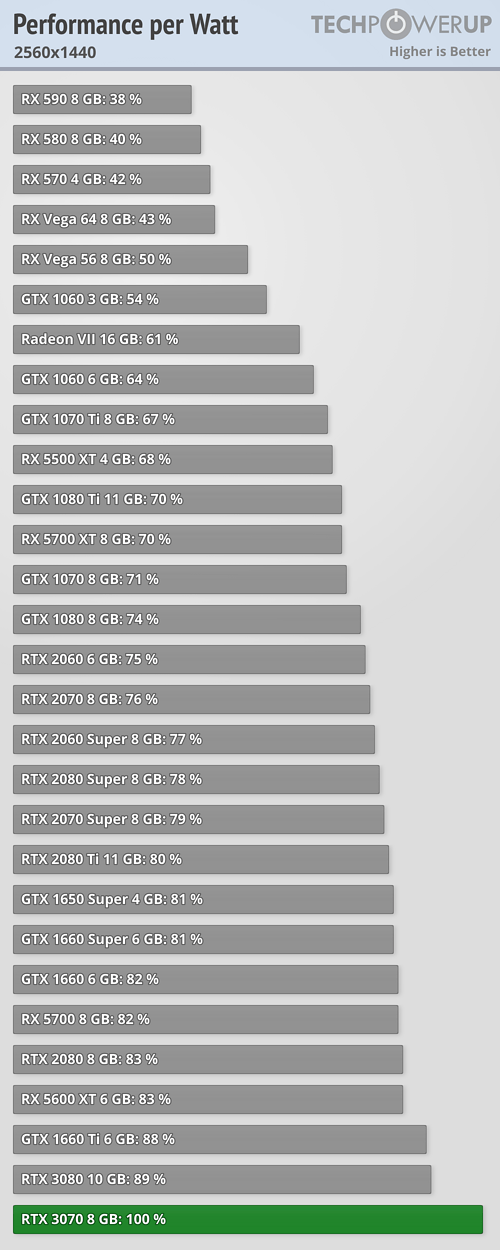

- I don't want or need an expensive GPU like the RTX 3070.

- I don't want a freaking 180-200 W (or even more) power-hungry component.

- Wait... I know it's terrifying to see that an RTX 3070 can draw 200 W or more when maxed out, but what I'm really looking for is efficiency: the "points" or "performance" a GPU delivers for each watt of power it uses. In theory, the GPU that gives the most points per watt is the most efficient one, and therefore the one that completes a given task using the least energy. Then I read this great article:

https://www.tomshardware.com/features/graphics-card-power-consumption-tested

Then I roughly calculated the numbers for some candidate GPUs, ignoring that performance varies between tasks and that max TDP does not exactly equal actual power consumption, but anyway:

Oh, and before calculating anything, I thought: "Obviously, the newer a GPU is, the more optimized and efficient it will be. I believe that rule holds in general, although I know some models or variants will brainlessly sacrifice efficiency for raw performance to be crowned king."

https://i.ibb.co/MS0tLzN/1.jpg
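The points-per-watt comparison in the linked calculation can be sketched roughly like this. It is a minimal sketch, assuming a GPU's "score" behaves like throughput (work units per second) measured at a known average power draw; the names `Gpu`, `points_per_watt` and `job_energy_j` are hypothetical, and "GPU A/B/C" with their numbers are made-up placeholders, not benchmark results. Under that assumption, points per watt is the same as work per joule, so the card with the best ratio uses the least energy for a fixed job such as a video export, even if it is not the fastest.

```python
# Minimal sketch: rank GPUs by "points per watt" and estimate the energy a
# fixed job would need. GPU names and numbers are made-up placeholders,
# NOT benchmark results; substitute your own measurements.
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    score: float    # relative performance "points", assumed = work units per second
    power_w: float  # average power draw (W) while producing that score


def points_per_watt(gpu: Gpu) -> float:
    """Efficiency: performance delivered per watt of power drawn."""
    return gpu.score / gpu.power_w


def job_energy_j(gpu: Gpu, work_units: float) -> float:
    """Energy in joules for a fixed job, assuming it runs at the full score.

    time (s) = work_units / score, so energy = power * time,
    which equals work_units / points_per_watt.
    """
    return gpu.power_w * work_units / gpu.score


gpus = [
    Gpu("GPU A", score=100.0, power_w=200.0),  # placeholder: 0.5 points/W
    Gpu("GPU B", score=60.0, power_w=100.0),   # placeholder: 0.6 points/W
    Gpu("GPU C", score=10.0, power_w=25.0),    # placeholder: 0.4 points/W
]

# Highest points-per-watt first: under these assumptions it also needs the
# least energy for the same job, even though GPU A is the fastest card.
ranked = sorted(gpus, key=points_per_watt, reverse=True)
for gpu in ranked:
    print(f"{gpu.name}: {points_per_watt(gpu):.2f} points/W, "
          f"{job_energy_j(gpu, 6000.0):.0f} J for a 6000-unit job")
```

With these placeholder numbers it lists GPU B first (0.60 points/W, 10000 J), then GPU A (0.50 points/W, 12000 J) and GPU C (0.40 points/W, 15000 J). Real cards rarely run a whole job at one constant power and score, so treat this as a first approximation only.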

Acknowledging the errors and "asterisks" behind each number, I guess we can conclude that the RTX 3070 and GTX 1660 Super are the most efficient of the GPUs I compared, which matches the article I linked, where these are listed among the most efficient cards on the market today. So I ask:

1. Based on this, if I export a video that uses the GPU heavily (with the rest of the components unchanged), would the 1660 Super be the best choice for using the least energy possible?

2. Let's say that with my current Intel UHD 630 iGPU I play a game at max settings at a steady 55-65 FPS, meaning the UHD 630 is at 100% usage (Task Manager confirms this; the CPU is not holding the GPU back at all). In other words, it is drawing its full 15 W. Then I enable VSync to cap the frame rate at 60 FPS, so I don't waste GPU power rendering frames my monitor won't display. Now here's the real deal:

I highly doubt it, but according to my calculation, the GTX 1660 Super running the same game at the same settings with VSync enabled would use only about 13 W (a bit less than 15 W, because it is more efficient than the Intel UHD 630). That sounds completely ridiculous; it is way too low.

Is this true?

No?

If that's not what really happens, how should we approach this correctly?
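The estimate in question 2 boils down to dividing the iGPU's 15 W by how much more efficient the 1660 Super is. Below is a minimal sketch of that arithmetic, assuming power scales linearly with delivered performance and that the full-load efficiency ratio still holds at a 60 FPS cap. The ratio 15/13 is only back-calculated from the ~13 W result above, not taken from the chart, and `scaled_power_w` and `floor_w` are hypothetical names: `floor_w` stands for a fixed draw the card has regardless of load, which the naive estimate implicitly sets to 0 W.

```python
# Minimal sketch of the naive "same frames, more efficient GPU" estimate.
# Assumption: power scales linearly with delivered performance.
# floor_w is a hypothetical fixed draw (fans, VRAM, board) that a card has
# regardless of load; the naive estimate implicitly sets it to 0 W.


def scaled_power_w(reference_power_w: float,
                   efficiency_ratio: float,
                   floor_w: float = 0.0) -> float:
    """Estimate a new GPU's power for the same capped workload.

    reference_power_w: power the old GPU draws for that workload.
    efficiency_ratio: new GPU's points-per-watt divided by the old GPU's.
    floor_w: hypothetical load-independent draw of the new GPU.
    """
    return floor_w + reference_power_w / efficiency_ratio


igpu_power_w = 15.0           # from the post: UHD 630 at 100% usage
implied_ratio = 15.0 / 13.0   # back-calculated from the post's ~13 W result

print(f"{scaled_power_w(igpu_power_w, implied_ratio):.1f} W")  # prints 13.0 W
# With a made-up 10 W floor, the same workload estimate becomes 23.0 W.
print(f"{scaled_power_w(igpu_power_w, implied_ratio, floor_w=10.0):.1f} W")
```

The sketch only makes the hidden assumptions explicit: any fixed draw the discrete card has, and any drop in efficiency at partial load, push the real figure above the naive 13 W.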
