Hi there!

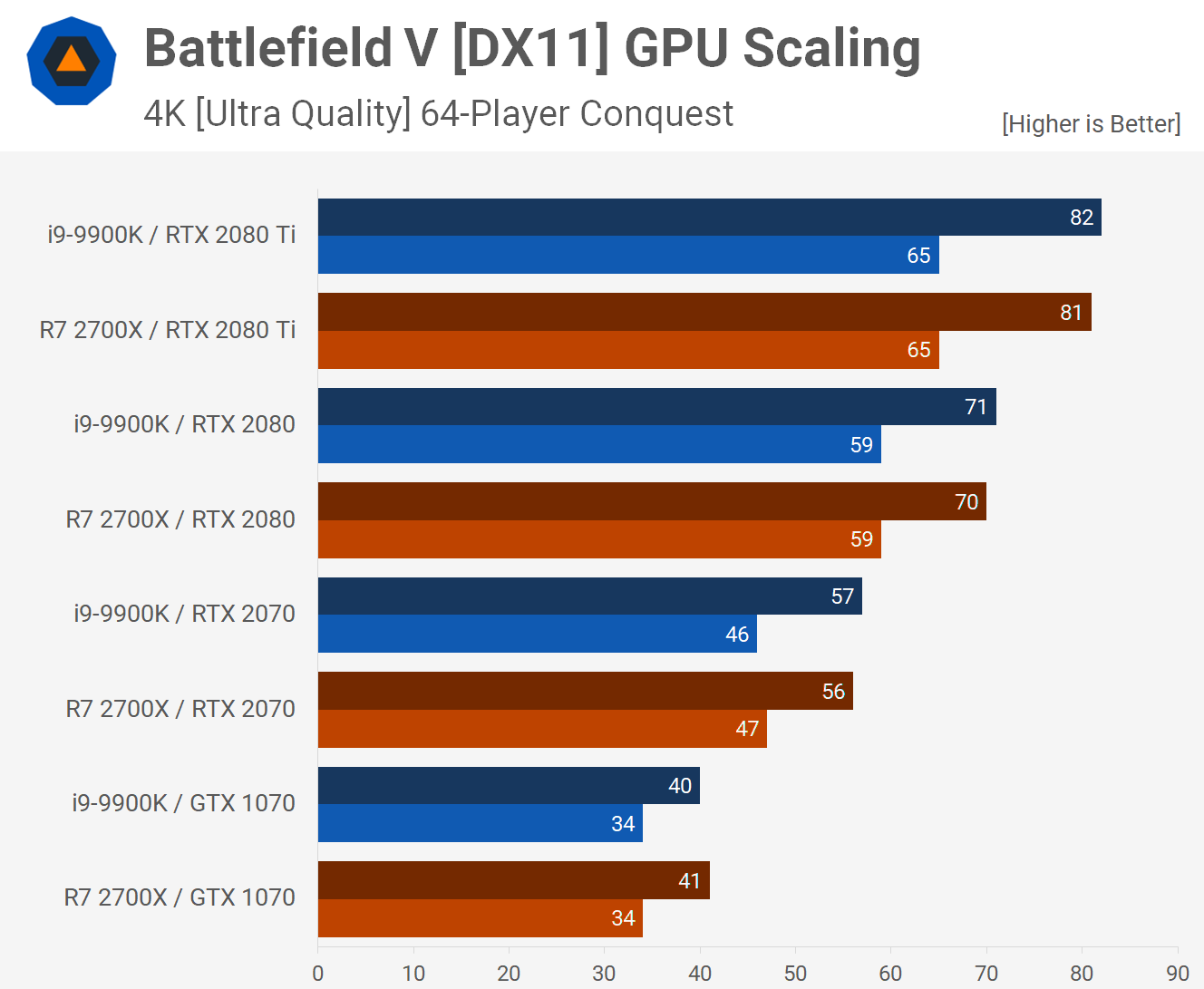

So I'm looking to build a new rig for gaming. The focus will be playing games at 1080p (21:9, 144Hz monitor) and possibly at 1440p later. I'm planning to use this setup for the next 4-5 years and I am thinking of spending quite a lot of money, so high-end but not NASA-killer setups are under consideration (i5-9600K, i7-9700K, RTX 2080-ish GPU).

I've always had Intel processors, but I am aware that AMD has once again become a heavy hitter, a real deal in the business. I am glad and everything, but it is hard to let go of my initial discomfort about changing manufacturer and platform. However, I am willing to do it, considering that there are more than enough valid reasons behind it. Emotions do play a part, though, and I surely can't really go wrong with an i7 or i5, nor with a Ryzen 5 (3600 and 3600X) or Ryzen 7 (3700X).

So please, try to convince me to buy a new rig with AMD architecture! But please keep in mind: framerate and longevity are the two key factors. I am not really interested in things like faster video export or faster times in WinRAR 😀

Thank you
