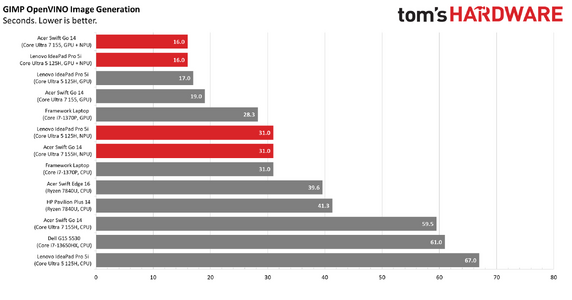

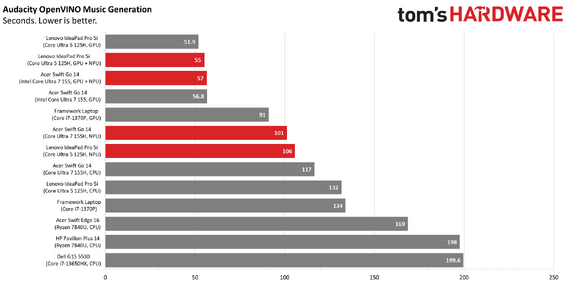

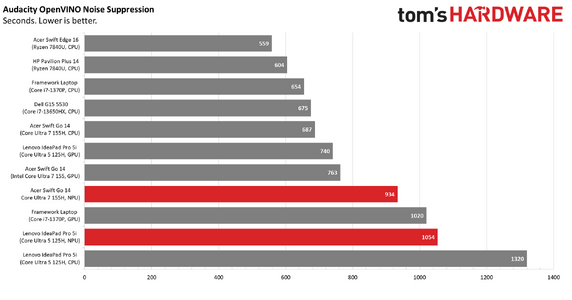

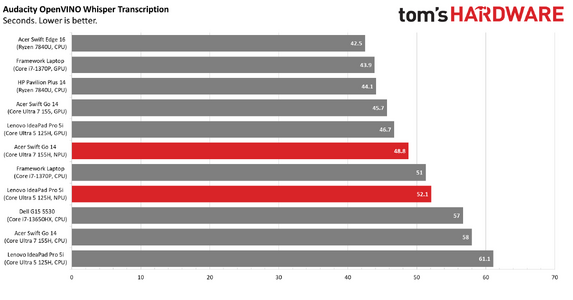

I tested Intel's new AI-friendly Meteor Lake chips on real-world inference workloads such as music and image generation. The results may surprise you: AMD's chips sometimes beat them.

I tested Intel's Meteor Lake CPUs on AI workloads: AMD's chips sometimes beat them

I tested Intel's Meteor Lake CPUs on AI workloads: AMD's chips sometimes beat them : Read more