Intel already has memory controllers on the same chiplet as the CPU cores, but unfortunately inter-mesh communication isn't good.

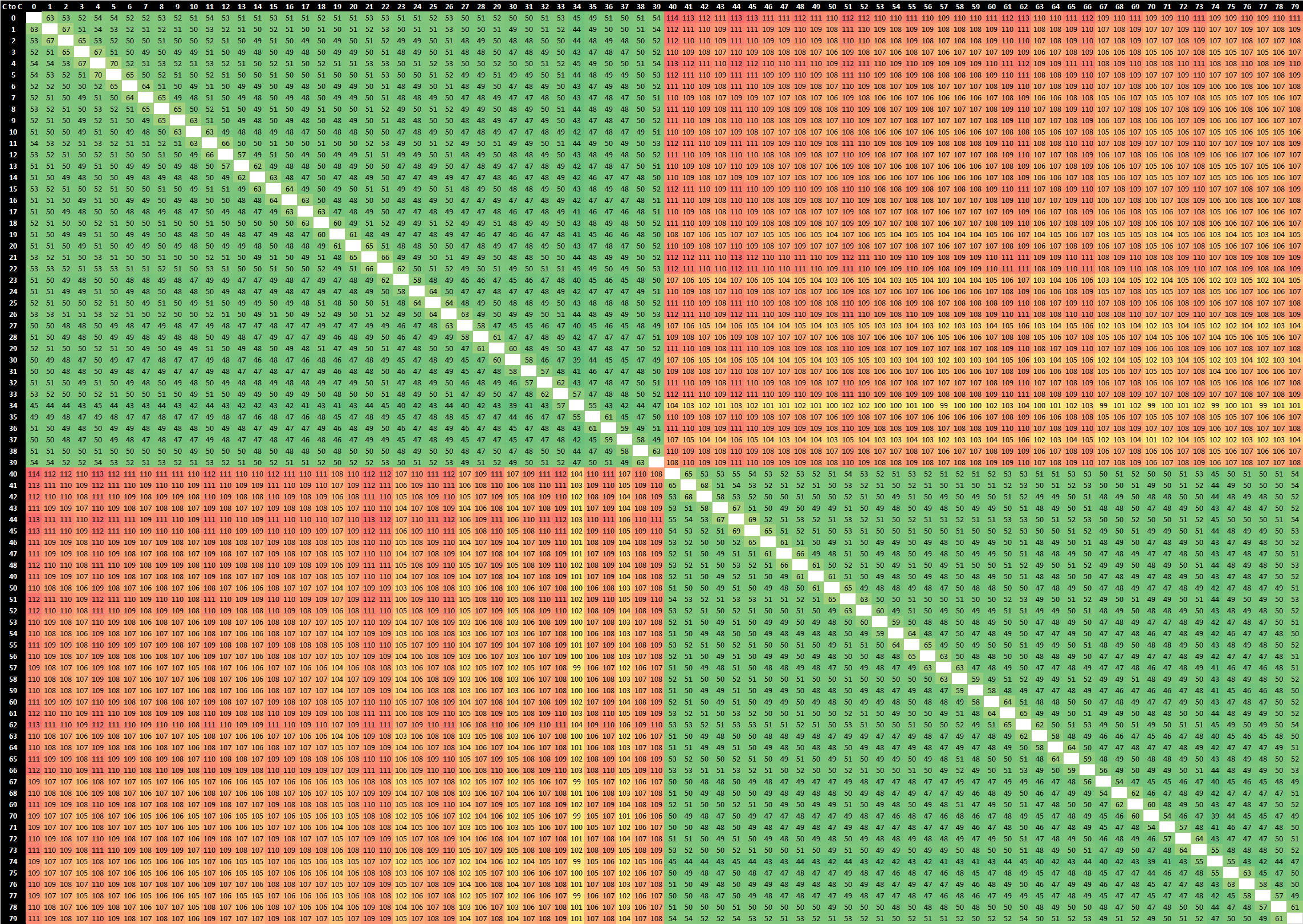

Meshes just aren't great at latency compared to AMD's chiplets, which use ring buses.

The last server CPU AnandTech reviewed was Ice Lake; however, it gives us a chance to compare a fairly recent mesh against the interconnect topology of Milan (Zen 3).

If you click on these images and look at the numbers written in the cells, you can see that core-to-core communication latency is markedly better in Ice Lake.

Ice Lake SP 8380

EPYC Milan 7763

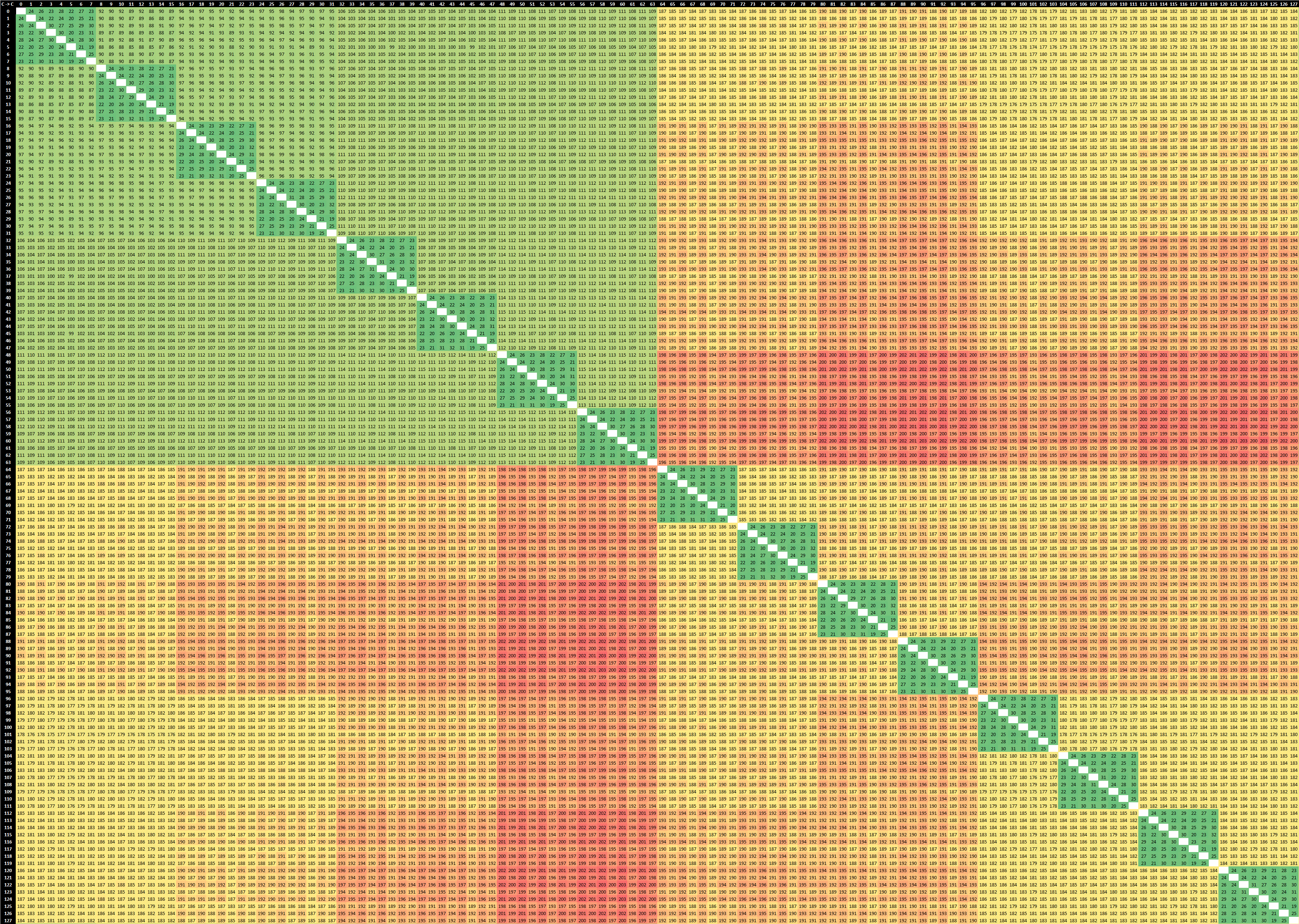

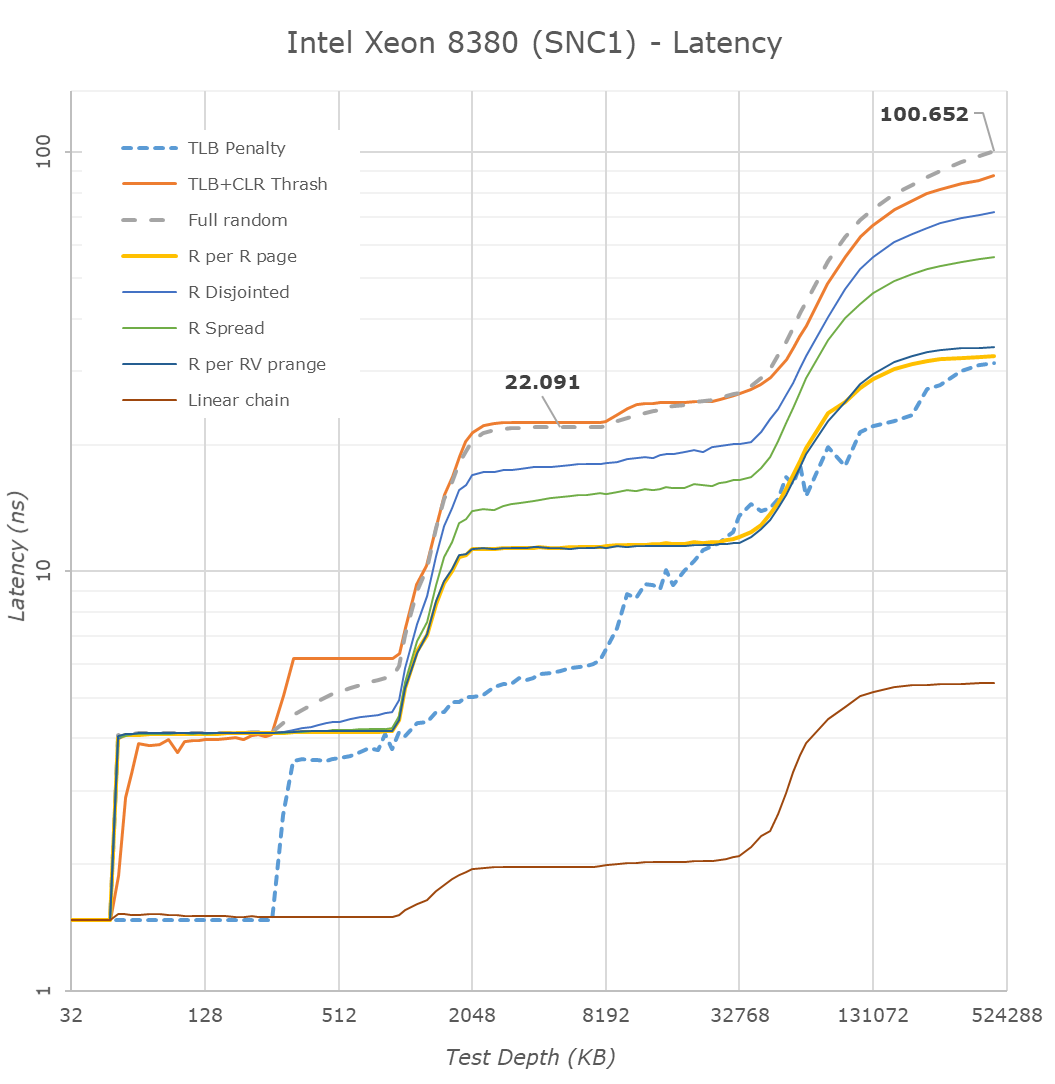

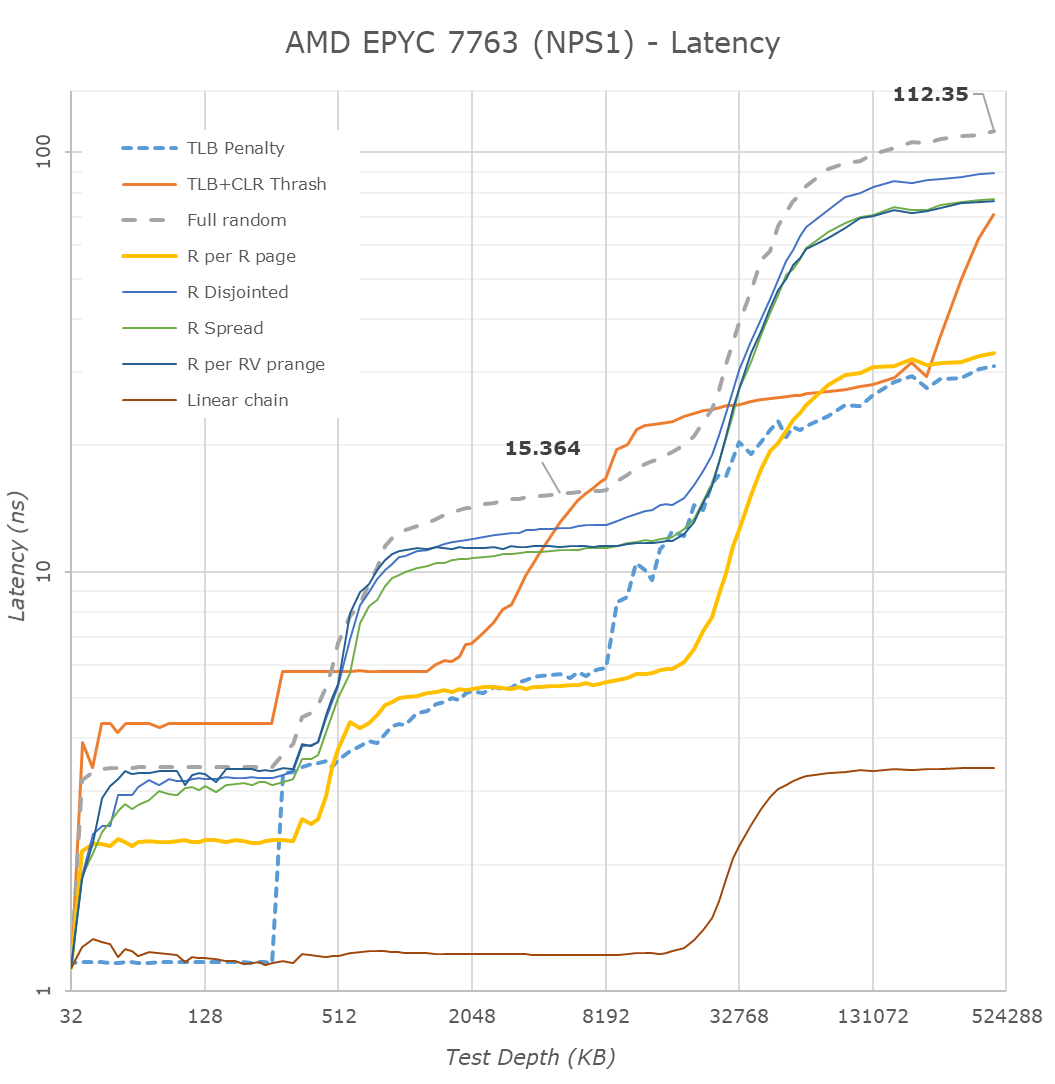

Now, here's how they compare on memory latency:

Ice Lake SP 8380

EPYC Milan 7763

Source: https://www.anandtech.com/show/16594/intel-3rd-gen-xeon-scalable-review/4

DRAM latency is definitely better in Ice Lake. Furthermore, despite having much more L3 cache overall, Milan spills out of it sooner. That's because a core can only populate the L3 slice local to its own chiplet.
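To make the capacity argument concrete, here is a rough sketch of how much L3 a single thread's working set can actually reach on each part. The cache sizes are the public spec-sheet figures (60 MB shared on the 8380, 8 chiplets x 32 MB on the 7763), which I'm assuming here rather than reading off the charts, and the helper names are made up for illustration. It is a capacity-only model: it ignores L2, associativity, and whatever other cores are doing to the same cache.

```python
# Public spec-sheet L3 sizes in MB (assumed here, not read off the charts).
ICE_LAKE_8380 = {"total_l3_mb": 60, "l3_domains": 1}   # one L3 shared by all cores
MILAN_7763 = {"total_l3_mb": 256, "l3_domains": 8}     # 8 chiplets x 32 MB each


def l3_reach_mb(cpu):
    """L3 capacity one core can allocate into (hypothetical helper).

    Assumes the total L3 is split evenly into private domains and that a
    core can only fill the domain it belongs to.
    """
    return cpu["total_l3_mb"] / cpu["l3_domains"]


def fits_in_l3(working_set_mb, cpu):
    """True if a single thread's working set fits in the L3 it can reach."""
    return working_set_mb <= l3_reach_mb(cpu)


if __name__ == "__main__":
    # Sweep a few working-set sizes and show where each part runs out of L3.
    for ws in (16, 32, 48, 64):
        print(
            f"{ws:>3} MB  Ice Lake fits: {fits_in_l3(ws, ICE_LAKE_8380)}  "
            f"Milan fits: {fits_in_l3(ws, MILAN_7763)}"
        )
```

Under these assumptions, a single-threaded test leaves Milan's local L3 once the working set passes 32 MB, even though the package has 256 MB in total, while the same thread on Ice Lake can keep allocating up to 60 MB.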

And besides, VM workloads reside in the private chiplet caches or go out to DRAM; they don't go looking for caches in other cores.

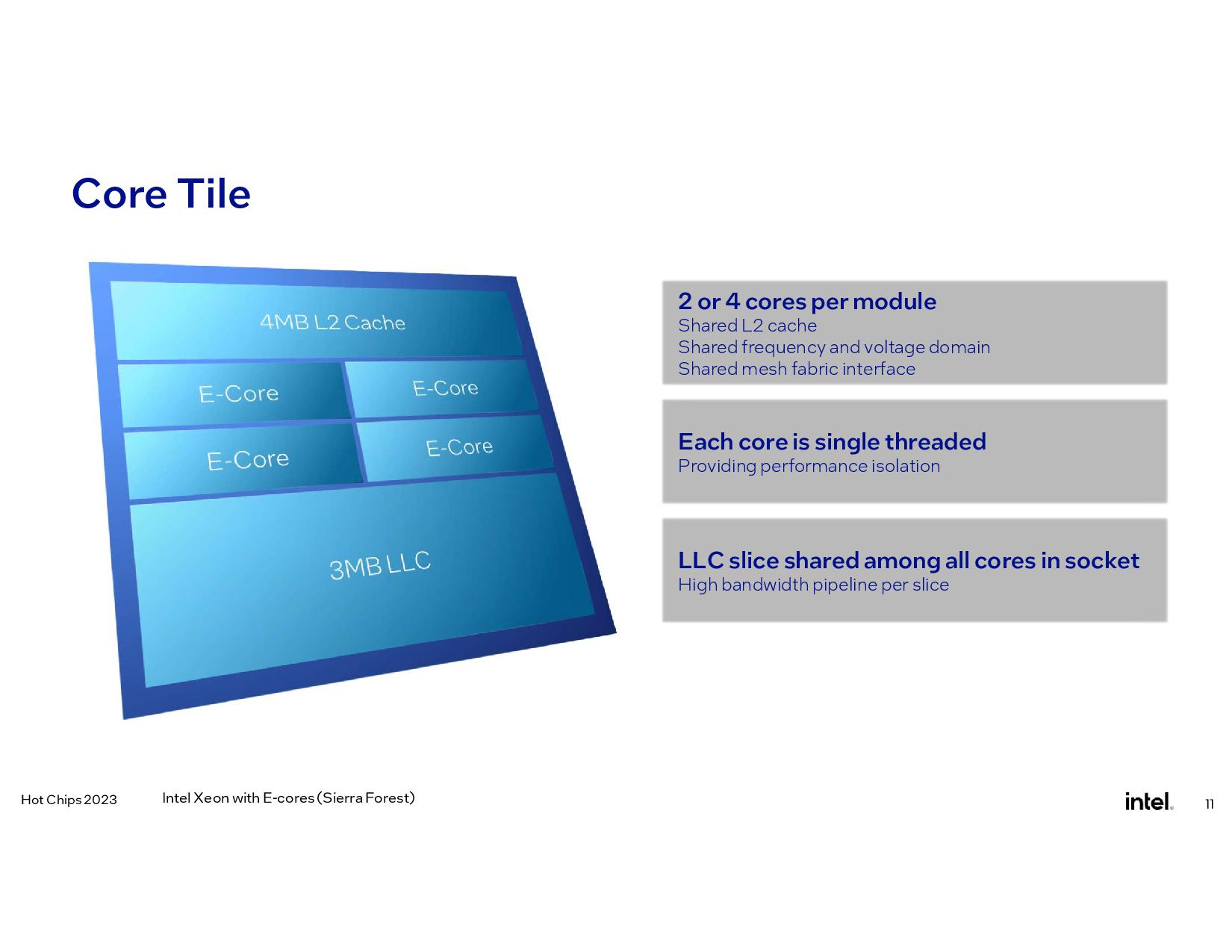

The point of Intel's shared L3 approach is that you can get more flexible sharing of L3 across the cores. In the best case, that could enable you to get more benefit from the same amount of L3 as in EPYC.

As for the part about "looking for caches in other cores", cache coherency demands that all caches be checked when you have a cache miss in a given slice of L3.