Intel set to ensure a smooth transition to new IFS leader as Randhir Thakur resigns.

Intel Foundry Services Chief Steps Down


> I don't see AMD being a customer of Intel's foundry.

I would love to see that, actually.

> Intel's Foundry Services need to be split off from Intel's Design Team.
>
> Separation of Church & State, but apply it to Chip Fabrication & Design.

Perhaps you haven't noticed the difference in size between TSMC and Intel's foundry business on the latest nodes.

> Perhaps you haven't noticed the difference in size between TSMC and Intel's foundry business on the latest nodes.

At the same time, what if Intel's Foundry Services were truly "Neutral & Independent" and opened themselves up to the world's companies, like TSMC?

This would be the fastest way to turn Intel Foundry into another GlobalFoundries, which I think was relatively larger compared to the competition when it got spun off.

Right now we have 2 top-end foundries in the world, and one of them is mostly in-house. If you disagree, then how much better would Intel's CPUs be if they went to TSMC? Would my 13900KF do 7 GHz on 2 cores and 6.6 GHz all-core without increasing volts in the BIOS, instead of 6/5.6 GHz? There is competitive innovation being done on both sides right now, and if there were one leading foundry and some stragglers, we would have little of that, plus monopolistic pricing.

Right now the market caps are TSMC = $408B, Nvidia = $381B, AMD = $117B, and Intel = $119B, and Intel is taking on the other 3. David and Goliath. And it's pressuring TSMC and AMD to innovate and keep prices down to keep up. Split up Intel's chip design and foundry, and this would end overnight.

Edit: Also, having the two integrated helps optimize each one for the other, kind of like in other areas of manufacturing. Many times the theoretical is not practical, and empirically based adjustments must be made to the design. That's easier to do when both sides can be an open book to those in charge of making the decisions.

> This would be the fastest way to turn Intel Foundry into another GlobalFoundries, which I think was relatively larger compared to the competition when it got spun off.

GlobalFoundries was competitive until its investors (mainly Abu Dhabi's Mubadala) decided to stop sinking money into developing 7 nm and instead just milk its existing production lines.

> Right now the market caps of TSMC = $408B, Nvidia = $381B, AMD = $117B, Intel = $119B, and Intel is taking on the other 3. David and Goliath.

You should look at revenues instead of market caps. Some of those companies are more overvalued than others, which skews the market caps.

> And pressuring TSMC and AMD to innovate and keep prices down to keep up. Split up Intel chip design and foundry and this would end overnight.

IFS just needs to get its legs under it (i.e. get enough business to be self-sustaining). And that's what they're trying to do.

> Edit: Also having the two integrated helps optimize the one for the other.

I believe the designers optimize for the manufacturing process a lot more than the manufacturing node is optimized for the chip designs. Having a split between the two doesn't need to end collaboration, as TSMC reportedly works closely with partners like Apple, AMD, and Nvidia.

> IFS would still focus on Frequency since that's their key advantage.
>
> Obviously IFS will improve over time, but they're a few steps behind.

No, I don't think it is. I think Intel just tunes their designs to clock high, but it's not intrinsic to the process node.

> No, I don't think it is. I think Intel just tunes their designs to clock high, but it's not intrinsic to the process node.

If it isn't intrinsic to their process node, then their focus in designing their process node seems to be on (Frequency & Performance) over (Power Savings & Transistor Density & Energy Efficiency).

> GlobalFoundries was competitive until its investors (mainly Abu Dhabi's Mubadala) decided to stop sinking money into developing 7 nm and instead just milk its existing production lines.

Really? What was GF competitive in making after they were spun off? Top-end CPUs? GPUs? RAM? Anything? Or were they just trying to sell what they could for whatever people would pay them? That isn't competitive.

> The chip-design parts of Intel don't need/want a massively capital-intensive chip-manufacturing division weighing them down, like a boat anchor around their necks. And the chip-manufacturing business is becoming too expensive for Intel to sustain, solely on the back of their in-house products. There's really no other option: Intel has to spin off their fabs, before they tank the entire company!

That makes less sense than Apple splitting up its Apple Store, software, and hardware divisions.

> What was GF competitive in making after they were spun off?

They were keeping pace with TSMC, up until 12 nm.

> Who is going to help some company like MediaTek design their chiplets so there isn't a communication bottleneck, an IFS that is focused on manufacturing?

TSMC does development of interposers, chiplets, and die-stacking technologies.

> Who is going to prioritize IFS to get the best performance out of their CPU designs, a collaboration of potentially competing IFS customers?

Are you talking about today, or a hypothetical future where IFS is a completely independent company? Even today, when IFS engages customers and works out a business arrangement, they should commit resources for supporting the customer, as you'd have with any other fab. Intel knows that if it wants IFS to be taken seriously, it has to live up to the standards customers are used to from other fabs they've used.

> Without the mantle of making the fastest CPU silicon in the world, IFS will be instantly second-rate.

If Intel is still using them to fab their CPUs, and Intel's CPUs are fastest, then IFS would have that mantle.

> And what's with these thread necros lately? I don't think I even had an Arc GPU when I wrote that.

Catching up on some old news articles. They're not like years old, just a couple of weeks.

> their focus in designing their process node seems to be with (Frequency & Performance) over (Power Savings & Transistor Density & Energy Efficiency).

I think Intel failed at making a dense 10 nm. From what I've read, their original 10 nm targets were too ambitious, and they had to scale back. Their lower density (compared with TSMC N7) isn't for lack of trying.

> However, if their CPUs had only P-cores, they wouldn't scale to multithreaded workloads as well as most competing AMD CPUs, due to the performance and area of the P-cores. That's why we now have hybrid CPUs on the desktop. You can run the numbers, yourself. Intel couldn't find a better way to win at both single-threaded and multithreaded performance.

I've looked at hypothetical numbers, and Intel would've won single-threaded & light MT, along with gaming, by a good margin if they split their P-cores & E-cores into two separate line-ups.

> I've looked at hypothetical numbers, and Intel would've won single-threaded & light MT, along with gaming, by a good margin if they split their P-cores & E-cores into two separate line-ups.

You're missing the point. Intel's goal was to build a single CPU that could beat Ryzen on lightly-threaded and heavily-threaded benchmarks.

> A pure, dedicated E-core CPU with 40 E-cores in one tile would ROCK Threadripper's very foundation.

I disagree that the 7502P is a good proxy for a 32-core Threadripper. It would be somewhere between that and the 9374F, because Threadrippers tend to clock a little higher than normal EPYCs.

> They were keeping pace with TSMC, up until 12 nm.

GF was mediocre and slowly fading into obscurity from the moment of the split: How AMD Left GlobalFoundries for TSMC | Meet Global (bnext.com.tw)

> TSMC does development of interposers, chiplets, and die-stacking technologies:
>
> - https://www.anandtech.com/show/16026/tsmc-teases-12-high-3d-stacked-silicon
>
> - https://www.anandtech.com/show/16031/tsmcs-version-of-emib-lsi-3dfabric
>
> - https://www.anandtech.com/show/16036/2023-interposers-tsmc-hints-at-2000mm2-12x-hbm-in-one-package
>
> - https://www.anandtech.com/show/16051/3dfabric-the-home-for-tsmc-2-5d-and-3d-stacking-roadmap
>
> - https://www.anandtech.com/show/1762...lerate-development-of-25d-3d-chiplet-products
>
> Same with Intel. Both are on the UCIe consortium, also.

And that is because Intel's foundries are combined with the rest of the company. Once again, from my previous post: "They aren't big enough for a giant design team that they would likely scalp straight from Intel to make." Certainly not big enough to compete with Samsung, much less TSMC. They won't be able to rest on their brand; they will have to produce results to stay competitive.

> Are you talking about today, or a hypothetical future where IFS is a completely independent company? Even today, when IFS engages customers and works out a business arrangement, they should commit resources for supporting the customer, as you'd have with any other fab. Intel knows that if it wants IFS to be taken seriously, it has to live up to the standards customers are used to from other fabs they've used.

The other companies would have their standards met, except ones like Apple. If Intel split off the foundry business, would they have their capacity met? Would IFS pursue lithography machines, capacities, and methods that worked best for Intel, or best for the mean of their customers? MediaTek would likely prefer density and efficiency. Would IFS deal with the hassle of EMIB and Foveros? Intel has taken some money-losing chances on stuff that had good potential, like Optane, Arc, Atom, Ice Lake, and Broadwell. Some have laid the groundwork for leading stuff. This is a lot less likely to happen in the future if Intel shrinks like AMD did. And an independent foundry business is a lot less likely to just go along with such schemes. An independent IFS is a lot more likely to flounder into obscurity like GF, and Intel would watch its best products get relegated to low-priority production capacity at whatever other foundry is leading the industry in 5 years.

> If Intel is still using them to fab their CPUs, and Intel's CPUs are fastest, then IFS would have that mantle.
>
> Look at TSMC: they were second-rate until they finally surpassed Intel, and they did that without ever designing their own CPU cores.

It is hard to stay at the top. Intel's designs are helping its fabs and vice versa.

> Catching up on some old news articles. They're not like years old, just a couple of weeks.

I'm on my second Arc. Went from an A380 to an A750. Seems like a long time to me, even though it isn't. I was just cranky because I couldn't respond to your last quote before the thread got closed. But you'll have that.

> Once again from my previous post: "They aren't big enough for a giant design team that they would likely scalp straight from Intel to make."

I don't know what you mean.

> Certainly not big enough to compete with Samsung, much less TSMC.

That's why they're trying to grow their foundry customer base before spinning it off. They need to become big enough to compete with TSMC. I think you've put your finger right on what I've been trying to tell you: Intel can't sustain a cutting-edge manufacturing operation solely on the basis of their own products. They need more volume than that.

> They won't be able to rest on their brand, they will have to produce results to stay competitive.

And that's a good thing.

> Would IFS pursue lithography machines and capacities and methods that worked best for Intel or best for the mean of their customers? MediaTek would likely prefer density and efficiency.

Same as every other business facing similar problems. If IFS doesn't have the resources to pursue all the market segments, they just have to pick the segments where they think they can be most competitive.

> Would IFS deal with the hassle of EMIB and Foveros?

I think so, because the world is moving to die-stacking and chiplets. That's probably what it takes to stay in the game these days.

> Intel has taken some money-losing chances on stuff that has had good potential,

Um, not sure Broadwell or Ice Lake really belong on that list, since those weren't supposed to be risky.

> This is a lot less likely to happen in the future if Intel shrinks like AMD did.

No. If the design portion of Intel cuts away the capital-intensive manufacturing appendage, it will be more profitable and should have more resources to invest in new initiatives.

> And an independent foundry business is a lot less likely to just go along with such schemes.

Of course not. That wouldn't even make sense. Except for Optane (which Intel partnered on with Micron), those are all products based on chip designs, which you could fab anywhere. The foundry just does the manufacturing part, you understand?

> An independent IFS is a lot more likely to flounder into obscurity like GF

Or TSMC?

> Intel would watch its best products get relegated to low priority production capacities at whatever other foundry is leading the industry in 5 years.

Intel will get whatever production capacity and schedule it's willing to pay for, just like AMD, Nvidia, Apple, etc. do today.

> It is hard to stay at the top. Intel's designs are helping its fabs and vice versa.

Oh, so the fabs which were about 4 years late on a viable 10 nm node helped Intel stay on top? That's news to me and just about everyone else who follows the tech industry. The only reason they didn't sink Intel is the unprecedented demand surge that was going on at the time. If Intel's 14 nm had to compete with Zen 2 and the first year of Zen 3 solely on merit, Intel would've been toast.

> I was just cranky because I couldn't respond to your last quote before the thread got closed. But you'll have that.

Ever the risk with those types of threads. Well, you can always PM me if you still want to answer any of my points. I get that your responses won't be seen by the 3 or 4 other people still watching that thread, but oh well...

> You're missing the point. Intel's goal was to build a single CPU that could beat Ryzen on lightly-threaded and heavily-threaded benchmarks.

That's my point: given their current core design base, they can't pull that off.

> Also, we don't know how well that E-core CPU would scale. Puget could be assuming linear scaling, which is too optimistic. I've played around with the core-scaling numbers from Alder Lake, and it has some real scaling problems for all-core workloads.
>
> And finally, that's only one benchmark. The whole reason SPEC2017 includes like 22 different tests is because they behave differently.

They scale just fine for MT workloads, suck for ST workloads, and are decent for power consumption / heat output.

> That's my point: given their current core design base, they can't pull that off.

No, they already did it. First with Alder Lake, and again with Raptor Lake.

> 13900K wins, but not by a huge margin, and with HUGE caveats like Heat Generation / Power Consumption as major issues.

The heat/power caveats are partly due to Intel 7 being worse than TSMC N5. Nothing the CPU designers can really do about that. But the other part is that they designed the critical paths to be short enough that you can really clock those suckers up. Doing so uses a ton of power, but reliably returns performance increases. This is good for lightly-threaded workloads, especially if you don't care much about efficiency.
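The critical-path point can be illustrated with the basic timing constraint of a synchronous design: it can't be clocked faster than the inverse of its slowest register-to-register path, plus setup time and clock skew. A minimal sketch; the path delays below are hypothetical numbers chosen only to show the effect, not measurements of any Intel core.

```python
"""Why shorter critical paths allow higher clocks: the slowest path sets the cycle time."""
from typing import Sequence


def max_clock_ghz(path_delays_ps: Sequence[float], setup_and_skew_ps: float = 0.0) -> float:
    """Highest clock (GHz) at which the slowest path, plus timing margin, fits in one cycle."""
    cycle_ps = max(path_delays_ps) + setup_and_skew_ps
    return 1000.0 / cycle_ps  # 1000 ps per ns, and 1 / ns is GHz


# Hypothetical register-to-register path delays, in picoseconds.
long_paths = [150.0, 190.0, 240.0]   # slowest path: 240 ps
short_paths = [150.0, 170.0, 180.0]  # slowest path: 180 ps

print(f"long paths:  {max_clock_ghz(long_paths):.2f} GHz")
print(f"short paths: {max_clock_ghz(short_paths):.2f} GHz")
```

With these made-up delays the ceiling moves from about 4.17 GHz to about 5.56 GHz. Actually reaching that ceiling in silicon still takes enough voltage, which is where the extra power goes.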

> They scale just fine for MT workloads,

According to whom and what data?

> No, they already did it. First with Alder Lake, and again with Raptor Lake.

I don't think the juice was worth the squeeze.

> The heat/power caveats are partly due to Intel 7 being worse than TSMC N5. Nothing the CPU designers can really do about that. But the other part is that they designed the critical paths to be short enough that you can really clock those suckers up. Doing so uses a ton of power, but reliably returns performance increases. This is good for lightly-threaded workloads, especially if you don't care much about efficiency.

But that would also apply to a 12P2T configuration.

With 40 E-cores, you would naturally scale back frequency due to the volume of cores, that's true with any CPU that goes higher in core counts.According to whom and what data?

The best data I have on this is from Andandtech, in the good old days when Ian and Andre still worked there. Sadly most of their more interesting investigations were omitted from the Raptor Lake review, so we don't know how much their findings still apply.

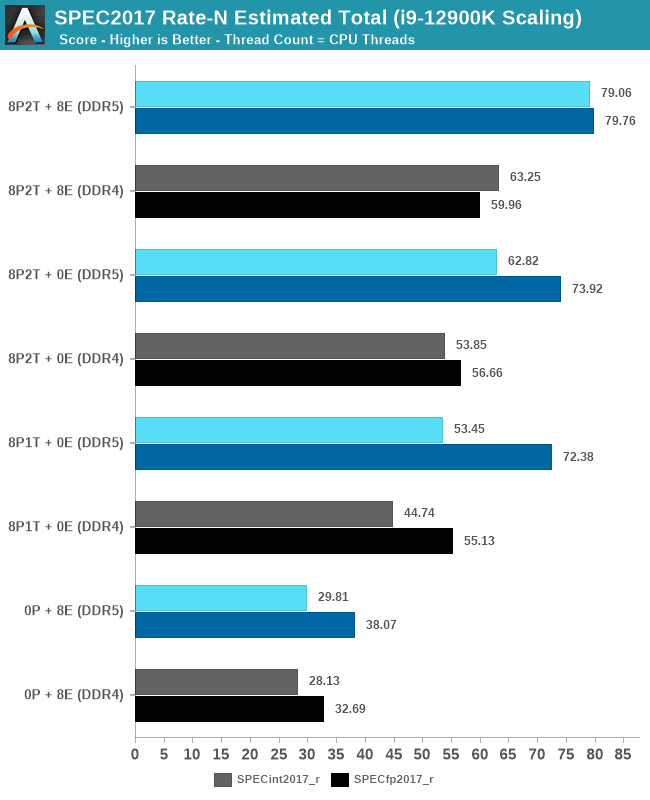

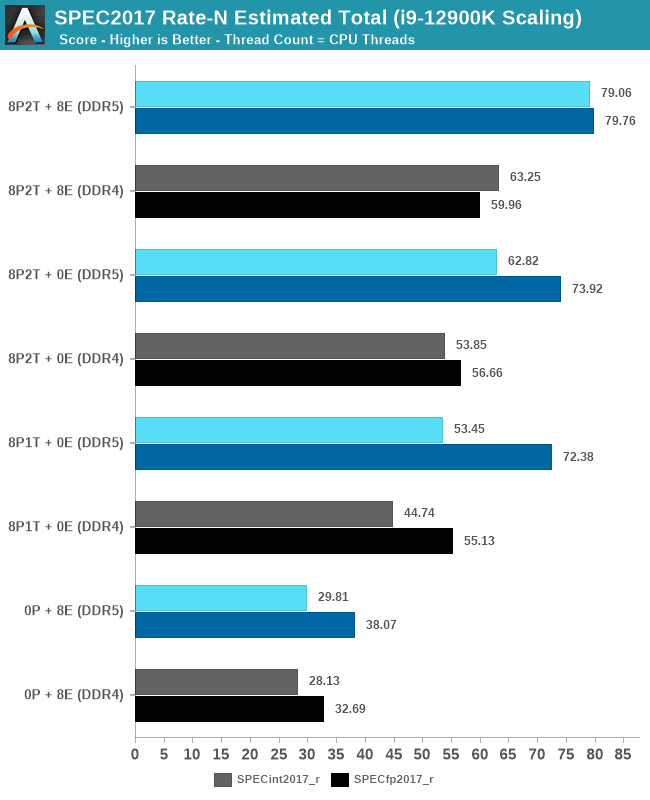

Let's start with this, because it tells a fairly straight-forward and stark tale about multi-core scaling in Alder Lake:

First, let's focus on just the DDR5 scores. If you subtract the "8P2T + 0E" score from "8P2T + 8E", you get (16.24, 5.84), which is only (54.5%, 15.3%) of the "0P + 8E" score and (3.09, 0.76) times as fast as a single E-core (see page 7 of the same article). If we look at just how the E-cores scale, by themselves, the "0P + 8E" case produces scores (5.68, 4.97) times as fast as a single E-core. So, it looks like the CPU (cache subsystem?, memory?) is topping out near 24 threads. So, this idea that you could get good scaling by dropping a 40 E-core CPU in a LGA 1700 socket isn't supported by that data. I'm not claiming that data is conclusive, because some of the benchmarks might have intrinsic limits on scalability, and then there are power and thermal limits.

Also, page 4 of that same article shows that you can use 48 W running POV-Ray on just the 8 E-cores. If you scaled that up to 40, without dialing back the per-core clocks, you'd hit 240 W. So, you'd have to scale back the frequencies a lot, and that would ding performance. This also tells us that 40 cores is about double what you really want to put in a LGA 1700 socket. I think we could say a 24 E-core configuration might work alright.

Finally, page 10 contains several single- & multi- threaded benchmarks of the P-cores, E-cores, and a Skylake i7-6700K. At the end, the authors conclude:

"Having a full eight E-cores compared to Skylake's 4C/8T arrangement helps in a lot of scenarios that are compute limited. When we move to more memory limited environments, or with cross-talk, then the E-cores are a bit more limited due to the cache structure and the long core-to-core latencies. Even with DDR5 in tow, the E-cores can be marginal to the Skylake"

Which, again, suggests there are SoC-level issues that would impact scaling to 40 E-cores. How well you could address them in a dual-channel DDR5 socket is a cause for some doubt.
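The Alder Lake scaling arithmetic above can be reproduced in a few lines. This is a back-of-envelope sketch that uses only the derived SPEC2017 (int, fp) ratios and the 48 W POV-Ray figure stated in the analysis, not the raw chart scores. "Parallel efficiency" here simply means achieved speedup divided by core count, and the power function assumes unchanged per-core clocks with purely linear scaling, which is exactly the naive case the analysis warns against.

```python
"""Back-of-envelope check of the Alder Lake E-core scaling figures (SPEC2017 int, fp; DDR5)."""

# Derived ratios stated in the analysis, all in units of one E-core's score, as (int, fp).
E8_ALONE = (5.68, 4.97)   # "0P + 8E" vs. a single E-core
E8_ADDED = (3.09, 0.76)   # gain from adding 8 E-cores to "8P2T"
E8_POVRAY_WATTS = 48.0    # POV-Ray power on the 8 E-cores alone


def efficiency(speedup: float, cores: int = 8) -> float:
    """Parallel efficiency: achieved speedup divided by ideal (linear) speedup."""
    return speedup / cores


def naive_power(cores: int, watts_for_8: float = E8_POVRAY_WATTS) -> float:
    """Power if every extra E-core ran at the same clocks (linear extrapolation)."""
    return watts_for_8 * cores / 8


for label, pair in (("0P + 8E", E8_ALONE), ("8 E-cores added to 8P2T", E8_ADDED)):
    int_eff, fp_eff = (efficiency(s) for s in pair)
    print(f"{label}: int {int_eff:.1%}, fp {fp_eff:.1%} of linear scaling")

print(f"40 E-cores at unchanged clocks: {naive_power(40):.0f} W")
```

With these inputs, the eight E-cores on their own reach about 71% (int) and 62% (fp) of linear scaling, while the same eight E-cores added alongside the P-cores contribute only about 39% and 9.5%, which is the saturation the analysis describes.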

> I don't think the juice was worth the squeeze.
>
> Their margin of victory isn't all that great compared to what Intel wanted.

Even if it's by 1%, getting the win gets you the crown, and that gets you more sales.

> But that would also apply to a 12P2T configuration.
>
> You would be able to distribute the power to 12 cores and not push each P-core past its sweet spot so much, unless you were OCing.

Heh, the all-core boost is already well past the efficiency sweet spot of the P-cores, which is why the chip gets so darned hot! It would be interesting to know how much they'd have to lower the all-core base & boost speeds to accommodate 10 or 12 P-cores. Regardless, we know 8 E-cores are faster than 2 P-cores, so it wouldn't change the math justifying the hybrid architecture.

> With 40 E-cores, you would naturally scale back frequency due to the number of cores; that's true of any CPU that goes higher in core count.
>
> That would better help distribute the power amongst all cores and make better use of the existing power budget of the socket.
>
> Any issues with core-to-core latencies are something that Intel can fix in newer iterations.
>
> They know how great direct links are between quad-core clusters; they only need to look at the older Zen architecture with 4-core CCXs to see how low latency can get with direct core-to-core communication links.

I'm not arguing against E-cores or having lots of them. I'm just saying that a more fundamental reworking of the macro-architecture is needed to scale them up. Separately, I expect you're going to run out of memory bandwidth as you get much above 24 E-cores or so. I think running 32 threads in a dual-channel socket is already pretty nuts, which is reinforced when you look at the performance differences of DDR4 vs. DDR5 for heavily-threaded workloads. If you put 40 E-cores in an LGA 1700 socket, not only will you have to clock them a lot lower, but they're also going to spend a lot of time blocking on memory accesses.

> Even if it's by 1%, getting the win gets you the crown, and that gets you more sales.
>
> The E-core strategy is even more impressive in Raptor Lake, given that Intel made it on the same node and microarchitecture, yet had to compete with a new AMD microarchitecture on a new node.

Eking past Ryzen 7000 by throwing power at the problem & generating a boatload of heat isn't what I call impressive engineering or problem solving.

> Heh, the all-core boost is already well past the efficiency sweet spot of the P-cores, which is why the chip gets so darned hot! It would be interesting to know how much they'd have to lower the all-core base & boost speeds to accommodate 10 or 12 P-cores. Regardless, we know 8 E-cores are faster than 2 P-cores, so it wouldn't change the math justifying the hybrid architecture.

It's only faster for massively MT workloads, which is why I want to shove them together into a dedicated CPU.

> However, you touch on the related issue of P-core scaling, which is another interesting question. We can't answer that directly, but we can at least compare it to how well the E-cores scale. Using the same data as my previous post, the SPEC 2017 scores for "8P1T + 0E" are (6.57, 5.11) times as fast as a single-threaded P-core (int, fp). Again, the corresponding numbers for the E-cores were (5.68, 4.97). So, the E-cores already scale worse than the P-cores at just 8 cores. Scaling will only get worse from there.

E-cores were never designed or optimized for ST workloads.
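The P-core vs. E-core comparison above is easier to read as per-core efficiency. A minimal sketch using only the (int, fp) speedups quoted in the post; it says nothing about absolute performance, only how close each 8-core group gets to linear scaling.

```python
"""Compare 8-core scaling of P-cores and E-cores from the quoted SPEC2017 speedups."""
from typing import Dict, Tuple

CORES = 8
# Speedup of 8 cores over 1 core of the same type, as (int, fp).
SPEEDUPS: Dict[str, Tuple[float, float]] = {
    "P-cores (8P1T + 0E)": (6.57, 5.11),
    "E-cores (0P + 8E)": (5.68, 4.97),
}


def per_core_efficiency(pair: Tuple[float, float], cores: int = CORES) -> Tuple[float, float]:
    """Return (int, fp) parallel efficiency: speedup divided by core count."""
    return (pair[0] / cores, pair[1] / cores)


results = {name: per_core_efficiency(pair) for name, pair in SPEEDUPS.items()}
for name, (int_eff, fp_eff) in results.items():
    print(f"{name}: int {int_eff:.1%}, fp {fp_eff:.1%}")
```

That works out to about 82% vs. 71% on the integer suite and 64% vs. 62% on floating point, so most of the gap shows up in int.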

> I'm not arguing against E-cores or having lots of them. I'm just saying that a more fundamental reworking of the macro-architecture is needed to scale them up. Separately, I expect you're going to run out of memory bandwidth as you get much above 24 E-cores or so. I think running 32 threads in a dual-channel socket is already pretty nuts, which is reinforced when you look at the performance differences of DDR4 vs. DDR5 for heavily-threaded workloads. If you put 40 E-cores in an LGA 1700 socket, not only will you have to clock them a lot lower, but they're also going to spend a lot of time blocking on memory accesses.
>
> You know there's a reason why Threadripper and Intel HEDT have more memory channels, right? You can't simply scale up cores without thinking about memory bandwidth.

Or you can have an L4 cache that would feed lots of bandwidth. This would be a perfect time to attach 1 or 2 packages of HBM as L4 cache, to compensate for not having enough memory bandwidth from dual DDR5 memory channels.
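The memory-bandwidth argument can be put in rough numbers. Theoretical peak DRAM bandwidth is channels × bytes per transfer × transfer rate. This sketch counts each DIMM channel as 64 bits wide (a DDR5 DIMM's two 32-bit subchannels together), so dual-channel is the 128-bit interface mentioned in the thread. The DDR5-5600 and quad-channel DDR4-3200 configurations are assumptions picked for illustration, and sustained bandwidth in practice is lower than these peaks.

```python
"""Theoretical peak DRAM bandwidth per core for the configurations being debated."""


def peak_bandwidth_gbs(channels: int, mega_transfers: int, bits_per_channel: int = 64) -> float:
    """Peak GB/s = channels * bytes per transfer * million transfers per second / 1000."""
    return channels * (bits_per_channel // 8) * mega_transfers / 1000


# (channels, MT/s, cores); memory speeds are assumptions chosen for illustration.
CONFIGS = {
    "LGA 1700, 2ch DDR5-5600, 24 E-cores": (2, 5600, 24),
    "LGA 1700, 2ch DDR5-5600, 40 E-cores": (2, 5600, 40),
    "Threadripper, 4ch DDR4-3200, 32 cores": (4, 3200, 32),
}

for name, (channels, speed, cores) in CONFIGS.items():
    total = peak_bandwidth_gbs(channels, speed)
    print(f"{name}: {total:.1f} GB/s total, {total / cores:.2f} GB/s per core")
```

That gives roughly 3.7 GB/s per core for 24 E-cores and 2.2 GB/s per core for 40, versus 3.2 GB/s per core for a quad-channel 32-core Threadripper, before even considering latency.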

> Eking past Ryzen 7000 by throwing power at the problem & generating a boatload of heat isn't what I call impressive engineering or problem solving.

That's because you're thinking about these cores only from a desktop perspective. What Intel and AMD both do is make one core microarchitecture that has to scale from laptops to gaming desktops and servers. For the sake of cost control, and to fit the most cores per server CPU, they have an incentive to keep the cores on the smaller end of the spectrum. Then, to deliver good gaming performance, they add tight timing constraints so you can clock them really high.

> It's classic American big-engine thinking: more HP at the cost of efficiency.
>
> It's not efficient engine design like what the Japanese & Europeans do with their engines.

Everybody fawns over how Apple's cores have amazingly high IPC, but that's because they're used in relatively lower core-count products, where energy efficiency is a priority. Furthermore, Apple sells products rather than bare chips. So, Apple can afford to make their cores bigger and more expensive, in order to maximize efficiency.

> It's only faster for massively MT workloads, which is why I want to shove them together into a dedicated CPU.

If you look at the laptop CPUs, they cut P-cores a lot faster than they cut E-cores as you go from the high-end to lower-end models. And Meteor Lake wants to cut the number of P-cores down to 6. So, that's telling us Intel views the E-cores as relevant for more moderately-threaded workloads, also.

> E-cores were never designed or optimized for ST workloads. They were always designed for MT workloads in massive quantity.

Not true. They come from a long lineage of cores that were sold in CPUs with as few as 2 cores. These days, I think most Jasper Lake models are quad-core.

> Or you can have an L4 cache that would feed lots of bandwidth. This would be a perfect time to attach 1 or 2 packages of HBM as L4 cache, to compensate for not having enough memory bandwidth from dual DDR5 memory channels.

Adding SRAM cache gets expensive, to the point where you might as well use a socket with more memory channels, which is what I'm saying.

> That's because you're thinking about these cores only from a desktop perspective. What Intel and AMD both do is make one core microarchitecture that has to scale from laptops to gaming desktops and servers. For the sake of cost control, and to fit the most cores per server CPU, they have an incentive to keep the cores on the smaller end of the spectrum. Then, to deliver good gaming performance, they add tight timing constraints so you can clock them really high.

That's all well & good that they're both using 1 or 2 microarchitectures, but the way Intel is using them on the desktop side is what I take issue with.

> Everybody fawns over how Apple's cores have amazingly high IPC, but that's because they're used in relatively lower core-count products, where energy efficiency is a priority. Furthermore, Apple sells products rather than bare chips. So, Apple can afford to make their cores bigger and more expensive, in order to maximize efficiency.
>
> Unfortunately, if you want more efficiency without sacrificing performance, it's going to cost more. No way around that.
>
> So, the irony of your analogy is that it's precisely because Intel and AMD's cores aren't bigger that they rely so heavily on clock speed for performance. And we all know how power scales with clock speed (hint: not well).

It's not like Intel & AMD's primary core architectures haven't gotten bigger over time in terms of transistor count; it's just masked really well by process node shrinks.
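A rough model of why power scales "not well" with clock speed: dynamic CMOS power is approximately proportional to C·V²·f, and near the top of the voltage/frequency curve the voltage has to rise along with frequency, so a common rule of thumb is that power grows roughly with the cube of clock speed. The sketch below assumes that cubic exponent (an assumption; real chips vary, and leakage is ignored) and applies it to the naive 240 W figure for 40 E-cores from the thread. The 125 W budget is a hypothetical value chosen only for illustration.

```python
"""Rule-of-thumb model of power vs. clock speed, applied to a 40 E-core part."""


def relative_power(clock_ratio: float, exponent: float = 3.0) -> float:
    """Power relative to baseline after scaling clocks by clock_ratio.

    Dynamic power ~ C * V**2 * f. Assuming voltage rises roughly in step with
    frequency, power grows ~ f**3 (exponent=3); exponent=1 means fixed voltage.
    Leakage power is ignored.
    """
    return clock_ratio ** exponent


def clock_ratio_for_budget(baseline_w: float, budget_w: float, exponent: float = 3.0) -> float:
    """Clock multiplier that brings baseline_w down to budget_w under the same power law."""
    return (budget_w / baseline_w) ** (1.0 / exponent)


naive_40e_w = 48.0 * 40 / 8   # 8 E-cores at 48 W, scaled linearly to 40 cores = 240 W
budget_w = 125.0              # hypothetical package power budget, for illustration only

ratio = clock_ratio_for_budget(naive_40e_w, budget_w)
print(f"+10% clock -> {relative_power(1.10):.2f}x power")
print(f"40 E-cores in {budget_w:.0f} W -> about {ratio:.0%} of the 8-core clocks")
```

Under that assumption, a 10% clock bump costs about 33% more power, and fitting the naive 240 W into 125 W would need clocks roughly 20% lower on all 40 cores, before any memory-bandwidth limits come into play.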

> If you look at the laptop CPUs, they cut P-cores a lot faster than they cut E-cores as you go from the high-end to lower-end models. And Meteor Lake wants to cut the number of P-cores down to 6. So, that's telling us Intel views the E-cores as relevant for more moderately-threaded workloads, also.
>
> Even on the desktop i9, Intel says it's faster to put the 9th thread on an E-core than to double up any of the P-cores.

Intel needs to cut P-cores on mobile for power/thermal/battery reasons.
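The thread-placement order described above (one thread per P-core first, then the E-cores, and only then the P-cores' second SMT threads) can be modeled in a few lines. This is a simplified, hypothetical model for illustration, not Intel Thread Director or any operating system's actual scheduler logic; the 8P + 16E layout matches an i9-13900K.

```python
"""Simplified model of hybrid thread placement: P-cores, then E-cores, then SMT siblings."""
from typing import List


def placement_order(p_cores: int, e_cores: int, smt: bool = True) -> List[str]:
    """Return logical CPUs in the order new threads would be placed (hypothetical policy)."""
    order = [f"P{i}" for i in range(p_cores)]           # one thread per P-core first
    order += [f"E{i}" for i in range(e_cores)]          # then one per E-core
    if smt:
        order += [f"P{i}-HT" for i in range(p_cores)]   # P-core sibling threads last
    return order


slots = placement_order(8, 16)   # 8P + 16E, like an i9-13900K
print(slots[8])                   # where the 9th thread goes
print(len(slots))                 # total hardware threads
```

Here the 9th thread lands on `E0`, and the second hardware thread of each P-core is only used once all 24 physical cores are busy, for 32 threads total.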

> Not true. They come from a long lineage of cores that were sold in CPUs with as few as 2 cores. These days, I think most Jasper Lake models are quad-core.

Crestmont, the successor to Gracemont, might just perform better all around; we'll see. But I'm looking forward more to what they do with the E-cores than to what Intel does with the P-cores. There's more room for performance growth in the quad-core clusters of E-cores.

> Adding SRAM cache gets expensive, to the point where you might as well use a socket with more memory channels, which is what I'm saying.

Yet AMD manages to add X3D L3 cache for a reasonable price; there's no reason why Intel can't put SRAM on its own package and attach it as an L4 chiplet.

> HBM isn't good as a normal cache, because it's actually rather high-latency. To use it in another way, a lot of software changes are needed. Also, you can't add much capacity via HBM without using a bigger package. And more cores will demand more memory.

Yet Sapphire Rapids will have HBM as an option.

> Do you realize that AMD added more memory channels to Threadripper for reasons? You can't expect to beat a Threadripper without similar memory bandwidth. That's pretty much all there is to it.

I know Threadripper has reasons for more memory channels.

> It's not like Intel & AMD's primary core architectures haven't gotten bigger over time in terms of transistor count; it's just masked really well by process node shrinks.

Yes, obviously. They do get wider and deeper as transistor budgets increase, but not as aggressively as Apple's.

> On the desktop side, I want to see pure "P-core" & "E-core" CPUs as separate products.
>
> It's finally doable thanks to chiplets.

Meteor Lake, and I think also Arrow Lake, will still locate all of the CPU cores on a single tile. Maybe some future generation will separate them onto their own chiplets, but that carries a latency penalty.

> Crestmont, the successor to Gracemont, might just perform better all around; we'll see.

For sure. Otherwise, why do it?

> Yet AMD manages to add X3D L3 cache for a reasonable price; there's no reason why Intel can't put SRAM on its own package and attach it as an L4 chiplet.
>
> That seems far less complicated than X3D and can offer real benefits.

I'm saying it's still not enough cache to truly make up for the 128-bit memory interface.

> Yet Sapphire Rapids will have HBM as an option.

And I'm very interested to see how they're going to use it. I think the big win (requiring a big investment) will be to use it as a faster tier of DRAM, rather than a transparent cache.

> I know Threadripper has reasons for more memory channels.
>
> But we're in the DDR5 era and have massively OCed DDR5 memory DIMMs.

Memory speeds increase, but so do CPU speeds and core counts. The extra headroom that DDR5 adds is already consumed by the Raptor Lake i9, as you can easily see in the DDR4 vs. DDR5 benchmarks.

> And the E-core CPUs only need to beat "Older Threadrippers that I listed above", not current-gen ones.
>
> It needs to offer "Older Threadripper"-like performance for a fraction of the price.

Somebody is going to make a new CPU to beat a 3-year-old one? And not long before a successor 2 generations newer is about to be introduced??