Intel tipped its hand that PCIe 4.0 Optane SSDs are ready for sampling

Intel has PCIe 4.0 Optane SSDs Ready, But Nothing to Plug Them In To


I get companies can try and fudge the reasons for their actions, but if it ends up clear Intel delayed an SSD or turned off features they'd built because they worked too well with others' hardware, isn't that the sort of thing that gets you sued for anticompetitive behavior?

Maybe they are releasing it because they already tested it on Ryzen and it's still faster on Intel with PCIe 3.0 than it is on Ryzen with PCIe 4.0... The interface alone doesn't make things go any faster; you need a CPU that has enough cycles to get enough info off the disk.

This means no PCIe 5.0 in the near future... did Intel cancel it? I thought it was coming in 2020?


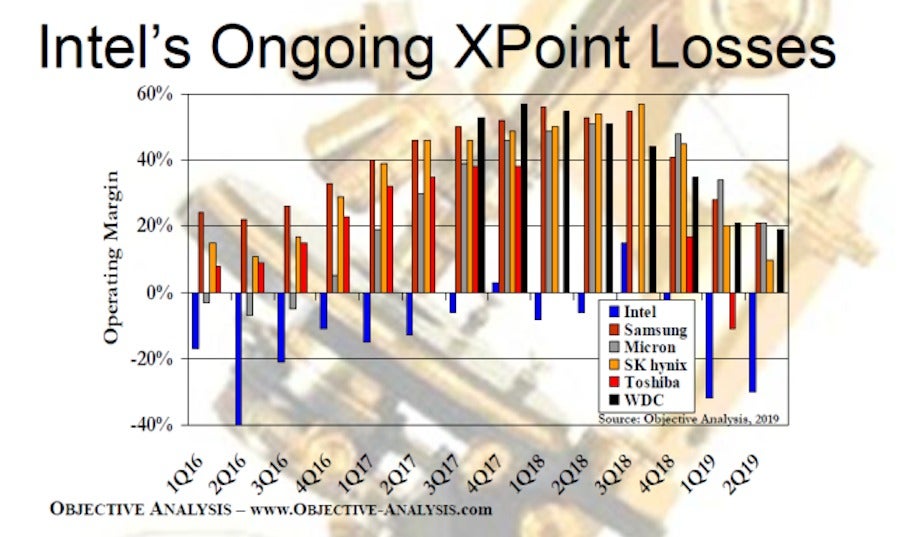

[...] main market for Optane is Optane RAM DIMMs, and Intel made the same amount of money from that in the last quarter as AMD made from all desktop CPU and GPU sales put together...

Source?


IIRC, an Intel server CPU with PCIe 5.0 is on their roadmap for 2021. They need it for CXL, which is how they plan on communicating with their datacenter GPUs and AI accelerators. So, I'm pretty sure it'll actually happen next year.

However, PCIe 5.0 won't be coming to desktops in the foreseeable future. It's more expensive to implement, burns more power, and has tighter limitations on things like trace length. Beyond that, there's no need, as even PCIe 4.0 is currently borderline overkill for desktops.

There is a huge need for PCIe 5.0: we will not need 16 lanes for the GPU anymore, and this will open up more lanes for other cards...

Also, I can see Thunderbolt 5 with the potential of 4 lanes of PCIe 5.0: an external GPU with the same bandwidth as 16 lanes of 3.0 today...

Also, docking stations for notebooks... will be something huge. I know it will draw a lot of power, but it can be limited to wall power only, not batteries, when docked.

In 3Q19, Intel already had 1.3 billion in revenue in the Non-Volatile Memory Solutions Group.

But that should include their entire NAND-based SSD business, as well. I have a few NAND-based Intel SSDs, but no Optane models.

I follow this argument, and it's one of the more compelling ones for yet faster speeds. But, you need to think through how such a product would be introduced to the public.

For instance, let's say AMD decided to make their PCIe 4.0 GPU slots x8. ...except, what if you want to put a PCIe 3.0 card in that slot? Even if the slot is mechanically x16, you don't want to give up half the lanes for PCIe 3.0 cards. So, you have to go ahead and make it a full x16 slot, anyway.

Looking at the flip side, if they made the RX 5700 XT a PCIe 4.0 x8 card and someone plugged it into an Intel board, they'd be upset about getting only 3.0 x8 performance.
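The x8 scenarios above come down to how a PCIe link trains: card and slot settle on the highest generation and widest width they both support. Here is a minimal Python sketch of that rule, assuming idealized link training (no downtraining for signal-integrity reasons) and counting only 128b/130b encoding overhead; the helper names are hypothetical.

```python
# Per-lane signaling rate in GT/s. PCIe 3.0, 4.0 and 5.0 all use 128b/130b encoding.
RATE_GT = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130


def negotiated_link(card_gen, card_lanes, slot_gen, slot_lanes):
    """Simplified link training: both ends fall back to what they have in common."""
    return min(card_gen, slot_gen), min(card_lanes, slot_lanes)


def gbytes_per_s(gen, lanes):
    """Approximate one-direction bandwidth in GB/s, ignoring protocol overhead."""
    return RATE_GT[gen] * ENCODING * lanes / 8  # bits -> bytes


if __name__ == "__main__":
    cases = {
        "hypothetical PCIe 4.0 x8 card in a PCIe 3.0 x16 slot": (4, 8, 3, 16),
        "PCIe 3.0 x16 card in a hypothetical PCIe 4.0 x8 slot": (3, 16, 4, 8),
        "PCIe 3.0 x16 card in a PCIe 3.0 x16 slot": (3, 16, 3, 16),
    }
    for label, args in cases.items():
        gen, lanes = negotiated_link(*args)
        print(f"{label}: PCIe {gen}.0 x{lanes}, ~{gbytes_per_s(gen, lanes):.2f} GB/s")
```

Both mismatched pairings land at PCIe 3.0 x8, roughly half of what a plain 3.0 x16 pairing gets, which is the "burn" for anyone without a matched GPU and motherboard.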

So, the catch in what you're proposing is that there's no graceful way to transition to narrower GPU slots that won't burn people who don't have a matched GPU + mobo. If pairing an old GPU with a new mobo, or a new GPU with an old mobo, you still want x16. So, that means you can't get lane reductions in either the card or the mobo. At least, not when first introduced, which is when the technology would have the greatest impact on price, power, and other limitations (e.g. board layout). There's just no easy transition path to what you want, even if the downsides could eventually be solved (spoiler: I think they can't).

That ain't gonna happen. You should read more about the limitations of Thunderbolt 3, which will require a very short, very expensive cable to reach its maximum potential. And that's still PCIe 3.0.

This is actually conceivable. You could put the connector right next to the CPU. There's not much concern about legacy, because docking stations tend to be proprietary, anyhow (except for USB 3 or Thunderbolt-based ones, but let's leave those aside). The biggest issue would be making the connector robust enough to deal with frequent plugging/unplugging, dirt, etc. Those might be the deal-breakers, in that scenario.

Given how well laptops could "get by" with a slower, narrower link (if not just Dual-Port Thunderbolt 3), I don't know if the demand would be there to justify it.

Maybe it is faster on Ryzen, and Intel will still release it because their main market for Optane is Optane RAM DIMMs, and Intel made the same amount of money from that in the last quarter as AMD made from all desktop CPU and GPU sales put together...

Well, we will have to "move on" one day. We can't be stuck using long 16-lane slots forever just to make people with older cards "happy".

As for the cable-length limitations of TB3, we do have eGPU boxes already, and I don't think that TB5 will be any different. Expensive cables? They still have a market.

And finally, about the notebook docking station: the demand is here, but the technology is not yet. PCIe 5.0 will open it up... and the demand will follow. It will change the desktop replacement forever.

Does this also make sense as a chiplet interconnect for consumer chips?

Yes, I could see it being used as an in-package interconnect. That's a corner case I didn't really want to get into, because it's basically invisible to PC builders and end users.

It's yet another hurdle, though. Since PCIe is not a major bottleneck and PCIe 5 will undoubtedly add cost and have other downsides, I don't see it happening.

For a good example of how server technology doesn't always trickle down to consumers, consider how 10 Gigabit Ethernet has been around for like 15 years and is still a small niche outside of server rooms. Meanwhile, datacenters are already moving beyond 100 Gbps.

Oh, but you want them to be 4x as fast? I think it's not technically possible. I guess 100 Gbps Ethernet shows us that you could reach well beyond TB3-DP's 40 Gbps using fiber optics, but those cables aren't exactly consumer-friendly.
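To put rough numbers on "4x as fast": Thunderbolt 3 tunnels at most a PCIe 3.0 x4 link over its 40 Gbps connection, and each PCIe generation from 3.0 to 5.0 doubles the per-lane rate. A quick Python check of the hypothetical "TB5 with PCIe 5.0 x4" idea, counting only 128b/130b encoding and ignoring Thunderbolt and PCIe protocol overhead (so real throughput would be lower):

```python
ENCODING_128B130B = 128 / 130  # line code used by PCIe 3.0 and later


def pcie_payload_gbps(rate_gt_per_lane: float, lanes: int) -> float:
    """Link rate in Gbps after 128b/130b encoding, before protocol overhead."""
    return rate_gt_per_lane * ENCODING_128B130B * lanes


TB3_LINK_GBPS = 40.0                   # Thunderbolt 3 link rate
pcie3_x4 = pcie_payload_gbps(8.0, 4)   # what TB3 tunnels today, ~31.5 Gbps
pcie5_x4 = pcie_payload_gbps(32.0, 4)  # hypothetical "TB5" tunnel, ~126 Gbps

print(f"PCIe 3.0 x4: {pcie3_x4:.1f} Gbps")
print(f"PCIe 5.0 x4: {pcie5_x4:.1f} Gbps "
      f"({pcie5_x4 / pcie3_x4:.0f}x PCIe 3.0 x4, {pcie5_x4 / TB3_LINK_GBPS:.2f}x TB3's 40 Gbps)")
```

Even before overhead, the cable would have to carry more than three times TB3's link rate, which is where the fiber-optic comparison comes in.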

The thing is that you could have a docking station with a PCIe 3.0 x16 connection, today. To improve docking station connectivity, you don't need PCIe 5. The market will go for whatever's cheapest and most practical. For the foreseeable future, that will exclude PCIe 5.

It is about freeing more lanes for other cards, as I said. If you have 16 lanes of PCIe 5.0, you can use 8 lanes for the GPU as standard and the rest for other cards.

I get that. But there's the market challenge I mentioned in transitioning to narrower GPU slots, and then there are the physical realities of PCIe 5.0 requiring motherboards with more layers, signal retimers, and generally burning more power.
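The lane arithmetic behind the 16-lane proposal can be checked with a short Python sketch. It assumes only that PCIe 3.0, 4.0 and 5.0 share 128b/130b encoding, so bandwidth scales with per-lane rate times width; `lanes_to_match` is a hypothetical helper, and the widths considered are limited to the common x1 through x16.

```python
# Per-lane signaling rate in GT/s. Encoding is the same (128b/130b) for all three,
# so it cancels out when comparing links.
RATE_GT = {3: 8.0, 4: 16.0, 5: 32.0}
STANDARD_WIDTHS = (1, 2, 4, 8, 16)


def lanes_to_match(old_gen: int, old_lanes: int, new_gen: int) -> int:
    """Smallest common width at new_gen with at least the bandwidth of old_gen x old_lanes."""
    needed = RATE_GT[old_gen] * old_lanes
    for width in STANDARD_WIDTHS:
        if RATE_GT[new_gen] * width >= needed:
            return width
    raise ValueError("no standard width is wide enough")


if __name__ == "__main__":
    total_lanes = 16  # PCIe 5.0 lanes in the scenario above
    gpu_lanes = lanes_to_match(4, 16, 5)
    print(f"GPU at 5.0 x{gpu_lanes} matches 4.0 x16; "
          f"{total_lanes - gpu_lanes} lanes left for other cards")
    print(f"5.0 x{lanes_to_match(3, 16, 5)} matches 3.0 x16")
```

On paper, 5.0 x8 matches today's 4.0 x16 and 5.0 x4 matches 3.0 x16. The sketch says nothing about the board-layer, retimer, power, and backward-compatibility costs raised in the reply.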

The cables are not a big issue; we already have PCIe 3.0 x8 and x16 cables. Expensive, yes, but they have a market.

Those are not consumer-friendly, like existing Thunderbolt or USB cables.

But Optane DIMMs don't use PCIe, so what do they have to do with this article?

I think @TerryLaze's point is that Intel will release the PCIe SSDs anyway, because they don't really care about that market; they mainly care about the Optane DIMM market.

I could see it both ways. Intel is clearly threatened by AMD's inroads into the datacenter, and providing PCIe 4.0 Optane drives right now would only serve to strengthen AMD's platform's potential. On the other hand, if the Non-Volatile Memory Solutions Group is sufficiently independent within Intel, then maybe they're motivated just to ship whatever they can sell.

Intel can use Optane as RAM; there is absolutely no reason to use Optane as a disk in a PCIe slot if you can use it as main RAM.

Two possible reasons: capacity and software support.

This product is clearly made to target systems that cannot use Optane as RAM at all.