1. Some people on here have probably never heard of IPC (instructions per clock), clock frequency or power efficiency, and probably think that all quad-cores are the same. They probably think that an i7 7700K is the same as an Intel Core 2 Quad Q6700.

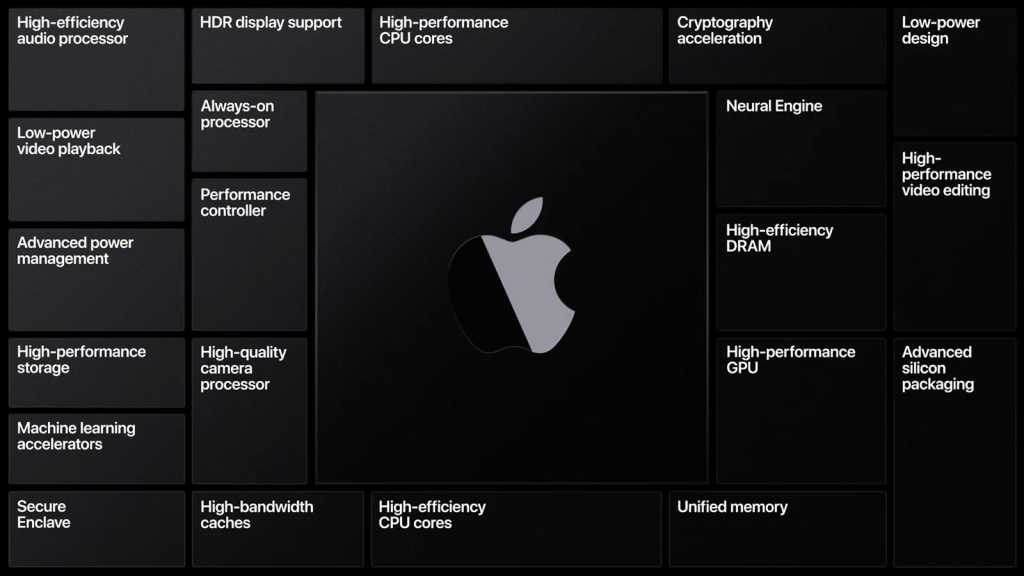

2. This is a quad-core, but across the board it destroys AMD's newest 8-core/8-thread offering (Ryzen 7 4700U) as well as Intel's own 10th-gen 6-core/12-thread 15W CPU (i7 10710U), not just in single-threaded workloads but in multithreaded workloads too. And even against the 8-core/16-thread Ryzen 7 4800U, there are plenty of workloads where Intel wins, and in some it wins big.

3. These CPUs have a nominal TDP of just 15W. Just three years ago, if you wanted a quad-core mobile CPU you had to go for a 45W SKU (e.g. the i7 7700HQ). Compare the i7 7700HQ in Geekbench 5 (ST: 829, MT: 3417) with the i7 1165G7 (ST: 1533, MT: 5769). So you now get about 70% more multithreaded CPU performance (not to mention 85% more single-threaded performance) for a third of the power! And we are also talking about a large laptop versus an ultraportable.

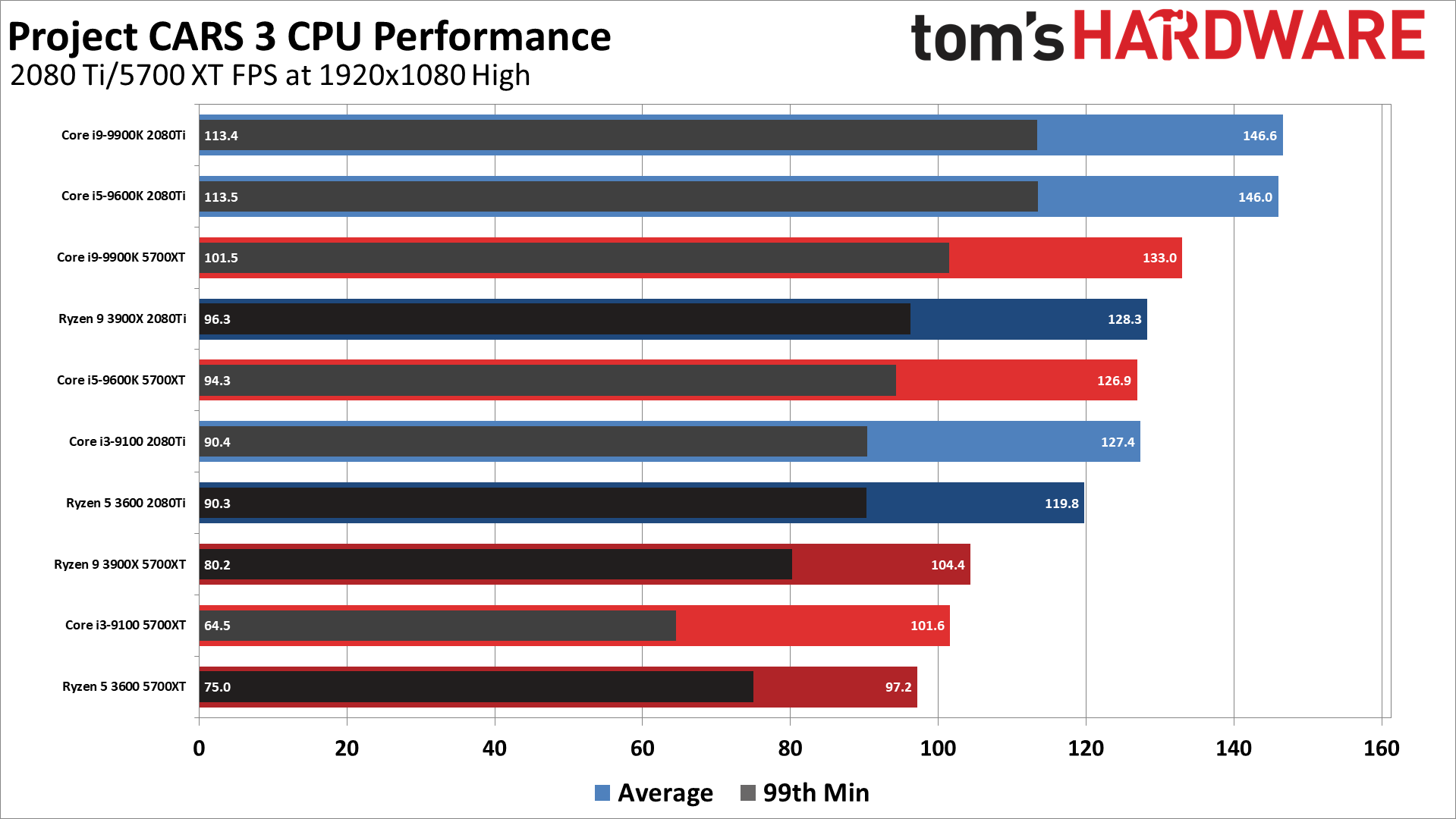

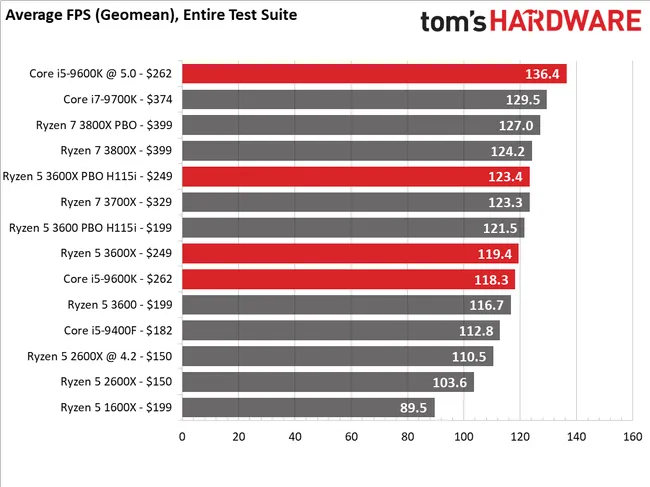
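To make the arithmetic in point 3 explicit, here is a small sketch that recomputes the percentages from the quoted Geekbench 5 scores. It assumes the nominal TDPs used in the post (45W for the 7700HQ, 15W for the 1165G7); real sustained power in a given laptop will differ, so the "score per watt" figure is only a rough proxy.

```python
# Geekbench 5 scores as quoted in point 3 (ST = single-thread, MT = multi-thread).
# TDP values are the nominal figures used in the post (an assumption for this
# sketch); actual sustained power draw depends on the laptop's configuration.
i7_7700hq = {"st": 829, "mt": 3417, "tdp_w": 45}
i7_1165g7 = {"st": 1533, "mt": 5769, "tdp_w": 15}


def pct_gain(new: float, old: float) -> float:
    """Percentage improvement of `new` over `old`."""
    return (new / old - 1) * 100


st_gain = pct_gain(i7_1165g7["st"], i7_7700hq["st"])
mt_gain = pct_gain(i7_1165g7["mt"], i7_7700hq["mt"])
power_ratio = i7_1165g7["tdp_w"] / i7_7700hq["tdp_w"]

# Multi-thread score per watt of nominal TDP: a rough efficiency proxy only.
mt_per_watt_old = i7_7700hq["mt"] / i7_7700hq["tdp_w"]
mt_per_watt_new = i7_1165g7["mt"] / i7_1165g7["tdp_w"]
efficiency_gain = mt_per_watt_new / mt_per_watt_old

print(f"Single-thread gain: {st_gain:.0f}%")
print(f"Multi-thread gain:  {mt_gain:.0f}%")
print(f"Power ratio:        {power_ratio:.2f}")
print(f"MT score per watt:  {efficiency_gain:.1f}x")
```

With these inputs it reports roughly 85% more single-threaded and 69% more multithreaded performance (so "about 70%"), and about 5x the multithreaded score per nominal watt.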

4. Funny that some people bring up gaming. These CPUs pack vastly better integrated graphics (Iris Xe): roughly a 4x improvement over the UHD Graphics of Comet Lake CPUs and 2x over Ice Lake CPUs (which we know were already on par with AMD's APUs).

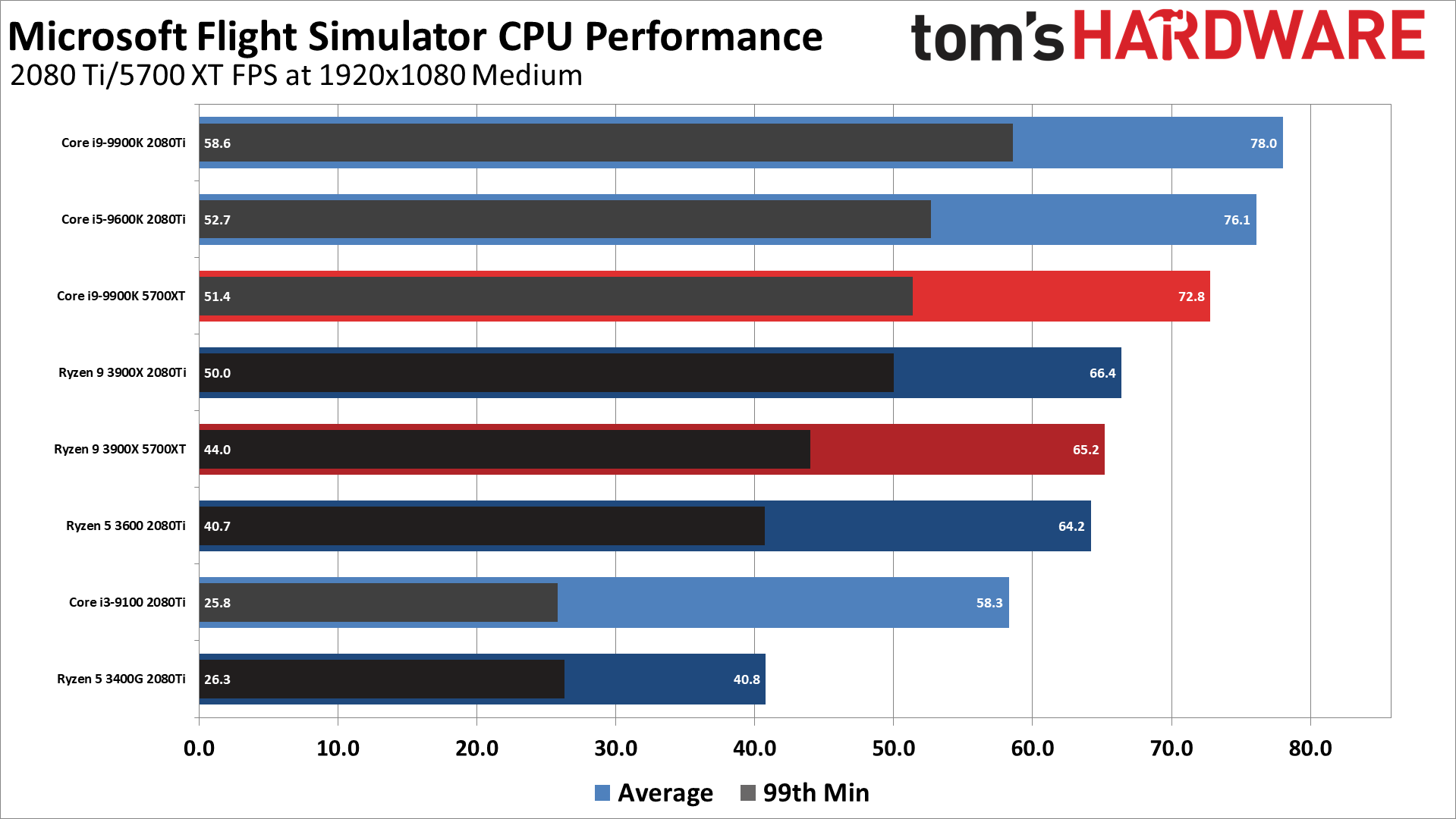

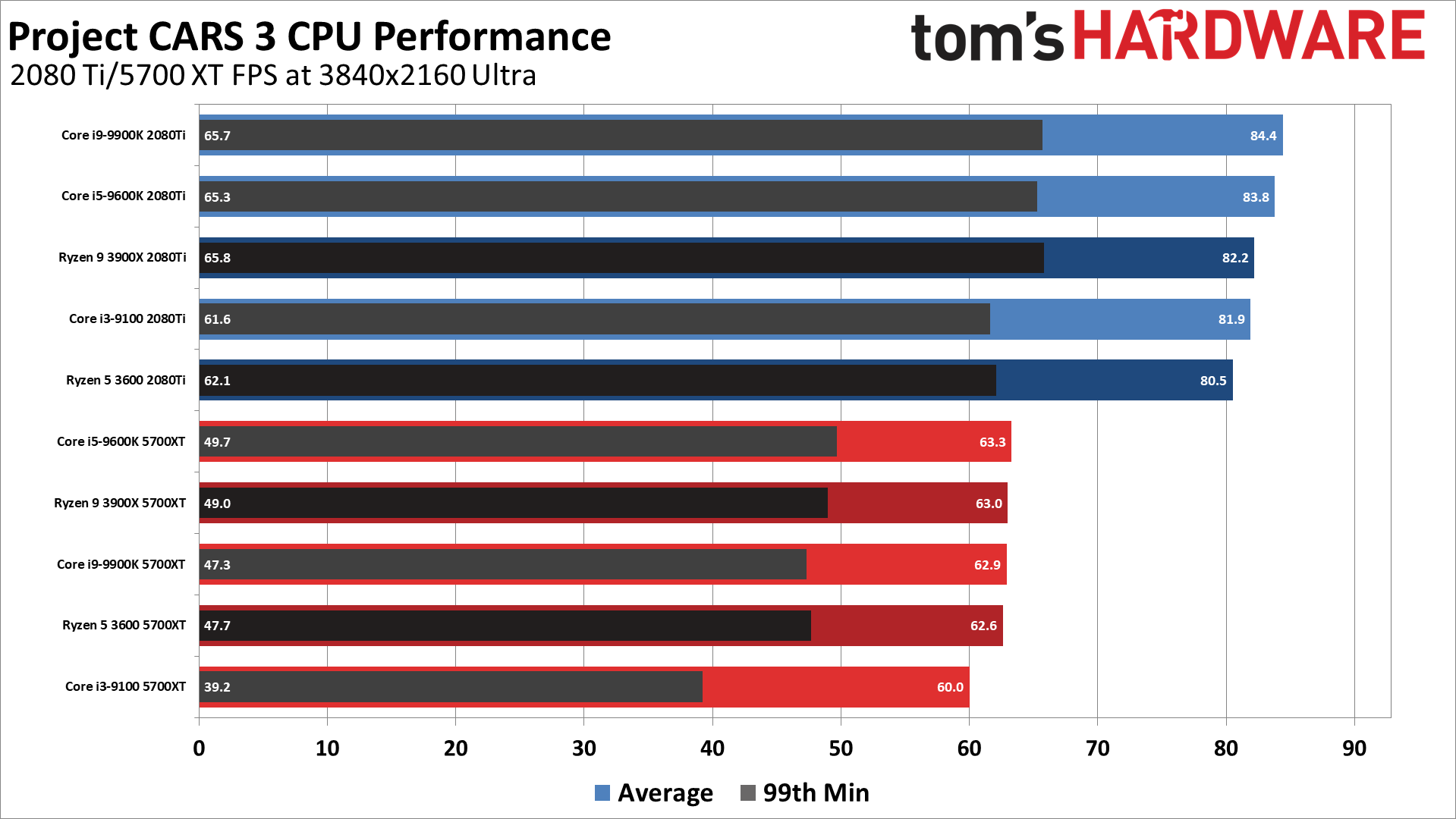

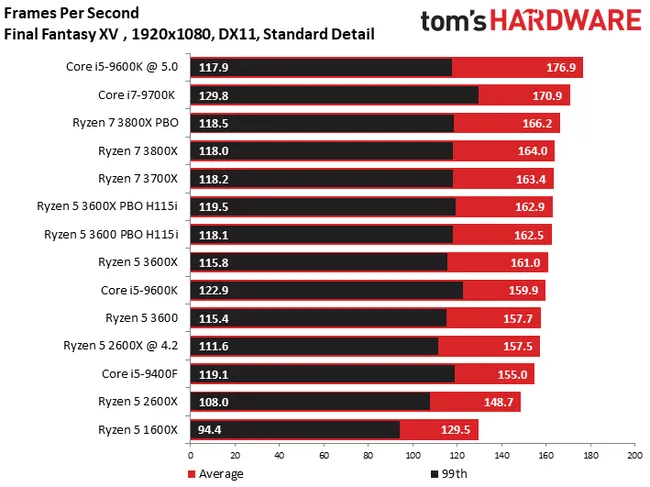

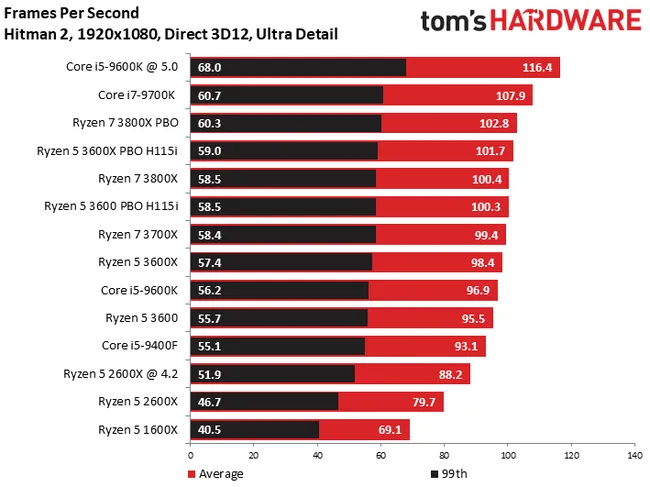

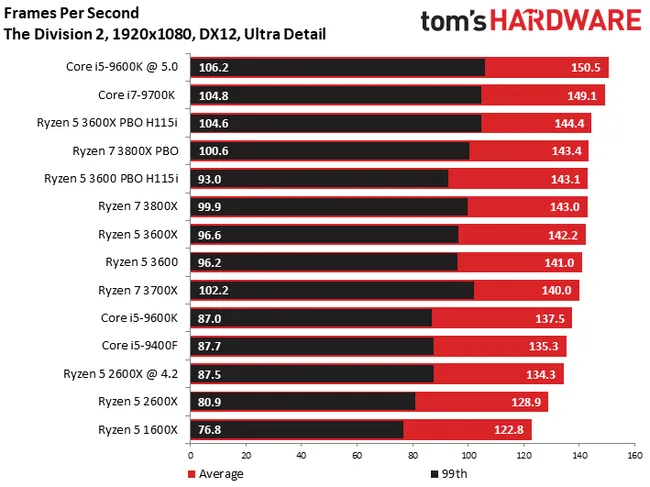

5. These CPUs are primarily meant for (i) thin-and-light ultraportable laptops and/or (ii) laptops without a discrete GPU. They are not meant to be paired with powerful discrete graphics cards. But even if you were to pair them with a discrete GPU in beefier laptops, Intel wins in gaming CPU performance anyway. It wins even with its last-gen quad-core CPUs, let alone these ones. And unless you are going to play games at 720p, you would need a lot more GPU horsepower before such a quad-core CPU bottlenecks the GPU. A mobile RTX 2060 is not such a GPU.

6. Even if you were to pair it with a better GPU, you wouldn't leave much performance on the table, even at 1080p. And of course there is no bottleneck at 4K.

7. Speaking of pairing it with better GPUs, a great thing about Intel mobile CPUs is that they have Thunderbolt. So you can buy a laptop without a discrete GPU and use an eGPU over Thunderbolt only when you want to do heavy gaming. As has been shown, contrary to popular belief, the PCIe 3.0 x4 interface of Thunderbolt only costs about 10% of GPU performance, even with a 2080 Ti.
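The popular belief in point 7 comes from link width: an x4 link carries a quarter of the bandwidth of a desktop x16 slot. The sketch below computes only the theoretical one-direction PCIe 3.0 link bandwidth (8 GT/s per lane, 128b/130b encoding); it ignores any additional Thunderbolt overhead and does not derive the ~10% performance figure, which is a measured result.

```python
# PCIe 3.0 link parameters: 8 GT/s per lane with 128b/130b line encoding.
TRANSFER_RATE_GT_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s for `lanes` lanes.

    Raw link bandwidth only; protocol and Thunderbolt overheads are ignored.
    """
    bits_per_s = TRANSFER_RATE_GT_S * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> GB


x4 = pcie3_bandwidth_gb_s(4)
x16 = pcie3_bandwidth_gb_s(16)

print(f"x4:  {x4:.2f} GB/s")
print(f"x16: {x16:.2f} GB/s")
print(f"x4 / x16 = {x4 / x16:.2f}")
```

So an eGPU gets roughly 3.9 GB/s versus about 15.8 GB/s in a desktop x16 slot, yet in practice that quarter of the bandwidth costs far less than a quarter of the frame rate.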

8. In any case, if high-fps mobile gaming is your focus, you are better off buying a laptop with a powerful discrete GPU and an H-series 45W CPU (the Tiger Lake H-series is expected at CES in January), paired with a suitable high-refresh-rate screen.
