Wait, no, that drop-off and increase in clock speed happen after the render has completed. It even says so beneath the chart:

> For reference, here’s what the frequency functionality looks like when operating under settings with MCE disabled via XMP II and the “No” prompt. This plot takes place over a 23-minute Blender render, so this is a real-world use case of the i9-9900K. Notice that there is a sharp fall-off after about 30 seconds, where average all-core frequency plummets from 4.7GHz to 4.2GHz. Notice that the power consumption remains at almost exactly 95W for the entire test, pegged to 94.9W and relatively flat. This corresponds to the right axis, with frequency on the left. This feels like an RTX flashback, where we’re completely power-limited. The difference is that this is under the specification, despite the CPU clearly being capable of more. You’ll see that the frequency picks up again when the workload ends, leaving us with unchallenged idle frequencies.

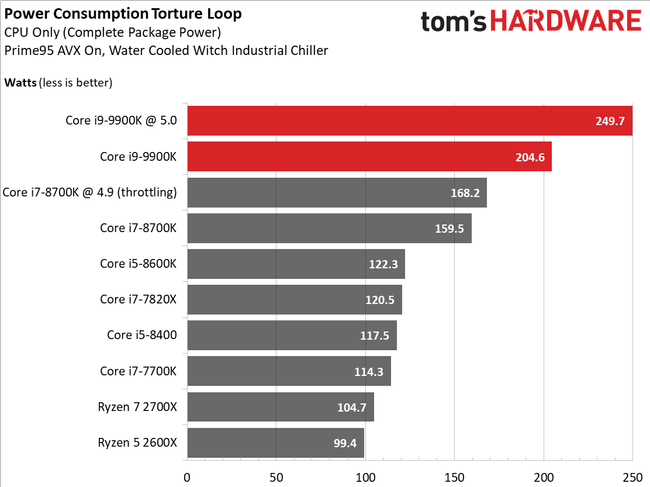

The article itself even states that performance is better because Intel's rules are being violated, and that following Intel's spec is punished with lower performance.

So doesn't this mean that if Intel's spec (which Intel doesn't seem very enthusiastic about enforcing) is followed, their performance, and with it their benchmark results, will suffer?

If their spec is followed, will they still be able to hold on to the so-called (and quite overblown) "gaming performance" crown?

And that's just desktop systems. I would agree that laptops should follow the spec strictly, but, again, do they actually do so on this system? Or is single-threaded work the only thing they can handle well, because keeping the rest of the cores minimally utilized is the only way to take advantage of their high clocks while keeping thermals at sane levels?

EDIT: Also, did you ever wonder why, around the 8th gen or so, Intel suddenly changed its methodology for determining TDP? If they applied the new standard to their older chips (Kaby Lake and earlier), would those chips have lower TDP values than were advertised back in their day? If they applied the same standard to Ryzen, would Ryzen's TDP be lower as well?