AMD didn't have anything to do with developing the 3D stacking technology behind 3D V-Cache. It was developed by TSMC and AMD just used it. What should AMD get credit for?

TSMC's announcement of 3D stacking from 2018:

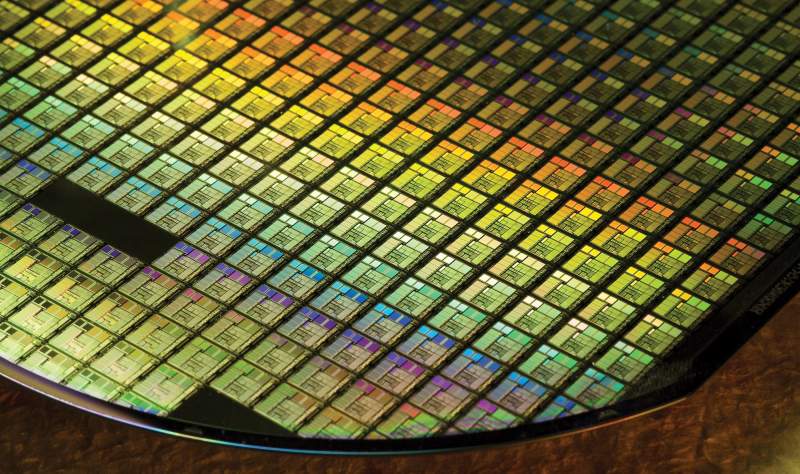

TSMC is announcing that they have a new Wafer-on-Wafer 3D stacking technology. Just don’t expect it to show up on GPUs any time soon.

www.eteknix.com

Any mention of AMD in that? AMD announced 3D V-Cache in 2021.

Actually, AMD does have a part in it. I don't know the details, but AMD collaborated with TSMC to develop its own version or ideas, and it holds patents in this area. AMD has to collaborate with TSMC because the latter has packaging technologies and expertise that AMD lacks.

AMD's work with TSV stacking goes back to 2016, in their collaboration with Samsung and others on HBM. They also have TSMC/3D V-Cache patents from 2019, so they were working on it with TSMC well before they announced the first product in 2021, and I've read somewhere that the TSMC-AMD collaboration goes back even before TSMC's announcement.

AMD's patents don't mean they own the tech or that they invented it, but they solved some issues and came up with some ideas, so you can say it's their own implementation of the tech. They are still working on it: they have announced second-gen 3D V-Cache and also have patents on cooling the stacked cache.

Intel can have TSMC's tech, but they can't have the AMD-specific solutions without licensing them (if they actually need them). As the article said, Intel is developing their own version as well. Maybe it will be different from TSMC's solutions, or maybe similar but with Intel's own touch (implementation).

What I'm saying is that the statement that AMD didn't have anything to do with it is wrong. They did contribute, just not everything.