Underpowering, ideally a combination of undervolting and underclocking, does wonders. AMD GPUs have been great undervolters for several generations now (also one reason why they were so popular during the cryptomining craze), and the same is pretty much true of many Nvidia GPUs as well, as you mentioned, @TheHerald. Manufacturers should be optimizing more for power efficiency, but they are optimizing for performance for the sake of marketing and competition.

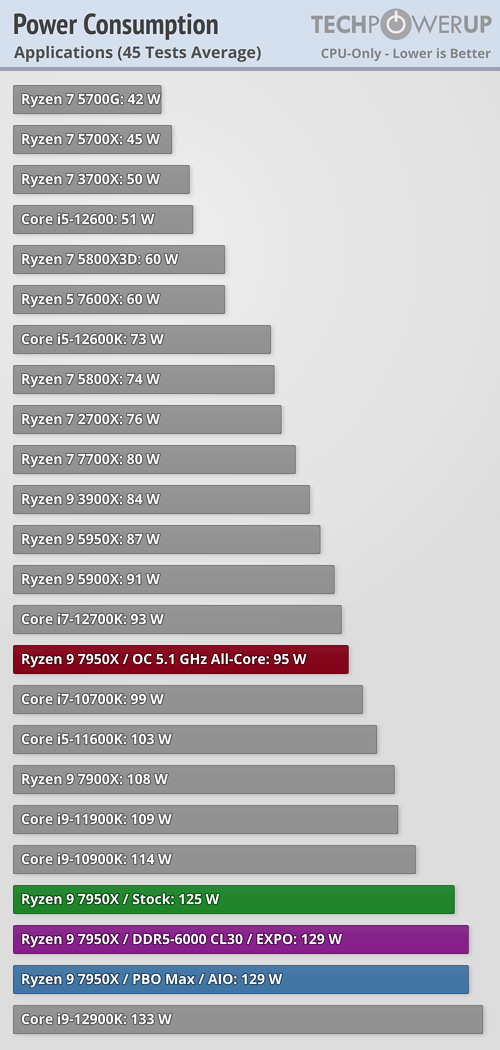

I think this is what others are trying to say in this thread -- that efficiency still takes too much of a backseat to performance on these high-end chips. The memory bandwidth and capacity are there with these huge allotments of HBM3, HBM4, etc. for AI training -- it's just a matter of clocks coming back down a little with voltage following, and power therefore dropping roughly with the square of the voltage. Intel in particular is a great offender of sitting too high on the power-voltage-clock curve, as seen in the high TDP of 14th-gen CPUs and especially the 14900K/KS. This is certainly to compete with AMD, but it comes at the cost of more consumption on the grid for what really doesn't matter at the end of the day in the real world: that last little squeeze of performance. AMD's X3D processors are an excellent example, as they are purposely held back in clock speeds and peak power consumption, but they perform like champs.
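To put rough numbers on the voltage-squared point, here is a minimal sketch using the textbook approximation for dynamic power, P ≈ C·V²·f. It ignores leakage and assumes capacitance stays constant, and the function name and the 10%/5% figures are made up purely for illustration, not measurements from any real chip.

```python
def relative_dynamic_power(voltage_scale: float, clock_scale: float) -> float:
    """Dynamic power relative to stock, assuming P ~ C * V^2 * f.

    Both arguments are fractions of the stock value (1.0 = unchanged).
    Leakage power is ignored and capacitance is treated as constant,
    so this is only a first-order estimate.
    """
    return voltage_scale ** 2 * clock_scale


# Hypothetical undervolt/underclock: 10% less voltage, 5% less clock.
rel_power = relative_dynamic_power(voltage_scale=0.90, clock_scale=0.95)
saving_pct = (1.0 - rel_power) * 100.0
print(f"Estimated dynamic power saving: about {saving_pct:.0f}%")
```

Under those assumptions, giving up about 5% of clock speed cuts dynamic power by a bit over a fifth, which is the "last little squeeze of performance" trade-off in a nutshell.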

Back to efficiency vs. raw perf, it's also true, as some have said, that 1500W in itself isn't an indicator of efficiency, since we have to see what the performance level is at that rating. Fortunately, these chips, like most, don't run at peak power 100% of the time, but then chips for AI training are probably living harder lives than the rest of their datacenter counterparts.

Lastly, no, I don't see direct regulation being necessary at this time and agree rather with incentives and disincentives, especially taxing and/or higher electric rates on the biggest consumers. Microsoft probably already anticipates this, which is why it's worth it to them to go as far as spending huge dollars on self-owned nuclear power generation. And no, megacorps don't self-regulate -- they want and expect governments to set the bars because they want to know what the playing field is and for everyone to have that same playing field... well, they all like their carve-outs and loopholes, but that's for another time and place.