
Deleted member 2731765

Guest

Hello,

It appears that we keep getting leaks and rumors about next-gen CPUs and GPUs from Intel and AMD. This time we have some GAMING benchmarks of Intel's upcoming 13th Gen Raptor Lake flagship SKU. The first gaming and synthetic performance benchmarks of Intel's Core i9-13900K Raptor Lake 5.5 GHz CPU have been leaked by Extreme Player at Bilibili (via HXL).

The Intel Core i9-13900K Raptor Lake CPU tested in the leaked benchmarks is a QS sample that features 24 cores and 32 threads in an 8 P-core and 16 E-core configuration. The CPU carries a total of 36 MB of L3 cache and 32 MB of L2 cache for a combined 68 MB of 'Smart Cache'. It also comes with a base (PL1) TDP of 125W and an MTP of around 250W.
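
The core, thread and cache totals above add up as follows. This is a minimal Python sketch; it assumes the usual Intel hybrid layout where each P-core runs two threads via Hyper-Threading and each E-core runs one. The function name `core_summary` is just for illustration.

```python
# Core/thread/cache arithmetic for the Core i9-13900K QS sample.
# Assumption: each P-core runs 2 threads (Hyper-Threading), each E-core runs 1.
P_CORES = 8
E_CORES = 16
L3_MB = 36
L2_MB = 32


def core_summary(p_cores: int, e_cores: int) -> tuple:
    """Return (total cores, total threads) for a hybrid P-core/E-core layout."""
    cores = p_cores + e_cores
    threads = p_cores * 2 + e_cores * 1
    return cores, threads


cores, threads = core_summary(P_CORES, E_CORES)
total_cache_mb = L3_MB + L2_MB  # the combined "Smart Cache" figure

print(f"{cores} cores / {threads} threads, {total_cache_mb} MB L2+L3")
```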

In terms of performance, we have more detailed gaming and synthetic benchmarks with the Intel Core i9-13900K (5.5 GHz) and Core i9-12900K (4.9 GHz) running at their stock frequencies on a Z690 platform with 32 GB of DDR5-6400 memory and a GeForce RTX 3090 Ti graphics card. The Core i9-13900K already has a 12.2% clock speed advantage over the Core i9-12900K, so it should be faster by default even if the architecture were the same. Any extra uplift would likely come from the larger cache, which gets a bump of over 50% (68 MB vs 44 MB).
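
Both percentages in that paragraph are simple ratios of the quoted specs. A quick Python check (the helper name `pct_increase` is made up for this example); note it only compares clocks and cache sizes and says nothing about how much of either actually shows up in frame rates:

```python
def pct_increase(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


clock_gain = pct_increase(5.5, 4.9)  # stock boost clocks in GHz
cache_gain = pct_increase(68, 44)    # combined L2 + L3 cache in MB

print(f"clock: +{clock_gain:.1f}%, cache: +{cache_gain:.1f}%")
```

The clock ratio reproduces the 12.2% figure, and the cache ratio lands at about 54.5%, which is where the "over 50%" comes from.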

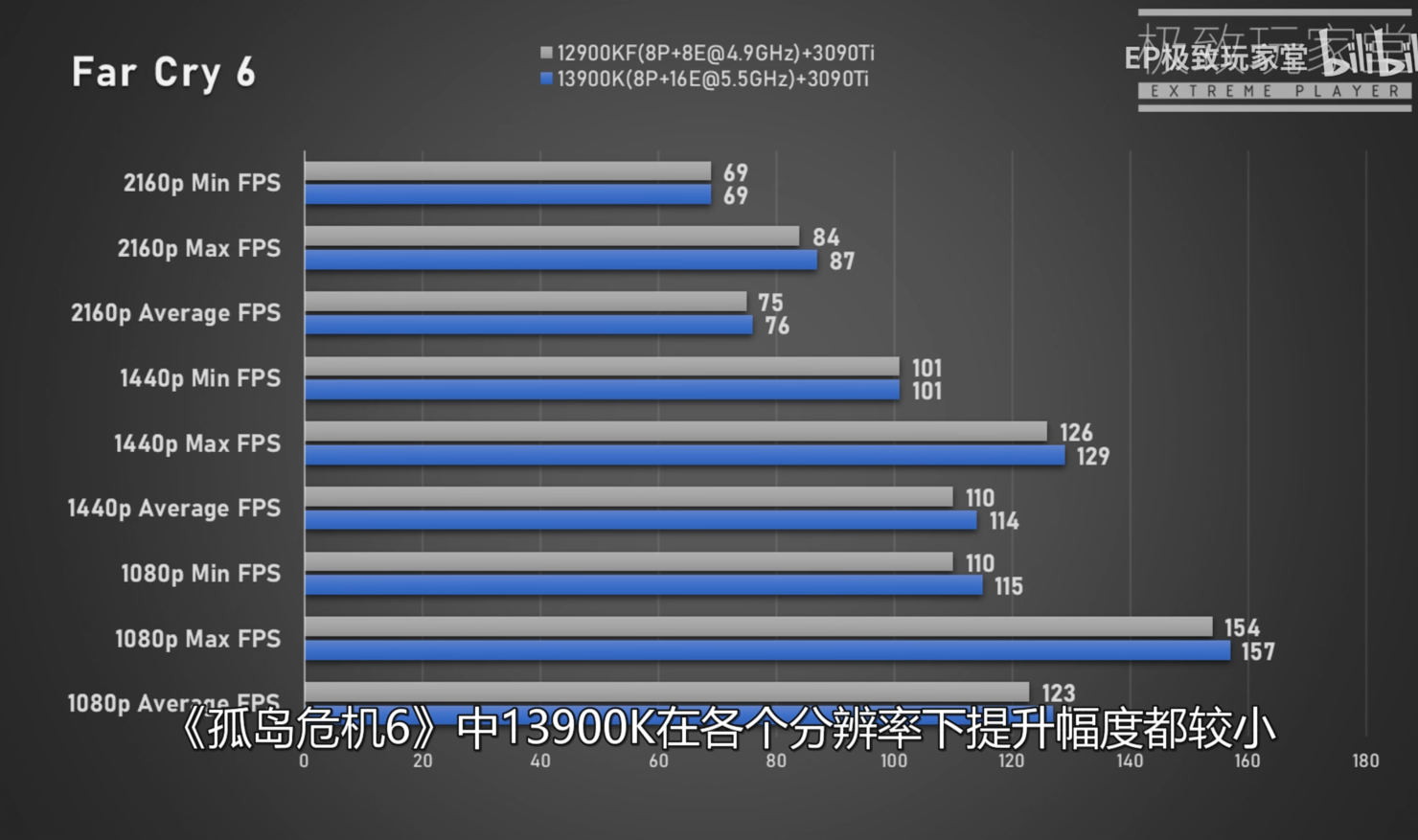

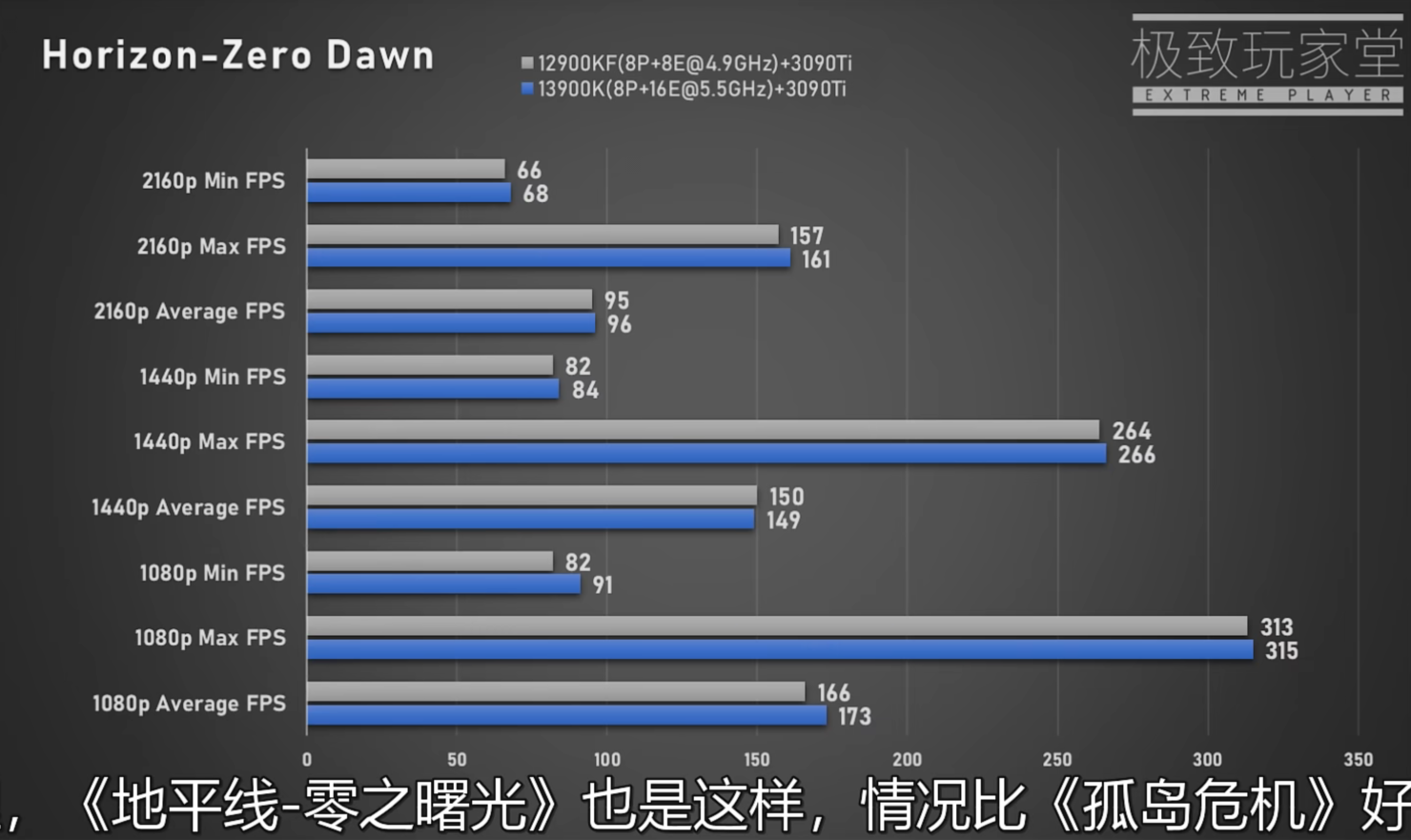

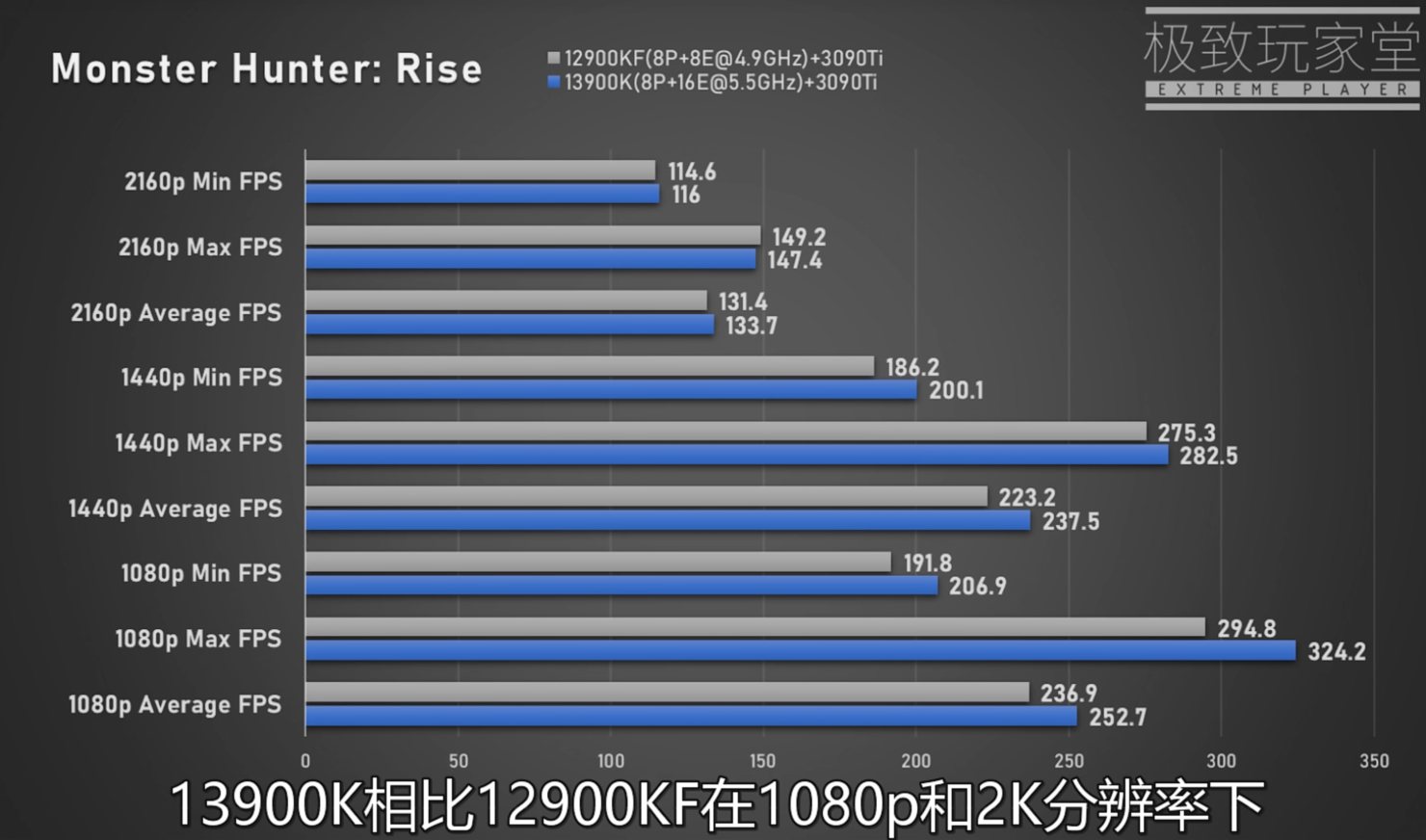

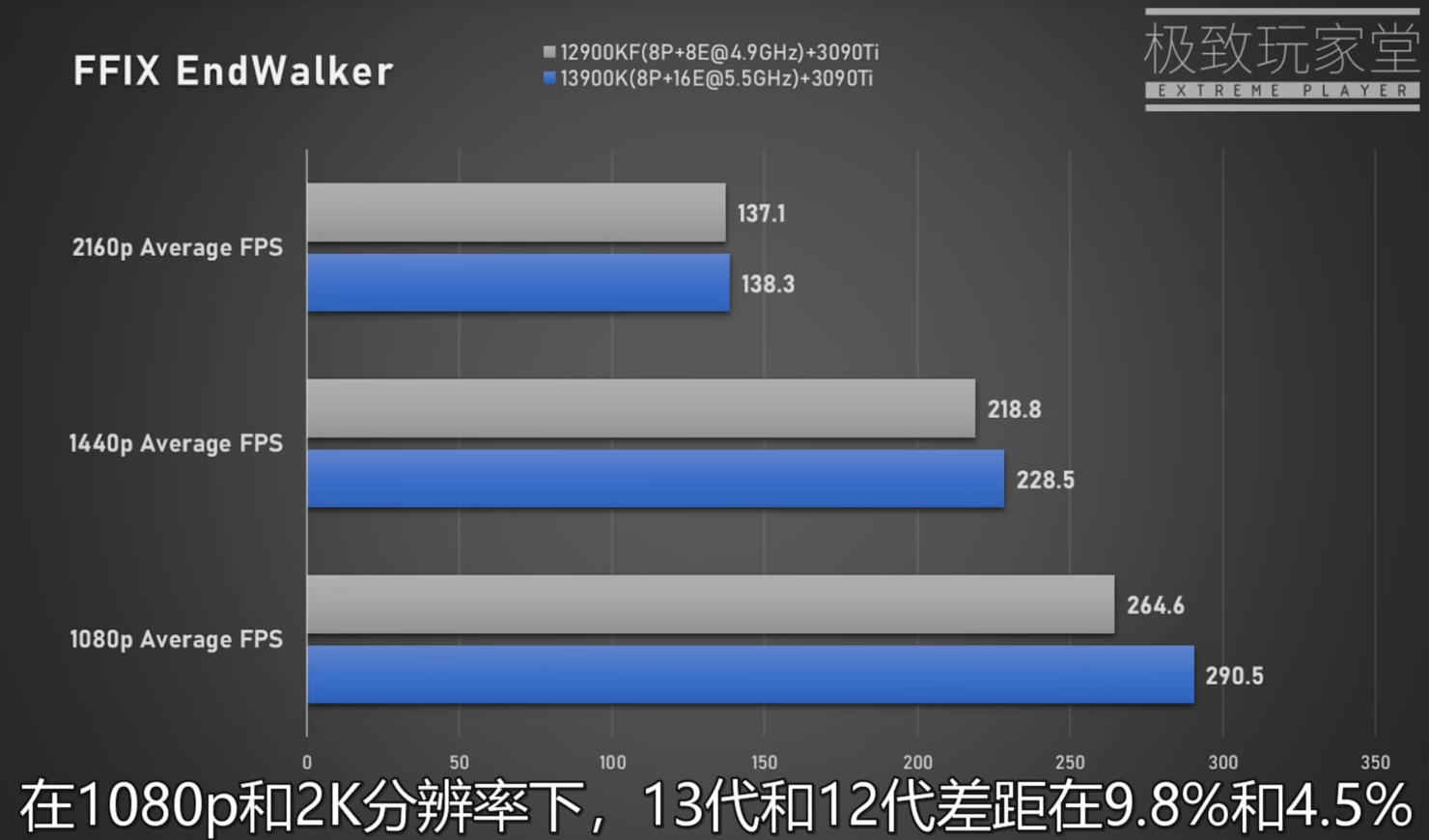

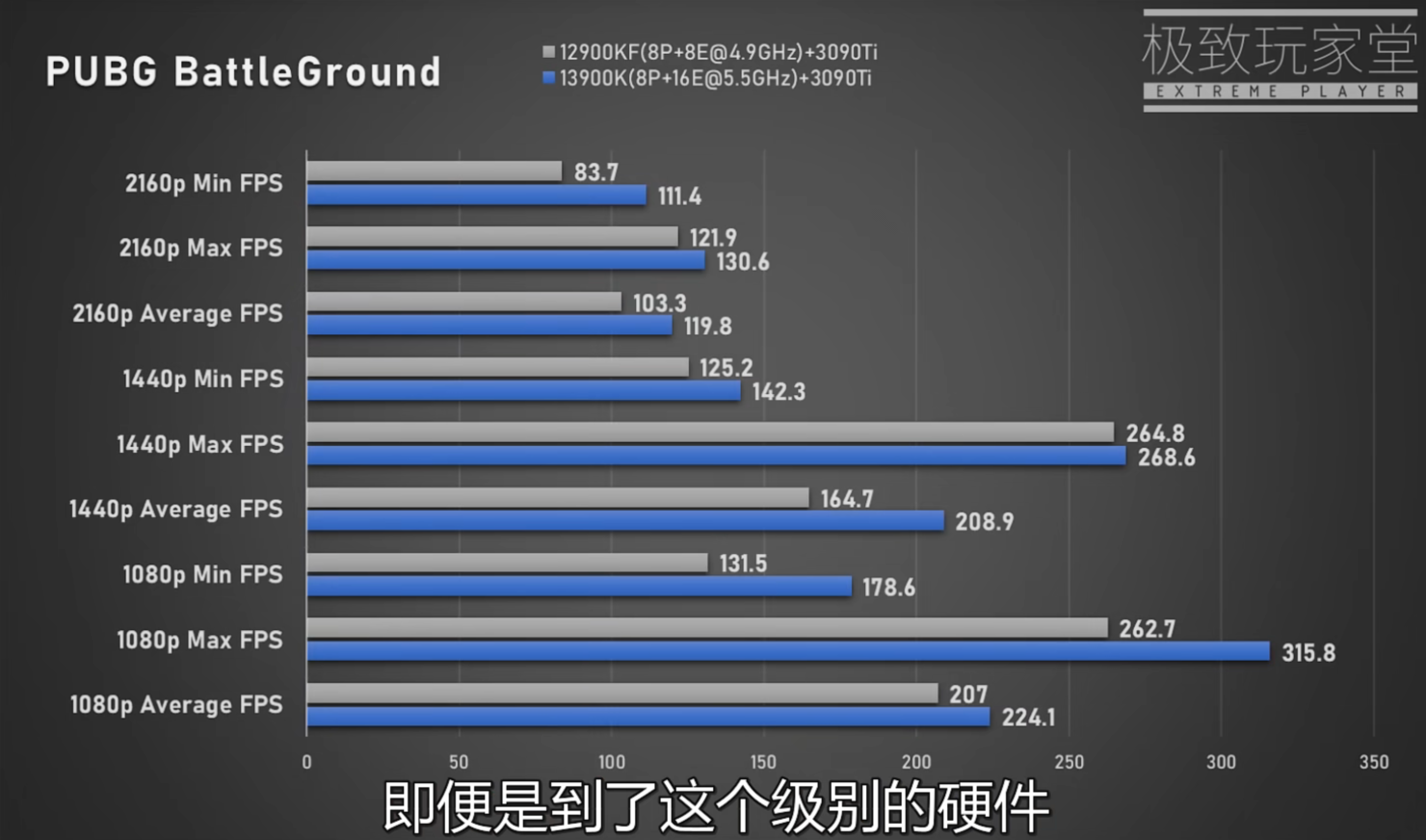

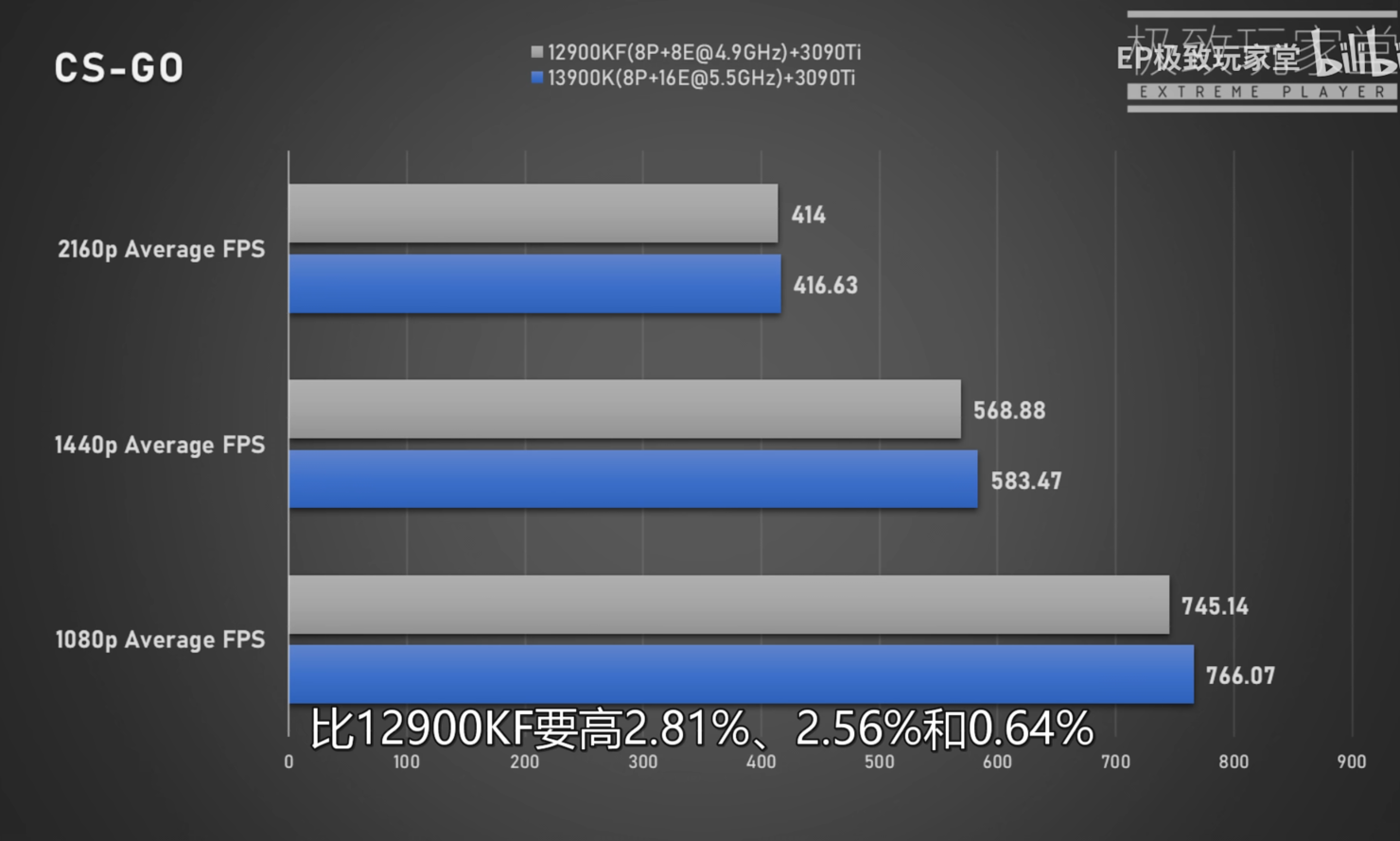

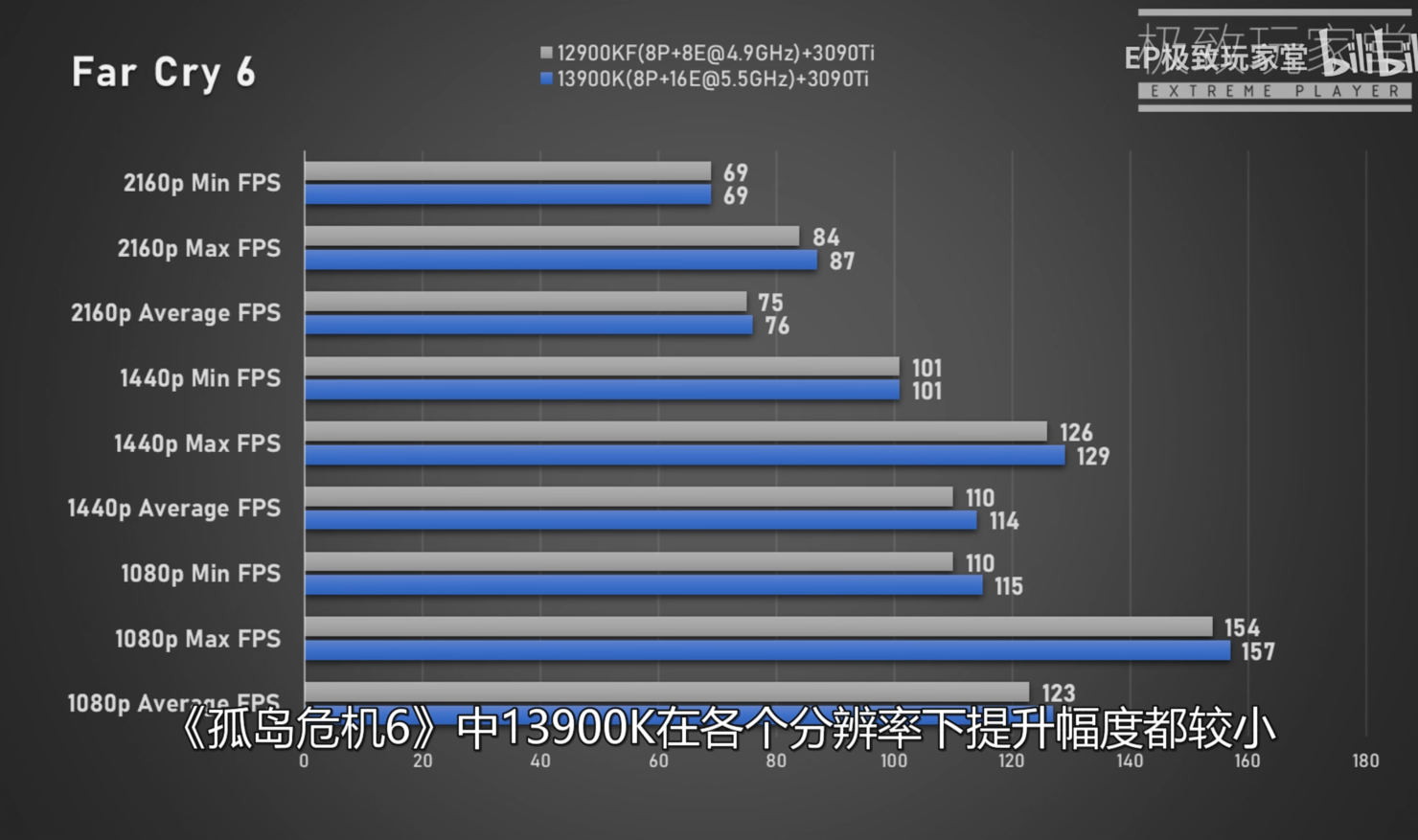

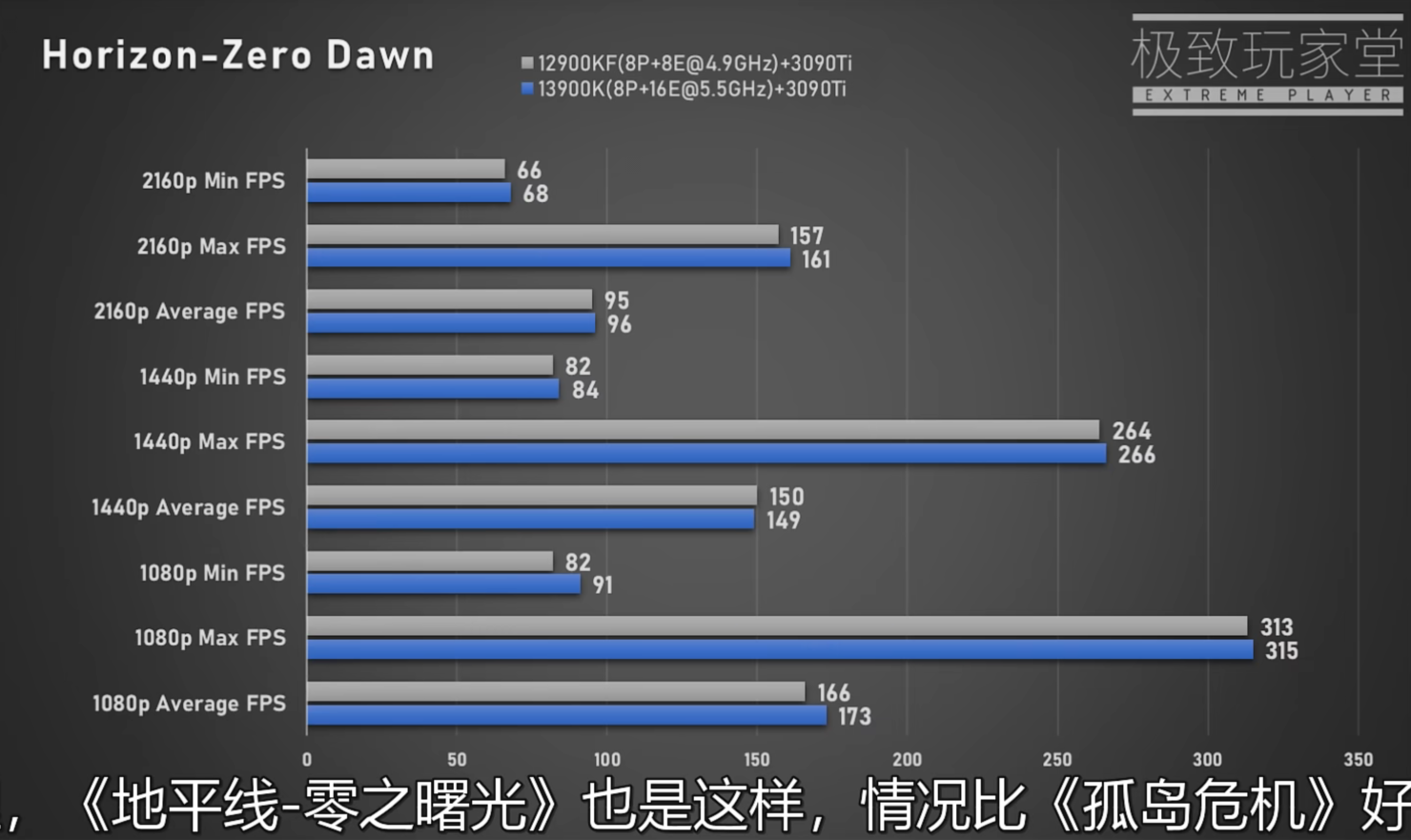

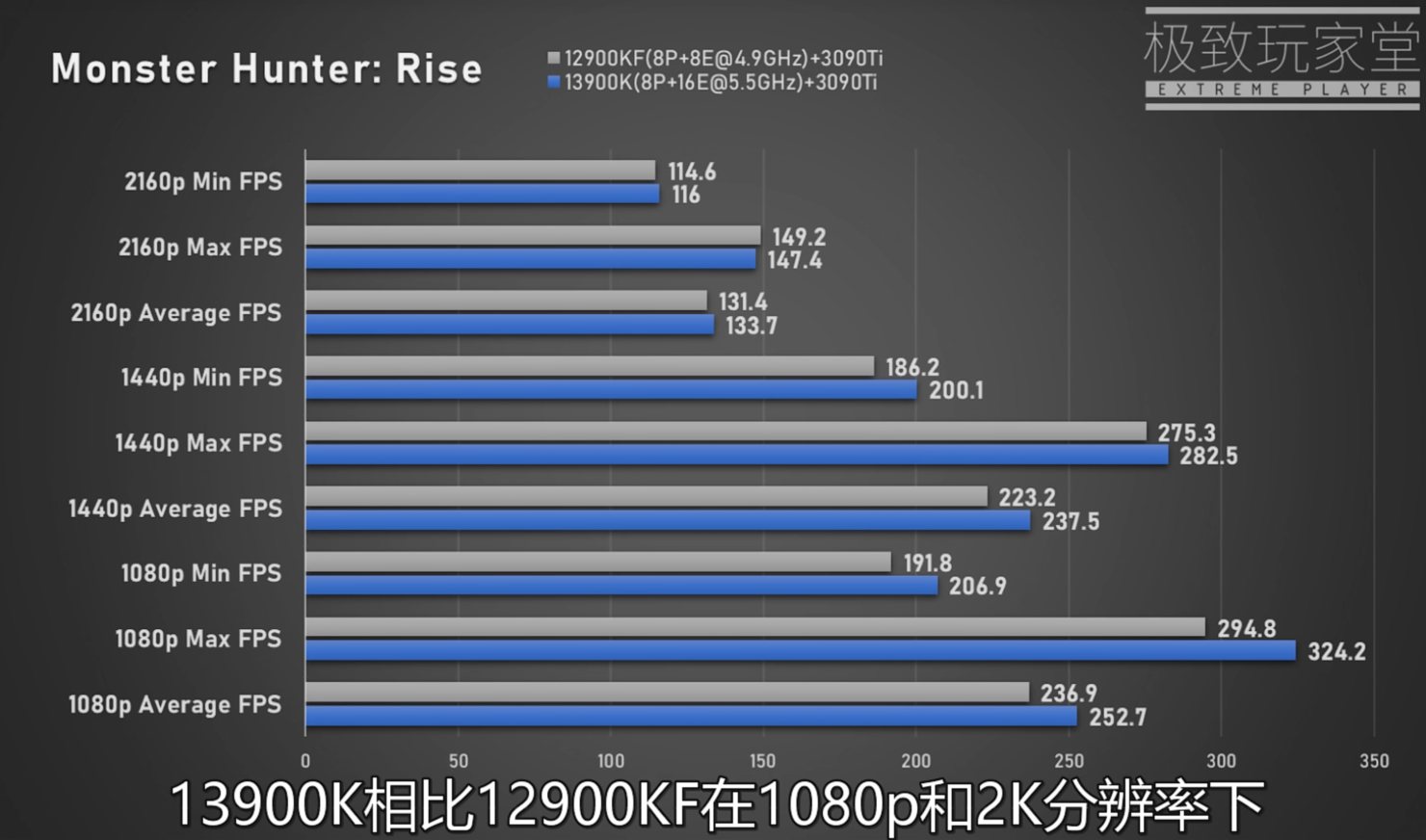

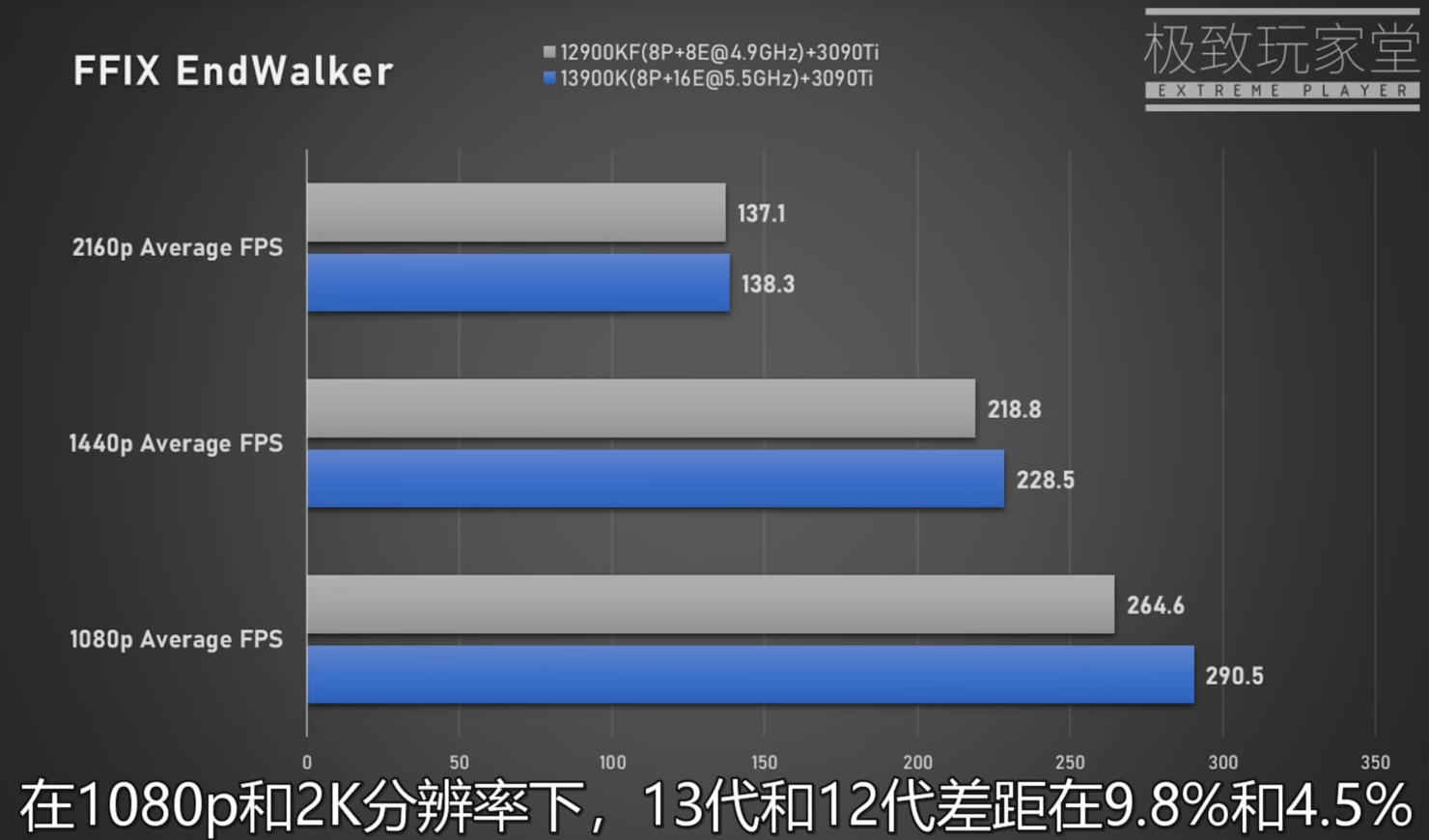

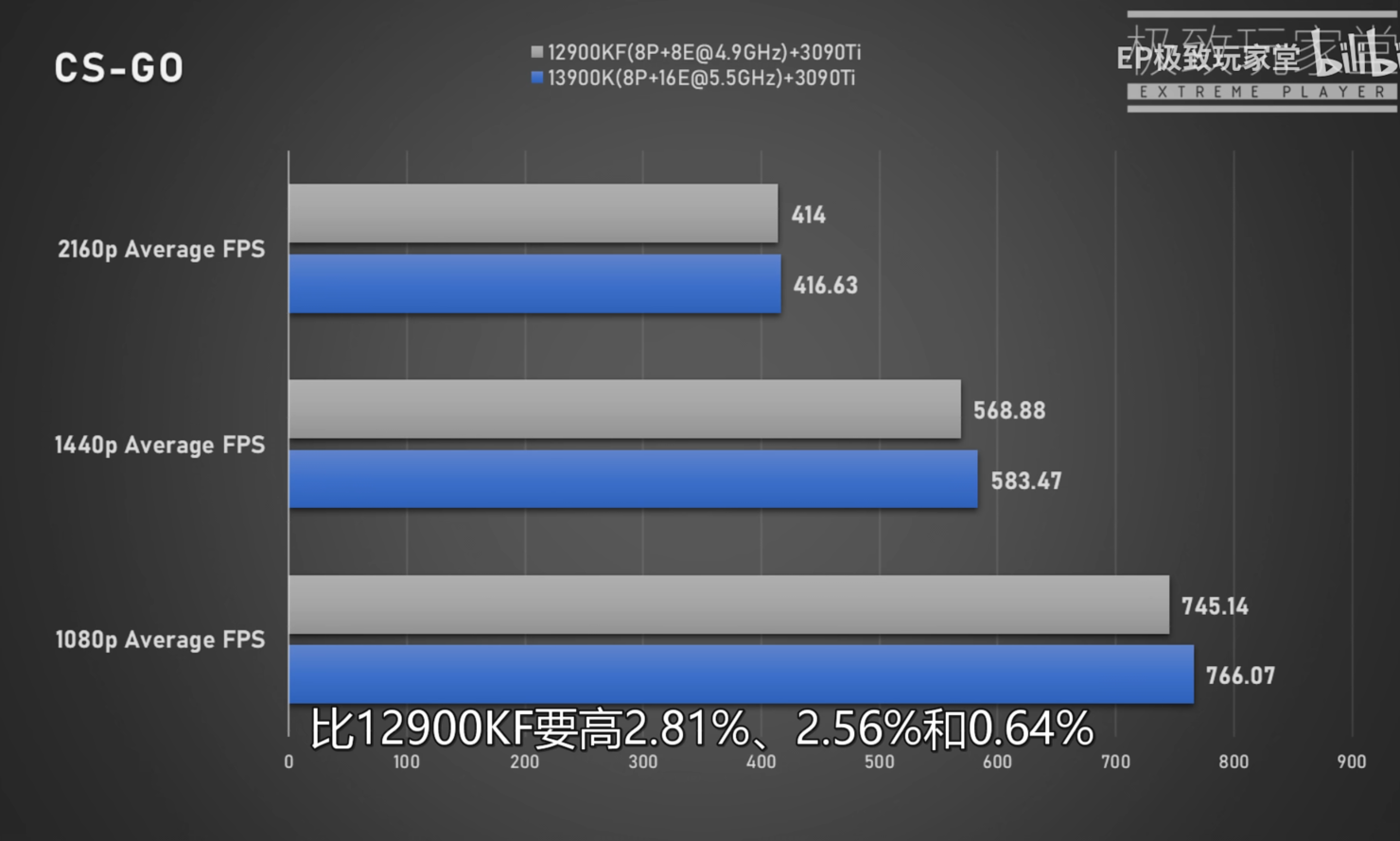

The gaming performance was tested in various titles at 2160p, 1440p, and 1080p resolutions. The average FPS improvement is modest: roughly 3-7% depending on resolution, or about 4.8% across all three, for the Intel Core i9-13900K Raptor Lake CPU versus its Core i9-12900K Alder Lake predecessor.

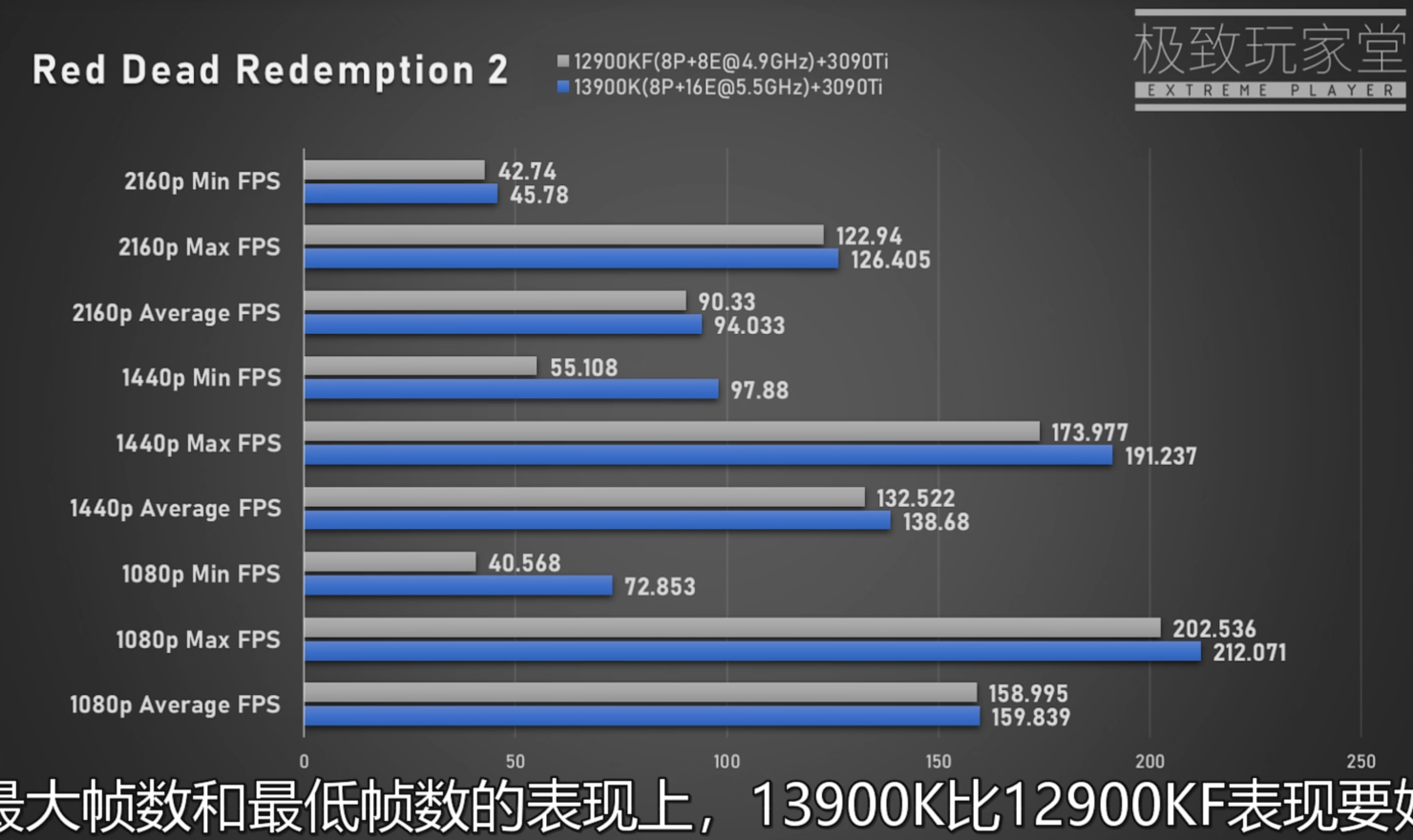

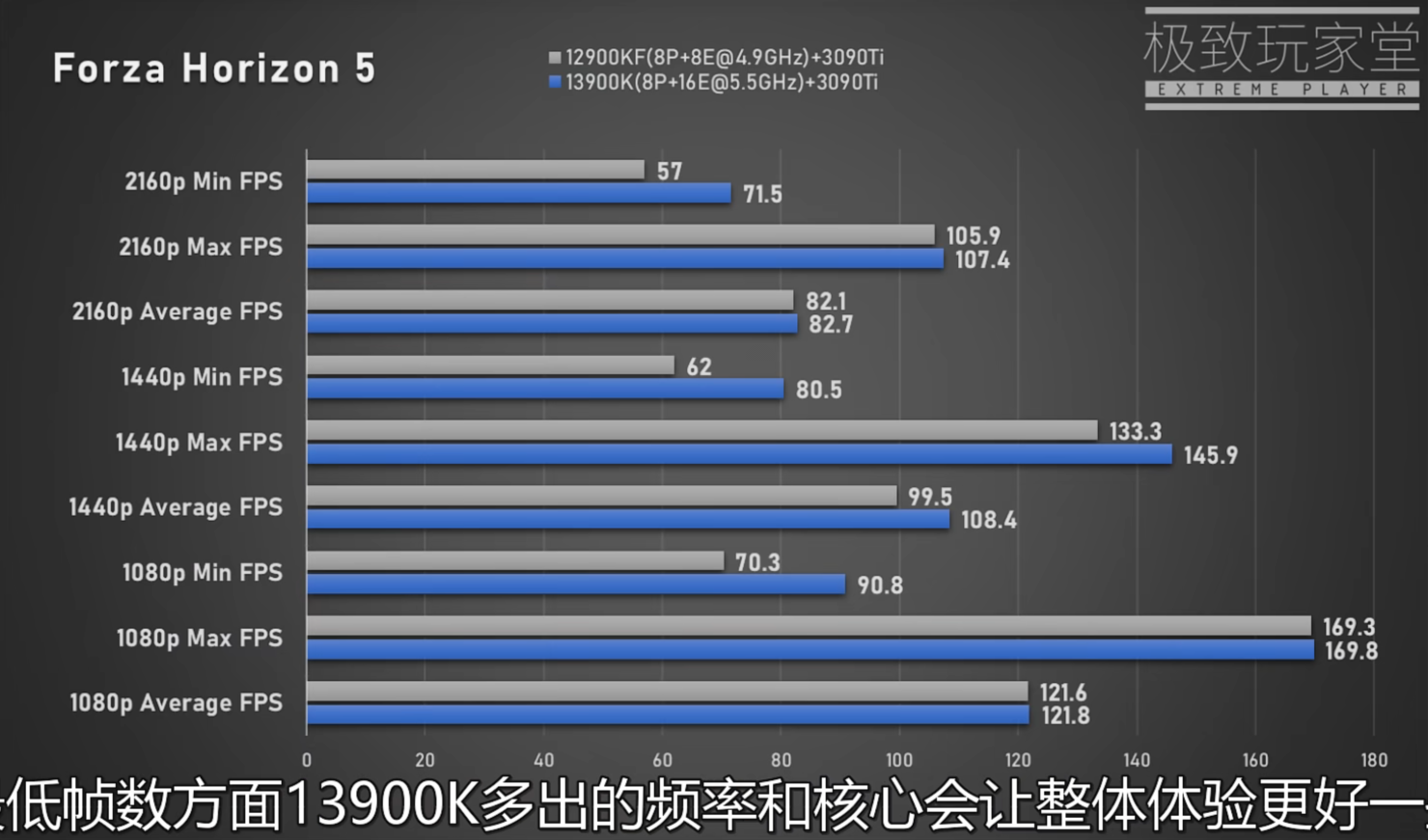

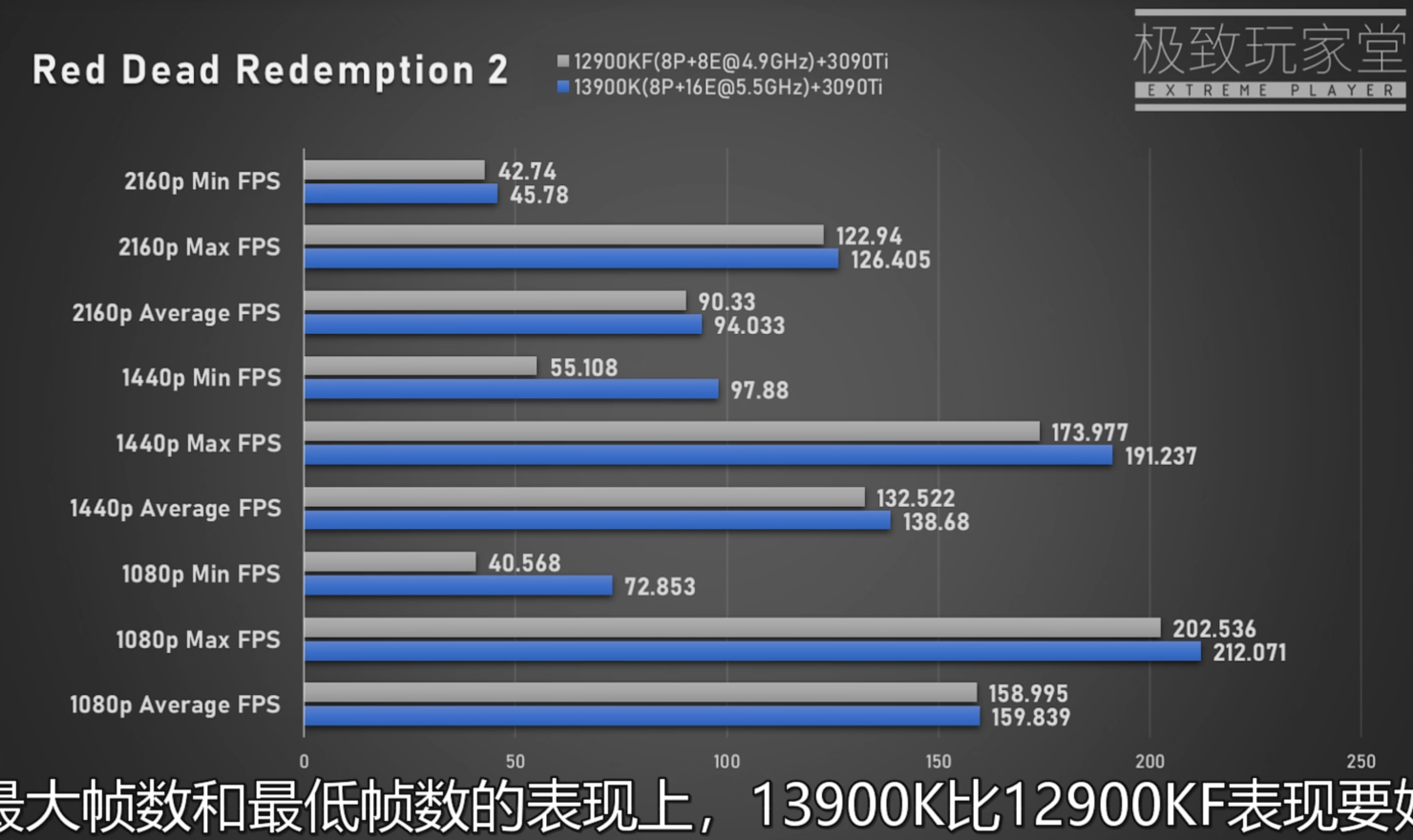

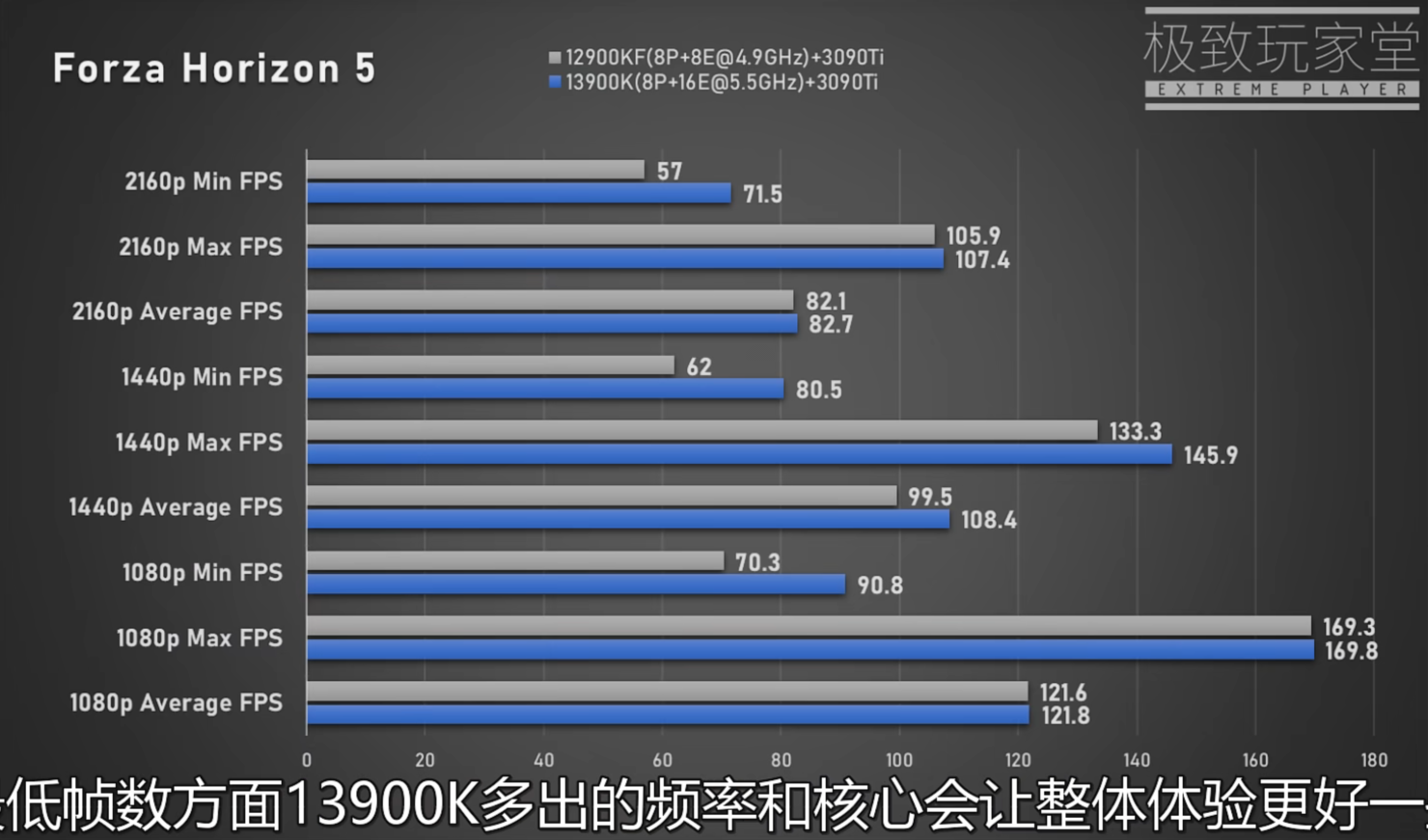

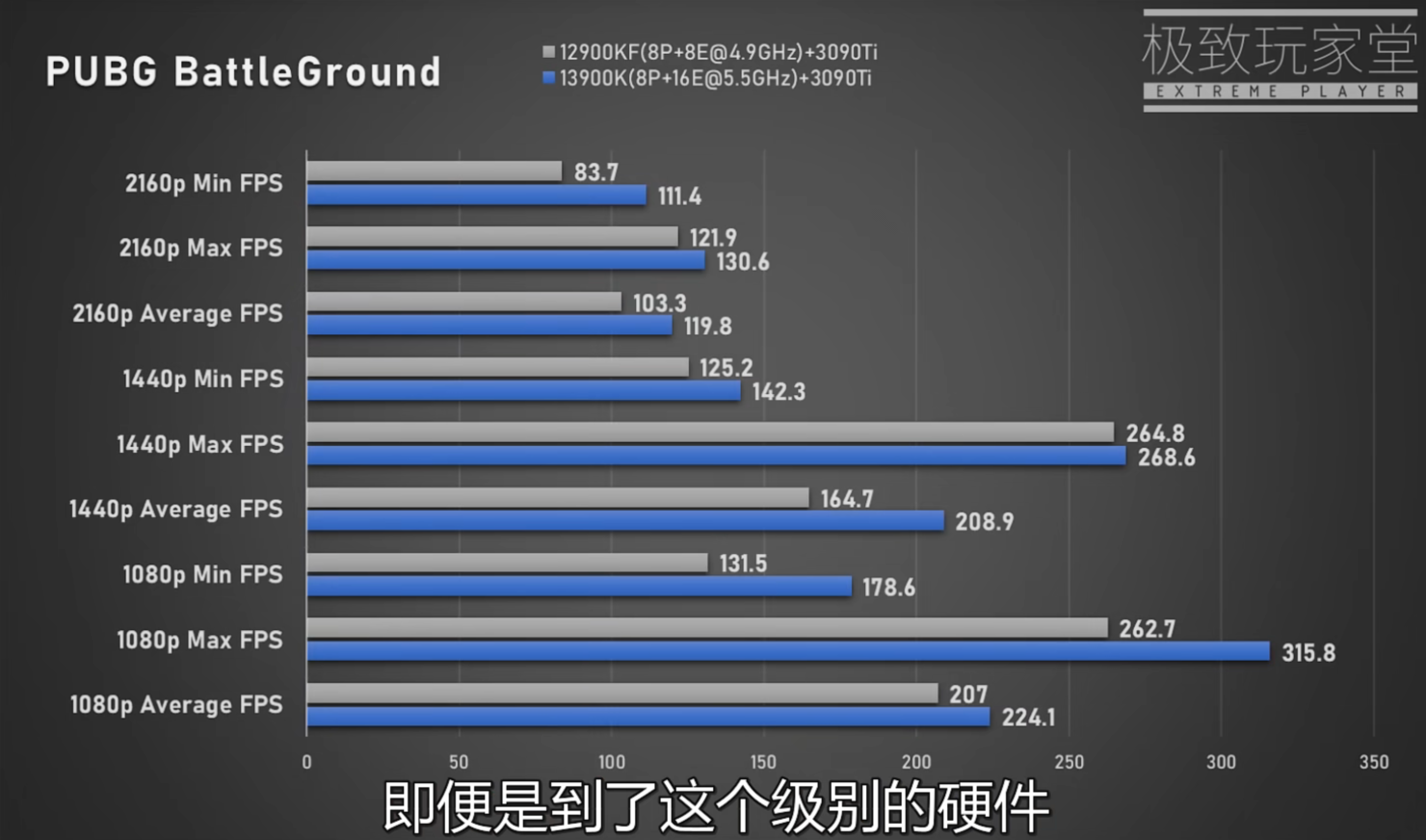

There are only a few cases where the chip showed huge gains. The larger cache and higher clocks seem to benefit minimum frame rates the most, with jumps of around 25-30% in a few titles such as PUBG and Forza Horizon 5, and gains of up to 70-80% in Red Dead Redemption 2. 😵😀

One interesting comparison that has been made is the POWER consumption figures, where the Intel Core i9-13900K consumes up to 52% more power in games than the Core i9-12900K, and roughly 20% more on average across all three resolutions tested.

This suggests that the next-gen Raptor Lake CPU lineup will be more power-hungry than Alder Lake, even in games. 😱👎
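
One way to put the FPS and power numbers side by side is relative FPS per watt. This is only a rough Python sketch under a big assumption: that the leak's power deltas (1080p: 19.1%, 1440p: 19.8%, taking the 26.2% figure as the 2160p result) are average draw during the same runs that produced the average-FPS deltas (4.22%, 6.97%, 3.30%). The leak doesn't say how power was measured, and `relative_efficiency` is a hypothetical helper.

```python
# Relative FPS-per-watt of the i9-13900K vs the i9-12900K, per resolution.
# Assumption: power deltas are average draw during the same gaming runs
# that produced the average-FPS deltas (not stated in the leak).
avg_fps_gain = {"1080p": 4.22, "1440p": 6.97, "2160p": 3.30}     # percent
power_increase = {"1080p": 19.1, "1440p": 19.8, "2160p": 26.2}  # percent


def relative_efficiency(fps_gain_pct: float, power_gain_pct: float) -> float:
    """FPS per watt of the new chip divided by FPS per watt of the old one."""
    return (1 + fps_gain_pct / 100) / (1 + power_gain_pct / 100)


efficiency = {
    res: relative_efficiency(avg_fps_gain[res], power_increase[res])
    for res in avg_fps_gain
}

for res, ratio in efficiency.items():
    print(f"{res}: {(ratio - 1) * 100:+.1f}% FPS per watt")
```

With these inputs every resolution comes out below 1.0, i.e. roughly 11-18% fewer frames per watt than the 12900K, which is what "more power-hungry" looks like once the small FPS gains are factored in.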

The breakdown of average, minimum, and maximum FPS, along with power consumption, at each resolution is as follows:

- Intel Core i9-13900K vs Core i9-12900K at 1080p: 4.22% Faster Performance on Average

- Intel Core i9-13900K vs Core i9-12900K at 1440p: 6.97% Faster Performance on Average

- Intel Core i9-13900K vs Core i9-12900K at 2160p: 3.30% Faster Performance on Average

- Intel Core i9-13900K vs Core i9-12900K All Res Avg: 4.83% Faster

- Intel Core i9-13900K vs Core i9-12900K at 1080p: 27.93% Faster Minimum FPS

- Intel Core i9-13900K vs Core i9-12900K at 1440p: 21.83% Faster Minimum FPS

- Intel Core i9-13900K vs Core i9-12900K at 2160p: 12.82% Faster Minimum FPS

- Intel Core i9-13900K vs Core i9-12900K All Res Min Avg: 20.86% Faster

- Intel Core i9-13900K vs Core i9-12900K at 1080p: 6.29% Faster Maximum FPS

- Intel Core i9-13900K vs Core i9-12900K at 1440p: 4.42% Faster Maximum FPS

- Intel Core i9-13900K vs Core i9-12900K at 2160p: 2.58% Faster Maximum FPS

- Intel Core i9-13900K vs Core i9-12900K All Res Max Avg: 4.43% Faster

- Intel Core i9-13900K vs Core i9-12900K at 1080p: 19.1% Higher Power Consumption

- Intel Core i9-13900K vs Core i9-12900K at 1440p: 19.8% Higher Power Consumption

- Intel Core i9-13900K vs Core i9-12900K at 2160p: 26.2% Higher Power Consumption
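
The "All Res" lines are plain means of the three per-resolution deltas. A small Python check using the standard `statistics` module; the power average is not part of the leaked breakdown and is computed here from the three listed power figures (treating 26.2% as the 2160p result):

```python
from statistics import mean

# Per-resolution deltas from the breakdown (percent, 13900K vs 12900K).
results = {
    "avg_fps": {"1080p": 4.22, "1440p": 6.97, "2160p": 3.30},
    "min_fps": {"1080p": 27.93, "1440p": 21.83, "2160p": 12.82},
    "max_fps": {"1080p": 6.29, "1440p": 4.42, "2160p": 2.58},
    "power":   {"1080p": 19.1, "1440p": 19.8, "2160p": 26.2},
}

# Simple (unweighted) mean across the three resolutions for each metric.
all_res = {
    metric: round(mean(by_res.values()), 2)
    for metric, by_res in results.items()
}

for metric, value in all_res.items():
    print(f"{metric}: +{value}%")
```

The three FPS averages match the quoted 4.83%, 20.86% and 4.43%, and the computed power average comes to about 21.7%, roughly in line with the "around 20%" figure.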
