Then let's look at some values. Or just one because I'm feeling lazy.

Looking at page 13 (or 19 in the PDF) of the

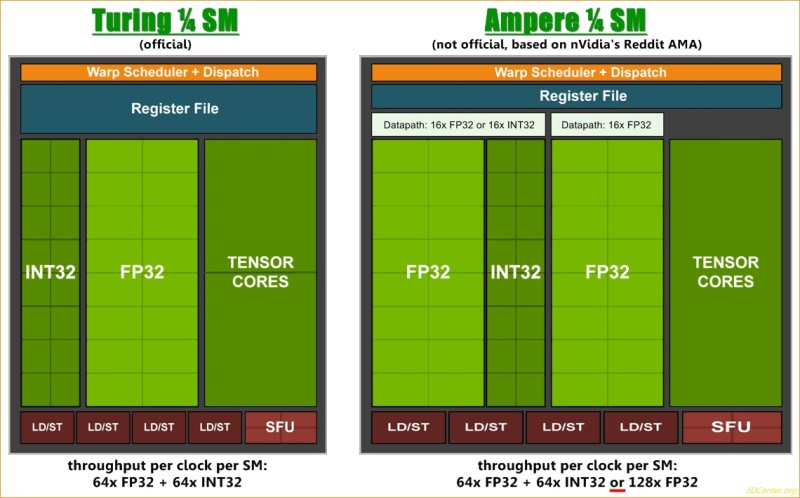

whitepaper on Turing, there's a graph of what games had a mix of INT and FP instructions. I'm just going to pick out Far Cry 5 from this. So if we take this graph, let's just assume for every 1 FP instruction, there were 0.4 INT instructions. And if we laid this on one of Turing's SMs, this means that if there are 64 FP instructions, there's only 25 INT instructions, or a utilization rate of ~70%. For Ampere, since there are some CUDA cores that are split between FP and INT, we can balance which one does what for better utilization. And doing some math, for the best utilization in Far Cry 5's example, 90 FP instructions plus 36 INT instructions, using 126 out of 128 of the CUDA cores vs 89.
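For anyone who wants to check the arithmetic, here is a rough Python sketch of it. The 0.4 ratio is the eyeballed assumption from above, written as 2 INT per 5 FP. The function names are made up for illustration. For the Ampere split I'm assuming the INT count has to come out as a whole number at that exact ratio, which is my reading of where 90 + 36 comes from. It is a back-of-the-envelope model of one SM, not how the scheduler actually works.

```python
# Assumed mix, eyeballed from the whitepaper graph: 0.4 INT per FP, i.e. 2 INT per 5 FP.
INT_NUM, FP_DEN = 2, 5
SM_CORES = 128  # Turing: 64 FP + 64 INT units; Ampere: 64 FP + 64 FP-or-INT


def turing_mix(fp_cores=64, int_cores=64):
    """FP and INT units are separate: fill the FP side, see how much INT follows."""
    fp = fp_cores
    ints = min(int_cores, fp * INT_NUM // FP_DEN)  # 25.6 rounded down to 25
    return fp, ints


def ampere_mix(fp_only=64, flexible=64):
    """Largest whole-number split that keeps the ratio exact and fits in one SM."""
    total = fp_only + flexible
    for fp in range(total, 0, -1):
        if (fp * INT_NUM) % FP_DEN:
            continue  # INT count would not be a whole number
        ints = fp * INT_NUM // FP_DEN
        if ints <= flexible and fp + ints <= total:
            return fp, ints
    return 0, 0


t_fp, t_int = turing_mix()
a_fp, a_int = ampere_mix()
turing_busy = t_fp + t_int
ampere_busy = a_fp + a_int

print(f"Turing: {t_fp} FP + {t_int} INT = {turing_busy}/{SM_CORES} busy ({turing_busy / SM_CORES:.0%})")
print(f"Ampere: {a_fp} FP + {a_int} INT = {ampere_busy}/{SM_CORES} busy ({ampere_busy / SM_CORES:.0%})")
print(f"Ampere vs Turing per SM: {ampere_busy / turing_busy:.2f}x")
```

If you drop the whole-number requirement, the ideal split is about 91.4 FP + 36.6 INT, which fills all 128 cores, so the 126 figure is slightly conservative.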

So right off the bat, without adding any more CUDA cores, Ampere should have about a 1.4x lead over Turing in this example. So how much does Ampere get in practice? 1.28x based on TechPowerUp's 4K benchmark.

And sure, Ampere doesn't have 2x the memory bandwidth, but it has almost double the L1 cache, which should soak up some of that deficiency.

Either way, my commentary is pointing out the odd conclusion the article seems to hint at: that NVIDIA only needs to add 75% more shaders for double the performance.