All that debating and not a single person bothered to point out the differences in execution resources between a haswell core and a piledriver core, nor the differences in instruction pipeline length, nor the differences in cache performance, nor any of the facts about the actual hardware that would have made the case for the i3 far more clear. A bunch of people going back and forth "no this one is better because it has a bigger number" ... "no this one is better because I think it's 50% faster in single core workloads." Sort of pathetic to be honest.

The very first response to such a thread should have been very clearly:

"Core count and clock speeds are not measurements of execution performance."

Any AMD enthusiast should be VERY familiar with this concept. It wasn't so long ago that AMD was beating Intel at lower clock speeds and/or with fewer cores and with less power dissipation. Coming onto a forum to tout core count as a measure of compute performance is just as ridiculous as trying to make the case that Netburst was faster than AMD offerings at the time because it had more GHz.

----------

So, in case anyone cares how to handle these threads on the next go around:

A haswell core has more execution resources than an entire piledriver module (if we look at the number of transistors allocated to non-cache functions, and perhaps more importantly, how many of those resources can be simultaneously scheduled to perform operations). Haswell also has a shorter instruction pipeline, and substantially better cache performance. The result: Clock for clock, a hyper-threaded haswell core can be up to ~25% faster than a PD module when compared 2 threads vs 2 threads. If we actually observe the number of effective ALUs and FPUs and simultaneously accessible execution ports/pipes on these two designs, it becomes clear that counting cores is about as stupid as counting cylinders in an engine without ANY regard for displacement, valve-train design, fuel management, ignition systems, atmospheric modifications, etc.

When the workload is only 1 thread per module, vs 1 thread per haswell core, the PD module suffers a major penalty, as the number of execution resources it can schedule that work out to is reduced significantly. It can not "split" the thread across the 2 cores, so the single thread only has access to as little as HALF the execution resources in some cases (ALUs, primarily). By contrast, when the workload drops to a single thread on the haswell core, there is no reduction in the available execution resources for that thread. The out of order scheduler can attempt to use up to all 8 execution ports/pipes of the haswell core to work on that thread.

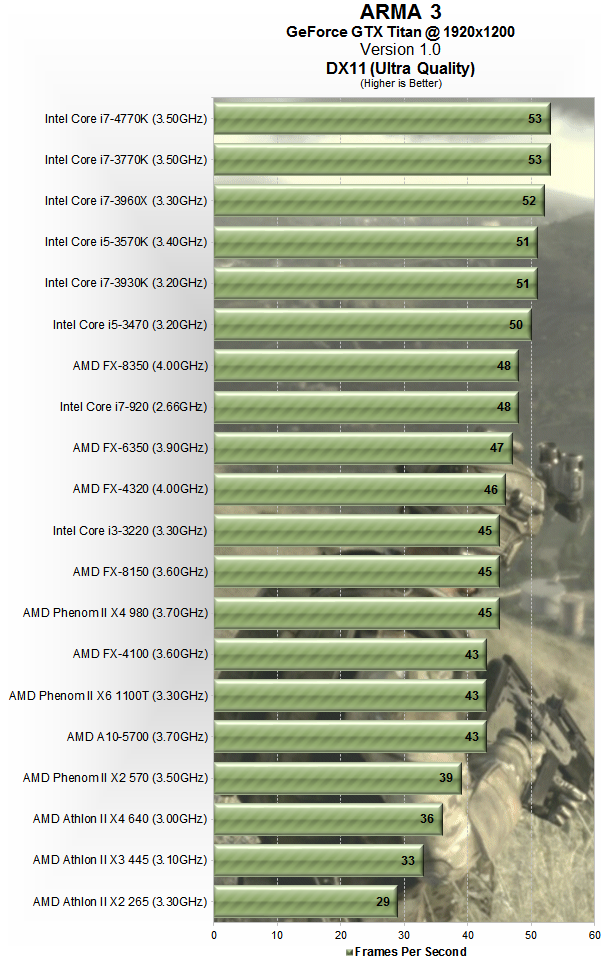

The result of this is that the i3-4150 is equally fast or faster than the FX-6300 in any workload that saturates up to 5 threads. The ONLY condition where the FX-6300 can pull ahead of the i3-4150 is when the workload saturates and scales out to all 6 cores of the FX-6300, and even then, the margin is not a make it or break it thing (~20%). On the other hand, in real-world workloads, especially gaming workloads that can not and do not scale proportionally to more cores, the i3-4150 can indeed actually produce up to 50% higher minimum FPS in compute bound conditions. This is because the most critical execution workloads in the game engine have access to more execution resources via the superior intra-core parallelism of the haswell architecture.
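For anyone who wants to sanity check where that ~20% comes from, here's a rough back-of-envelope sketch in Python. It is NOT a benchmark. It assumes equal clocks, perfect scaling across modules/cores, and it treats the "up to ~25%" figure from above as if it were a constant (it isn't, it's a best case). The constant and function names are made up for illustration.

```python
# Back-of-envelope model, not a benchmark.
# Assumptions (mine, for illustration): equal clocks, perfect scaling
# across modules/cores, and the "up to ~25%" figure treated as a constant.

PD_MODULE_2T = 1.00      # baseline: one piledriver module running 2 threads
HASWELL_CORE_2T = 1.25   # one hyper-threaded haswell core running 2 threads

FX_6300_MODULES = 3      # 3 modules = 6 "cores"
I3_4150_CORES = 2        # 2 cores = 4 threads with hyper-threading


def saturated_throughput(units: int, per_unit: float) -> float:
    """Total throughput when every module/core is fully loaded."""
    return units * per_unit


fx_total = saturated_throughput(FX_6300_MODULES, PD_MODULE_2T)
i3_total = saturated_throughput(I3_4150_CORES, HASWELL_CORE_2T)
fx_lead = fx_total / i3_total - 1.0

print(f"FX-6300: {fx_total:.2f}  i3-4150: {i3_total:.2f}  FX lead: {fx_lead:.0%}")
# prints: FX-6300: 3.00  i3-4150: 2.50  FX lead: 20%
```

So even in the FX-6300's best case (everything loaded, everything scaling), 3 modules vs 2 faster cores only works out to roughly a 20% lead under these assumptions.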

-----------

Many people on the interwebs try to use the PS4 and Xbox as validation of the many-weak-core AM3+ approach for desktop gaming. They are operating on a fundamentally flawed set of assumptions and ideas. The consoles are configured with a PAIR of 4-core Jag modules on a proprietary back-plane for communication between modules. Inter-core performance scaling from increased thread count is poor on the Jag design to begin with due to the shared L2 cache space and access, and worse still when communications from module to module are involved. The result of all this is that unlike an FX-8350 or similar AMD desktop part, which demonstrates useful performance scaling all the way up to 8 threads in many workloads, a pair of Jag modules strapped together like that simply won't. In fact, there's no reason to believe that game development on the PS4 requires any hyper-focus on improving parallelism of the workload to beyond a few threads, as the overhead involved with spawning and managing additional threads would quickly become counterproductive to performance on this platform, even though it has a high core count.

Consoles are a fixed hardware configuration. The games are compiled and optimized for that specific hardware and it is a fully functioning HSA/hUMA platform. There is a dramatic performance difference between a binary that has been compiled to run on ANY configuration of desktop hardware made in the last 5-8+ years (common), and a binary that has been compiled with all of the optimization flags FOR a specific piece of hardware. Ports from these consoles will have the same compute overhead problems that all other console ports have always had. They are forced to shed all of the scheduling optimizations and special instruction usage that maximizes performance on the fixed hardware. In this case, they will also be forced to shed all of the advantages of being in a hUMA environment, which will add significant compute overhead to the port in some cases. To make matters worse, we won't see any developers compiling a console port with a heavy use of modern instruction capabilities as they will want to maximize their target audience. There's also no practical way for them to build a piece of custom software for every arrangement of hardware out there (though PC gamers SHOULD be encouraging developers to at least offer a FEW different binaries to choose from based on the platform in question, but that's a can of worms for another thread).
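To give a rough idea of what "a FEW different binaries" could look like in practice, here's a minimal sketch of a launcher picking a build based on what the CPU supports. Everything here is hypothetical: the binary names, the tiers, and the `pick_binary` function are mine, not anything a real game ships. The flag strings follow the naming Linux uses in `/proc/cpuinfo`, and how you actually obtain them is left out on purpose.

```python
# Hypothetical build tiers, most demanding first. The binary names are
# invented for illustration; flag names follow Linux /proc/cpuinfo.
BUILD_TIERS = [
    ("game_avx2", {"avx2", "fma"}),   # e.g. haswell and newer
    ("game_avx", {"avx"}),            # e.g. piledriver, sandy bridge
    ("game_sse42", {"sse4_2"}),
    ("game_generic", set()),          # runs on anything the game supports
]


def pick_binary(cpu_flags):
    """Return the first build whose required flags are all present."""
    flags = set(cpu_flags)
    for name, required in BUILD_TIERS:
        if required <= flags:  # subset test: every required flag is available
            return name
    return "game_generic"


# A haswell-like flag set gets the AVX2 build, a piledriver-like one
# (AVX and FMA, but no AVX2) falls back to the AVX build.
print(pick_binary({"sse4_2", "avx", "avx2", "fma"}))
print(pick_binary({"sse4_2", "avx", "fma"}))
```

Three or four builds like this is a long way from "custom software for every arrangement of hardware", which is the whole point.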

Simple answer here is that the PS4/Xbox1 does not mean that the FX-8350 or any other FX chip with lots of weak cores is a good desktop gaming chip, but I'm sure the marketing department of AMD wouldn't mind if you kept on believing that and sharing that.

In fact, the BEST solution to dealing with the compute overhead "ballooning effect" that comes out of typical console ports that must shed all compiling/scheduling optimizations, is to have a CPU with VERY powerful cores, to overcome the losses via brute force.

---------------

Just to make it clear, I am not an i3 owner, I am not an Intel owner. I have an FX-6300 on a UD3P as my primary desktop. I like the system very much for my needs and interests. It offers enterprise class features that an i3 system of similar cost would not, and I was able to obtain this hardware in a MC combo for a very reasonable price. It's a fantastic platform for some purposes, but is not well suited to real-time workloads like gaming.