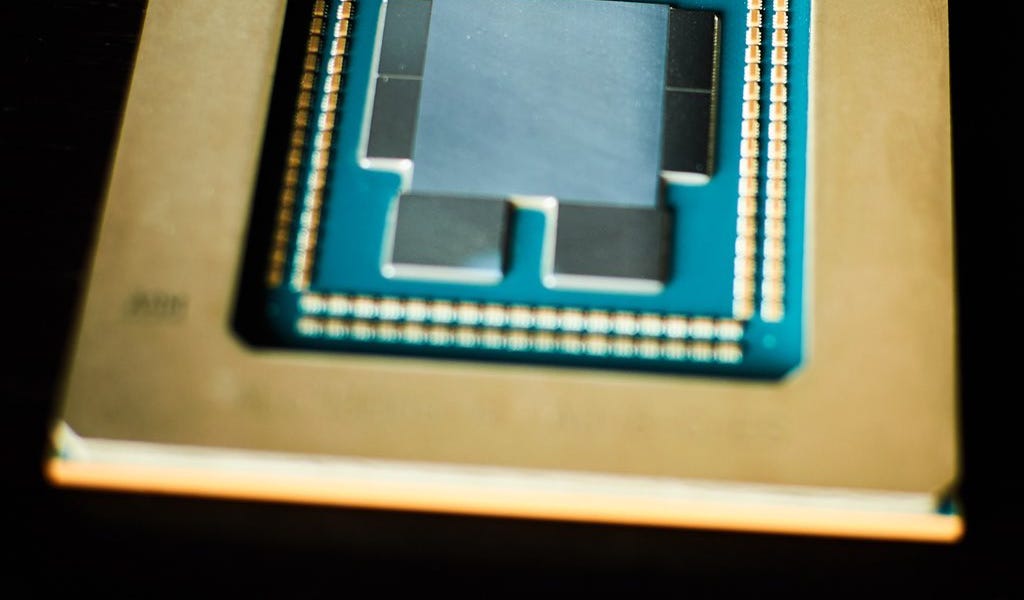

There was also this, but the GPU portion is disabled in these chips. It does show that the latency is massive and the only place the VRAM was worthwhile is where the extra memory bandwidth can be put to use by the CPU:

Break out the broken chips

www.tomshardware.com

Thanks! Yeah, it's disappointing that the SoC doesn't seem architected to provide much bandwidth to the CPU core complexes. So, it lost out due to the high latency and didn't benefit from the additional bandwidth.

Still, it's nearly as good at multithreaded as the top-end 8-core Zen+ CPU and has better single-threaded perf than the 1800X Zen1 CPU. So, that's not too bad for an 8-core Zen 2, especially considering the price. It's just a shame the GPU portion was disabled.

These rumors seem to pop up every so often, but we all should remember they lost a bunch of CPU engineers because they didn't want to make a server CPU.

Yeah, but that was already 4 years ago! I looked it up:

I've been at companies that would make 2-3 revisions to their product strategy in 4 years!

They basically lost all of the core engineering team behind the M series.

No way. The design teams are pretty big, actually. I doubt they lost more than 1/3rd. Probably disproportionately more of the senior members, but still...

Right now Apple uses AWS/Google and maybe MS for all their cloud services. Sure, they have enough money to open their own data centers, or to have their own hardware installed in someone else's, but I'm just not sure this is really on the table.

They could partially migrate services to their own HW. Anything where you'd want the exact same code to seamlessly migrate between end-user machines/devices and cloud. For those cases, you'd need the same ISA extensions in both places, and Apple is pretty far ahead with their adoption of optional ARMv8-A ISA extensions. And their AMX is a completely separate hardware block that's not even mapped into the ISA. Then, there's their Neural Engine.

The other thing that having their own server CPUs would do is to give them more negotiating power over Amazon/Google.

They could also do something along the lines of SPR + HBM, where the on-package memory is still there, but they also have DIMM slots wired up.

Exactly. I really think there's a good case for them to just use CXL for the off-package memory, so you can have like 128 lanes and they can be flexibly re-tasked between storage, peripherals, accelerators, and memory.

Their memory controller technology is certainly up for it, since the current biggest one is, what, 1024 bits? So, the equivalent of 16 channels.

That's for 2 dies, though. So, it's equivalent to the 512-bit memory controllers we see in a lot of server CPUs, today. Genoa is the biggest, at 768-bit.
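
For anyone who wants to check the channel math, here's a quick sketch. It assumes the usual convention of 64 data bits per DIMM channel (ECC bits not counted); the function names are just made up for illustration.

```python
# Assumption: one conventional DIMM channel carries 64 data bits (ECC excluded).
CHANNEL_BITS = 64


def equivalent_channels(bus_bits: int, channel_bits: int = CHANNEL_BITS) -> int:
    """Convert a total memory bus width in bits to an equivalent channel count."""
    if bus_bits % channel_bits != 0:
        raise ValueError("bus width is not a whole number of channels")
    return bus_bits // channel_bits


def bus_width(channels: int, channel_bits: int = CHANNEL_BITS) -> int:
    """Convert a channel count to a total memory bus width in bits."""
    return channels * channel_bits


print(equivalent_channels(1024))  # the 2-die, 1024-bit case
print(equivalent_channels(512))   # one die's worth
print(bus_width(12))              # a 12-channel server part such as Genoa
```

So 1024 bits comes out to 16 channels, 512 bits to 8, and 12 channels to 768 bits.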