Back2TheKitchen88 :

Now I honestly have no idea what to do. I know that in future AMD's drivers are gonna improve and RX 480 will most likely surpass GTX 1060 in all ways, plus it's cheaper. I think I'll get RX 480 and stay brand loyal as well. By the way does NVidia have any significant software that AMD doesn't have in order to persuade consumers?

1. There's no justification at all for the driver statement... historically there is nothing to support that.

2. But it must be said that the first thing you should include in a GFX card question is the intended resolution. The answer at 1080p is different from the answer at 1440p, which is different again at 4K. The reason you see so many posts containing misinformation on this topic is that both users and reviewers use GPU-Z or some similar tool to read what they think is VRAM usage, but it's not. No tool that reports actual VRAM usage exists.

http://www.extremetech.com/gaming/213069-is-4gb-of-vram-enough-amds-fury-x-faces-off-with-nvidias-gtx-980-ti-titan-x

GPU-Z claims to report how much VRAM the GPU actually uses, but there’s a significant caveat to this metric. GPU-Z doesn’t actually report how much VRAM the GPU is actually using — instead, it reports the amount of VRAM that a game has requested. We spoke to Nvidia’s Brandon Bell on this topic, who told us the following: “None of the GPU tools on the market report memory usage correctly, whether it’s GPU-Z, Afterburner, Precision, etc. They all report the amount of memory requested by the GPU, not the actual memory usage. Cards will larger memory will request more memory, but that doesn’t mean that they actually use it. They simply request it because the memory is available.”

If you have a VISA card with a $500 charge on it and a $5,000 credit limit, your credit report's list of liabilities will say:

VISA = $5,000, because a lender evaluating your ability to pay has to count what you could end up owing, and right now your potential liability is $5k. The VRAM tools do the same thing: they show the limit that was requested, not the charge.
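To put numbers on the credit card analogy, here's a rough Python sketch. The `Reservation` class and its field names are made up for illustration and aren't how GPU-Z or any driver works internally. It only shows the gap between what gets reported (the amount reserved) and what is actually used, using the VISA figures from above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reservation:
    """Hypothetical model: something set aside vs. something actually used."""
    reserved: float  # credit limit, or VRAM a game has requested
    used: float      # charges on the card, or VRAM the game really touches

    def reported(self) -> float:
        # An outside observer (credit report, monitoring tool) sees the reservation.
        return self.reserved

    def overstatement(self) -> float:
        # How many times bigger the reported number is than real usage.
        return self.reserved / self.used


# The VISA example: $500 charged against a $5,000 limit.
visa = Reservation(reserved=5000, used=500)
print(visa.reported())       # 5000
print(visa.overstatement())  # 10.0
```

Same idea with VRAM: the "reported" number can be way above what the game would actually need to run without a performance hit.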

While there are exceptions due to bad console ports, or lack of optimization for new game engines or the next DX version, at 1080p 2GB and 4GB perform the same in 95+% of all games.

https://www.pugetsystems.com/labs/articles/Video-Card-Performance-2GB-vs-4GB-Memory-154/

http://www.guru3d.com/articles_pages/gigabyte_geforce_gtx_960_g1_gaming_4gb_review,12.html

In the above extremetech reference, they were unable to use more than 4GB in any game at any resolution except when they raised settings (at 4K) so high that the game was unplayable. So what's the significance of a game asking for more than X GB if, when you play it at those settings, you get 14 fps? When ya lower the settings enough to get > 30 fps ... bam... you no longer need more VRAM.

Today ...

1080p: 3 GB or more is just fine

1440p: 4 GB is just fine

4K: 8 GB is just fine ..... however until we see DisplayPort 1.4 monitors and 120+ Hz screens, I don't recommend "going there"

That's not to say that there aren't games that might show a performance decrease with less than that amount, but the fact is: a) that's rare and b) the fps hit is not that significant.
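If it helps, the rule of thumb above can be written as a tiny lookup. This is just a sketch of my own numbers from this post, and `RECOMMENDED_VRAM_GB` and `vram_is_fine` are names I made up. It doesn't account for the rare exceptions mentioned.

```python
# VRAM (GB) this post calls "just fine" at each resolution.
RECOMMENDED_VRAM_GB = {"1080p": 3, "1440p": 4, "4k": 8}


def vram_is_fine(resolution: str, card_vram_gb: float) -> bool:
    """True if the card meets the post's rule of thumb for that resolution."""
    return card_vram_gb >= RECOMMENDED_VRAM_GB[resolution.lower()]


print(vram_is_fine("1080p", 3))  # True
print(vram_is_fine("1440p", 3))  # False
print(vram_is_fine("4K", 8))     # True
```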

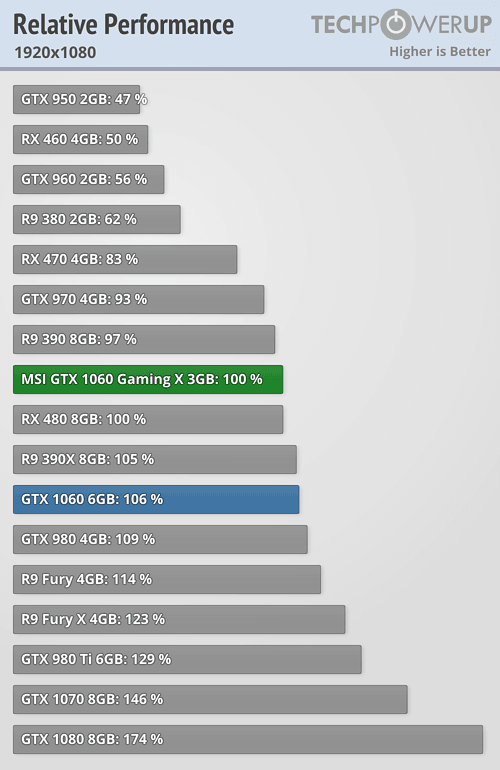

Here's the 3 GB 1060 .. with a 1080p screen, I can't see spending $220 for a 4 GB 480 ($260 for 8 GB) when ya can get a 1060 3 GB for $195

http://www.newegg.com/Product/Product.aspx?Item=N82E16814137034

As for the software / driver / other differences:

a) Both improve their drivers over time

b) Historically, nVidia does it somewhat more frequently and is much faster outta the gate with multicard profiles

c) nVidia has PhysX / AMD doesn't ... Think of it like an air conditioner in your car.... you won't get to use it all the time but when ya can, it sure does make an impression.

d) nVidia has Shadowplay, which records your gameplay (like Fraps). AMD has Game DVR, which is GVR based .... GVR works on both platforms .... edge to Shadowplay

e) There's GeForce Experience versus AMD's Gaming Evolved, and the nVidia utility is somewhat more advanced and has more options according to reviewers ... I don't get into it enough to notice.

f) Ever since the GTX 7xx / AMD 2xx series (last 3 generations) nVidia cards have had 2 to 3 times the overclocking headroom of AMD cards. If you OC your cards with Afterburner or whatever, this is huge. For example:

-1060 3GB overclocks 14.5%

-480 4GB overclocks 6.5%
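As a quick sanity check on the "2 to 3 times" claim, here's the arithmetic on those two figures in Python. The dictionary name is just something I picked. The percentages are the ones quoted above.

```python
# Overclocking headroom (%) quoted above for each card.
oc_headroom_pct = {"GTX 1060 3GB": 14.5, "RX 480 4GB": 6.5}

ratio = oc_headroom_pct["GTX 1060 3GB"] / oc_headroom_pct["RX 480 4GB"]
print(f"{ratio:.2f}x")  # 2.23x
```

So for this particular pair it lands at a bit over 2x, on the low end of that range.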

g) nVidia has G-Sync and AMD has Freesync. Both work fine with corresponding cards / monitors.... but G-Sync comes with ULMB (motion blur reduction) and Freesync does not. You can see the effects of eliminating motion blur here:

https://frames-per-second.appspot.com/