Forget about 4K?? This is the new trend. All my friends but two have upgraded to 4K... and with HDR only "perfect" on 4K panels, you don't know what you are talking about!

Also, with LG OLED TVs now supporting G-Sync, they are the way to go.

Using a 4K screen is fine (as it's more or less all you can find for televisions now), but that doesn't mean the extra pixels will make a significant difference to one's viewing experience. As far as watching video goes, aside from perhaps 4K Blu-ray discs, most video sources are compressed so heavily that the extra resolution over 1440p is largely redundant. Any 4K streaming service will appear a bit blurry on a 4K screen when viewed up close, due to the low bit-rates and their resulting artifacts. The amount of data being transferred is typically lower than even a 1080p Blu-ray, and as a result, such content tends to look rather similar on a 1440p display.
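To put the bit-rate point in rough numbers, here's a quick sketch that works out how many encoded bits are available per pixel per frame. The bitrates below are illustrative assumptions I picked for the example, not measurements of any particular service or disc, and `bits_per_pixel` is just a hypothetical helper name.

```python
def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: float) -> float:
    """Average encoded bits available per pixel per frame."""
    return (bitrate_mbps * 1_000_000) / (width * height * fps)


# Assumed, illustrative bitrates (swap in real figures for your own source).
stream_4k = bits_per_pixel(bitrate_mbps=16, width=3840, height=2160, fps=24)
bluray_1080p = bits_per_pixel(bitrate_mbps=30, width=1920, height=1080, fps=24)

print(f"4K stream:     {stream_4k:.3f} bits per pixel per frame")
print(f"1080p Blu-ray: {bluray_1080p:.3f} bits per pixel per frame")
```

Keep in mind this isn't a like-for-like quality measure, since streaming services generally use more efficient codecs than 1080p Blu-ray does. It only shows how thinly a low bitrate gets spread across a 4K frame.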

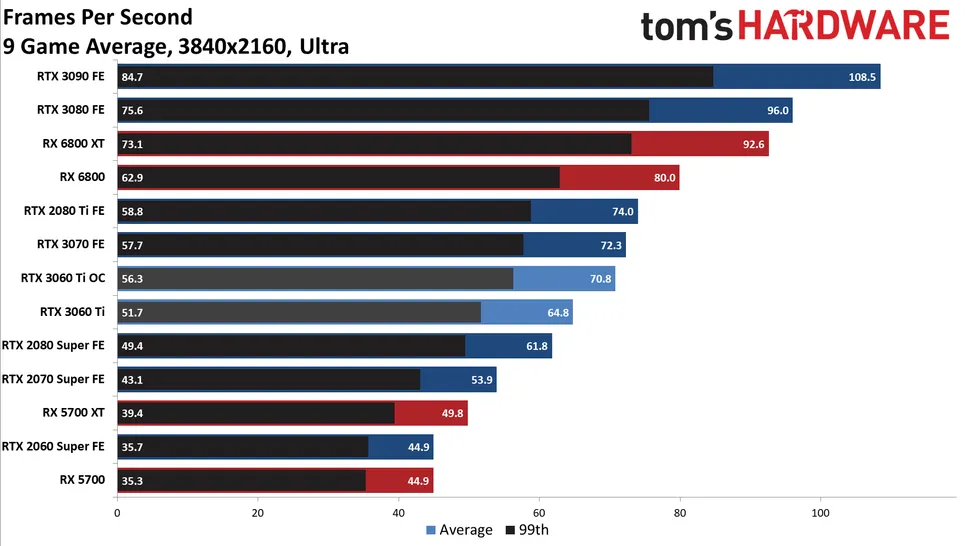

As for games, sure, you can render them at native 4K, but the performance hit is very large for what amounts to a minor increase in sharpness at typical viewing distances. Many will consider turning up the graphics options and getting higher frame rates at 1440p to arguably result in a better viewing experience. Of course, we're seeing new upscaling options gain traction that allow lower resolutions to be upscaled fairly well, and something like DLSS or AMD's alternative has the potential to make below-native resolutions look a lot better in supported games.
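For a rough sense of that performance hit, this small sketch compares how many pixels the GPU has to render per frame at each resolution. The helper names are made up for illustration, and actual rendering cost doesn't scale exactly with pixel count, so treat the ratio as a ballpark.

```python
# Standard 16:9 resolutions for the two options being compared.
RESOLUTIONS = {
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(high: str, low: str) -> float:
    """How many times more pixels `high` has than `low`."""
    return pixel_count(high) / pixel_count(low)


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels")
print(f"4K renders {pixel_ratio('4K', '1440p'):.2f}x the pixels of 1440p")
```

So native 4K means pushing 2.25 times as many pixels every frame as 1440p.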

However, that brings us to the second issue, which is the current lack of reasonably priced high-refresh 4K gaming monitors. The only 4K screens under $1000 that accept 120+ Hz input are a handful of 27" models, and that size is arguably a bit small to gain much benefit from the extra pixels. And OLED panels are still a bit questionable for heavy PC use, as they still tend to be subject to burn-in from displaying the same image for extended periods, which is part of why you still don't commonly see the technology used even in high-end PC monitors.
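To illustrate the size point, here's a quick pixel density calculation for a 27" panel at both resolutions. It's only a sketch using a hypothetical `pixels_per_inch` helper, and it assumes a flat 16:9 screen measured on the diagonal.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from the resolution and the diagonal screen size."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


for label, (w, h) in {"1440p": (2560, 1440), "4K": (3840, 2160)}.items():
    print(f'27" {label}: {pixels_per_inch(w, h, 27):.0f} PPI')
```

A 27" 1440p panel works out to roughly 109 PPI and a 27" 4K panel to roughly 163 PPI, so the 4K panel's pixels are already very small at that size.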

Eventually, high-refresh 4K panels with OLED (or similar-performing technology) will make sense for PC gaming, but I don't think we're quite there yet, at least not for anything outside of home theatre PC use, where 1440p hasn't really been marketed as an option.

Yeah, I'm not sure why a PC gaming enthusiast would want their PC to be less capable than current-gen consoles now!!

The new consoles will be making heavy use of upscaling in most games to output a "4K" signal at a reasonable performance level, just as the prior-gen "4K" consoles did. You might occasionally get native 4K in some ports of games designed for older hardware, but the actual rendering resolution of most titles will likely be around 1440p or lower, with frame rates targeting 60 or even 30fps in some cases, especially as the hardware ages and more-demanding games are developed.

And it's arguably fine that they won't often be rendering at 4K, since again, most people won't really notice much difference between 1440p and 4K while actually playing a game at typical viewing distances. The limited performance of these consoles is better put toward maintaining higher frame rates and rendering more detailed game assets rather than needlessly putting a significant amount of processing power toward making the image slightly sharper.
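If you want to check the viewing-distance argument for your own setup, one rough way is to estimate pixels per degree of your field of view. The sketch below assumes a flat 16:9 screen viewed head-on, and the function names and example sizes are my own illustrative choices. A commonly cited rule of thumb is that around 60 pixels per degree matches 20/20 vision, though that's only an approximation and people vary.

```python
import math


def screen_width_in(diagonal_in: float, aspect=(16, 9)) -> float:
    """Physical width of a flat screen from its diagonal and aspect ratio."""
    aw, ah = aspect
    return diagonal_in * aw / math.hypot(aw, ah)


def pixels_per_degree(width_px: int, diagonal_in: float, distance_in: float) -> float:
    """Average horizontal pixels per degree of field of view."""
    width_in = screen_width_in(diagonal_in)
    fov_deg = math.degrees(2 * math.atan(width_in / (2 * distance_in)))
    return width_px / fov_deg


# Hypothetical setups: a 27" monitor at arm's length, a 55" TV from the couch.
for label, diagonal, distance in [('27" at 30 in', 27, 30), ('55" at 96 in', 55, 96)]:
    ppd_1440 = pixels_per_degree(2560, diagonal, distance)
    ppd_4k = pixels_per_degree(3840, diagonal, distance)
    print(f"{label}: 1440p = {ppd_1440:.0f} ppd, 4K = {ppd_4k:.0f} ppd")
```

The further back you sit, the higher both figures get, and once the 1440p number is already near or past what your eyes can resolve, the extra 4K pixels have little left to add.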