My personal opinion is the 3050 is better than the 2060, uses better DLSS, better RTX, and gives a better 1080p gaming experience, regardless of a couple fps difference I can't see or tell exist in anything except a benchmark.

Better RTX and DLSS? Maybe if they didn't drastically reduce the Tensor and RT core counts. The original 2060 had 30 RT cores and 240 Tensor cores, while the 3050 drops those to 20 RT cores and 80 Tensor cores. So any per-core performance gains are negated by the reduction in core count.
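To put rough numbers on that, here's a quick back-of-the-envelope sketch. It assumes throughput scales linearly with core count at equal clocks, which is a simplification rather than how real workloads behave, and the function name is just something made up for illustration.

```python
def break_even_uplift(old_cores: int, new_cores: int) -> float:
    """Per-core speedup the newer card needs to match the older card's total,
    assuming throughput scales linearly with core count (a simplification)."""
    return old_cores / new_cores

# Core counts as quoted above: 2060 = 30 RT / 240 Tensor, 3050 = 20 RT / 80 Tensor
rt_uplift = break_even_uplift(30, 20)
tensor_uplift = break_even_uplift(240, 80)

print(f"RT cores: {rt_uplift:.2f}x per core to break even")
print(f"Tensor cores: {tensor_uplift:.2f}x per core to break even")
```

Under that assumption, each 3050 RT core would need to be about 1.5x as fast, and each Tensor core about 3x as fast, just to pull even with the 2060.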

In terms of real-world performance, TechPowerUp's benchmarks show the 2060 6GB performing 13% faster on average across 25 games at 1080p, 14% faster at 1440p, and 15% faster at 4K, despite the VRAM deficiency...

https://www.techpowerup.com/review/evga-geforce-rtx-3050-xc-black/31.html

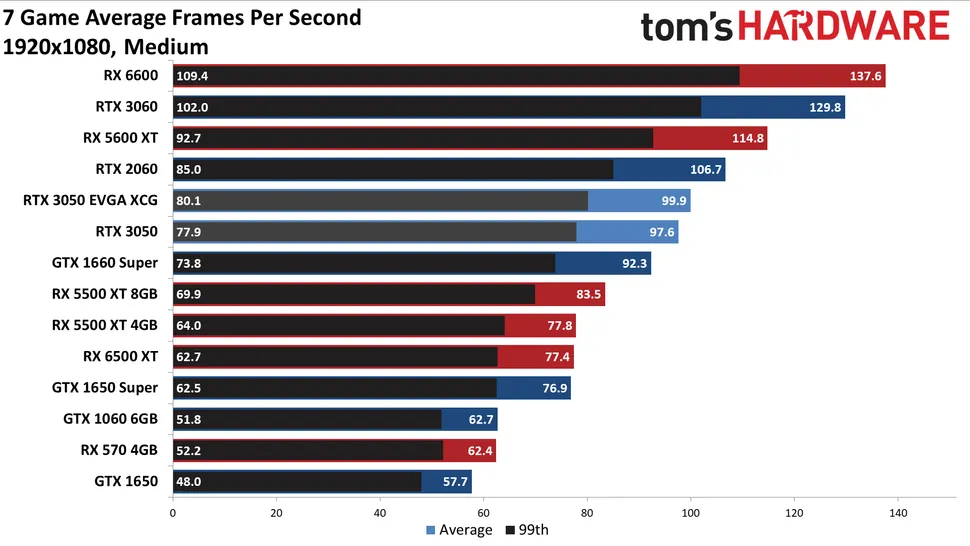

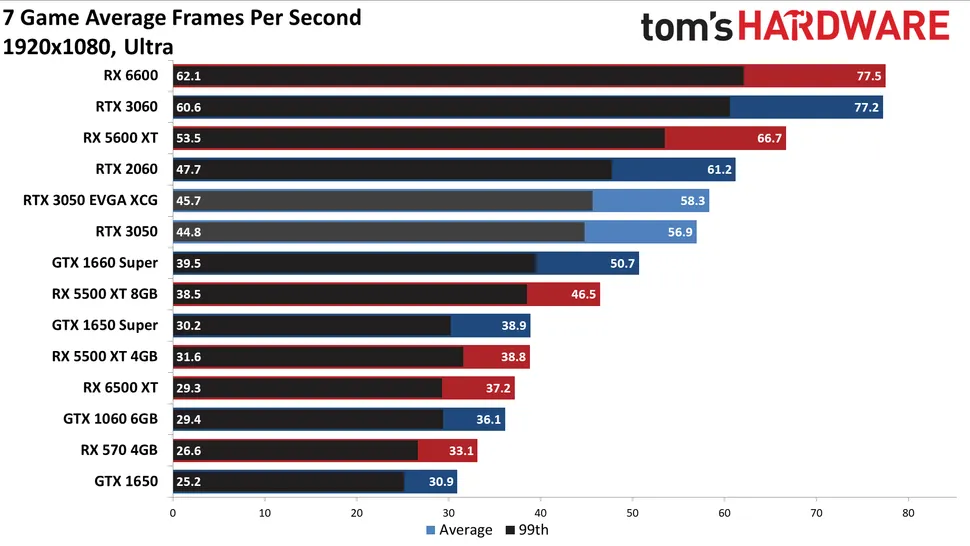
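Since "X% faster" and "X% slower" aren't the same number, here's a small sketch that flips the averages quoted above around to show the gap from the 3050's side. The helper name is hypothetical, and it only does arithmetic on the three percentages mentioned above, nothing pulled from the charts themselves.

```python
def relative_deficit(pct_faster: float) -> float:
    """If card A is pct_faster % faster than card B,
    return how many % slower B is than A."""
    return (1 - 1 / (1 + pct_faster / 100)) * 100

# 2060's average lead over the 3050, as quoted above
for res, pct in [("1080p", 13), ("1440p", 14), ("4K", 15)]:
    print(f"{res}: 3050 is about {relative_deficit(pct):.1f}% slower than the 2060")
```

So a 13% lead for the 2060 works out to the 3050 being roughly 11.5% slower, not 13% slower.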

OC cards only improve the 3050's performance by around 1-2%. And since they only gave it 8 PCIe lanes, it loses 1-2% of its performance when installed in a PCIe 3.0 motherboard. The card's performance is closer to that of the 1660 SUPER than the 2060, at least outside RT and DLSS, which the 16-series lacks.

As for RTX and DLSS, they showed the performance hit of enabling raytracing to be relatively similar for both cards. The 2060 still performed slightly better on average with RT enabled, outside of DOOM Eternal, which was the only example where the 2060's 6GB of VRAM resulted in a significant performance hit with raytracing turned on. And both saw a relatively similar performance uplift with DLSS enabled as well. The 3050 might manage a slightly better performance increase when upscaling with DLSS, and slightly less of a hit from RT, but generally still not enough to take the lead over the 2060's DLSS and RT performance.

That's not to say the 3050 is a bad card, but as far as performance goes, it's pretty much a slightly faster 1660 SUPER with more VRAM, DLSS and some limited RT capabilities. Even at its $250 MSRP, it felt a bit underwhelming to me, considering the 1660 SUPER was offering similar performance in most games at a $230 price point more than two years prior to its release. For its higher price, there's no reason why its performance shouldn't have at least matched or exceeded that of the 2060, which came out over 3 years ago. And current market pricing has made it a significantly worse value than what prior cards offered, though that's been the case for all cards the last year or so.

For general gaming, and for all intents and purposes, a 1070, 1660 Ti, 2060 and 3050 all land in more or less the same place, the fps differences being so minor as to not realistically mean anything.

I would generally agree, and the 3050 and 2060 get the added benefit of RT and DLSS that arguably gives them an edge above the rest. But the 1660 SUPER also performs very similarly to the 1660 Ti and 1070, and launched a couple years prior to the 3050 at a lower suggested price. So, even at the cards' intended MSRPs, the 3050 wasn't really bringing more performance to the table than what one could have bought two years prior, outside the features that trickled down from the 2060. And while that level of RT hardware is better than none at all, it's still a bit questionable how useful that will be going forward, as the 2060 was already only borderline usable for RT, and the 3050 doesn't really improve upon that, despite coming out a few years later.

As for the power draw difference, the stock 2060 was officially a 160 watt card, while the stock 3050 is a 130 watt card, so it's not exactly a huge difference, and OC versions of either will likely drive power levels higher, despite not improving performance to any perceptible degree. Though the power listed for that particular overclocked 2060 seems inaccurate, since Tom's only found it to draw around 180-190 watts in their review.