I wouldn't expect much of a "final review" on something that will likely become an internal driver detail nobody actually needs to know about besides hardware developers and driver/firmware writers.

I would still wait until AMD's solution and Nvidia's are fully reviewed by a third party before making a final decision on this.

News Nvidia Says Feature Similar to AMD's Smart Access Memory Tech is Coming to Ampere


I wouldn't expect much of a "final review" on something that will likely become an internal driver detail nobody actually needs to know about besides hardware developers and driver/firmware writers.

True, but I still want to see how the final products perform once the hardware is out on AMD's side and Nvidia has enabled it on theirs.

digitalgriffin

Splendid

There was a comment on Phoronix forums a while ago about it and yes, it's about modifying BAR. This however requires BIOS and kernel support, and wasn't possible in Windows for quite a while.

AMD enabled the feature on Windows when they had enough control to make sure it works, they never said it was a feature only they could support.

Yes, one could ask why Microsoft/Intel/Nvidia never came together to make it possible before... Oh wait.

Edit: typo

Yet it requires a 500 series chipset and a Ryzen 5000 chip, even though there's little difference between it and the 3000 series I/O die.

They wouldn't be artificially trying to promote sales through a feature that is already there, would they? It makes AMD look like Nvidia in tactics. Honesty and value are two of the reasons I stayed loyal to AMD for years.

SAM is marketing...😉 At best--at the very best possible--AMD says no more than 6%. Likely that's at 1080P--probably 0% at 4k. I'm definitely going with an RX-6800XT just as soon as I can get my hands on one, for my 4k gaming, but it's difficult to blame AMD for attempting to squeeze out the last drop of marketing blood from Zen 3 and RDNA 2. The perceptual problem for nVidia here is that since it doesn't manufacture x86 CPUs of any kind it remains to be seen what nVidia is going to do about this...😉 IMO, nVidia has been far more CPU dependent than ATi/AMD going back as far as 2002--so it won't surprise me at all to see that AMD is now also leveraging its CPUs to add as much discrete GPU performance as possible!

Bottom line: SAM is marketing, mainly, and nothing wrong with that! AMD is manufacturing and selling both CPUs and GPUs, and nVidia is not--and AMD is using SAM marketing to accentuate this advantage, imo.


digitalgriffin

Splendid

As has already been said, it's really convenient that Nvidia now claims it has had this capability all along (assuming all of this is fact), and that NOW that AMD is enabling it, Nvidia will too. But will the scalpers scoop up this feature before we get it? lol. They RUSHED Ampere to beat AMD, and now all of a sudden it turns out they had an equivalent feature to SAM all along and were just too greedy or lazy to implement it... no thanks.

I switched over to the "Nvidia camp" with the GTX 10 series, but honestly, between the botched RTX 20 AND 30 series launches as well as their apparent greed with pricing... I've had enough. I already have a Ryzen 5600X CPU and am awaiting the 6800 XT launch. I'm supporting AMD for now.

It's a matter of universal compatibility and tech support cost. Nvidia wasn't worried about that extra 5% until AMD started biting at their heels.

FreeSync exists because of G-Sync. CrossFire exists because of SLI/NVLink.


digitalgriffin

Splendid

SAM is marketing...😉 At best--at the very best possible--AMD says no more than 6%. Likely that's at 1080P--probably 0% at 4k. I'm definitely going with an RX-6800XT just as soon as I can get my hands on one, for my 4k gaming, but it's difficult to blame AMD for attempting to squeeze out the last drop of marketing blood from Zen 3 and RDNA 2. The perceptual problem for nVidia here is that since it doesn't manufacture x86 CPUs of any kind it remains to be seen what nVidia is going to do about this...😉 IMO, nVidia has been far more CPU dependent than ATi/AMD going back as far as 2002--so it won't surprise me at all to see that AMD is now also leveraging its CPUs to add as much discrete GPU performance as possible!

Bottom line: SAM is marketing, mainly, and nothing wrong with that! AMD is manufacturing and selling both CPUs and GPUs, and nVidia is not--and AMD is using SAM marketing to accentuate this advantage, imo.

6% is still 6% to some. It's more beneficial for large datasets or packed datasets (textures, hash sets, etc.) than anything.

Considering that AMD is now charging a premium, they need to justify that premium as top tier. Any little slip will hurt. Remember, AMD also has a long history of stumbles, so any loss will be viewed as "typical". Personally I think they jumped the fence too soon. They aren't there yet.

But at the same time I can't fault AMD for trying to maximize profit margins with limited supply before Rocket Lake and the 3080 Ti bring back competition.

Well, Nvidia says its SAM equivalent works on Intel CPUs. If Nvidia can do it on Intel, then I'd strongly suspect older AMD CPUs are one AGESA update from getting support too should AMD decide to make it so.

Yet it requires a 500 series chipset and a Ryzen 5000 chip, even though there's little difference between it and the 3000 series I/O die.

They wouldn't be artificially trying to promote sales through a feature that is already there, would they?

mitch074

Splendid

AFAIK BAR memory size changes have been supported for quite a while on Linux - probably for storage arrays. I don't think an AGESA update would be needed, and technically it should work on any PCI-e revision, however for graphics work it may only really be beneficial with PCI-e 4.0 and faster - thus the requirement for a 5xx series chipset.

Well, Nvidia says its SAM equivalent works on Intel CPUs. If Nvidia can do it on Intel, then I'd strongly suspect older AMD CPUs are one AGESA update from getting support too should AMD decide to make it so.

Now, I wouldn't be surprised if AMD decided to backport support for this technology to other hardware (if it isn't already the case but locked up behind a software switch) - it IS an open specification after all - but maybe they don't want to enable it driver-side until they've tested it as rock stable.

Remember with RX5xxx how everybody was up in arms because of driver instabilities? What would happen, do you think, if they enabled this feature for everybody and BSODs started appearing all over the place?

Yeah - I wouldn't enable it by default either. Maybe I'd have it deactivated by default, needing a registry setting to switch on. If, on top of that, my main CPU competitor made it so that enabling the feature needed a different CPU instruction than my own, I'd really, REALLY look twice before enabling it.
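For anyone who wants to see what BAR sizes their own card currently exposes on Linux, here is a rough sketch. It assumes the usual sysfs layout where each PCI device has a `resource` file with one `start end flags` line (in hex) per region. The sample text and the helper names `parse_bar_sizes` and `read_bar_sizes` are made up for illustration, and the device address you pass in is whatever `lspci` reports on your machine.

```python
from pathlib import Path


def parse_bar_sizes(resource_text):
    """Return {region_index: size_in_bytes} for the non-empty regions.

    Expects the text of a sysfs PCI `resource` file: one line per region,
    three hex fields (start, end, flags). Unused regions are all zeros.
    """
    sizes = {}
    for index, line in enumerate(resource_text.splitlines()):
        fields = line.split()
        if len(fields) != 3:
            continue  # skip anything that does not look like a region line
        start, end, _flags = (int(field, 16) for field in fields)
        if start == 0 and end == 0:
            continue  # region not in use
        sizes[index] = end - start + 1  # end address is inclusive
    return sizes


def read_bar_sizes(device_address):
    """Read the regions of a real device, e.g. "0000:0b:00.0" (hypothetical)."""
    path = Path("/sys/bus/pci/devices") / device_address / "resource"
    return parse_bar_sizes(path.read_text())


# Made-up sample: a 256 MiB window, an unused region, and a 2 MiB region.
SAMPLE = """\
0x00000000e0000000 0x00000000efffffff 0x000000000014220c
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x00000000f0000000 0x00000000f01fffff 0x000000000014220c
"""

if __name__ == "__main__":
    for index, size in parse_bar_sizes(SAMPLE).items():
        print(f"region {index}: {size // (1024 * 1024)} MiB")
```

A card still running with the classic small aperture would typically show a 256 MiB region here, while a resized BAR would show a region roughly the size of the card's VRAM.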

TerryLaze

Titan

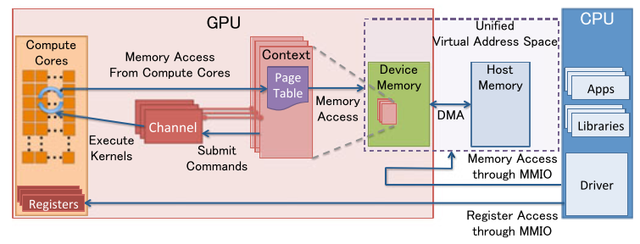

Then what was your original point here? That SAM is not using a driver anymore? That it is based on just blindly copying GBs of data as one piece to the GPU starting at address $0 and stopping when the data ends no matter if the VRAM is full or not?

The GPU does not need to know anything, keeping tabs on what memory is used for what or free is the drivers' job.

Before:

1- check if the VRAM IO hits the currently active 256MB GPU VRAM memory page

2- send commands to the GPU to move the IO window to the target VRAM memory range when the window is currently at the wrong address

3- wait for the command to complete

4- read/write

5- rinse and repeat for every VRAM IO, threads have to take turns accessing VRAM when they need access to different pages

Now:

1- read/write
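To put the before/now steps above into something you can poke at, here is a toy model, not real driver code. It assumes a single 256MB window that has to be re-pointed whenever an access lands outside it, and just counts how often that happens. The class and function names are invented for the example.

```python
WINDOW_SIZE = 256 * 1024 * 1024  # the 256MB visible VRAM page from the steps above


class WindowedVram:
    """Toy model of CPU access through one movable 256MB window."""

    def __init__(self):
        self.window_base = 0  # window starts at the bottom of VRAM
        self.remaps = 0       # how many times the window had to be moved

    def access(self, address):
        # Step 1: does the access hit the currently mapped window?
        base = address - (address % WINDOW_SIZE)
        if base != self.window_base:
            # Steps 2-3: move the window (a real driver would wait on the GPU here)
            self.window_base = base
            self.remaps += 1
        # Step 4: the read/write itself, at this offset inside the window
        return address - base


def count_remaps(addresses):
    """Count window moves needed to service a sequence of VRAM addresses."""
    vram = WindowedVram()
    for address in addresses:
        vram.access(address)
    return vram.remaps


if __name__ == "__main__":
    one_gib = 1 << 30
    ping_pong = [0, one_gib, 0, one_gib]  # two buffers far apart in VRAM
    print("windowed remaps:", count_remaps(ping_pong))
    print("flat BAR remaps: 0 (nothing to move, every address is mapped)")
```

With a resized BAR covering all of VRAM, the remap branch simply never triggers, which is the whole "Now: 1- read/write" point.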

No one said SAM is usable only on the x570 platform. AMD stated that any 500 series motherboard will do. Please fix, that's misleading.

Murissokah

Distinguished

It's a matter of universal compatibility and tech support cost. Nvidia wasn't worried about that extra 5% until AMD started biting at their heels.

FreeSync exists because of G-Sync. CrossFire exists because of SLI/NVLink.

Yeah, this is how I feel about it too. They never bothered to do it and now that AMD has they are playing catch up. As for the marginal gain, there's a big challenge in making good use of this tech without making it a requirement. Games like Fallout 4 that are heavily influenced by memory could definitely harness this to improve performance, but how would it perform for the vast majority of people who don't have SAM? In consoles it makes a lot of sense to optimize for this, but on PC it's compatibility hell.

Got to love when one company brags about marketing brand-new features that are merely a firmware tweak away from getting matched by every other hardware manufacturer that has the necessary flexibility (maximum BAR mask size in this case) built into older products, just not exposed yet for whatever reason.

Probably much wiser to wait and see before commenting on hearsay. Reviewers have yet to test AMD's implementation (which clearly works), and as for Nvidia's 'reply' - are we really surprised? Much, much more concerning... for Nvidia to brag about enabling this feature through a simple firmware update does imply a not insignificant level of sandbagging.

I'd like to think a wide spectrum of people will be interested in how this develops. So, once again... let's wait and see before stating assumptions as fact.

I wouldn't expect much of a "final review" on something that will likely become an internal driver detail nobody actually needs to know about besides hardware developers and driver/firmware writers.

The performance improvement AMD shows is not insignificant. Everyone will/should be interested in the introduction benchmarks of this.


There was a comment on Phoronix forums a while ago about it and yes, it's about modifying BAR. This however requires BIOS and kernel support, and wasn't possible in Windows for quite a while.

AMD enabled the feature on Windows when they had enough control to make sure it works, they never said it was a feature only they could support.

Yes, one could ask why Microsoft/Intel/Nvidia never came together to make it possible before... Oh wait.

Edit: typo

Resizable BAR support / 04/20/2017

https://docs.microsoft.com/en-us/windows-hardware/drivers/display/resizable-bar-support

SAM is marketing...😉 At best--at the very best possible--AMD says no more than 6%. Likely that's at 1080P--probably 0% at 4k. I'm definitely going with an RX-6800XT just as soon as I can get my hands on one, for my 4k gaming, but it's difficult to blame AMD for attempting to squeeze out the last drop of marketing blood from Zen 3 and RDNA 2. The perceptual problem for nVidia here is that since it doesn't manufacture x86 CPUs of any kind it remains to be seen what nVidia is going to do about this...😉 IMO, nVidia has been far more CPU dependent than ATi/AMD going back as far as 2002--so it won't surprise me at all to see that AMD is now also leveraging its CPUs to add as much discrete GPU performance as possible!

Bottom line: SAM is marketing, mainly, and nothing wrong with that! AMD is manufacturing and selling both CPUs and GPUs, and nVidia is not--and AMD is using SAM marketing to accentuate this advantage, imo.

This is an unbelievably shocking statement and to present it in a tech forum, where FPS/performance is so revered, is plain bizarre...the gains AMD have shown are NOT insignificant. I have to conclude that, as Nvidia have done multiple times in the past - and are still doing - that you are being paid to say this.

mitch074

Splendid

It wouldn't be the first time that Windows enabled a feature no driver wanted to use.

Resizable BAR support / 04/20/2017

https://docs.microsoft.com/en-us/windows-hardware/drivers/display/resizable-bar-support

Also, as said before, activating it differs depending on the CPU, making it rather tricky to use.

Most people have already made their choice based on existing performance figures. Whatever gains BAR-resize may or may not provide won't be of much significance until the next generation where it will be baked into launch-day results and likely already forgotten.

The performance improvement AMD shows is not insignificant. Everyone will/should be interested in the introduction benchmarks of this.

PapaCrazy

Distinguished

Let's see independent testing that absolutely, positively, 100% proves these "smart access" features don't induce micro-stutter. The FPS numbers aren't everything.

They could never get two GPUs using the same exact architecture, memory, speeds, etc. to play nice over PCI... I am not at all convinced they can do it between two distinct platforms and processors. Even with PCI 4.


Resized BARs eliminate the need for GPU drivers to move the visible VRAM page around for the CPU to read/write GPU memory. It is practically inconceivable that giving the CPU flat access to the entire VRAM address space would lead to worse performance than forcing the CPU to go through extra steps to tell the GPU to re-map a 256MB window around VRAM before each read/write every time drivers need access to a different chunk of VRAM.

Let's see independent testing that absolutely, positively, 100% proves these "smart access" features don't induce micro-stutter. The FPS numbers aren't everything.

The only downside I can imagine is the flat(ter) memory layout making it more difficult to secure sensitive data in VRAM.

I believe resizable BAR is more of a workstation feature. The bandwidth gain is great for stuff like data processing. I think this is one of those things Nvidia left out to prevent people from using gaming cards for professional work.

If NVidia had this capability all along I feel this begs the question of why they had to wait for AMD to implement it. Looks bad either way.

But now AMD is putting it in, and even using it to compete with Nvidia's best gaming card, the 3090. That kind of forces their hand on the matter. And I believe they couldn't just turn it on either. It'll be next year before the feature is ready for consumers.

I believe AMD did say something like SAM will gain more performance if game devs take more advantage of it. And then there's also FidelityFX Super Resolution vs DLSS. So in this graphics card generation we won't see the full picture until well into next year, I'm guessing.


To repeat what others, and myself, have said - since there's very clearly a drive to pacify this - virtually all consumers (and reputable tech sites!!) deeply care about 'price to performance', particularly important, clearly, in a competitive environment. Price is important. Performance is important - for many obvious and not so obvious reasons...

--> Any attempt to dumb this down is, quite frankly, shocking, and places a flashing "I am a paid writer" above their head.

We already know the significant benefits of AMD's approach. We now wait, with obvious great interest, for Nvidia's approach - and if we do indeed find that their 'feature' could have been turned on in the past, we'll then have a few more words to say... of that you can most certainly rest assured!!

Let's wait for proper testing before posting more of Nvidia's firefighting.


All this proves what I have been thinking for years, NVIDIA doesn't care about consumers at all. Just trying to screw the consumer whenever possible to maximize profits. AMD is far better at bringing technology to the consumer and enhancing its product line.

Got to love when one company brags about marketing brand-new features that are merely a firmware tweak away from getting matched by every other hardware manufacturer that has the necessary flexibility (maximum BAR mask size in this case) built into older products, just not exposed yet for whatever reason.

mitch074

Splendid

Not one, three - the CPU firmware, the BIOS/UEFI and the VBIOS. Three sensitive pieces of firmware that need to work together along with the OS to work without blue screens.

Got to love when one company brags about marketing brand-new features that are merely a firmware tweak away from getting matched by every other hardware manufacturer that has the necessary flexibility (maximum BAR mask size in this case) built into older products, just not exposed yet for whatever reason.

Regardless of how fast the VRAM is, bandwidth to the CPU would be limited by PCIe bandwidth (32 GB/s for PCIe 4.0 x16). So it'd have less bandwidth than dual channel DDR4-3200 (51.2 GB/s), and worse latency.

The point of Smart Access Memory is so that applications can fully address the entire VRAM buffer, it's precisely what it does. I understand it does not affect Infinity Fabric frequency (in fact I never said that), but adding faster memory means you can feed the CPU faster and AMD has shown the gains themselves. Higher VRAM bandwidth from GDDR6X should improve on these gains.

As for the RX6000 the on-die cache is a GPU cache and SAM memory operations are handled by the CPU. The GPU cache cannot cache operations that were not processed by it. Notice how AMD never mentions the cache and repeatedly talks about the "high bandwidth GDDR6 memory" when talking about SAM. For reference:

Murissokah

Distinguished

Regardless of how fast the VRAM is, bandwidth to the CPU would be limited by PCIe bandwidth (32 GB/s for PCIe 4.0 x16). So it'd have less bandwidth than dual channel DDR4-3200 (51.2 GB/s), and worse latency.

You're right, I hadn't considered that. In this case, it only seems useful in cases you'd expect the system RAM to be saturated, as they can work in tandem.
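For what it's worth, the two bandwidth figures quoted above can be reproduced with back-of-the-envelope arithmetic. This sketch assumes PCIe 4.0 runs at 16 GT/s per lane with 128b/130b encoding and that each DDR4 channel is 64 bits (8 bytes) wide. The function names are made up, and the results are theoretical peaks per direction, not measured throughput.

```python
def pcie_bandwidth_gb_s(transfer_rate_gt_s, lanes, encoding_efficiency=128 / 130):
    """Theoretical one-direction PCIe bandwidth in GB/s.

    Each transfer carries one bit per lane; 128b/130b encoding (PCIe 3.0+)
    means 128 of every 130 bits are payload.
    """
    return transfer_rate_gt_s * encoding_efficiency * lanes / 8


def ddr_bandwidth_gb_s(mega_transfers_per_s, channels, bytes_per_transfer=8):
    """Theoretical peak DDR bandwidth in GB/s (64-bit channel = 8 bytes)."""
    return mega_transfers_per_s * bytes_per_transfer * channels / 1000


if __name__ == "__main__":
    pcie4_x16 = pcie_bandwidth_gb_s(16, lanes=16)
    ddr4_3200_dual = ddr_bandwidth_gb_s(3200, channels=2)
    print(f"PCIe 4.0 x16:           {pcie4_x16:.1f} GB/s")
    print(f"DDR4-3200 dual channel: {ddr4_3200_dual:.1f} GB/s")
```

The PCIe number comes out slightly under the round 32 GB/s because of the encoding overhead, but the conclusion is the same: the link to the card is the bottleneck, not the GDDR6 behind it.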
