Will the Ryzen 3000 series have 12 cores/24 threads or 16 cores/32 threads? That's all I've been seeing... all the computer news articles seem to be following it... Is it true, or is it going to be 8 cores/16 threads again?

> Uhhh... It looks like AMD's CSGO 9900K number pretty much perfectly matches the 1080p high AVG in your chart.

While 1080p medium runs 100 FPS faster, do you have any understanding of what benchmarks are?

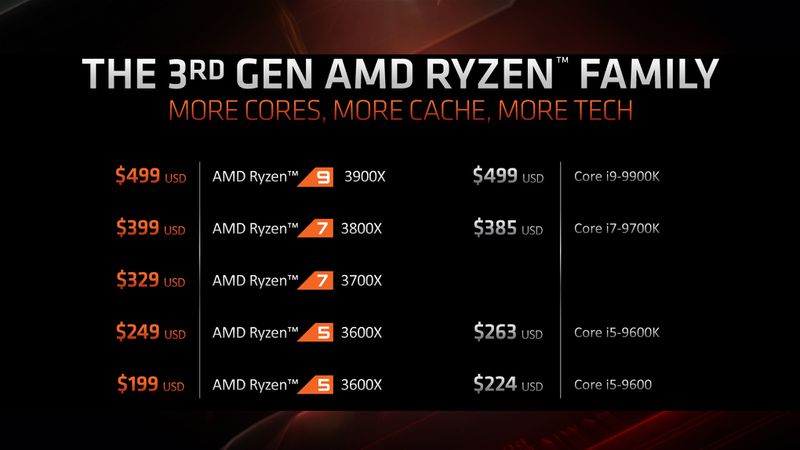

> The Ryzen 3900X was announced and will release with 12 cores and 24 threads. If this becomes mainstream, it will have tripled the high-end mainstream gamer's core count.

Pick a price point and stick with it. You can't make an apples-to-apples comparison if you allow the retail price to double between generations, since inflation is nowhere near 100% and chips of a given complexity get cheaper to make as fab processes shrink and mature.

How many people think of CPUs much beyond $300 as mainstream? I suspect those are well beyond most people's pain threshold.

> I meant "mainstream" as in a mainstream platform. The 9900K is mainstream in mind for higher-end gamers.

More like enthusiast high-end on a mainstream platform, similar to those $1000 CPUs from 10-20 years ago. Yes, they existed, but they made absolutely no sense for 99.9% of people.

> There's a friggen trend there for Intel, but now they don't have the fabbing advantage from before, so... I'm not sure what will happen this time around.

If the Intel roadmap leaks are accurate, we already know what is going to happen this time around: Intel will mostly sit out the low-end and mainstream until its 7nm process goes to mass production in 2021, assuming all goes well.

> Wow, 12/24 vs 8/16 and AMD still loses or only gets the same result in all benches except for CSGO, where the results do not seem to correspond to what the 9900K is actually capable of.

Yup, Intel CPUs can keep up in gaming even though they were made a few years ago and have security issues (for which Windows gives users the choice to disable the mitigations if they believe they are not at risk, as there are no known attacks on their computers).

Some guys forget that we get a 12C24T at the price of Intel's 8C16T, and they are still saying that Intel does better in games, which will clearly be more or less on par based on the claimed IPC improvements and clock speeds, as we will soon find out.

Anyway, preferring the 9900K once the 3900X is available is highly unlikely and pretty much pointless unless Intel reduces its price.

Unfortunately everybody has their own opinions.

I just heard that the benchmark figures AMD showed were run with none of the latest mitigation patches for Intel, so the Intel CPUs were helped a lot there.

Also, the benchmarks were done before the latest Windows patch that optimized Windows for Ryzen.

Meaning that performance may be slightly better than expected.

I remember my K6-3/450 costing $400 or so in 1998 or so, and I certainly remember when the P2/350 finally fell to $400... And I remember AMD asking $1000 for its latest Socket 939 or 940 FX-57 processor in 2005 or 2006...

Once in a while deals come along...the Celeron 300A, the 2600K, and possibly the 3600X and 3800X, etc... !

In gaming, the 7700K is on par with the 9900K; the number of cores doesn't help much there.

While you're not wrong, now more than ever before, those roadmaps need to change so Intel can stay relevant in the consumer market. I wonder if they really care that much, but I'm absolutely sure they don't want to lose face.

So we can expect little more than 14nm refreshes with additional hardware mitigations and minor improvements baked in for the next two to three years from Intel.

> Think about it this way: given the "history" ("tale" is a better fit, lol) of ThreadRipper, why can't Intel do something similar? They own the fabs, they own EMIB and, more importantly, they already have a decent design to use.

Going with EMIB and multi-chip solutions wouldn't fix the underlying issue that Intel can't push 14nm much further than it already has, and chiplets wouldn't change that in any meaningful way at this point. Chiplets make more sense on young manufacturing processes where the yield spread is wide and the defect rate is too high for large chips. Intel's yields on 14nm are likely high enough that chiplets would provide little to no benefit for the increased manufacturing costs, even more so if Intel made 8C16T chiplets, of which its entire current mainstream portfolio would only have one anyway, so there would be no chiplet binning and matching benefit there.

While Intel could hypothetically bring Sunny Cove to 14nm, the extra complexity that gave Sunny Cove (Icelake) its ~18% IPC improvement would likely translate to 500+MHz lower clocks on 14nm (possibly on top of the already 300MHz lower clocks on 10nm), so this isn't a particularly viable option either.

Then you'd also have the issue of TDP getting out of hand as more cores get added. I doubt that many people would look favorably at 150-200W 12-16 core Intel chips that barely keep up with AMD's 105W parts.

I'd make an argument in favor by saying that 7nm for AMD has worked BECAUSE they moved a lot of stuff out of the main SoC and just left the CCXes on their own. Intel already has 10nm working (I'll give them the benefit of the doubt), so simplifying the SoC elements for 10nm and leaving the IO stuff in an optimized 14nm node would work perfectly well IMO. So, as you say, "young manufacturing process" fits perfectly.

The only viable solution to Intel's 14nm brick wall problem is breaking through to 10nm and beyond. The rest does not make sense without it.

> Lisa said that their engineers thought clocks would go down when they went to 7nm. Their clocks of higher-end CPUs actually went up by a fair margin over 12 or 14nm.

Not a bad thing to point out, but as I said in the other thread where we were talking about this, it could be a positive side effect of simplifying the die and removing silicon that just doesn't add to what the cores should actually be working on (and this is also part of my argument for removing the iGPU; it all fits, somehow 😛). Remember that anything that competes for space on the die and is not directly doing "core" stuff is taking away speed potential, so to speak. There's a big load of stuff you can get rid of that will allow you to clock higher, I'm sure. That is to say, I'm sure that if Zen 2 didn't use chiplets, they would not have been able to clock them so high.

Intel's 10nm mobile clocks are lower than 14nm mobile CPUs. If their desktop clocks go down as well, it won't be good unless IPC or some other performance metric increases a lot. That's about as positive as I can be.
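As a rough back-of-the-envelope check on the clocks-versus-IPC trade-off, per-core performance can be approximated as IPC times clock. The sketch below plugs in the ~18% IPC gain and the 300-500MHz clock losses mentioned earlier in the thread; the 5.0 GHz baseline and the function names are assumptions made up for illustration, not measured data.

```python
def relative_performance(ipc_gain: float, base_clock_ghz: float, clock_loss_ghz: float) -> float:
    """Approximate per-core performance relative to the old chip (1.0 = equal).

    Uses the simplification performance ~ IPC x clock and ignores memory,
    boost behavior, and everything else that matters in real workloads.
    """
    new_clock_ghz = base_clock_ghz - clock_loss_ghz
    return (1.0 + ipc_gain) * new_clock_ghz / base_clock_ghz


def break_even_clock_loss(ipc_gain: float, base_clock_ghz: float) -> float:
    """Clock loss (in GHz) at which the IPC gain is exactly cancelled out."""
    return base_clock_ghz * (1.0 - 1.0 / (1.0 + ipc_gain))


BASE_CLOCK_GHZ = 5.0  # assumed baseline clock, for illustration only
IPC_GAIN = 0.18       # the ~18% IPC improvement quoted in the thread

# 300 MHz, 500 MHz, and both losses stacked on top of each other
for loss_ghz in (0.3, 0.5, 0.8):
    rel = relative_performance(IPC_GAIN, BASE_CLOCK_GHZ, loss_ghz)
    print(f"-{loss_ghz * 1000:.0f} MHz -> {rel:.3f}x the old per-core performance")

print(f"break-even clock loss: {break_even_clock_loss(IPC_GAIN, BASE_CLOCK_GHZ):.2f} GHz")
```

With these assumed numbers, a 500MHz drop still leaves a small net gain, while the stacked 800MHz drop puts the new chip slightly behind the old one.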

Intel's 10nm might be working, but not well enough for Intel to risk chips larger than quad-cores (don't remember seeing anything beyond that on roadmaps even for servers), not well enough to make mainstream chips for more than mobile and embedded and not well enough to achieve the same or higher clock frequencies as previous-gen parts achieved. Intel's 10nm isn't ready to compete against anything other than low power, which is why it is missing from all other segments on roadmaps.

While I don't disagree, remember that those 10nm CPUs they're trying to churn out are still "full SoC" instead of the chiplet approach. There's a big difference between the two, so it's not a trivial thing to note.

This is a lot like Broadwell: an entire generation dedicated to low power because the process isn't up to speed for anything else yet. Except this time around, Intel will be 2-3 years behind everyone else on process tech instead of 2-3 years ahead so Intel can't afford spending years on refining 10nm. Intel needs to put all the 10nm money it hasn't spent already on rushing to 7nm to catch up, which means no more 10nm fabs than necessary to meet 10nm commitments and no capacity to spare for mainstream chips.

Yes, I understand how benchmarks work. Do you? Where is this benchmark that shows that Zen 2 is 100 FPS slower at 1080p medium? All we have for Zen 2 benchmarks is AMD's at this point. To my knowledge, there aren't any details on what quality settings or hardware were used in those benchmarks. Unless they are pulling some seriously shady stuff in that benchmark, the 3900X should outperform the 9900K in CSGO, period, at every resolution and quality setting. CSGO is the most meaningless benchmark in that stack anyway.

If Zen 2 can't hit the same 500 FPS mark, it's not just as fast; it's a 25% difference in speed.
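For what it's worth, whether a 100 FPS gap at the 500 FPS mark counts as 25% or 20% depends on which result you treat as the baseline. A quick sketch, assuming the 500 and 400 FPS figures implied by the posts above (the function names are just for illustration):

```python
def percent_faster(fast_fps: float, slow_fps: float) -> float:
    """How much faster the quicker result is, relative to the slower one."""
    return (fast_fps - slow_fps) * 100.0 / slow_fps


def percent_slower(fast_fps: float, slow_fps: float) -> float:
    """How much slower the slower result is, relative to the quicker one."""
    return (fast_fps - slow_fps) * 100.0 / fast_fps


FAST_FPS = 500.0             # the "500 FPS mark" from the post above
SLOW_FPS = FAST_FPS - 100.0  # hypothetical result that is 100 FPS behind

print(f"faster chip leads by {percent_faster(FAST_FPS, SLOW_FPS):.1f}%")
print(f"slower chip trails by {percent_slower(FAST_FPS, SLOW_FPS):.1f}%")
```

So the 25% figure holds if the slower chip is the baseline; measured from the faster chip, the same gap is 20%.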

> I'm still waiting for 3rd-party reviews of the 3900X vs the 9900K. Both are non-HEDT $499 CPUs, although the 9900K can usually be bought a bit cheaper.

I agree. We can't know anything 100% until independent reviews come out, but AMD's benchmarks are pretty encouraging. Considering the lack of security mitigations and Win 10 scheduler updates in those benchmarks, it's hard to imagine they aren't representative of what reviewers are going to see, if not slightly handicapped. The actual benchmarks might be better. AMD is showing enough confidence in the new stack to step it up in price and create a new product tier. They have massive motherboard vendor support. They have a ton of buzz around this release. Why would they risk the bad publicity with misleading benchmarks?

Apparently there is some confusion about the 3950X release date. Although AMD's Lisa Su said that it would be launched in September, AMD's website says 7/7.

I believe it will be in September, since "sept" means 7, I think.

AMD's Ryzen APUs are all monolithic too. Doing chiplets at the entry-level adds too much manufacturing cost and risk to an already cheap die.

Yes, but the Ry3K APUs are still in 12nm! I have no idea if they'll put a full die in 7nm now that they've committed to chiplets.

To put this into perspective, Icelake with its four cores, IGP, memory controller, PCIe, etc. is only 130sqmm, only slightly larger than Ryzen's IO die. There isn't much to split there. On top of that, the hypothetical quad-core Sunny Cove chiplet would be somewhere in the neighborhood of 40sqmm, which isn't much room to cram the 400+ IO pads connecting it to the IO die plus all of the power and ground pads. Then you have to add interface logic and drivers on both sides of this bus, chance of defects in this extra overhead, extra manufacturing and field failures from the increased mechanical complexity, etc.

Chiplets don't make sense below a certain point where overheads aren't worth the trouble and it does not seem like Intel intends to push 10nm beyond that point. Basically, Intel launched a minimum viable product on 10nm to prove to investors that it did get 10nm to work so it won't get sued by investors for lying about getting 10nm (mostly) sorted out and this will probably be the end of 10nm Intel CPUs.
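To put rough numbers on the yield side of that argument, here is a minimal sketch using the simple Poisson yield model, where the fraction of defect-free dies is exp(-area x defect density). The 130sqmm and 40sqmm die areas are the figures from the post above; the defect densities are purely illustrative assumptions, not real fab data, and the function names are made up for this example.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson yield model."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-defects_per_cm2 * area_cm2)


def small_die_advantage(big_mm2: float, small_mm2: float, defects_per_cm2: float) -> float:
    """Yield gained per die by building the smaller die instead of the bigger one."""
    return poisson_yield(small_mm2, defects_per_cm2) - poisson_yield(big_mm2, defects_per_cm2)


MONOLITHIC_MM2 = 130.0  # Icelake die size quoted above
CHIPLET_MM2 = 40.0      # hypothetical quad-core chiplet size quoted above

# Defect densities below are assumptions chosen only to contrast the two cases.
for label, d0 in (("mature process", 0.1), ("young process", 0.5)):
    mono = poisson_yield(MONOLITHIC_MM2, d0)
    chiplet = poisson_yield(CHIPLET_MM2, d0)
    print(f"{label}: monolithic {mono:.1%}, chiplet {chiplet:.1%}")
```

The gap between the small and the large die widens as the defect density goes up, which is the intuition behind chiplets paying off mainly on young processes and large dies. The model leaves out packaging cost, the extra interface area on both dies, and binning, all of which push further against chiplets at this size.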

Chiplets don't make economic sense at the entry level, which is why AMD went monolithic 12nm for its 3200G and 3400G instead of using a chiplet and IO die with an IGP or GPU chiplet. In all likelihood, AMD's next generation of entry-level APUs is going to be monolithic 7nm: AMD can't afford the extra design and manufacturing overheads associated with chiplets on ~$100 chips, which is about the same price point as the cheapest Icelake models.