Why are you calling it "so-called"?

That's what every refresh has been in recent years: better bins thanks to better yields, resulting in better clocks and not much else.

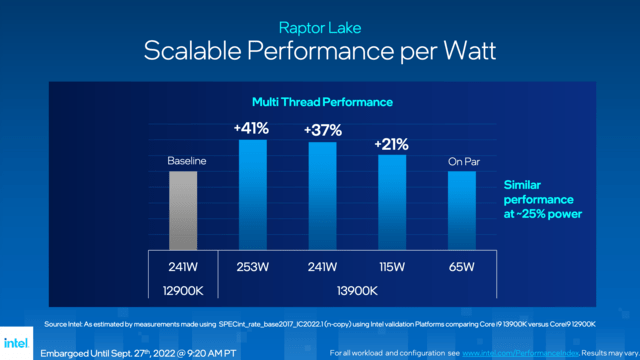

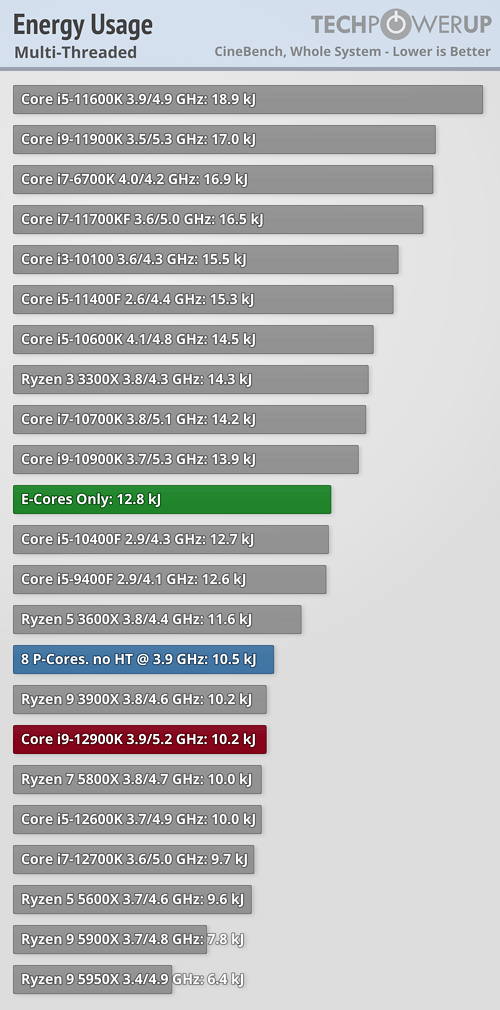

The 13900K and 13900KS already draw 300-350 W, depending on how much cooling you have and on the mobo's default settings. If you overclock, you can get past 400 W on good "normal" (non-exotic) cooling with either the K or the KS.

Unless reviewers start using the warranted max turbo power as a limit, every CPU will just run up to whatever limit the system's cooling imposes.

With the Core i9-13900K, Intel delivers impressive performance. Our in-depth review confirms: Raptor Lake is the world's fastest CPU for gaming. Even in applications the processor is able to match AMD's Zen 4 Ryzen 9 7950X flagship. If only power consumption wasn't so high...

www.techpowerup.com

The Intel Core i9-13900KS is the fastest CPU that Intel is offering this generation. Our review confirms that its clock speeds reach 6.0 GHz, but power consumption also sets a new record. How's gaming performance vs 7800X3D and 7950X3D? Our review has the answers.

www.techpowerup.com