Not an Apple user. But I think Apple's OS would do well if they sold a compatible version for PCs.

Aside from all the other reasons not to do this, a big one is that Apple just migrated almost completely off of x86! Why on earth would they backtrack on that?

Also, maybe you're too young to remember this, but Apple actually tried the model of selling their OS to run on third-party hardware for a couple of years back in the late '90s. I even knew a guy who had a licensed Mac clone (this was back when Macs were PowerPC-based, so the clones were built expressly to run Mac OS). IIRC, one of the first things Steve Jobs did upon his return to Apple was to put a stop to this practice.

Apple makes huge margins on their hardware. If they sold the OS separately, either it would be too expensive to interest most people, or Apple would be giving up a significant chunk of revenue. It would also cost Apple more, because they would have to support a much wider range of hardware than they do today, and that translates into higher development and testing costs.

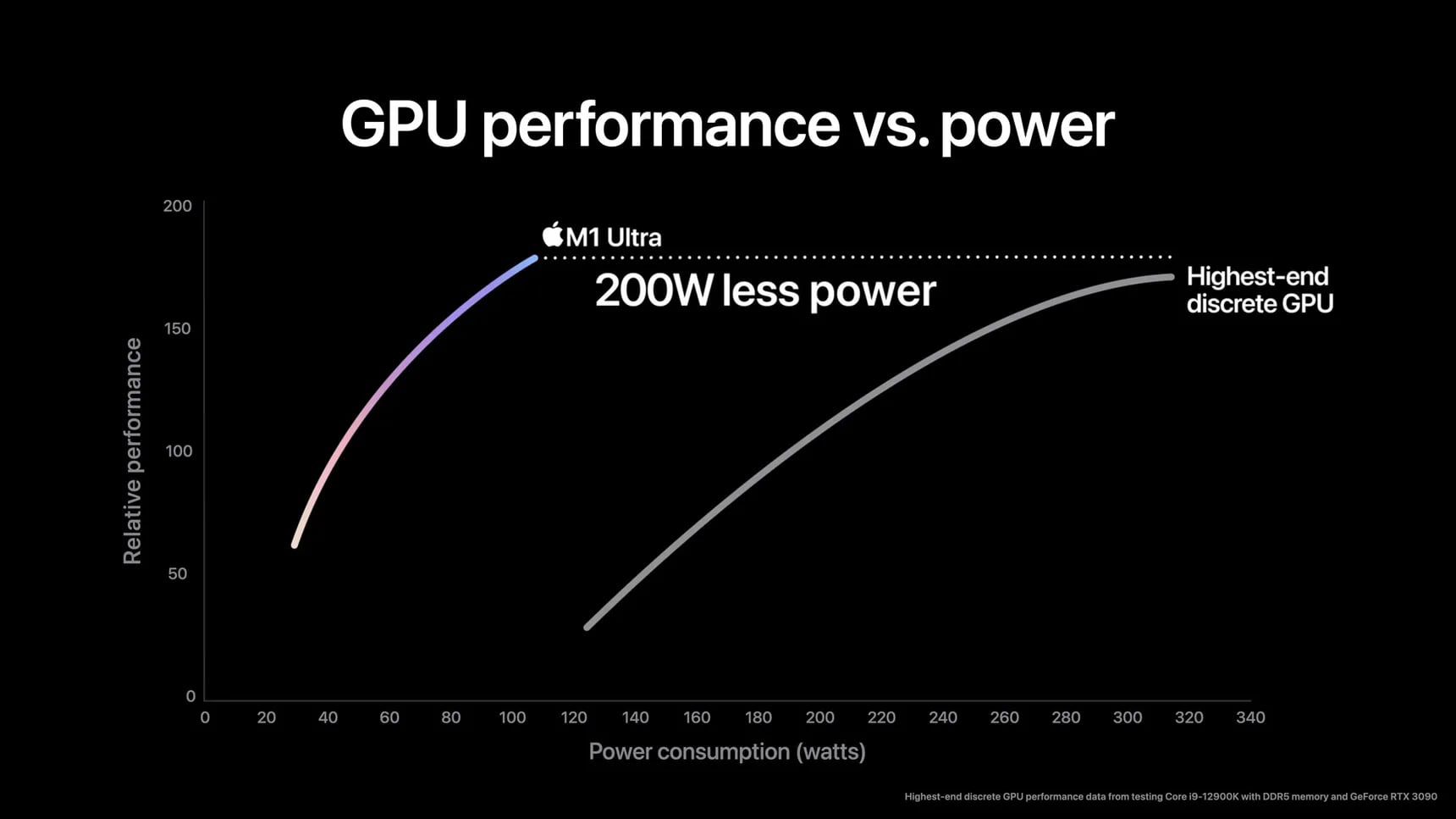

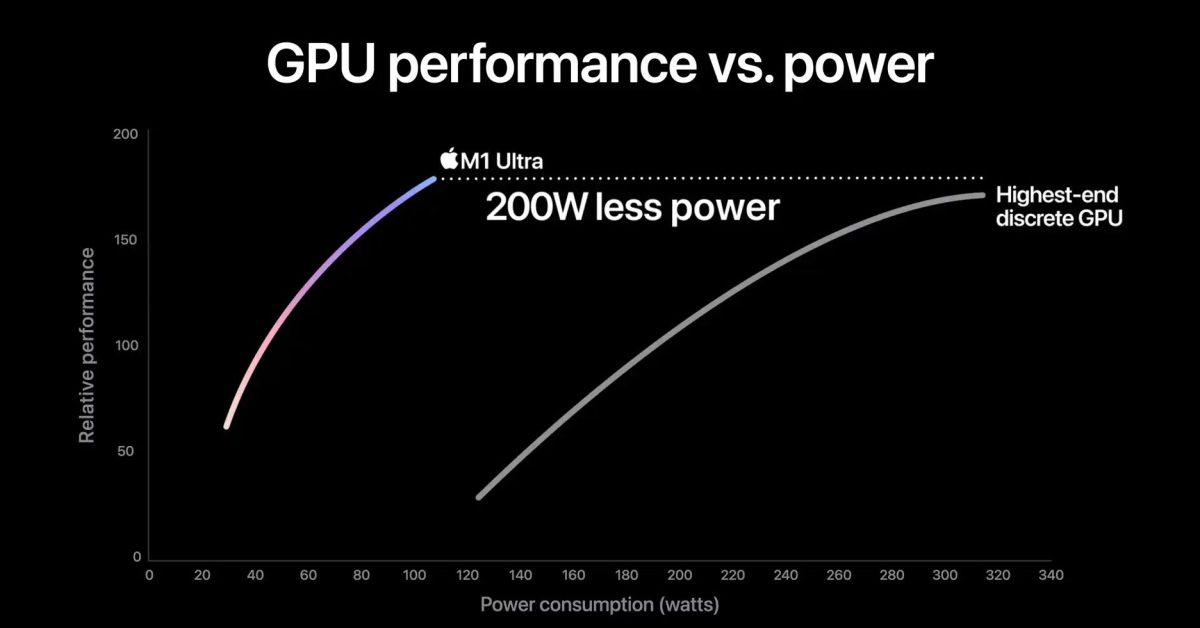

Same goes for any CPUs and GPUs they develop.

I'm not quite sure what you mean by this part.