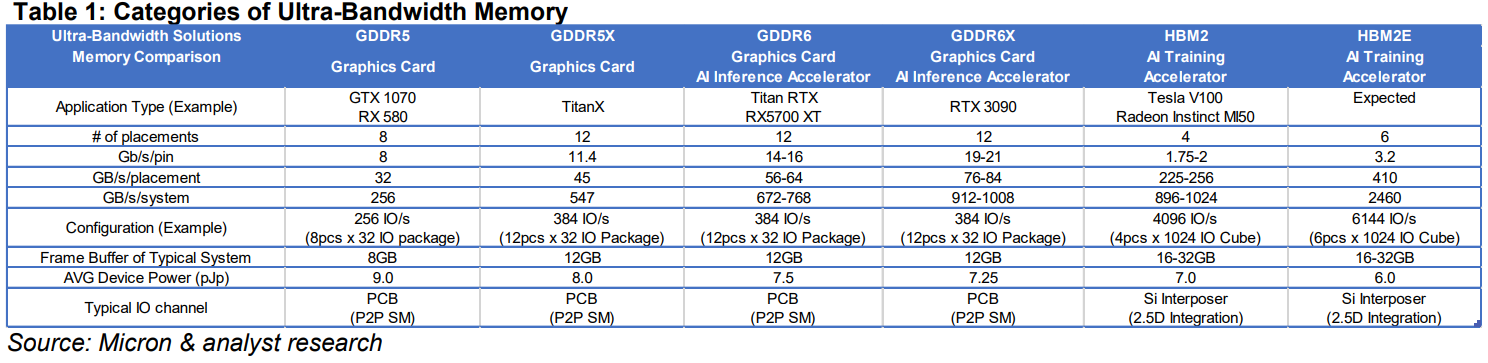

I'm sure all those extra GDDR6X chips on the backside didn't help. But I don't see the point of anything over 12GB of memory. Have we ever seen a single game go over 12GB at 4K?

I also think you're correct. NVIDIA is worried about AMD becoming a serious contender again. Plus there are serious upgrades to the RT engine from what I understand (100% gains). That might explain the large power increase.

It could be that the max TDP will only be hit in ray tracing, true. I'm not sure about GDDR6X, though -- 12GB should be 12 chips, which is the same chip count as Titan RTX and one more than the 2080 Ti.

If the cards routinely draw over 350W, though, that is not good in my book. I really think Nvidia should have stuck with a TDP of 350W at most for a consumer GPU. We had 250W with GTX 780 / 780 Ti. That dropped way down to 165W for the 980, while the 980 Ti stayed at 250W. Then it went to 180W for the 1080 and 250W for the 1080 Ti. RTX 2080 pushed it to 215W (225W for FE) and the 2080 Ti is at 250W (260W for FE).
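To put rough numbers on that jump, here's a quick Python sketch. It only uses the reference TDPs quoted above for the 900 through 2000 series and treats 350W as the rumored figure, not a confirmed spec. The names `tdp_watts`, `RUMORED_TDP`, and `pct_increase` are just made up for illustration.

```python
# Reference TDPs (watts) as quoted in this post; FE variants are left out.
tdp_watts = {
    "GTX 980": 165,
    "GTX 980 Ti": 250,
    "GTX 1080": 180,
    "GTX 1080 Ti": 250,
    "RTX 2080": 215,
    "RTX 2080 Ti": 250,
}

# Assumption: the rumored board power under discussion, not an official number.
RUMORED_TDP = 350


def pct_increase(old_watts, new_watts):
    """Return the percentage increase going from old_watts to new_watts."""
    return (new_watts - old_watts) / old_watts * 100


for card, watts in tdp_watts.items():
    change = pct_increase(watts, RUMORED_TDP)
    print(f"{card}: {watts}W -> {RUMORED_TDP}W is +{change:.0f}%")
```

Going from any of the 250W Ti cards to 350W works out to a 40% increase, and it is more than double the 165W of the 980.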

Now, with 7nm, we're talking about 350W or more? That seems ludicrous. 7nm was supposed to drop power and improve efficiency. Traditionally, Nvidia even does lower power on Gen1 of a new lithography, saving higher power use for Gen2 and a newer/faster line of GPUs. Of course, if it's Samsung 7nm or 8nm instead of TSMC 7nm, that might explain some things. :-\

Again, the 12-pin connector exists, but I hope it's optional -- as in, that there are boards with dual 8-pin. I don't want to worry about upgrading PSUs in addition to what I suspect will be a very expensive GPU line. Maybe only 3090 gets 12-pin, but even so, that's potentially an extra $200-$300 for a new high-end PSU with a 12-pin connector.

Realistically: ONLY high-end PSUs will be made with 12-pin connectors for the next 6+ months, which means maybe it will be $300-$400 for a PSU! I can already see the marketing: "You need 80 Plus Titanium and at least 850W! Because the Titanium branding will increase the price by 50% over Platinum." That would be bad on so many levels.

View: https://www.youtube.com/watch?v=jBOMlWl7fFk