Quote: "Ok, but then how do threads not fight over resources in a demanding benchmark like Cinebench? Or do they? I have no idea."

That's the point: they do. Since all the rendering threads are doing the same kind of work, the fighting doesn't really matter, because you still get 20-50% more IPS (instructions per second) than with just a single thread per core.
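Here is a toy model (not a measurement) that makes the "fighting" concrete. Two identical threads share one core, and each keeps some fraction of its standalone speed. The pair's combined throughput is then twice that fraction. The `per_thread_fraction` values below are hypothetical. They were picked only so the result lands in the 20-50% range mentioned above.

```python
"""Toy model of 2-way SMT throughput on one physical core (illustrative only)."""


def smt_throughput(per_thread_fraction: float, threads: int = 2) -> float:
    """Combined throughput of identical threads sharing one core.

    1.0 is one thread running alone. `per_thread_fraction` is a hypothetical
    share of its standalone speed that each thread keeps while competing
    with its sibling for execution units, caches, and so on.
    """
    if not 0.0 < per_thread_fraction <= 1.0:
        raise ValueError("per_thread_fraction must be in (0, 1]")
    return threads * per_thread_fraction


def smt_uplift_percent(per_thread_fraction: float) -> float:
    """Gain over running a single thread per core, in percent."""
    return (smt_throughput(per_thread_fraction) - 1.0) * 100.0


if __name__ == "__main__":
    # Each thread is slowed down, yet the pair still finishes more work.
    for fraction in (0.60, 0.75):
        print(f"each thread at {fraction:.0%} -> "
              f"{smt_uplift_percent(fraction):.0f}% more throughput")
```

Even with each thread running at only 60% of its solo speed, the core as a whole gets more done.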

Discussion: Thoughts on Hyper-Threading removal?

Page 2 - Seeking answers? Join the Tom's Hardware community: where nearly two million members share solutions and discuss the latest tech.


I don't think having an application, i.e. a game, utilise both P- and E-cores properly is possible atm? Devs are patching games to prioritise P-cores because having E-cores in the mix causes performance issues.

AFAIK, HT allowed commands to begin before the last one finished, so not having HT anymore means the whole core will be waiting. Since the first core does the majority of the work, not having multiple workers there won't be as good as it could be.


That's not what SMT/HT is. There is no such thing as "commands"; they're instructions. And it's the superscalar uArch that enabled prediction and pre-execution, which existed before SMT did.

Here's an x86-64 cheat sheet that lists all the registers:

https://cs.brown.edu/courses/cs033/docs/guides/x64_cheatsheet.pdf

Essentially, what we call a "core" is just a set of registers that the firmware (BIOS/UEFI) exposes to the OS. What we call "threads" are just streams of machine instructions that get executed on those registers. Assembly lets you see what is actually happening on the hardware without all the abstraction.
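On Linux, you can see the difference between what the OS is shown (logical CPUs) and the physical cores behind them. Each `/sys/devices/system/cpu/cpuN/topology/thread_siblings_list` file lists the logical CPUs that share cpuN's physical core. The format is a cpulist string such as `0,8` or `0-1`. Below is a minimal sketch that counts both. The sample topologies in the checks are made up, and reading the real files assumes a Linux system.

```python
"""Count logical vs. physical CPUs from Linux SMT sibling lists (sketch)."""

from pathlib import Path


def parse_cpulist(text: str) -> frozenset[int]:
    """Parse a Linux cpulist string such as '0,8', '0-1' or '2-3,10'."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        elif part:
            cpus.add(int(part))
    return frozenset(cpus)


def count_cpus(sibling_lists: dict[int, str]) -> tuple[int, int]:
    """Return (logical, physical) from {logical_cpu: thread_siblings_list}."""
    cores = {parse_cpulist(s) for s in sibling_lists.values()}
    return len(sibling_lists), len(cores)


def read_sibling_lists(root: str = "/sys/devices/system/cpu") -> dict[int, str]:
    """Read thread_siblings_list for every cpuN directory (Linux only)."""
    result = {}
    for path in Path(root).glob("cpu[0-9]*/topology/thread_siblings_list"):
        cpu = int(path.parent.parent.name[3:])  # "cpu12" -> 12
        result[cpu] = path.read_text()
    return result


if __name__ == "__main__":
    lists = read_sibling_lists()
    if lists:  # empty on systems without this sysfs layout
        logical, physical = count_cpus(lists)
        print(f"{logical} logical CPUs on {physical} physical cores")
```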

Here's a basic Hello World program in assembly, built with NASM:

https://www.devdungeon.com/content/hello-world-nasm-assembler

Code:

```nasm
; Build on 32-bit-capable Linux (file names are examples):
;   nasm -f elf32 hello.asm && ld -m elf_i386 -o hello hello.o

; Define variables in the data section
section .data
hello:    db 'Hello world!', 10   ; the string plus a newline (ASCII 10)
helloLen: equ $ - hello           ; length = current address - string start

; Code goes in the text section
section .text
global _start

_start:
    mov eax, 4          ; 'write' system call = 4
    mov ebx, 1          ; file descriptor 1 = STDOUT
    mov ecx, hello      ; address of the string to write
    mov edx, helloLen   ; length of string to write
    int 80h             ; call the kernel

    ; Terminate program
    mov eax, 1          ; 'exit' system call = 1
    mov ebx, 0          ; exit with status code 0
    int 80h             ; call the kernel
```

Under _start we see the x86 instructions. First, the value 4 is moved into the 32-bit EAX register. Then 1 is moved into EBX, the memory address of the string labeled hello into ECX, and the length of that string into EDX. Finally, int 80h triggers software interrupt 80h. That is the Linux kernel's legacy 32-bit system-call entry in the interrupt table. The kernel then executes its own machine code, which reads those register values and sends the string to STDOUT, where it ends up on the screen. The second int 80h does the same for the exit call.
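For comparison, the same write system call can be made from a high-level language. Python's `os.write` is a thin wrapper around write(2). Because write may write fewer bytes than requested, this sketch loops until everything is out. Note that the EAX numbers above belong to the 32-bit int 80h ABI. The 64-bit syscall ABI numbers write as 1 and exit as 60.

```python
"""The NASM example's write system call, made from Python (sketch)."""

import os

STDOUT_FD = 1  # the file descriptor the assembly loads into EBX


def write_all(fd: int, data: bytes) -> int:
    """Issue write(2) until every byte of `data` is written.

    os.write wraps the kernel's write system call (the one the assembly
    selects with EAX = 4 via int 80h on 32-bit Linux). It may write fewer
    bytes than asked, so keep calling it with the remainder.
    """
    written = 0
    while written < len(data):
        written += os.write(fd, data[written:])
    return written


if __name__ == "__main__":
    write_all(STDOUT_FD, b"Hello world!\n")  # 13 bytes, same as helloLen
```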

For MS-DOS we would use a different function, INT 21h with AH=09h, which prints a '$'-terminated string:

https://medium.com/ax1al/dos-assembly-101-4c3660957d25

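If you wanted to do it without calling an OS function, you could instead take the address of the video memory that represents the screen, known as the frame buffer. You would then use MOV instructions to copy the string's bytes into it, and they would show up on the screen. On IBM PC BIOS-compatible systems, the VGA graphics frame buffer is a 64 KB window at segment A000h (physical addresses A0000h-AFFFFh). The color text-mode buffer, which is where you'd write characters, sits at segment B800h. This only works when the program is allowed to touch video memory directly, as under real-mode DOS.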
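The addresses above are real-mode segment values, and the CPU forms a physical address as segment × 16 + offset. This small sketch does that arithmetic for the VGA graphics window. It also handles the color text buffer at B800h, where each character cell is two bytes: the character code, then a color attribute. The 80-column layout is an assumption about the active video mode.

```python
"""Real-mode address arithmetic for PC video memory (illustrative sketch)."""

VGA_GRAPHICS_SEGMENT = 0xA000  # 64 KB graphics frame buffer window
COLOR_TEXT_SEGMENT = 0xB800    # color text-mode buffer
TEXT_COLUMNS = 80              # assumes the standard 80x25 text mode


def physical_address(segment: int, offset: int) -> int:
    """Real-mode translation: physical = segment * 16 + offset."""
    return (segment << 4) + offset


def text_cell_offset(row: int, col: int) -> int:
    """Byte offset of a character cell in text mode.

    Each cell is 2 bytes: the character code, then its color attribute.
    """
    return (row * TEXT_COLUMNS + col) * 2


if __name__ == "__main__":
    start = physical_address(VGA_GRAPHICS_SEGMENT, 0x0000)
    end = physical_address(VGA_GRAPHICS_SEGMENT, 0xFFFF)
    print(f"VGA graphics window: {start:#x}-{end:#x} ({end - start + 1} bytes)")
    cell = physical_address(COLOR_TEXT_SEGMENT, text_cell_offset(1, 0))
    print(f"first character of row 1 in text mode: {cell:#x}")
```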

That is how stuff actually gets done on the CPU. If there is only one set of registers, it's impossible for more than one stream of instructions to be executing at any point in time. To execute another stream, the contents of the registers first have to be saved to memory. Then the register values for the new stream are loaded in their place, and execution continues from there. This is known as a context switch. If we instead create a second set of registers that the OS can see, the OS can send that second stream of instructions to the second set. This avoids the expensive context switch, and the two streams then share the core's execution resources. The OS genuinely believes there is a second CPU to run on.
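Here is a toy way to see the difference. Count how often a core must save one thread's registers to memory and restore another's when two threads take turns. With one register set, every turn costs a switch. With two register sets (2-way SMT), both threads stay resident. This is a simplified model using least-recently-used eviction. It is not how a real OS scheduler decides when to switch.

```python
"""Toy count of register save/restore events: 1 vs. 2 register sets."""

from collections import OrderedDict


def count_context_switches(schedule: list[str], register_sets: int) -> int:
    """Count save/restore events for a sequence of time slices.

    Each entry in `schedule` is the thread that wants to run next. A thread
    whose state already sits in one of the core's register sets runs for
    free. Otherwise, if every set is occupied, the least recently used
    thread's registers are saved to memory and the new thread's restored.
    """
    resident = OrderedDict()  # thread id -> None, oldest first
    switches = 0
    for thread in schedule:
        if thread in resident:
            resident.move_to_end(thread)  # mark as most recently used
            continue
        if len(resident) == register_sets:
            resident.popitem(last=False)  # save the LRU thread's registers
            switches += 1
        resident[thread] = None  # load this thread's registers
    return switches


if __name__ == "__main__":
    turns = ["A", "B"] * 4  # two threads alternating time slices
    print("1 register set: ", count_context_switches(turns, 1), "switches")
    print("2 register sets:", count_context_switches(turns, 2), "switches")
```

Avoiding switches is only part of the story. The bigger win is that both resident threads can issue instructions to the same execution units, filling slots that one thread would leave idle.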

Order 66

Grand Moff

Nice post! That explains a lot. It’s not a competition, I know, but I dare say that you would give @bit_user a run for his money in terms of quality and detail of posts. Thanks for the links.

Quote: "Hyper-Threading - the revolutionary technology Intel has kept going ever since the Pentium 4 days."

Just a historical note: Intel omitted Hyper-Threading from the Pentium M, Core, and Penryn. It didn't return until Nehalem, so there was a real gap with no Hyper-Threading.

Their implementation of HyperThreading in the Pentium 4 had some notable cases where performance was worse with it enabled than disabled. When it returned, in Nehalem, it was rather more refined.

Quote: "I would like it very much if you would share your opinions on the removal of Hyper-Threading in Arrow Lake CPUs."

I'm curious to hear about the supposed upsides of removing it, and also whether they're nixing it from their server P-cores or just the client version. In a modern CPU with register renaming, it seems to me the actual silicon footprint of HT/SMT should be pretty small.

I'm not too surprised to see it dropped in favor of hybrid, but I'm a little surprised to see them drop it without further increasing the number of E-cores. I wonder if we'll see a repeat of the Comet Lake vs. Rocket Lake situation, where the older 10-core i9s could sometimes outperform the newer 8-core ones. Except, in this case, we're probably talking about each having 8+16 cores. If so, it's not great to be in a position of having to explain away a drop in multithreaded performance.
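Here are rough numbers for that worry. Both chips have identical 8P+16E core counts, so the only difference is whether the P-cores have HT. The per-core throughput values and the 25% HT uplift below are hypothetical placeholders, not measurements of any Intel part.

```python
"""Back-of-envelope MT throughput for an 8P+16E chip with and without HT."""


def hardware_threads(p_cores: int, e_cores: int, p_smt: bool) -> int:
    """Logical CPUs: P-cores count twice with HT; Intel's E-cores lack it."""
    return p_cores * (2 if p_smt else 1) + e_cores


def mt_throughput(p_cores: int, e_cores: int, p_perf: float, e_perf: float,
                  smt_uplift: float = 0.0) -> float:
    """Total throughput in 'one P-core' units; smt_uplift applies to P-cores."""
    return p_cores * p_perf * (1.0 + smt_uplift) + e_cores * e_perf


if __name__ == "__main__":
    # Hypothetical: P-core = 1.0, E-core = 0.5, HT adds 25% per P-core.
    with_ht = mt_throughput(8, 16, 1.0, 0.5, smt_uplift=0.25)
    without_ht = mt_throughput(8, 16, 1.0, 0.5)
    print(hardware_threads(8, 16, True), "vs.", hardware_threads(8, 16, False), "threads")
    print(f"without HT: {without_ht / with_ht:.1%} of the HT chip's throughput")
```

Under these made-up numbers, the HT-less chip's P-cores would need to be 25% faster to break even, since (18 - 8) / 8 = 1.25.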


Quote: "Probably due to security concerns, due to exploits."

The solution to this is something Linux calls "core scheduling". The kernel only lets threads from the same process (more precisely, tasks sharing a core-scheduling "cookie") run on the same physical core at the same time. This eliminates most, if not all, SMT-related security issues, at the slight expense of some performance.
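The rule itself is simple. In Linux, tasks are tagged through prctl(PR_SCHED_CORE, ...) and carry a cookie. SMT siblings may only run tasks with matching cookies at the same time. If no compatible task is runnable, the sibling is forced idle, and that is where the performance cost comes from. Below is a simplified model of that rule, not the kernel's code. The task names and cookie values are made up.

```python
"""Toy model of the Linux core-scheduling compatibility rule."""

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Task:
    name: str
    cookie: int  # hypothetical: one cookie per trusted group, e.g. per process


def can_share_core(candidate: Task, sibling: Optional[Task]) -> bool:
    """May `candidate` run while `sibling` occupies the other SMT thread?"""
    return sibling is None or candidate.cookie == sibling.cookie


def pick_for_sibling(running: Task, runnable: list[Task]) -> Optional[Task]:
    """Choose a compatible task for the idle SMT sibling of `running`.

    Returning None means the sibling is forced idle, which is where the
    'slight expense of some performance' comes from.
    """
    for task in runnable:
        if can_share_core(task, running):
            return task
    return None


if __name__ == "__main__":
    tab = Task("browser-tab", cookie=1)
    worker = Task("browser-worker", cookie=1)
    stranger = Task("untrusted-vm", cookie=2)
    print(pick_for_sibling(tab, [stranger, worker]))  # the same-cookie task
    print(pick_for_sibling(tab, [stranger]))          # None: forced idle
```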

The kernel doesn't default that setting to "on", but I'm sure some distros do. I'd be surprised if Windows Server didn't have a comparable feature, and MS could put it in Windows client software, if Intel or their customers pestered them enough.

Also, I'd just point out that some side-channel attacks involve snooping shared cache. So far, Intel's E-cores all have shared L2 cache (per cluster of 4), which probably makes it a lot easier for one core to spy on another, in the same cluster. And while you could do a similar "core scheduling" technique to combat it, that comes at a higher price with E-cores and won't work for L3 cache.

Quote: "If Intel removes hyperthreading and makes a successful product then others may follow."

Neither ARM's internally-designed, mainstream cores nor Apple's ARM cores ever had it. In some ways, you could say Intel is the one following them, by opting for hybrid without SMT.

I can't give you a citation on this, but I'm pretty sure I saw a compelling case that SMT is less power-efficient. I'm not really sure why, though I have a couple of ideas. ARM and Apple have been coming from a place of optimizing their cores more for power efficiency, which would explain why they never really delved into the realm of SMT. It could also explain why Intel might be interested in dropping it, at least in client CPUs.

Quote: "I don't think there is a way to roll it out slowly. Either it has the capability or it doesn't."

They're rumored to have two different compute dies for Arrow Lake. The low/mid-range one is being made on Intel 20A, while the high-end one will be made on TSMC (presumably N3E). They could conceivably exclude HT from just the low/mid-range die. That would be the best way to mitigate the risk (if they felt the need to do so).

Quote: "Just remember that Intel already has upcoming silicon in testing phases a long time before we see it. So they will have tested how removal of hyperthreading"

Exactly. I'm sure they ran high-level simulations before even doing RTL-level simulations.

Quote: "and more reliance on e cores and lp cores"

The Arrow Lake-S models are said not to have LP E-cores.

Quote: "Also keep in mind that desktop is never first. Mobile is where the money is really at, so you'll see laptops with this before we see it in desktop."

Huh? Alder Lake-S launched waaay before Alder Lake-P. And Lunar Lake (laptop) is supposed to launch a couple of quarters after Arrow Lake (desktop). So we have two examples of desktop preceding laptop CPUs!

Quote: "the major problem right now is that there is zero software that can even use the amount of IPC that CPU cores have right now,"

Floating point-heavy benchmarks tend to show little performance gain with HT enabled. Sometimes, they even perform better with it off!

I'm turning it off myself on 13th- and 14th-gen high-end CPUs, so no big deal. Games run better without it, and power draw drops significantly. The performance loss is around 10% in heavy MT workloads. Nowadays HT is only really needed in CPUs without E-cores; if you have E-cores, it's kind of irrelevant.

35below0

Respectable

CPU "efficiency" also depends a lot on how programs are made. Lots of games in particular are NOT programmed with optimization or efficiency in mind. Getting the most use of hardware is not at the top of the list of priorities.

Hitting the deadline and maybe stability are the main goals.

In addition, games aren't always made to take advantage of multiple cores and threads. Seems to me like that's more of a performance critical software target.

Games can always chug and everyone will either expect patches or blame it on the drivers. Or buy new hardware.

So talk of 20% performance here or there is really neither here nor there, because it will depend heavily on what the CPU is doing. Benchmarks, file transfers, YouTube, and gaming are very different scenarios. But you all know this.

I don't expect Intel will nix HT and roll out a new CPU without touting an improvement. Even if performance is not increased it will not be noticeably reduced, and probably power usage will decrease. Or maybe the price.


TerryLaze

Titan

Quote: "Floating point-heavy benchmarks tend to show little performance gain with HT enabled. Sometimes, they even perform better with it off!"

Cinebench is floating point heavy, isn't it?! That's why Bulldozer wasn't that great at it.

And it has good performance gains from HT.

Quote: "They're rumored to have two different compute dies for Arrow Lake. The low/mid-range one is being made on Intel 20A, while the high-end one will be made on TSMC (presumably N3E). They could conceivably exclude HT from just the low/mid-range die. That would be the best way to mitigate the risk (if they felt the need to do so)."

Or, with a bit more level-headedness:

We know (not a rumor) that Intel uses TSMC for the iGPU.

It makes 1000 times more sense to say that only the high end will have the new Xe graphics made at TSMC, while the mid/low range is going to stay with Intel HD graphics.

Just like in previous gens where they only made the higher end on a new process.

Quote: "The Arrow Lake-S models are said not to have LP E-cores."

Yes, I was thinking more in general, and about future chips.

Quote: "Huh? Alder Lake-S launched waaay before Alder Lake-P. And Lunar Lake (laptop) is supposed to launch a couple of quarters after Arrow Lake (desktop). So we have two examples of desktop preceding laptop CPUs!"

I didn't know that. This may have to do with needing to put TSMC in the mix on the latter. For the former, were there production issues? It was Intel 7, but it's annoyingly hard to find a list of which node each product uses. They did try out E-cores in mobile first, so perhaps they had no reason to hold back Alder Lake-S from going forward, though we still had a lot of issues with licensing and anti-cheat in games and some business software.

Quote: "Cinebench is floating point heavy, isn't it?! That's why Bulldozer wasn't that great at it."

Yes, I would qualify it as fp-heavy.

Bulldozer had a lot of issues, so it's far too simplistic to pick out one feature of the microarchitecture and try to use it to explain its performance on a workload. You'd have to do in-depth performance analysis to have any idea whether its lack of performance on Cinebench was due to problems that could've been mitigated by HT.

Also, did you forget that Bulldozer shares one FPU between two cores? For a fp-heavy workload, that should put it at a natural disadvantage!

The best way to measure the effectiveness of HT, on a given workload, is to benchmark it with HT on vs. off, on the same CPU! Obviously, if you eliminate HT from the CPU's design, there might be some savings and optimizations that could make the non-HT case even faster, but if we're just talking about whether HT helps a given workload, then you'd want that to be the only variable you're changing.
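The on-vs-off comparison reduces to a ratio per workload, plus an aggregate. SPEC-style suites summarize per-benchmark ratios with a geometric mean, so this sketch does the same. The benchmark names and scores are placeholders, not measured data.

```python
"""Per-benchmark HT uplift from on/off runs on the same CPU (sketch)."""

from math import prod


def ht_uplift(score_ht_on: float, score_ht_off: float) -> float:
    """Ratio > 1.0 means HT helped this workload; < 1.0 means it hurt."""
    return score_ht_on / score_ht_off


def geomean(values: list[float]) -> float:
    """Geometric mean, the usual way SPEC-style suites aggregate ratios."""
    return prod(values) ** (1.0 / len(values))


def summarize(results: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Map benchmark -> (score with HT on, score with HT off) to HT ratios."""
    ratios = {name: ht_uplift(on, off) for name, (on, off) in results.items()}
    ratios["geomean"] = geomean(list(ratios.values()))
    return ratios


if __name__ == "__main__":
    placeholder = {"int_app": (13.0, 10.0), "fp_app": (9.5, 10.0)}  # made-up scores
    for name, ratio in summarize(placeholder).items():
        print(f"{name}: {ratio:.3f}x with HT")
```

A suite where some workloads gain and others lose can still average out to roughly 1.0x. That is what "more of a wash" looks like in numbers.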

Quote: "And it has good performance gains from HT."

Source?

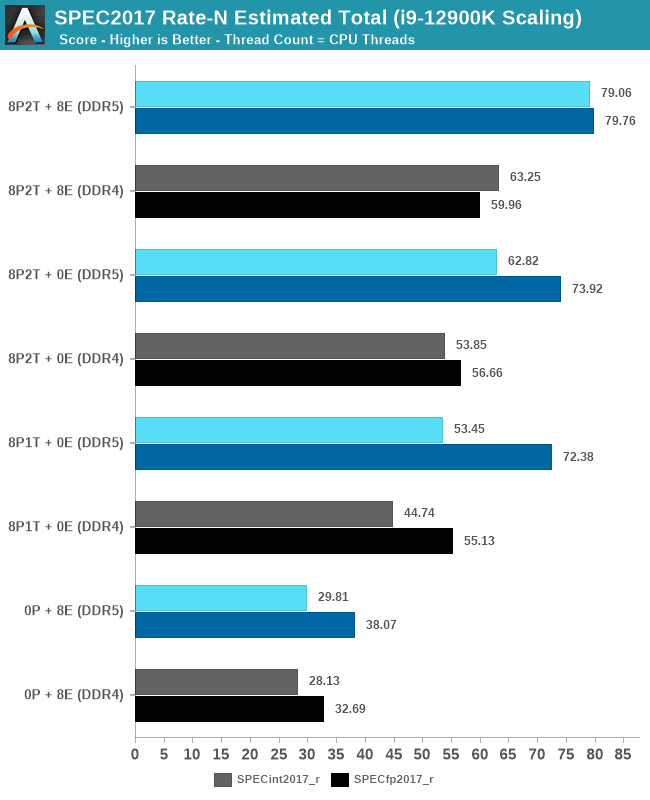

Here's the data I'm using:

That's measuring 12 real-world, fp-heavy apps. Out of those, the i9-12900K's 8P1T + 0E configuration runs 5 of them faster than 8P2T + 0E.

If you look at the aggregates, fp performance is virtually identical between the 8P1T + 0E and 8P2T + 0E cases:

And before anyone reads too much into the cases with 8E, remember that Alder Lake had a ring bus penalty, when enabling the E-cores. So, that is making the 8P2T + 8E cases look worse than they should be.

TerryLaze

Titan

Quote: "Also, did you forget that Bulldozer shares one FPU between two cores? For a fp-heavy workload, that should put it at a natural disadvantage!"

That's the only reason I brought them up.

Quote: "That's measuring 12 real-world, fp-heavy apps. Out of those, the i9-12900K's 8P1T + 0E configuration runs 5 of them faster than 8P2T + 0E."

And all 5 of them are super obviously not scaling well with the number of cores, since even the result for the full CPU is lower than with the E-cores off, or extremely close anyway.

Quote: "And all 5 of them are super obviously not scaling well with the number of cores, since even the result for the full CPU is lower than with the E-cores off, or extremely close anyway."

The way SPECfp Rate-N works is that it runs N copies of the benchmark, with OpenMP disabled. So, in the ideal case, you should see linear scaling. There's no chance for poor threading within the app to affect this. If it doesn't scale linearly, the only explanation can be bottlenecks in the system!
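Put as arithmetic: the N copies share nothing but hardware, so the ideal rate-N result is N times the single-copy result. The ratio between the measured and ideal values is a scaling efficiency. This sketch assumes you have both a 1-copy and an N-copy result for the same benchmark on the same machine. The numbers in the checks are hypothetical.

```python
"""Scaling efficiency of a SPECrate-style run of N independent copies."""


def scaling_efficiency(rate_n: float, rate_1: float, copies: int) -> float:
    """Measured N-copy throughput divided by the ideal N x single-copy value.

    1.0 means perfectly linear scaling. Because the copies are separate
    processes sharing no data or threads, anything lower points at shared
    resources saturating: memory bandwidth, caches, the interconnect, or
    power/clock limits.
    """
    if copies < 1 or rate_1 <= 0:
        raise ValueError("need copies >= 1 and a positive single-copy rate")
    return rate_n / (copies * rate_1)


if __name__ == "__main__":
    # Hypothetical: 16 copies deliver only 10x a single copy's throughput.
    print(f"{scaling_efficiency(10.0, 1.0, 16):.0%} of linear scaling")
```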

digitalgriffin

Splendid

Quote: "Probably due to security concerns, due to exploits."

Per-thread encoding was supposed to take care of this (each thread's instructions would be encrypted at the CPU level by the prefetch loader, so code could not be injected). Whatever happened to that? It WAS on the roadmap for both AMD and Intel.

Per-thread encryption might make the Read/Jump ops look like @#%$@#/*@#&@#$$. So using a physics attack to inject a plain "read/jump" in place of an @#%$@#/*@#&@#$$ would most likely just crash the program.


digitalgriffin

Splendid

Quote: "What do you mean? Also, won't the performance loss be massive? I've heard that hyperthreading improves performance by about 40%."

This is a bit complicated, but basically, x86 is really RISC internally, with a ton of internal registers. Some uOps might use 15% of the registers, like X1, B1, K2. Then another op comes along and executes on registers X5, B9, K5. No conflict, no problem. But if they need the EXACT same register resource and there's only one, then you get a conflict. And this is why AMD having two integer units share one FP unit became an issue, and a lawsuit.

Quote: "This is a bit complicated. But basically, x86 is really RISC with a ton of internal registers. Some uOps might use 15% of registers like X1, B1, K2. Then another op comes along and executes on registers X5, B9, K5. No conflict, no problem. But if they need the EXACT same register resource and there's only 1, then you get a conflict."

Register renaming can detect and mitigate false conflicts. Basically, if you have two instructions that write the same ISA register, the CPU knows anything after the second write is accessing a value that's logically distinct from the first. It can therefore rename them to a different internal, physical register.
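A toy renamer shows the idea. Every write to an architectural register gets a fresh physical register from a free list, so two writes to the same ISA register no longer collide, and later readers see the newest mapping. When the free list is empty, renaming can't help, and the instruction has to wait. Register names and sizes are hypothetical, and freeing registers at retirement is not modeled.

```python
"""Toy register renamer: architectural -> physical register mapping."""

from collections import deque
from typing import Optional


class Renamer:
    def __init__(self, num_physical: int, arch_regs: list[str]):
        # Initially each architectural register maps to its own physical one.
        self.map = {reg: i for i, reg in enumerate(arch_regs)}
        self.free = deque(range(len(arch_regs), num_physical))

    def rename(self, dest: str, srcs: list[str]) -> Optional[tuple[int, list[int]]]:
        """Rename one instruction 'dest <- op(srcs)'.

        Returns (physical dest, physical sources), or None when no physical
        register is free and the instruction must stall.
        """
        phys_srcs = [self.map[s] for s in srcs]  # read current mappings first
        if not self.free:
            return None
        new_dest = self.free.popleft()  # fresh register: no false dependency
        self.map[dest] = new_dest
        return new_dest, phys_srcs


if __name__ == "__main__":
    r = Renamer(num_physical=6, arch_regs=["eax", "ebx"])
    print(r.rename("eax", ["ebx"]))  # first write to eax
    print(r.rename("eax", ["ebx"]))  # second write to eax gets a new register
    print(r.rename("ebx", ["eax"]))  # reads the newest eax mapping
```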

TerryLaze

Titan

Quote: "The way SPECfp Rate-N works is that it runs N copies of the benchmark, with OpenMP disabled. So, in the ideal case, you should see linear scaling. There's no chance for poor threading within the app to affect this. If it doesn't scale linearly, the only explanation can be bottlenecks in the system!"

583.imagick_r shows that the system is not bottlenecked, because it shows the scaling one would expect.

And then others, like 519.lbm_r, show us that fewer cores = higher clocks, which has nothing to do with HT or E-cores, or with scaling, for that matter.

Bottom line: even in FP, HT can add up to 37% performance. Of course, it doesn't always, just like more cores don't always add performance either.

Quote: "583.imagick_r shows that the system is not bottlenecked, because it shows the scaling one would expect."

SPECrate 2017 is a benchmark suite for measuring system performance. Some benchmarks put more stress on the core microarchitecture, some stress the chip interconnect, some stress the cache hierarchy, and some stress the memory subsystem. If all of its component benchmarks scaled well with core count, it would basically mean they're not adequately stressing other aspects of the system. So, LBM (Lattice Boltzmann Method, a 3D Computational Fluid Dynamics test) doesn't scale well with so many cores on that CPU. That fact alone doesn't somehow make it invalid or not a proper measure of scaling.

Quote: "And then others, like 519.lbm_r, show us that fewer cores = higher clocks, which has nothing to do with HT or E-cores, or with scaling, for that matter."

The DDR4 vs. DDR5 benchmark, from the same article, clearly shows that LBM is memory-bottlenecked:

Quote: "Bottom line: even in FP, HT can add up to 37% performance. Of course, it doesn't always, just like more cores don't always add performance either."

Right. There are wins and losses. That's consistent with what I said. However, my main point was that FP workloads tend to be more of a wash than integer, where it's clearly a net win.

In 9 out of the 10 SPECint tests, HT provided a significant benefit:

digitalgriffin

Splendid

Quote: "Register-renaming can detect and mitigate false conflicts. Basically, if you have two instructions which write the same ISA register, then the CPU knows anything after the second write is accessing a value that's logically distinct from the first, and therefore can rename them to a different internal, physical register."

Oh, I know. But I'm dumbing it down for those who don't study architecture. That said, my point about a resource being fully utilized remains true. If you are out of FMA registers, you are out of FMA registers. No amount of renaming will fix that (or the fact that AMD was sharing one FP unit between two cores).

Joseph_138

Distinguished

I specifically waited to upgrade my PC from my Core i7 6700 system until the i5 got Hyper-Threading. The 10th-gen i5 10400 is just a smidge behind an 8th-gen Core i7 8700, which also has 6 cores and 12 threads. But the i5 has lower power consumption, and it wasn't very expensive. I bought a Dell XPS 8940 that the seller had pulled the graphics card out of during the video card apocalypse. I made sure it was a top-spec system, apart from the CPU. The only thing it doesn't have is the optional Killer Wi-Fi card. I only paid $300 for it. Even when I was finally able to get a Dell-branded 3070, I still ended up paying far less for the whole system than it would have cost if I had ordered it straight from Dell. 😛

A lot of people were doing that: buying prebuilts, taking the video cards out and either keeping or scalping them, and selling the rest of the computer at fire-sale prices on eBay. When I saw what some people were dumping them for, I knew it was time to upgrade.


bandit8623

Distinguished

With SMT on (12 threads), I can OC my 8700K to 4.6 GHz on all cores. If I go higher, core 3 always has errors. Lost the lottery.

If I disable SMT, I can go to 4.9 GHz on all cores (6 threads).

Real world? In games like Valorant and CS2, I get higher and more stable frame rates with SMT off at 4.9 GHz.

Why? Because these games use about 5 threads. In games that use more threads, I would likely see better results with SMT on.

New processors already have so many cores that I'm not sure SMT is really needed anymore, especially if you can push more frequency with SMT off.
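Here is a back-of-envelope version of that reasoning, under stated assumptions. Threads are spread one per core first. A core running two threads gets a hypothetical 25% SMT uplift. Performance scales linearly with clock. With about 5 busy threads on 6 cores, SMT never kicks in, so the 4.9 GHz vs. 4.6 GHz difference (about 6.5%) is pure gain. With 12 busy threads, the assumed uplift outweighs the lower clock.

```python
"""Toy comparison: SMT on at a lower clock vs. SMT off at a higher clock."""


def relative_throughput(busy_threads: int, cores: int, clock_ghz: float,
                        smt: bool, smt_uplift: float = 0.25) -> float:
    """Work per unit time, in arbitrary units, for equal busy threads.

    Assumes threads go one per core first, a core running two threads does
    (1 + smt_uplift) of a single thread's work, and speed scales with clock.
    """
    if not smt:
        return min(busy_threads, cores) * clock_ghz
    doubled = min(max(busy_threads - cores, 0), cores)  # cores with 2 threads
    single = min(busy_threads, cores) - doubled          # cores with 1 thread
    return (single + doubled * (1.0 + smt_uplift)) * clock_ghz


if __name__ == "__main__":
    for threads in (5, 12):
        on = relative_throughput(threads, 6, 4.6, smt=True)
        off = relative_throughput(threads, 6, 4.9, smt=False)
        print(f"{threads} busy threads: SMT on @4.6 = {on:.1f}, SMT off @4.9 = {off:.1f}")
```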


gamerk316

Glorious

My suspicion is Intel has a software trick up its sleeve.

Remember: HT came about back in the days when we had *ONE* CPU core. It was a decent way to get throughput when part of the CPU core was doing nothing, due to how deeply pipelined the Pentium 4 was. It took a minimal amount of die for a relatively decent performance boost.

Thing is, we now have dozens of CPU cores on the die. So why bother with HT, when we have literally a dozen CPU cores, each one with parts of their pipeline empty waiting for work? Why not just use those CPU cores, and utilize the parts that aren't currently doing work to do essentially what HT was already doing?

The benefit would be both security and reclaiming the die space HT ate up, which could then be used for *something else* (or just lowering production costs).

Basically, I think Intel is just doing what is effectively HT on its standard cores.


TerryLaze

Titan

Quote: "Thing is, we now have dozens of CPU cores on the die. So why bother with HT, when we have literally a dozen CPU cores, each one with parts of their pipeline empty waiting for work? Why not just use those CPU cores, and utilize the parts that aren't currently doing work to do essentially what HT was already doing?"

Because it still adds at least around 30% throughput for every core that has HTT, in any app that scales with cores.

Why would anybody want to lose that amount of "free" performance?

Space.com is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.