Specifications and ordering codes for Intel's Alder Lake-S processors unveiled.

US Pricing Listed For Intel Alder Lake CPUs : Read more

I think you are overthinking it. Remember when Ryzen 3000 landed and not all of the CPU cores would hit max boost clock, only the faster cores could? AMD and Microsoft changed the Windows scheduler so that it would target threads to the faster cores. Same deal here. AMD chips won't be affected.

Um, looking at your chart, the Intel 12900K lists for about $100 less and has more cores and threads than the 5950X. Great deal if it weren't for the fact that the 5950X is a 16 CORE, 32 THREAD processor.

And 8 of the Intel cores are not full boat cores.

One more question I haven't heard the answer for. The point behind big.LITTLE is power savings and running small/background tasks so the big cores can do the heavy work. This means Microsoft will finally start taking advantage of more cores. Does this mean that if the work is shared properly amongst the cores in an AMD processor, it will be slower because the large cores can't do the background tasks as well as the Intel small cores? If not, what's the point? Will Microsoft make it so OS and background tasks will not be handed off in such a fashion unless there is a little core for them?

So... You had the money to buy a 5950X, but didn't buy a cheap GPU with it? And I'll tell you right away: you won't have a fun time trying to get 6 monitors working from an Intel iGPU. I don't believe you even can in theory.

I owned the 5950X for a short period of time and took it back because my use case is one where I need a very fast processor, but also need the ability to support 5-6 monitors. The lack of an integrated graphics processor on the AMD left me to make the choice of keeping it or waiting for the 12900K, which does have an IGP, which will allow me to simultaneously use the onboard video ports and multiple discrete GPUs.

Intel has a proven history of coming back with great products when moving to new architectures and I am very confident that the new line will be exceptional. I will certainly be an initial adopter and am hopeful that they have learned a valuable lesson from how AMD has raised the bar.

This isn't a smartphone!

I currently have an Intel 7700K, an Nvidia 1650 and am running 5 monitors for work and stock trading. The system is 3.5 years old and I wanted to upgrade to a fast processor while maintaining my ability to drive my 5 monitors. In addition to replacing the 7700K with the Ryzen 5950X, I wanted to augment my 1650 with my Radeon RX6800 for crypto mining. It was only after purchasing and setting everything up that I realized that I could not use both integrated and discrete graphics at the same time. Sure, I could drop down to a Ryzen 5600G, which provides integrated graphics, but why do that when Intel's newest processor is right around the corner? Technology is a tool, not my personal friend, so I do not hold allegiances to companies or brand names. Both Intel and AMD make fantastic products and each product is tailored to specific use cases. For mine, I need to be able to use both the onboard graphics and a GPU, so that I can isolate the RX6800 for crypto mining while driving my other needs off of the 1650 and onboard graphics. Yeah, I should have read the details on the 5950X's lack of integrated graphics, but that is my money to worry about. With regard to my desire for Intel over AMD, it comes down to Intel being very mindful of different customer use cases and offering integrated graphics on their higher-end products, unlike AMD, who leave that functionality out.

Sounds to me like you're trying to make a "buyer's remorse" statement due to your unpreparedness. What you're describing is not the fault of the 5950X, but entirely yours.

Regards.

/facepalm

I would be careful with the terminology. A CPU that downclocks to save power is not the same as a core with fewer transistors in it dedicated to logic. This is not even trying to be pedantic, as the distinction is important. The new cores Intel is going with are akin to the Cell design, kind of, but the simple analogy is big.LITTLE from ARM; the big difference is asymmetry. Problem is, Intel wasn't able to nail the scheduler for an asymmetrical uArch for x86, and that leaves you with a full core that can't do certain AVX instructions because the efficient cores weren't built with those in. To be blunt, this is going to spawn a similar discussion to what AMD defined as a "core" with their CMT approach. The little/efficient cores are basically that same concept, but a bit better presented: they lack support for complex operations/instructions, clock way lower and don't have the same decoding pipeline as the performance/big cores.

The small cores on Alder Lake are not there for power savings; they are there to get the most performance out of the power budget available.

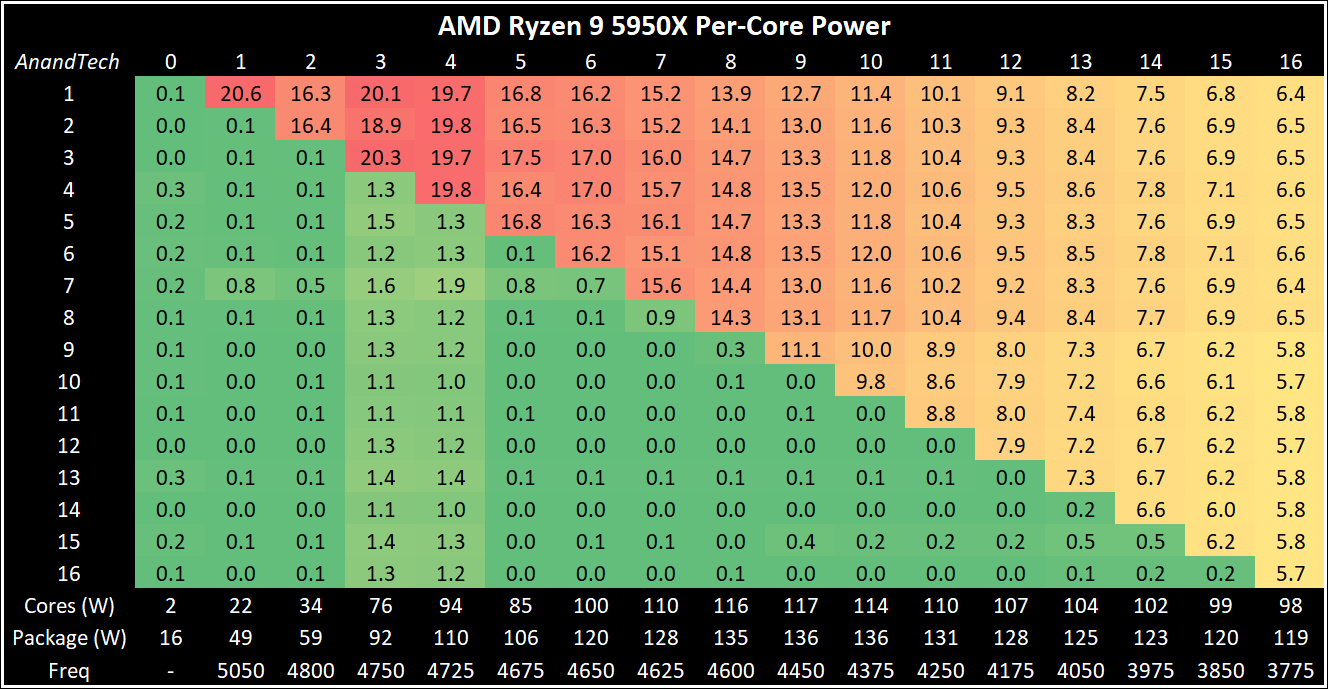

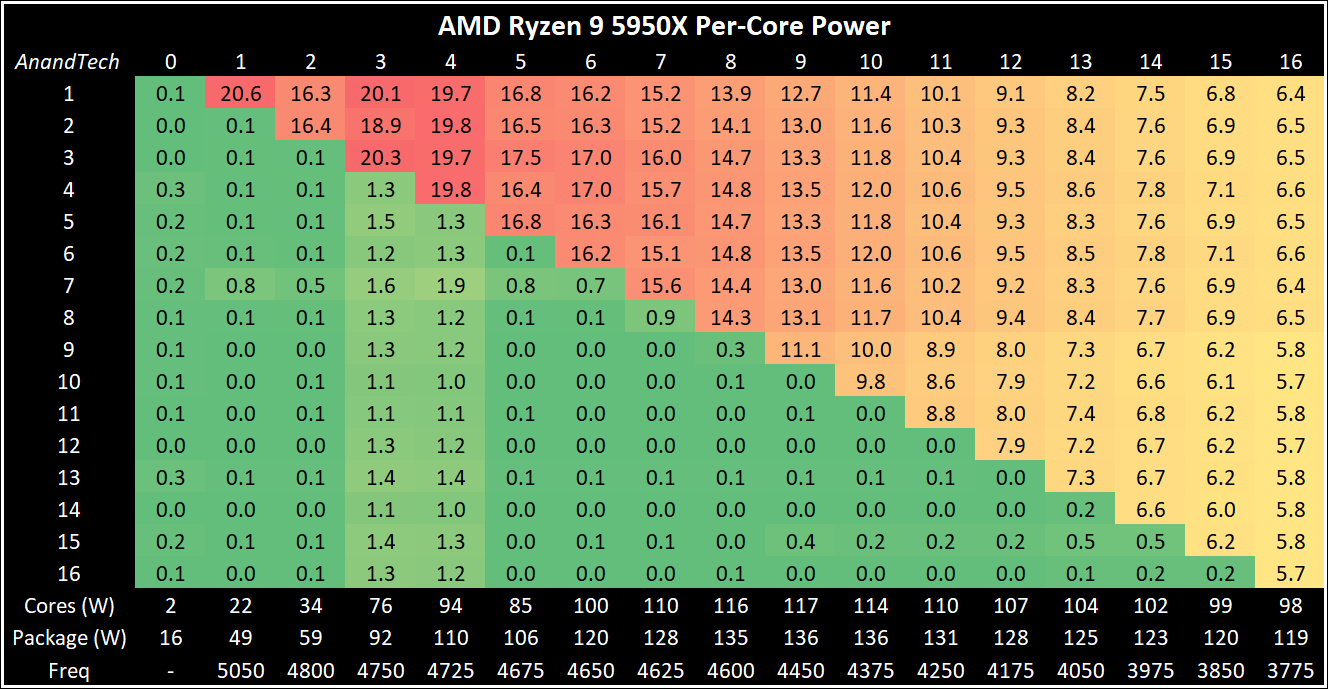

The 5950X has 16 real cores, but when all of them are running, all of them turn into little cores: they go from 5.05 GHz single-core to 3.77 GHz each at all-core load.

With Alder Lake, the P cores will retain full all-core turbo when all cores, including the E cores, are loaded.

https://www.anandtech.com/show/1621...e-review-5950x-5900x-5800x-and-5700x-tested/8

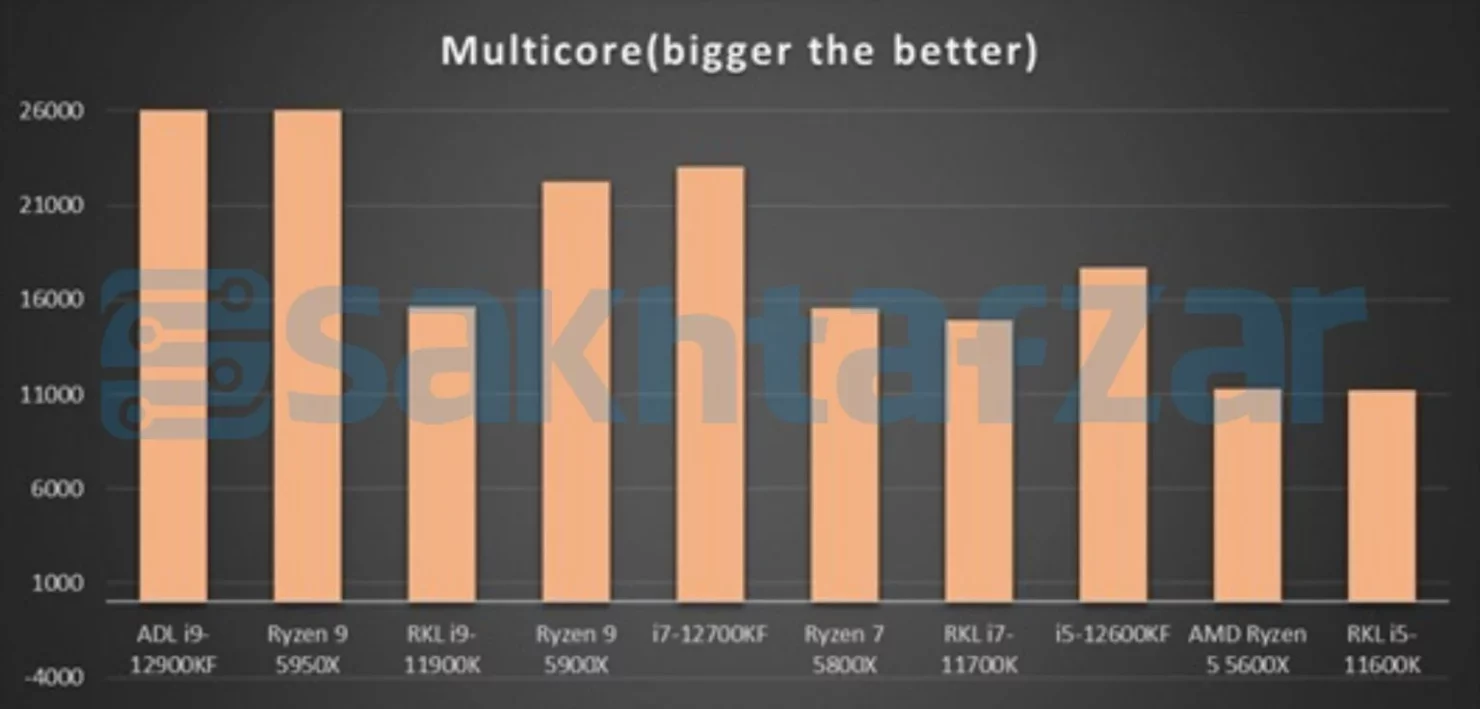

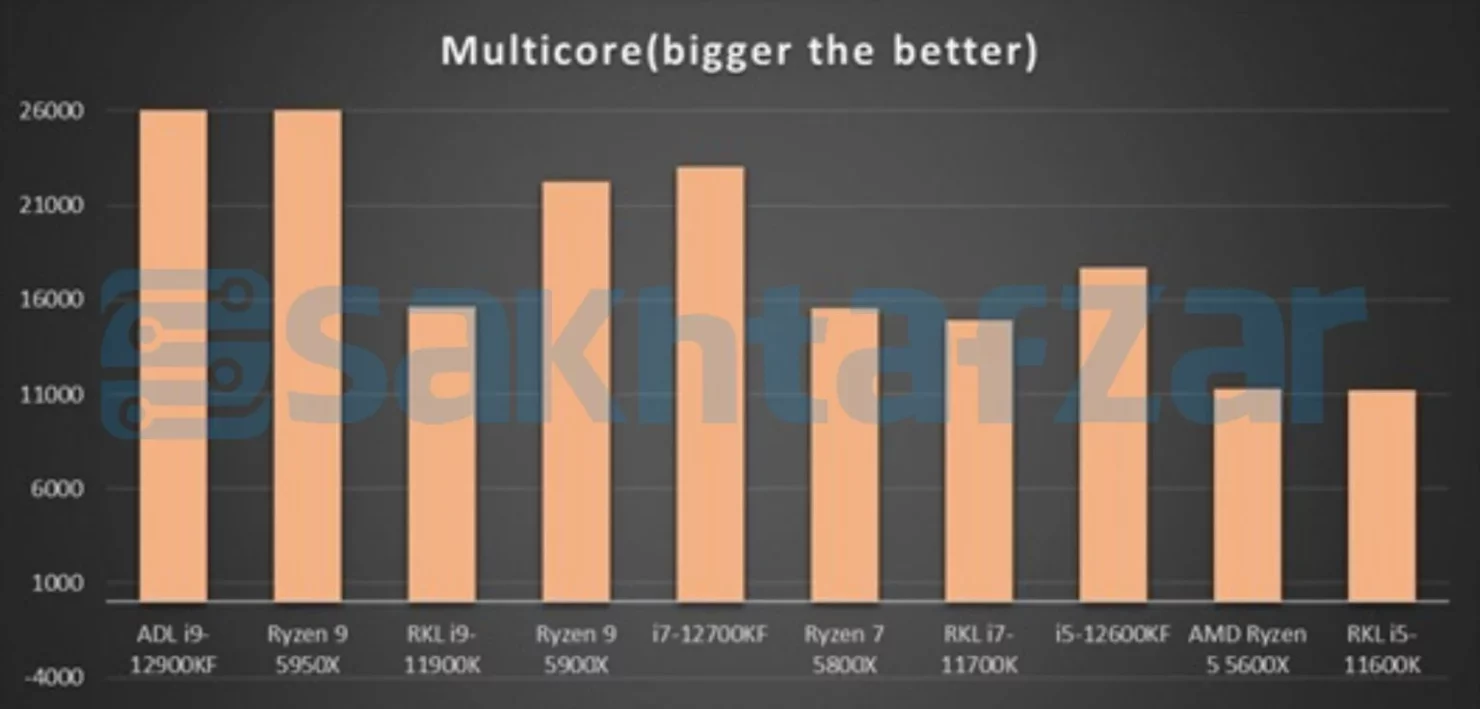

According to leaked benchmarks, Intel 12th gen qualification samples are already beating, matching or trading blows with AMD’s 5000 retail offerings. And that’s not even accounting for an expected 10% aggregated performance boost from final stepping, final clock speeds, final microcode, final Windows 11 scheduler and decent DDR5 modules. Also, the pricing matchups favour Intel this time around. The $600/$575 12900K/12900KF rivals/beats the $800 5950X, the $420/$390 12700K/12700KF rivals/beats the $550 5900X and the $280/$260 12600K/12600KF rivals/beats the $450 5800X. AMD 6000 series won't be much of an upgrade for most workloads. And certainly they won't be cheaper than their 5000 series counterparts: they will simply more or less replace them at the existing price points. Then AMD will just opt to (occasionally) discount/firesale the 5000 series to match Intel. So even if you are holding out for a 5900X, now is not the right time to buy. It could soon be discounted to $420-$450.

Definitely waiting for them to work the problems out of this one. For $550 the 5900X looks like a better prospect (or its 6000 series successor; won't take much to slap around the i9, I'm thinking).
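For anyone who wants the price gaps from those matchups spelled out, here is a quick sketch in Python. It only uses the list prices quoted in the post above (K models, not KF); the `price_gap` helper and the `matchups` list are made up for illustration, and none of this says anything about actual performance.

```python
# List prices in USD as quoted in the post above; not measured or verified data.
matchups = [
    ("12900K", 600, "5950X", 800),
    ("12700K", 420, "5900X", 550),
    ("12600K", 280, "5800X", 450),
]


def price_gap(intel_price, amd_price):
    """Return (gap in USD, gap as a fraction of the AMD price)."""
    gap = amd_price - intel_price
    return gap, gap / amd_price


for intel_name, intel_price, amd_name, amd_price in matchups:
    gap, fraction = price_gap(intel_price, amd_price)
    print(f"{intel_name} vs {amd_name}: ${gap} cheaper ({fraction:.0%} of the AMD price)")
```

Whether those gaps hold up depends on street prices and on any discounting of the 5000 series, which the post itself expects.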

There is no chipset fan with Z690 boards. Leaked Z690 boards show there is no chipset fan. The reason AMD needed a fan on X570 was because the chipset was built on 16nm (marketed as ‘12nm’ by GloFo). And you are wrong about DDR5 needing more power than DDR4. Each generation of DDR is specifically designed to be more power efficient than the previous one (and one way of achieving this is using a lower voltage). It also brings a form of on-die ECC.

Also I don't really want heatsinks and fans for memory and chipsets... so I think I will hold out until the second run of DDR5 CPUs.

Cinebench R23

That's just a stupid way of thinking. That's like someone refusing to pay more for a V6 engine than a V8 because 8 is a bigger number than 6, ignoring that there are factory V6 engines generating multiple times more HP than some factory V8 engines. If you're smart, you pay for performance, not for cores. For CPUs, if the multithreaded performance is equal, then the fewer cores it has, the better. As your software requires fewer threads, the CPUs with lower core counts will increasingly get faster vs the higher core count competition. If your software only uses 4 threads, then you're stuck using a maximum of 25% of your 16-core CPU's HP, while being able to utilize 50% of the potential of your 8-core.

People thought I was being cynical for calling this an 8-core processor, with Intel trying to trick the layman into paying 16-core pricing.
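The 25% vs 50% figures in that argument are easy to check with a rough sketch. It assumes one busy thread per core, identical cores and no SMT, which is a simplification (and plainly not true of a hybrid P/E design); `max_utilization` is a hypothetical helper, not anything from a real tool.

```python
def max_utilization(software_threads, cpu_cores):
    """Upper bound on the fraction of cores a fixed-thread workload can keep busy.

    Simplifying assumptions: one thread per core, all cores equal, no SMT.
    """
    return min(software_threads, cpu_cores) / cpu_cores


for cores in (8, 16):
    share = max_utilization(4, cores)
    print(f"4 threads on {cores} cores: at most {share:.0%} of the cores in use")
```

With those assumptions, a 4-thread workload tops out at a quarter of a 16-core chip and half of an 8-core one, which is the point being made; it says nothing about how fast each individual core is.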