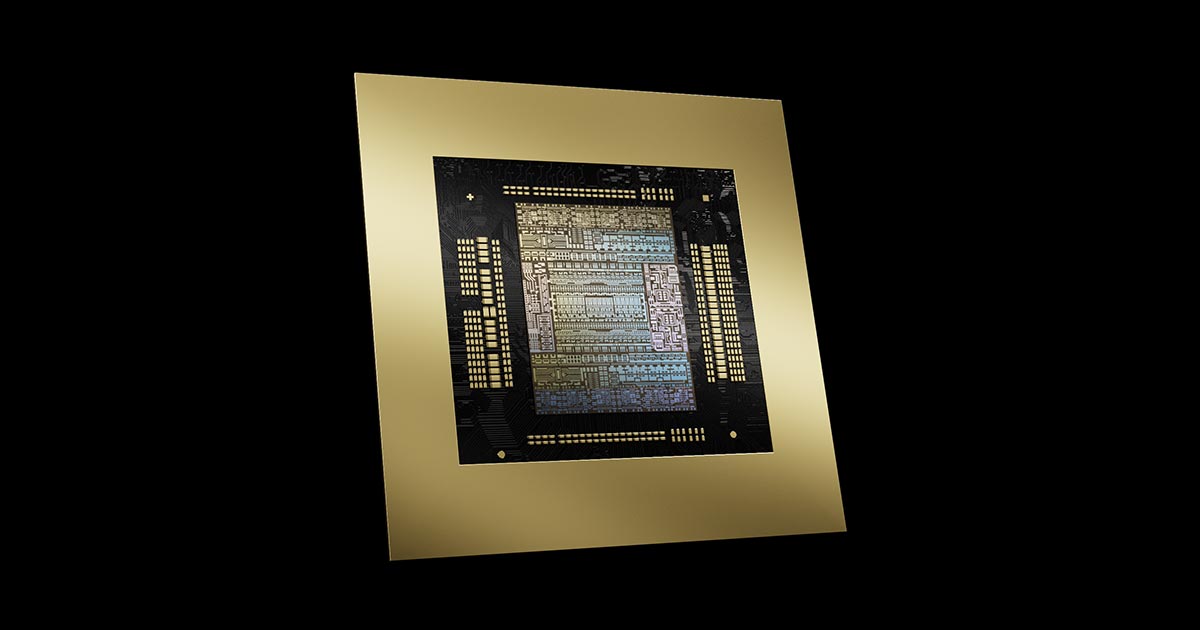

The U.S. government has updated its semiconductor export restrictions to cover more computer hardware. Specifically, the ban now includes Nvidia's RTX 4090D, which was designed to comply with the previous sanctions.

US wields the banhammer against sanctions-compliant Nvidia RTX 4090D 'Dragon' — updated law prohibits 70 teraflops or greater GPUs from export to C... : Read more
