libx264, libx265, and libsvtav1, using the "medium" preset on the first two and, I think, the default on AV1. I saw some odd behavior while testing: some of the presets caused problems on my 13900K, as in the CPU hit 100 °C and the PC crashed. I can't recall exactly, but I think the VMAF scores weren't increasing much with the slower encodes. That's probably down to my use of a target bitrate, which may limit how much a slower preset can actually gain.
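For anyone wanting to try something similar, here's a rough sketch of how a target-bitrate comparison across these three encoders could be scripted with ffmpeg, plus a VMAF pass. It only builds and prints the command lines. This is an illustration under assumptions, not the exact commands behind the results above: the file names and the 8M bitrate are placeholders, and the VMAF step assumes an ffmpeg build with libvmaf enabled.

```python
import shlex
from typing import List, Optional

# Placeholder settings (assumptions, not the values used in the tests above).
SOURCE = "source.y4m"
BITRATE = "8M"

# Encoder -> preset. None means "don't pass -preset", i.e. use the encoder's default.
ENCODERS = {
    "libx264": "medium",
    "libx265": "medium",
    "libsvtav1": None,
}


def encode_cmd(encoder: str, preset: Optional[str], out: str) -> List[str]:
    """Build an ffmpeg argument list for a target-bitrate (-b:v) encode."""
    cmd = ["ffmpeg", "-y", "-i", SOURCE, "-c:v", encoder]
    if preset is not None:
        cmd += ["-preset", preset]
    # -an drops audio so only the video encode is being compared.
    cmd += ["-b:v", BITRATE, "-an", out]
    return cmd


def vmaf_cmd(distorted: str) -> List[str]:
    """Score an encode against the source with ffmpeg's libvmaf filter.

    The libvmaf filter takes the distorted clip as the first input and the
    reference as the second; the score is printed in ffmpeg's log output.
    """
    return ["ffmpeg", "-i", distorted, "-i", SOURCE,
            "-lavfi", "libvmaf", "-f", "null", "-"]


if __name__ == "__main__":
    for enc, preset in ENCODERS.items():
        out = f"out_{enc}.mkv"
        print(shlex.join(encode_cmd(enc, preset, out)))
        print(shlex.join(vmaf_cmd(out)))
```

Because every encode is pinned to the same bitrate, a slower preset can only improve VMAF by spending that fixed budget more efficiently, which is one plausible reason the gains looked small. A quality-targeted mode would be the natural comparison point, but that wasn't what was tested here.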

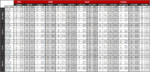

I guess I didn't make that entirely clear. The charts on this one are a bit of a kludge, so I left off the UHD 770 results. I didn't test the 7950X, mostly because this isn't really a CPU showdown, though I suspect its performance would be relatively close to the 13900K's. Here's the full table of data for reference. (It will probably look a lot better in here than it would on our main site! Too bad it didn't import the bold, borders, and centering.) Anyway, the 13900K is faster at CPU-based encoding than QuickSync on the UHD 770, which isn't too surprising given its 8 P-core, 16 E-core configuration.

Update! No, our forums did not like my table. Let's try pasting the image instead...

View attachment 211