I'm trying to boost my 3090's clock using the GreenWithEnvy app on Linux. I can apply a +200 MHz offset to the GPU clock, and the "max clock" reading reflects the boost. But when I put the card under load, the actual (current) clock hardly goes beyond 1900 MHz. Why is that?

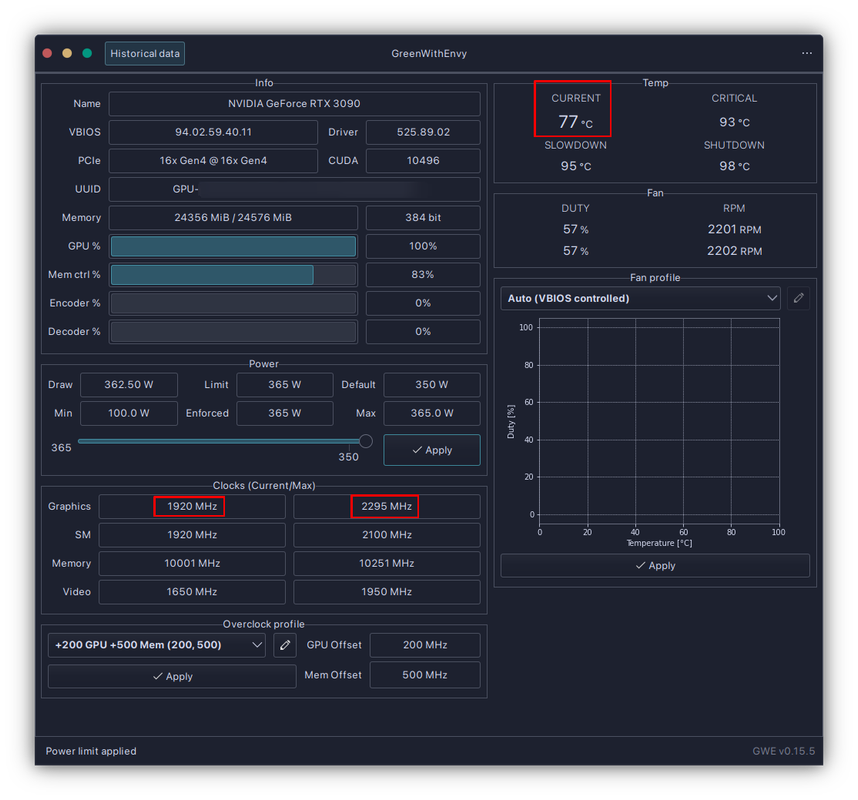

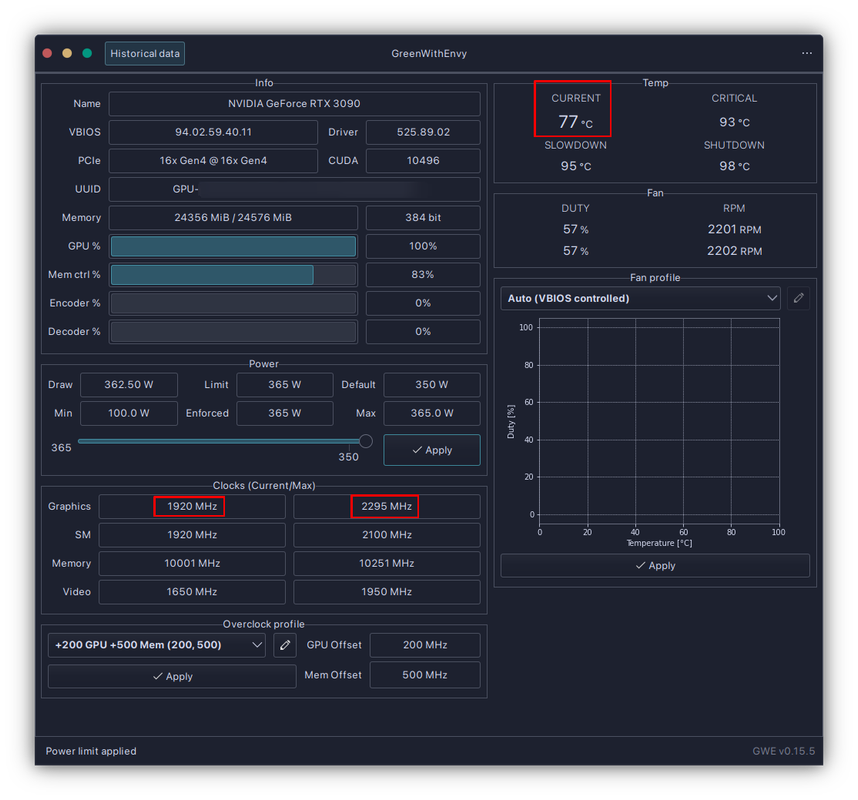

Here's a screenshot of my settings:
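The driver reports both the clock the card is actually running at and a bitmask of reasons it is holding that clock back. A minimal sketch to read these while the load is running might look like the code below. It assumes the `nvidia-ml-py` package (imported as `pynvml`) is installed, that the 3090 is GPU index 0, and that the bit values match the `nvmlClocksThrottleReason*` constants in `nvml.h`. `decode_throttle_reasons` is a hypothetical helper written for this example.

```python
# Bit values of NVML's nvmlClocksThrottleReason* constants (from nvml.h).
THROTTLE_REASONS = {
    0x1: "GpuIdle",
    0x2: "ApplicationsClocksSetting",
    0x4: "SwPowerCap",
    0x8: "HwSlowdown",
    0x10: "SyncBoost",
    0x20: "SwThermalSlowdown",
    0x40: "HwThermalSlowdown",
    0x80: "HwPowerBrakeSlowdown",
    0x100: "DisplayClockSetting",
}


def decode_throttle_reasons(mask: int) -> list[str]:
    """Hypothetical helper: return the names of all throttle-reason bits set in `mask`."""
    return [name for bit, name in THROTTLE_REASONS.items() if mask & bit]


def main() -> None:
    # Assumes nvidia-ml-py is installed and an NVIDIA driver is loaded;
    # GPU index 0 is assumed to be the 3090.
    import pynvml

    pynvml.nvmlInit()
    try:
        handle = pynvml.nvmlDeviceGetHandleByIndex(0)
        current = pynvml.nvmlDeviceGetClockInfo(handle, pynvml.NVML_CLOCK_GRAPHICS)
        maximum = pynvml.nvmlDeviceGetMaxClockInfo(handle, pynvml.NVML_CLOCK_GRAPHICS)
        mask = pynvml.nvmlDeviceGetCurrentClocksThrottleReasons(handle)
        power_w = pynvml.nvmlDeviceGetPowerUsage(handle) / 1000  # reported in mW
        limit_w = pynvml.nvmlDeviceGetEnforcedPowerLimit(handle) / 1000  # reported in mW
        temp_c = pynvml.nvmlDeviceGetTemperature(handle, pynvml.NVML_TEMPERATURE_GPU)
        print(f"graphics clock: {current} MHz (max {maximum} MHz)")
        print(f"power: {power_w:.0f} W of {limit_w:.0f} W, temperature: {temp_c} C")
        print("throttle reasons:", decode_throttle_reasons(mask) or ["none"])
    finally:
        pynvml.nvmlShutdown()


if __name__ == "__main__":
    main()
```

Running it while the GPU is loaded shows whether the driver attributes the lower clock to, for example, the power cap or a thermal limit, alongside the current power draw and temperature.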