Hello,

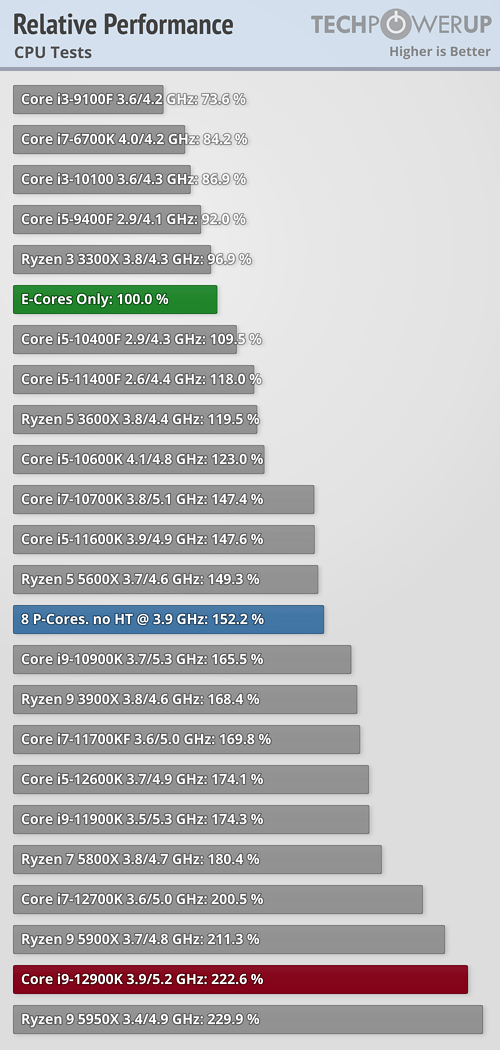

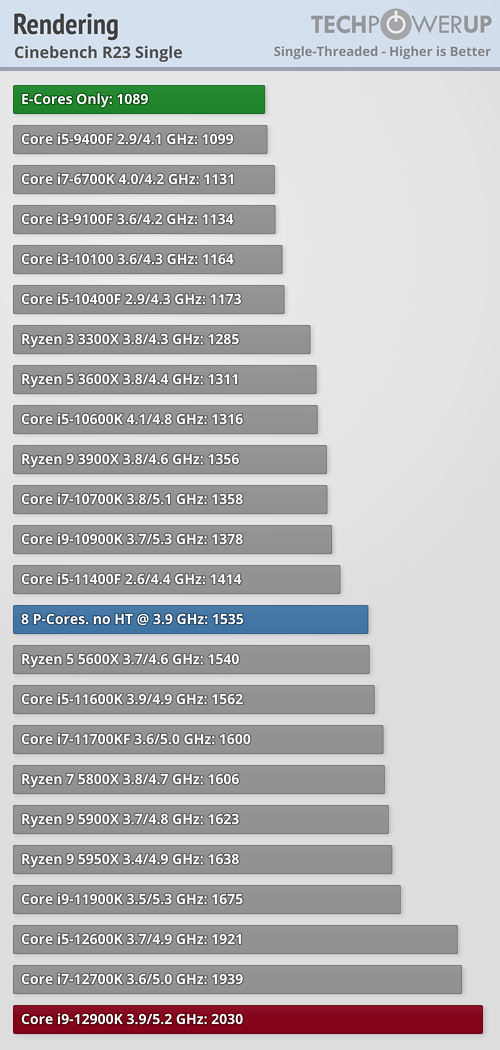

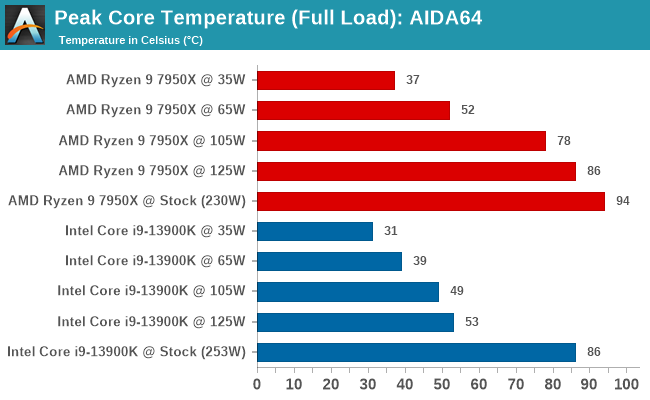

I know Intel CPUs have had 8 performance cores since the 9th generation. I was wondering why Intel chose to give the i9-13900K 16 efficiency cores rather than 8 (or 4) more performance cores. Is it because of overheating issues?

I might be wrong, but I think 10, 12, or even 16 performance cores would look great!
