Maybe it is due to poor game engine scalability.

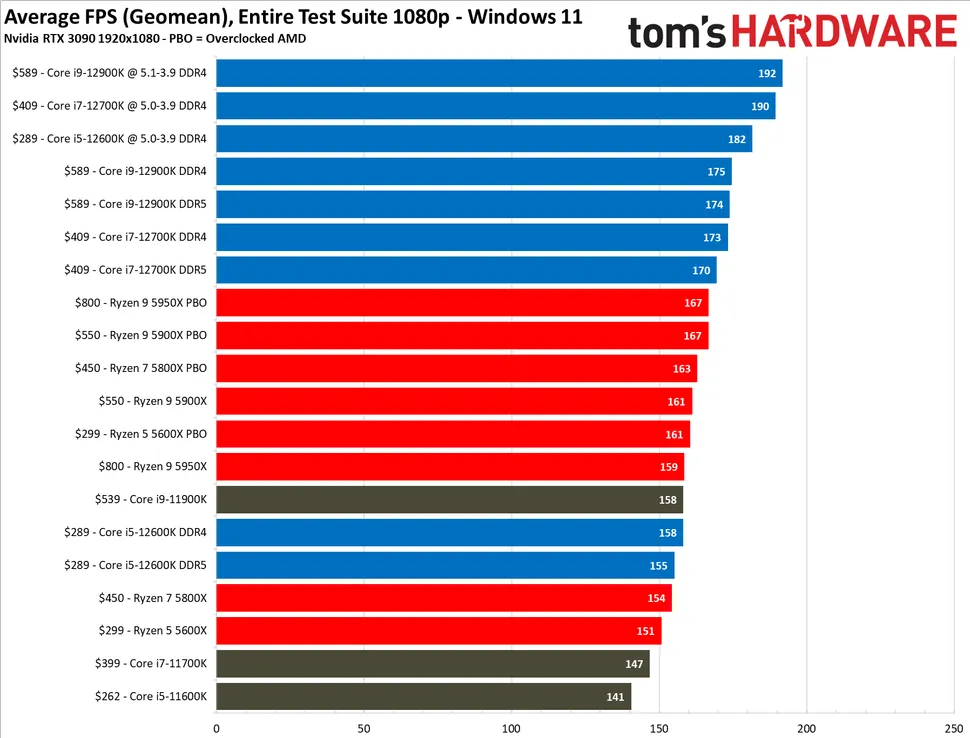

Both charts I linked are aggregate results from multiple games.

The game engine gives too much work upfront rather than sequencing it properly. For example, it needs to render all those frames and calculate metadata about how objects outside of your view move. Instead of making sure the information it needs to render now is processed first, the game engine mixes in information that is unnecessary.

Every frame needs to have objects calculated and positioned on it. The more frames you generate per second, the faster you have to complete those calcs before the next frame can be processed.
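To put numbers on that, here is a minimal sketch of the per-frame time budget. The function name and the FPS values are just illustrative picks, not measurements from any game.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to finish ALL per-frame work, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Illustrative FPS targets only; the budget shrinks as the target rises.
for fps in (30, 60, 144, 240):
    print(f"{fps:>3} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
```

Going from 60 to 240 FPS cuts the time available for each frame's calcs to a quarter.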

Since CPU has access time, processing speeds and limited cache, it can't process all this information immediately. This is when render latency kicks in.

Yes, laws of physics apply. Computing speed also increases every generation (and has since your i5-4670 in 2013).

If on the other hand these two tasks would be separated to core 0 and core 1, CPU could render both of those tasks without a delay.

Yes, this is multi-threading. It's been a thing for a while. As I mentioned with scalability though, there are only so many chunks you can chop a task into and pass it efficiently through a CPU. Also, all workloads scale differently.

In my view, game engines are not ideally optimised, this is why we are seeing under-utilized CPUs being able to offer slightly different performance.

Again, it's exponentially more difficult for the devs of the game engine, as well as the devs of the game to scale performance to today's high core count CPUs and get meaningful performance gains. Diminishing returns. On the flipside, if a game/engine is optimized for, say, 16 threads and doesn't scale DOWN to the most common 4-8 threads, then you're alienating the majority of your customer base that can't afford a $500+ CPU. Because of this, it has been and always will be the most efficient to rely on per-core performance for inter-generational gains.
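A rough way to picture those diminishing returns is Amdahl's law, which I'm bringing in here as a simplified model and not as how any particular engine behaves. The 70% parallel fraction below is a made-up number purely for illustration.

```python
def amdahl_speedup(parallel_fraction: float, threads: int) -> float:
    """Amdahl's law: best-case speedup when only part of the work can be split."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if threads < 1:
        raise ValueError("threads must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / threads)


# Hypothetical workload: 70% of each frame's work can run in parallel.
PARALLEL_FRACTION = 0.7
for threads in (1, 2, 4, 8, 16, 32):
    speedup = amdahl_speedup(PARALLEL_FRACTION, threads)
    print(f"{threads:>2} threads -> {speedup:.2f}x")
```

Under that assumption the speedup can never exceed 1 / 0.3, roughly 3.3x, no matter how many threads you add, and most of the gain is already there by 4-8 threads.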

A good example is Ryzen 7 5800X3D vs Intel's i9-12900KS. The Ryzen chip has more internal memory. It is able to load more tasks into its memory, where it can access the necessary information faster and thus offer better performance despite being a weaker chip overall.

- We don't have "official" benchmarks for the 5800X3D (aside from AMD's slides) yet.

- Given that I already said that most game engines don't utilize more than 6-8 threads, the reason you get more performance from one CPU to the next is how fast it can push data through a given core/thread. That can be done in many combinations of ways (including but not limited to):

  - CPU cache (L1/L2/L3) size and architecture

  - CPU frequency - Given the same CPU, 5GHz pushes data through faster than 2GHz.

  - IPC (instructions per clock) - You see this by setting two comparison CPUs (i.e. 5800X3D vs 12900KS) to the same frequency and noting their performance differences.
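As a back-of-the-envelope sketch of how frequency and IPC combine, per-core throughput is roughly IPC times clock speed. The IPC values below are hypothetical placeholders, not measured figures for any real CPU, and cache effects are assumed to be folded into the effective IPC.

```python
def instructions_per_second(ipc: float, freq_ghz: float) -> float:
    """Rough per-core throughput: instructions per clock times clocks per second."""
    return ipc * freq_ghz * 1e9


# Same (hypothetical) chip at two clock speeds: throughput scales with frequency.
slow = instructions_per_second(ipc=2.0, freq_ghz=2.0)
fast = instructions_per_second(ipc=2.0, freq_ghz=5.0)
print(f"5GHz vs 2GHz, same IPC: {fast / slow:.2f}x")

# Two hypothetical chips locked to the same clock: the difference is IPC alone.
chip_a = instructions_per_second(ipc=2.0, freq_ghz=4.0)
chip_b = instructions_per_second(ipc=2.4, freq_ghz=4.0)
print(f"Chip B vs chip A at 4GHz: {chip_b / chip_a:.2f}x")
```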

So to conclude, I think that it is an issue of trying to process too much time sensitive information at once and under-utilizing hardware.

But...EACH frame is time sensitive. And you want more FPS. Not sure if you're suggesting "predictive" rendering for future frames and/or retaining as much of the previous frame as possible and only calculating the differences. I have to imagine that's already being done since it's a simple concept. Unfortunately the game can only predict so much. It doesn't KNOW that you're going to shoot that window or just look through it until you click your mouse. The problem with predictive rendering is just that, it introduces a whole swath of calculations that need to be done for scenarios that may or may not actually occur, hence adding unnecessary overhead and impacting "optimization".

It is quite uncommon to see CPU as a bottleneck as in general, CPUs tend to be faster than games need them to be and it is GPU which struggles to keep up with tasks.

I showed you charts that disprove this statement. Likewise, per my earlier post, you yourself can disprove this statement by lowering in-game quality/resolution and seeing a higher FPS as a result.
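If it helps, here is a deliberately simplified model of that test. It assumes the delivered FPS is whichever of the CPU limit and the GPU limit is lower, and that GPU cost scales with pixel count. All the numbers are hypothetical, not taken from the charts.

```python
BASE_PIXELS = 1920 * 1080  # reference resolution for the GPU figure


def delivered_fps(cpu_fps_limit: float, gpu_fps_at_1080p: float, pixels: int) -> float:
    """Simplified model: the slower of the CPU and GPU limits sets the frame rate."""
    gpu_fps_limit = gpu_fps_at_1080p * BASE_PIXELS / pixels
    return min(cpu_fps_limit, gpu_fps_limit)


# Hypothetical system: CPU can prepare 150 FPS, GPU can draw 200 FPS at 1080p.
resolutions = {"4K": 3840 * 2160, "1440p": 2560 * 1440, "1080p": 1920 * 1080}
for name, pixels in resolutions.items():
    fps = delivered_fps(150, 200, pixels)
    print(f"{name:>5}: {fps:.0f} FPS")
```

In this sketch FPS climbs as the resolution drops, right up until the CPU limit is reached. From that point on, a faster CPU is the only thing that raises it further.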