Is this a decent server to start a build on?

pcserverandparts.com


It comes with these two Xeons, each of which is only a little better than the Ryzen 5 PRO 2400GE, but the upside is that there are two for the price.

I'd like to get this and add some TB SSDs and a slim Blu-ray drive, then slowly buy and add RAM, aiming for about 512 GB total. If possible, I'd also like to build and add a custom water loop for the two Xeons.

And, if this doesn't sound too crazy, I'd put a Radeon-Pro-3200 in it and run all of this on TrueNAS CORE with some VMs: one Pop!_OS 23.04 LTS VM and two cloud-based Android OSes for two laptops, using something like anbox-cloud.io. I've got no idea how to do that, but I'd like to try.

Do you think this can be done, and if so, any pointers?

I'd like to run the OS and the VMs on a 2 TB NVMe drive on a PCIe adapter.

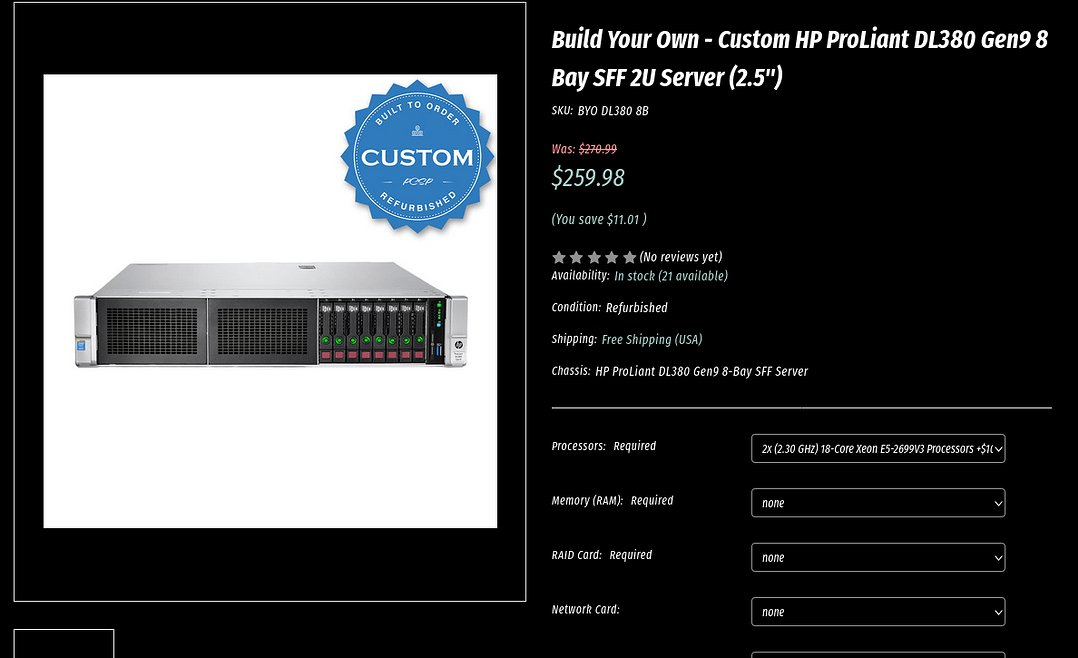

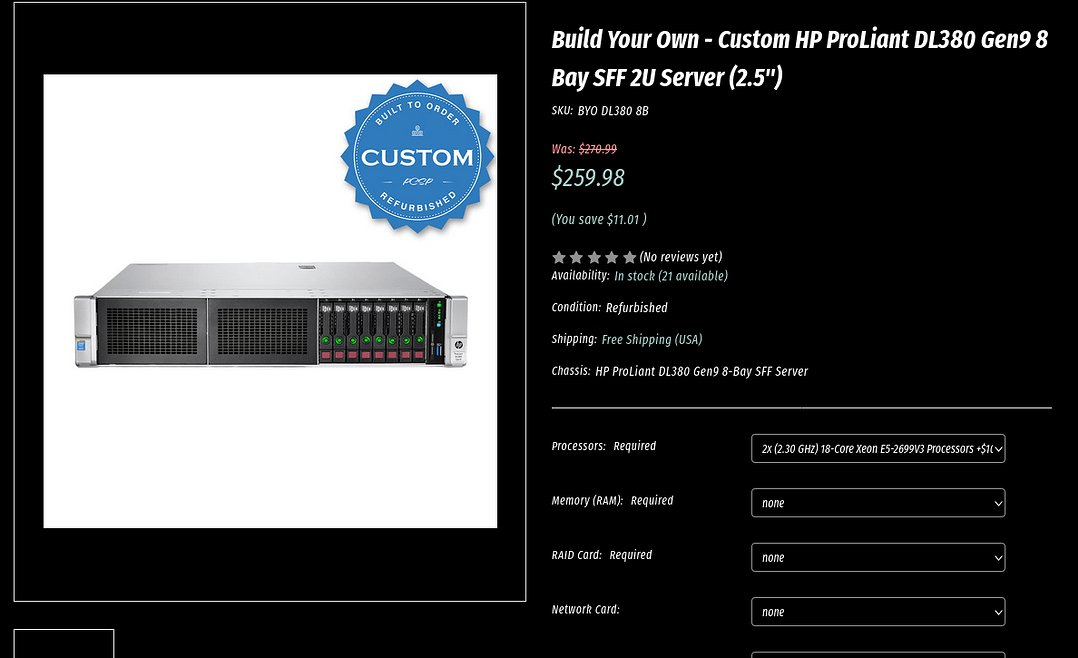

Build Your Own - Custom HPE ProLiant DL380 Gen9 8 Bay SFF 2U Server (2.5")

