> text at bottom says 16bit multiplied by n, align1 = 16bit, align2 = 32bit, align4 = 64bit, align8 = 128bit

Yes. And what that means is what I said. None of it has anything to do with @Kamen Rider Blade's thinking that TF32 values are packed in memory.

News AMD Addresses Controversy: RDNA 3 Shader Pre-Fetching Works Fine


Kamen Rider Blade

Distinguished

How many extra operations must you perform every time you convert to & from register just to use TF32?

What about when you have to store the data.

That's extra work / operations just to support TF32 if it's not natively supported in hardware & spec as a native fp type.

> How many extra operations must you perform every time you convert to & from register just to use TF32?

The code sample from the link I posted makes it look like a single instruction converts from FP32 -> TF32, probably for an entire 32-element warp.

> What about when you have to store the data.

That same link said there's no inverse conversion instruction, because TF32 values are conformant to FP32. That makes it sound like the conversion instruction just rounds off the fractional part and replaces the low-order bits with 0's.

> That's extra work / operations just to support TF32 if it's not natively supported in hardware & spec as a native fp type.

A conversion instruction and the fact that the tensor cores support it means it is supported in the HW, IMO.
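To make the "round off and zero the low-order bits" idea concrete, here is a minimal Python sketch that emulates such a conversion on the host. It is not Nvidia's implementation: the function name `to_tf32` is made up for illustration, and the tie-breaking rule (round to nearest, ties away from zero) is an assumption, since the thread doesn't state how the hardware rounds.

```python
import struct

def to_tf32(x: float) -> float:
    """Round an fp32 value to TF32 precision (10 explicit fraction bits).

    Assumption: round to nearest, ties away from zero. The input is
    expected to be representable as fp32 already.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if (bits >> 23) & 0xFF == 0xFF:
        return x  # leave infinities and NaNs untouched
    # Add half of the 13-bit field being dropped, then zero that field.
    bits = (bits + 0x1000) & 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

print(to_tf32(1 + 2**-12))  # below the halfway point, rounds down
print(to_tf32(1 + 2**-11))  # exactly halfway, rounds away from zero
```

The result is still an ordinary fp32 bit pattern with 13 trailing zero bits, which is consistent with there being no need for an inverse conversion.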

Kamen Rider Blade

Distinguished

> The code sample from the link I posted makes it look like a single instruction converts from FP32 -> TF32. Probably for an entire 32-element Warp.

I wonder how much work goes on underneath the instruction to get it to convert.

> That same link said there's no inverse conversion instruction, because TF32 are conformant to FP32. That makes it sound like the conversion instruction just rounds off the fractional part and replaces the low-order bits with 0's.

I'm not much into AI, so I don't know if "rounding off the fractional part and replacing the low-order bits with 0's" is 'Ok' to do.

> A conversion instruction and the fact that the tensor cores support it means it is supported in the HW, IMO.

In nVIDIA hardware specifically.

It's not part of IEEE 754 though, it's nVIDIA's own variant for their hardware, which has massive marketshare.

So that's fine for them.

But the bigger question is, should there be wider adoption by the standards bodies in the programming / hardware community?

Or is there an alternative solution?

kerberos_20

Champion

> Or is there an alternative solution?

alternative solution is fp16 or bfloat16, which also tells you if it's okay to use it or not: you just don't use fp16 if you need high precision

> alternative solution is fp16 or bfloat16,

To @Kamen Rider Blade's point about standardization, fp16 is actually included in the 2008 revision of IEEE 754.

It was only about 5 or 6 years ago that the Google Brain folks (Google's in-house AI research group) decided fp16 had the wrong balance between precision and range for AI. That's when they proposed BFloat16, which Google added to its own TPUs and which many hardware vendors have since adopted. Interestingly, it was not included in the 2019 revision of IEEE 754. If it continues to see widespread use, maybe it'll appear in the next revision.

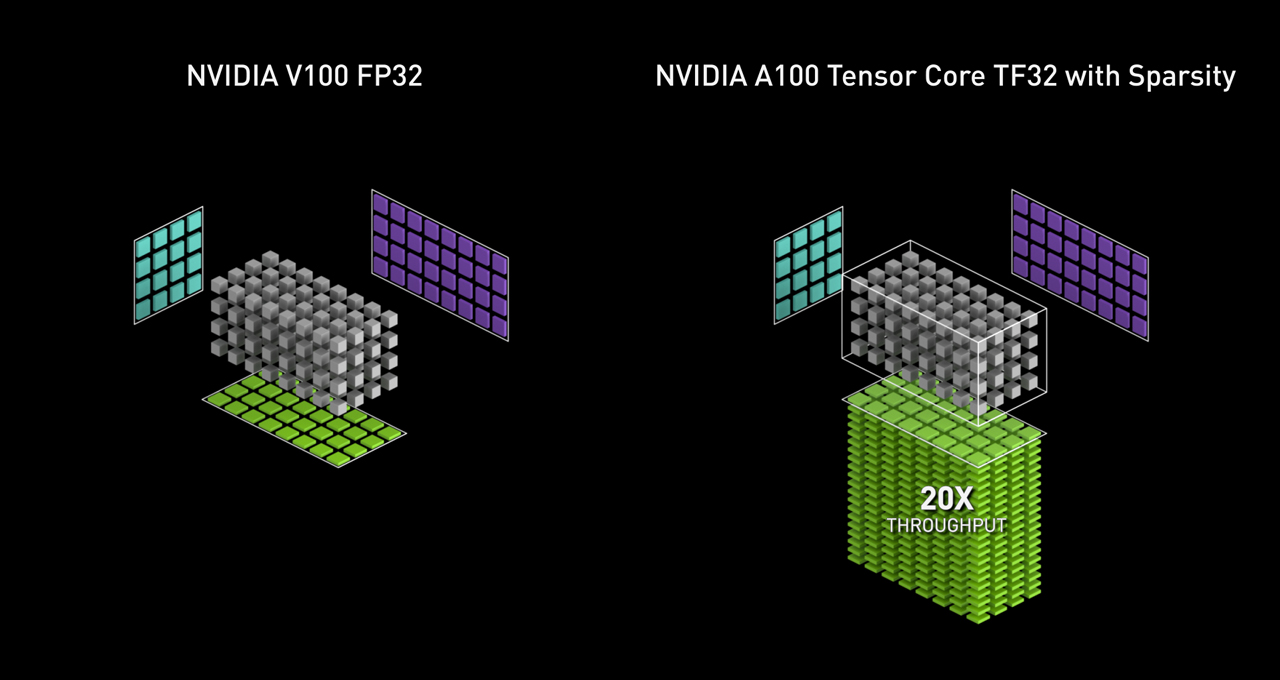

If you want to understand the rationale behind Nvidia TF32 format, here's probably the best explanation you'll find:

NVIDIA Blogs: TensorFloat-32 Accelerates AI Training HPC upto 20x

NVIDIA's Ampere architecture with TF32 speeds single-precision work, maintaining accuracy and using no new code.

blogs.nvidia.com

Something I hadn't previously noticed: it has just enough precision to exactly represent all fp16 values! That makes it a superset of both BFloat16 and fp16! In fact, it's the smallest such representation.
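The superset claim is easy to check by brute force, since fp16 and bf16 each have only 65,536 bit patterns. The sketch below assumes TF32 is laid out as 1 sign bit, 8 exponent bits and 10 fraction bits stored in the top 19 bits of an fp32 word, so a value "fits" when the low 13 fraction bits of its fp32 encoding are zero. The helper names are invented for this example.

```python
import math
import struct

def fp32_bits(x: float) -> int:
    """Return the raw 32-bit encoding of x as an fp32 value."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def fits_in_tf32(x: float) -> bool:
    """True if the 13 fraction bits that TF32 drops are all zero."""
    return fp32_bits(x) & 0x1FFF == 0

def all_fp16_fit() -> bool:
    """Check every finite fp16 value, including subnormals."""
    for pattern in range(1 << 16):
        value = struct.unpack("<e", struct.pack("<H", pattern))[0]
        if math.isfinite(value) and not fits_in_tf32(value):
            return False
    return True

def all_bf16_fit() -> bool:
    """A bf16 value is the top 16 bits of an fp32 encoding."""
    for pattern in range(1 << 16):
        value = struct.unpack("<f", struct.pack("<I", pattern << 16))[0]
        if math.isfinite(value) and not fits_in_tf32(value):
            return False
    return True

print(all_fp16_fit(), all_bf16_fit())
```

The "smallest such representation" part follows from the bit budget: bf16 forces 8 exponent bits, fp16 forces 10 fraction bits, and with the sign bit that comes to 19 bits.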

Kamen Rider Blade

Distinguished

> Something I hadn't previously noticed: it has just enough precision to exactly represent all fp16 values! That makes it a superset of both BFloat16 and fp16! In fact, it's the smallest such representation.

If that's the case, implementing a fp24 wouldn't be a bad idea.

AMD had implemented fp24 in the past; I'm sure they can implement it again in their hardware.

AMD had a special version of fp24 that matched Pixar's PXR24 format in bit size, but was slightly off in bit layout.

I'm sure they can make a slight adjustment to support PXR24.

This way it covers everything nVIDIA's TensorFloat covers & more.

- 1-bit: sign

- 8-bit: exponent

- 15-bit: fraction

That easily "Meets & Exceeds" nVIDIA's TensorFloat while being "Bit-Aligned".

Instead of bFloat16, we can call it aFloat24 =D

It should easily allow casting from FP32 down to AMD's FP24 formats.
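As a rough illustration of why the cast would be cheap, here is a Python sketch of a 1/8/15 layout treated as the top 24 bits of an fp32 word. The functions `fp32_to_fp24` and `fp24_to_fp32` are hypothetical names, the conversion simply truncates (rounds toward zero), and nothing here is taken from an actual AMD or Pixar specification.

```python
import struct

def fp32_to_fp24(x: float) -> int:
    """Pack x into a hypothetical 24-bit word: 1 sign, 8 exponent, 15 fraction bits.

    Drops the 8 low fraction bits of the fp32 encoding (truncation).
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    word = bits >> 8
    if x != x:
        word |= 0x4000  # keep a fraction bit set so a NaN stays a NaN
    return word

def fp24_to_fp32(word: int) -> float:
    """Widen the 24-bit word back to fp32 by appending 8 zero bits."""
    return struct.unpack("<f", struct.pack("<I", (word & 0xFFFFFF) << 8))[0]

print(hex(fp32_to_fp24(1.0)))
print(fp24_to_fp32(fp32_to_fp24(1 + 2**-15)))  # still representable
print(fp24_to_fp32(fp32_to_fp24(1 + 2**-16)))  # truncated back to 1.0
```

Because the exponent field is the same width as fp32's, the conversion in both directions is a shift; a format with a narrower exponent would also need range checks.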

Then we need to convince IEEE 754 that it's worth adding these FP24 formats into the standard and get all the compilers to support it.

> Then we need to convince IEEE 754 that it's worth adding these FP24 formats into the standard and get all the compilers to support it.

I still don't know what problem you're trying to solve, here.

Anyway, a nice thing about TF32 is that it's already usable as fp32. The only compiler support needed is to convert to it, but you do that on the GPU and Nvidia's tools already support it.

Kamen Rider Blade

Distinguished

> I still don't know what problem you're trying to solve, here.

Wider Standards support for more fp data types on CPU & across the industry in all sorts of hardware.

> Anyway, a nice thing about TF32 is that it's already usable as fp32. The only compiler support needed is to convert to it, but you do that on the GPU and Nvidia's tools already support it.

But that's proprietary to nVIDIA.

I'm not a big fan of "Proprietary" in any way / shape / form.

I like wide open standards support by the industry using the same data types.

Making things nice and portable between all vendors of Hardware & Software.

> Wider Standards support for more fp data types on CPU & across the industry in all sorts of hardware.

That just increases hardware complexity.

BTW, since I noticed that TF32 can losslessly represent both fp16 and bf16, I have come to believe the reason they did it was simply because it's what the underlying hardware had to do to support both formats. Then, someone got the bright idea to expose it, so that users could simultaneously utilize both the range of bf16 and the precision of fp16. I don't think the format is as arbitrary as we might've presumed.

> But that's proprietary to nVIDIA.

So is CUDA. They seem to like it that way. Vendor lock-in, you know?

> I like wide open standards support by the industry using the same data types.

Same, but I doubt you'll convince Nvidia of that. Especially if it makes their hardware more expensive, less efficient, and/or slower.

> I don't. AMD GPU drivers have notoriously been slow to extract the full power of their GPUs. This is where the mis-'belief' that AMD GPUs age like fine wine comes from. In reality, Nvidia has a much larger software team dedicated to drivers, and they tend to extract the most out of their GPUs right away, whereas due to AMD's more limited team size it takes them months to years to fully utilize their silicon. So yeah, if history is any indicator, in two years' time these 7000 series GPUs could gain an additional 10 percent or more in performance.

Oh I'm fully aware, and I frequently point this out to others. It just seems like too much of a performance deficit to be explained by "fine wine" right now. The average performance in benchmarks right now is indicating essentially zero performance improvement from the dual-issue shaders, while nVidia got a ~15% improvement adding support for parallel INT execution with the 20 series, and another 25% adding FP support to that INT pipeline with the 30 series. Of course some of that improvement will be due to other architectural changes, but I don't have any way to break it down. Either way, that seems like far too much ground to make up to just be explained by drivers.

Kamen Rider Blade

Distinguished

> That just increases hardware complexity.

::shrugs:: Oh Well.

I'm sure everybody has said that about every new instruction set added by Intel.

Sometimes you gotta put in the work to have new options for improved long term performance.

> I don't think the format is as arbitrary as we might've presumed.

Neither is my proposal for more fp data types; it's not arbitrary.

The current fp data types are very "One Size Fits All", without enough finesse in terms of data size options for end users to choose from.

It's like clothing sizes, we need more options since people's body types vary by a wide degree.

> So is CUDA. They seem to like it that way. Vendor lock-in, you know?

I know! That's nVIDIA's way, they're the proprietary everything vendor.

Many people in the industry HATE them for that.

Especially the "Open Source" community.

> Same, but I doubt you'll convince Nvidia of that. Especially if it makes their hardware more expensive, less efficient, and/or slower.

I don't expect to convince Jensen Huang of anything; he's happy on his perch at the top of his little dGPU mountain with his 80% dGPU marketshare.

But the rest of the industry can work on solutions and options that are "Open Standards".

That's why I want to see fp24 so badly.

It is the middle size data type between fp16 & fp32 that has been missing and can be used appropriately for AI or Gaming.

Depends on which variant of fp24 you want to use.

Each one has its use case.

> I'm sure everybody has said that about every new instruction set added by Intel

When Intel added a new instruction, the hardware implementing it would usually have to be added per-core, meaning somewhere between only one and a couple dozen times per CPU. When a GPU adds something like that, it gets replicated anywhere between a hundred and tens of thousands of times.

> The current fp data types are very "One Size Fits" all and not enough finesse in terms of data size options for end users to choose from.

On Nvidia, you have:

- fp8 (Hopper and later)

- fp16

- bf16 (Ampere and later)

- tf32 (Ampere and later)

- fp32

- fp64
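For a rough sense of how the formats in the list above trade range against precision, the following sketch computes the largest finite value and the spacing just above 1.0 from each format's exponent and fraction widths. It assumes IEEE-style conventions (standard bias, top exponent code reserved for inf/NaN). The thread doesn't say which fp8 variant is meant, so E5M2 is picked here as an assumption because it follows those conventions; TF32 is counted by its 19 significant bits even though it occupies a 32-bit word.

```python
# (exponent bits, explicit fraction bits); one sign bit is implied.
FORMATS = {
    "fp8 (E5M2)": (5, 2),
    "fp16": (5, 10),
    "bf16": (8, 7),
    "tf32": (8, 10),
    "fp32": (8, 23),
    "fp64": (11, 52),
}

def max_finite(exp_bits: int, frac_bits: int) -> float:
    """Largest finite value, assuming an IEEE-style bias and a reserved top exponent."""
    bias = 2 ** (exp_bits - 1) - 1
    return (2 - 2.0 ** -frac_bits) * 2.0 ** bias

def epsilon(frac_bits: int) -> float:
    """Gap between 1.0 and the next representable value."""
    return 2.0 ** -frac_bits

for name, (e, m) in FORMATS.items():
    print(f"{name:<11} bits={1 + e + m:>2}  max={max_finite(e, m):.3e}  eps={epsilon(m):.1e}")
```

Read this way, bf16 and tf32 share fp32's range, while fp16 and tf32 share the same precision.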

Kamen Rider Blade

Distinguished

> On Nvidia, you have: fp8, fp16, bf16, tf32, fp32, fp64.
>
> That's as many as 6 sizes. I don't think we need any more. That's just my opinion.

Even if you cap the upper data size at fp64:

What about:

- fp24

- fp40

- fp48

- fp56

Those are all viable data sizes.

umeng2002_2

Reputable

The Ryzen 1000 launch was "incredibly rough"? I bought an 1800X on release, paired it with 64GB DRAM from the QVL, and it worked fine from Day 1. Other than enabling XMP I did nothing to juice it up. My impression then and now was that 90% of the "problems" were people who ignored the QVL and / or chose to go beyond stock settings. AMD advertised stock performance at a ground-breaking price for 8 cores, and delivered. You run it 1 Hz higher than stock and problems are on you.

People really only had issues with overclocking. Overclocking is so easy these days that people expect it to be flawless. It reminds me of people getting mad at AMD for USB issues when they OC their Infinity Fabric near the known limits.

> What about: fp24, fp40, fp48, fp56. Those are all viable data sizes.

But what is the value proposition? What is the benefit which justifies the extra hardware needed to implement them? That's the part I'm struggling with.

Just because you can do something doesn't mean it's worth doing.

Kamen Rider Blade

Distinguished

> But what is the value proposition? What is the benefit which justifies the extra hardware needed to implement them? That's the part I'm struggling with.
>
> Just because you can do something doesn't mean it's worth doing.

Depending on the problem you're trying to solve, using an fp data type of the appropriate size would lead to RAM savings, which affects how fast you can load / process everything.

That's the entire point of having data types of the correct size.

If you don't need the full range of fp64, but you need more than fp32, there should be some options in between.

Same with fp16 and fp32.

It all boils down to how efficient you want to be about solving your problem.
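The RAM argument can be put into numbers with a small sketch. It compares storing values tightly packed against padding each value to the next power-of-two byte width. Whether real hardware would pad odd-sized types is an assumption here, not something established in the thread, and both helper names are invented for the example.

```python
def packed_bytes(count: int, bits_per_value: int) -> int:
    """Bytes needed to store `count` values back to back, rounded up."""
    return (count * bits_per_value + 7) // 8

def padded_bytes(count: int, bits_per_value: int) -> int:
    """Bytes needed if each value is padded to a power-of-two byte width."""
    width = 1
    while width * 8 < bits_per_value:
        width *= 2
    return count * width

if __name__ == "__main__":
    n = 1_000_000_000  # one billion values
    for bits in (16, 24, 32, 40, 48, 56, 64):
        print(f"fp{bits}: packed {packed_bytes(n, bits) / 2**30:.2f} GiB, "
              f"padded {padded_bytes(n, bits) / 2**30:.2f} GiB")
```

Under these assumptions the in-between sizes only save memory when they are stored packed; if fp24 is padded to 32 bits it costs the same as fp32.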

kerberos_20

Champion

> That's why I want to see fp24 so badly.
>
> It is the middle size data type between fp16 & fp32 that has been missing and can be used appropriately for AI or Gaming.

for gaming...

fp24 was abandoned with shader model 3.0

directx dictates a minimum storage precision of 10 bits; fp24 had 16-bit precision, but its exponent range was too small, so they went with fp32 instead

> Depending on the problem you're trying to solve, using a fp data type of the appropriate size would lead to RAM savings which affects how fast you can load / process everything.

you know, you can order customized silicon to your needs, right?

> Depending on the problem you're trying to solve, using a fp data type of the appropriate size would lead to RAM savings which affects how fast you can load / process everything.

One approach to this is memory compression. In graphics, texture compression is implemented in hardware and used quite a lot. In AI, some hardware uses similar methods to compress AI model weights.

> If you don't need the full range of fp64, but you need more than fp32, there should be some options in between.

fp64 is something of a special case. That's mainly used by science, finance, and engineering (mechanical, structural, aerospace, etc.) applications. They would probably prefer to have more precision, even at the expense of a little performance, than to tweak it down to the last few % at the risk of errors.

Kamen Rider Blade

Distinguished

> fp24 was abandoned with shader model 3.0
>
> directx dictates min precision storage 10bits, fp24 had 16bit precision, but exponent was low, it wasnt enough so they went with fp32 instead

Basically, the PXR 24-bit fp format would've been more useful then?

PXR's 1-bit sign, 8-bit exponent, & 15-bit fraction would be closer to what they wanted?

Or was it fp32 was the only acceptable solution?

> you know, you can order customized silicon to your needs, right?

That's not the point; having customized silicon isn't the solution I want to see.

It's to get the industry to go in on the new expanded standards together.

Be more flexible on data type size.

Kamen Rider Blade

Distinguished

> One approach to this is memory compression. In graphics, texture compression is implemented in hardware and used quite a lot. In AI, some hardware uses similar methods to compress AI model weights.
>
> fp64 is something of a special case. That's mainly used by science, finance, and engineering (mechanical, structural, aerospace, etc.) applications. They would probably prefer to have more precision, even at the expense of a little performance, than to tweak it down to the last few % at the risk of errors.

But Gaming and other fields can get by with less precision and have done so in the past.

Not everything needs absolute precision of the larger data-types.

And you can still use memory compression on smaller data types.

> Basically, the PXR 24-bit fp format would've been more useful then?

No, because it's dictionary-based. That's not friendly for being accelerated in texture engines.

You might find it interesting to read about the texture compression formats currently in use.

> But Gaming and other fields can get by with less precision and have had done so in the past.

Games aren't the ones using fp64 (with perhaps the rare exception). fp64 is typically implemented at 1/32 the rate of fp32, in gaming GPUs. That's a strong incentive for games not to use it.

Kamen Rider Blade

Distinguished

> No, because it's dictionary-based. That's not friendly for being accelerated in texture engines.

That's not what I mean; I'm talking about the bit-arrangement of PXR24 vs AMD's FP24 format.

> You might find it interesting to read about the texture compression formats currently in use.

Ok, when I get free time.

> Games aren't the ones using fp64 (with perhaps the rare exception). fp64 is typically implemented at 1/32 the rate of fp32, in gaming GPUs. That's a strong incentive for games not to use it.

I know, most Games have no real reason to use FP64 for numerous reasons.

But somewhere in between FP32 & FP64 might have a data type that could be useful for certain use cases.

kerberos_20

Champion

> But somewhere in between FP32 & FP64 might have a data type that could be useful for certain use cases.

the fpu can handle 80-bit... you can use it if you need higher precision

Kamen Rider Blade

Distinguished

> fpu can handle 80bit..u can use it if you need higher precision

Is this what you're talking about:

https://en.wikipedia.org/wiki/Extended_precision#x86_extended_precision_format

This is what I'm talking about:
