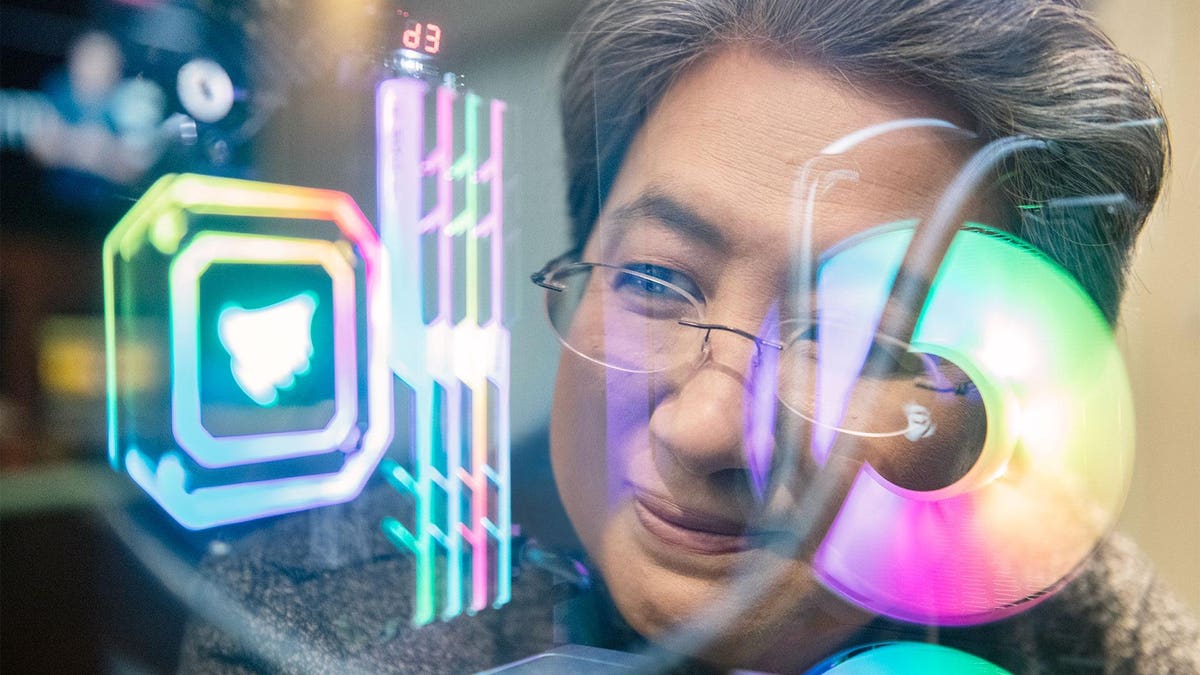

AMD demoed its Ryzen XDNA AI engine at its offices in Taipei, Taiwan, during Computex 2023.

AMD Demoes Ryzen AI at Computex 2023

AI for the masses.

Here we can see the XDNA AI engine crunching away at a facial recognition workload.

> Why do you need "AI" that recognizes faces on a consumer laptop? You're going to point your laptop camera at people until you get arrested or something?
>
> AI seems to be all about hype and "concepts", with very few practical use cases.

It's used to blur out the background during a video call, no green screen required. It's not all hype.

> It's used to blur out the background during a video call, no green screen required. It's not all hype.

You need AI for that? 🤔

> It's used to blur out the background during a video call, no green screen required. It's not all hype.

We've been able to blur a background for years without "AI chips".

> It's used to blur out the background during a video call, no green screen required. It's not all hype.

Newer Intel iGPUs already do that, as do Arc and Nvidia GPUs.

> We've been able to blur a background for years without "AI chips".

AI is good for upscaling. At least AI that doesn't come from AMD.

If that's the best example of AI, [Moderator edit to remove profanity. Remember that this is a family friendly forum.], then AI is way overhyped.

> Why do you need "AI" that recognizes faces on a consumer laptop? You're going to point your laptop camera at people until you get arrested or something?
>
> AI seems to be all about hype and "concepts", with very few practical use cases.

It's a tool, and people will find ways to use the tool if it is good.

> You need AI for that? 🤔
>
> It's the latest buzzword, that's all.

It's not so much "need" but rather "you can do this with 1W rather than loading the CPU at 75% and consuming 25W." Throwing out the term "buzzword" to dismiss something you don't understand doesn't mean the tech isn't actually doing something useful.

> Newer Intel iGPUs already do that, as do Arc and Nvidia GPUs.

Dedicated hardware should be faster and more efficient.

The Intel iGPUs are pretty bad at it, though, tbh.

> It's not so much "need" but rather "you can do this with 1W rather than loading the CPU at 75% and consuming 25W." Throwing out the term "buzzword" to dismiss something you don't understand doesn't mean the tech isn't actually doing something useful.

Yes, a "buzzword". And it'll continue to be that at least until we normal individuals benefit directly from this technology.

Smartphones? They all have AI accelerators now (or at least, the good ones do). They're being used to do cool stuff. Image enhancement, smart focus, noise cancelation. We talk to Siri and Google and Alexa and over time we're getting better and better results and we hardly even stop to think about it.

There was a time (about 15 years ago) when just doing basic speech recognition could eat up a big chunk of your processing power and could barely keep up with a slow conversation. Now we have AI tools that can do it all in real-time while barely using your CPU, or we can do it at 20X real-time and transcribe an hour of audio in minutes. That's just one example.

The stuff happening in automotive, medical, warehousing, routing, and more is all leveraging AI and seeing massive benefits. We have AI chip layout tools that can speed up certain parts of the chip design process by 10X or more (and they'll only become better and more important as chips continue to shrink in size). But sure, go ahead and call it a "buzzword."

> They're being used to do cool stuff. Image enhancement, smart focus, noise cancelation. We talk to Siri and Google and Alexa and over time we're getting better and better results and we hardly even stop to think about it.

All of these things existed before this "AI" hype. None of these examples require "AI chips".

I worked at Lernout & Hauspie for a while. Pretty much the pioneers of today's speech recognition. Microsoft ended up buying the tech. This tech is what Alexa, Siri and Cortana are still using today.

You know when they invented all these software patterns? 1987.

This idea that speech recognition is some new field fueled by AI is a bit ridiculous.

And I'll give you the benefit of the doubt on this idea that AI will somehow greatly enhance speech recognition (I haven't seen that happen, but OK). Even if that happens, is this the billion-dollar industry that the AI evangelists suggest? I don't think it is. I don't think it is at all.

> All of these things existed before this "AI" hype. None of these examples require "AI chips".
>
> I worked at Lernout & Hauspie for a while. Pretty much the pioneers of today's speech recognition. Microsoft ended up buying the tech. This tech is what Alexa, Siri and Cortana are still using today.
>
> You know when they invented all these software patterns? 1987.
>
> This idea that speech recognition is some new field fueled by AI is a bit ridiculous.
>
> And I'll give you the benefit of the doubt on this idea that AI will somehow greatly enhance speech recognition (I haven't seen that happen, but OK). Even if that happens, is this the billion-dollar industry that the AI evangelists suggest? I don't think it is. I don't think it is at all.

Speech recognition, trained on gobs of data (just sound files with the words behind them), is absolutely not what Dragon, Microsoft, Lernout and Hauspie, etc. did. Yes, the end result might seem similar, but the mechanics are fundamentally different. It's like saying, "We had algorithms that could play a perfect game of checkers back in the 90s" is the same as "We have a deep learning algorithm that taught itself to play checkers and now plays a perfect game."

> Here we can see the XDNA AI engine crunching away at a facial recognition workload.

No, it says "object detection" and "face detection". These are less computationally intensive than face recognition.

> Why do you need "AI" that recognizes faces on a consumer laptop? You're going to point your laptop camera at people until you get arrested or something?

First, it wasn't actually doing face recognition. However, let's say it was.

> You need AI for that? 🤔

To do it well, you do.

> We've been able to blur a background for years without "AI chips".

There's a big difference between sort of being able to do something and being able to do it well.

> I worked at Lernout & Hauspie for a while.

Not as a researcher, you didn't. If you had, you'd know that deep learning has revolutionized speech recognition.

> This tech is what Alexa, Siri and Cortana are still using today.

No. Back when Amazon started the Alexa project, far-field speech recognition could not be done. They had to lure some of the best researchers in the world and invent the tech.

> But what if I just want the computer to do what I tell it to do, not what it thinks I want it to do?

A lot of AI applications are just sophisticated pattern-recognition problems. Like finding cancerous tumors in X-ray photos, or maybe matching a disease diagnosis and treatment regimen with a set of lab results and symptoms.

> Yes, a "buzzword". And it'll continue to be that at least until we normal individuals benefit directly from this technology.

You are benefiting. You just aren't necessarily aware of when this tech is being used under the hood.

> Okay, it can speed up the process of chip design, but do you see it in effect? Are CPUs and GPUs getting cheaper? No. Actually, the opposite.

You're not comparing against the scenario in which it wasn't used. You can't see how much performance, efficiency, time-to-market, or cost is improved through its use. You just blindly assume it's not being used or that its use is entirely optional and inconsequential.

> it only benefits those businessmen by increasing their profits, with no real benefit for the consumer so far.

When corporate finances falter, products get cancelled or scaled back. Ultimately, business performance does matter to consumers.

> For now, AI is still a wounded dog. AI chips are there because there is so much potential, but it's still not there. Yes, it looks promising and potentially life-changing, but let's be honest: at the moment we still don't feel the impact. It will only get more overhyped over time for its potential, but it is far from being here, and not yet ready to help us become better as human beings.

The problem with AI vs. some other revolutionary technologies (e.g. cellphones, the internet) is that you can't externally see that it's being used. That's why you believe it's not benefiting you.

> Speech recognition, trained on gobs of data (just sound files with the words behind them), is absolutely not what Dragon, Microsoft, Lernout and Hauspie, etc. did. Yes, the end result might seem similar, but the mechanics are fundamentally different. It's like saying, "We had algorithms that could play a perfect game of checkers back in the 90s" is the same as "We have a deep learning algorithm that taught itself to play checkers and now plays a perfect game."

It's like trying to argue against fuel injection because we already had cars without it. Or against LCD monitors, because we can keep using CRT monitors.

forbes.com

> It's very disappointing they couldn't even provide a perf/W comparison vs. using their CPU cores or the iGPU to run the same workload.
>
> It's safe to say that AMD doesn't have any AI announcement ready. But given that all eyeballs are on AI at the moment, it's good PR to say something something AI.

Huh? I mean, they built the hardware into their CPUs and have been talking about it since late last year (IIRC).

> it does drive home the point that AMD's primary focus going forward will be to compete in AI hardware, for datacenters. Then the consumer GPU market will take a backseat, given both the lower margins and the fact that it's not a growth market. It's a logical take, even if it's not yet borne out in the current GPU iteration.

Eh, I think the gaming market is still too big for AMD to ignore. Even if it's not as rapidly growing, AMD could achieve substantial revenue growth if they manage to take a bigger share of it. Plus, it would set the stage for the next round of consoles. And they need to be thinking about continuing to develop competitive iGPUs.

> I expect the same incremental improvement (+10-15% raw perf) for future GPU iterations,

It should absolutely be better than generational CPU increases. One reason generational GPU performance gains have slowed is that new functional blocks are competing for some of the process node dividends. However, unlike with CPUs, it's almost easy to increase GPU performance simply by throwing more gates at the problem.