Look! Steve caused an adapter to melt... by not plugging it in correctly! That must be the only possible explanation for what's going on, right? Seriously, for all his "fact" talk, the reality is that we still don't have a clear answer on what is causing the problems, and, more importantly, we don't have a guaranteed solution. His rant about responsible reporting rings a bit hollow, considering he himself has been a major part of the theorycrafting that's taken place. Also, even though he mentioned FOD (foreign object debris) as a factor, like Igor, he has no actual proof that FOD caused any of the failures. He hasn't replicated that; he just showed that FOD is present and then jumped to a conclusion. Anyway, GN spent a LOT of money making these videos, but it's not as if he can actually solve the problem; that's up to Nvidia and its partners. The videos were made to boost his street cred and rack up views, while officially providing no answer whatsoever.

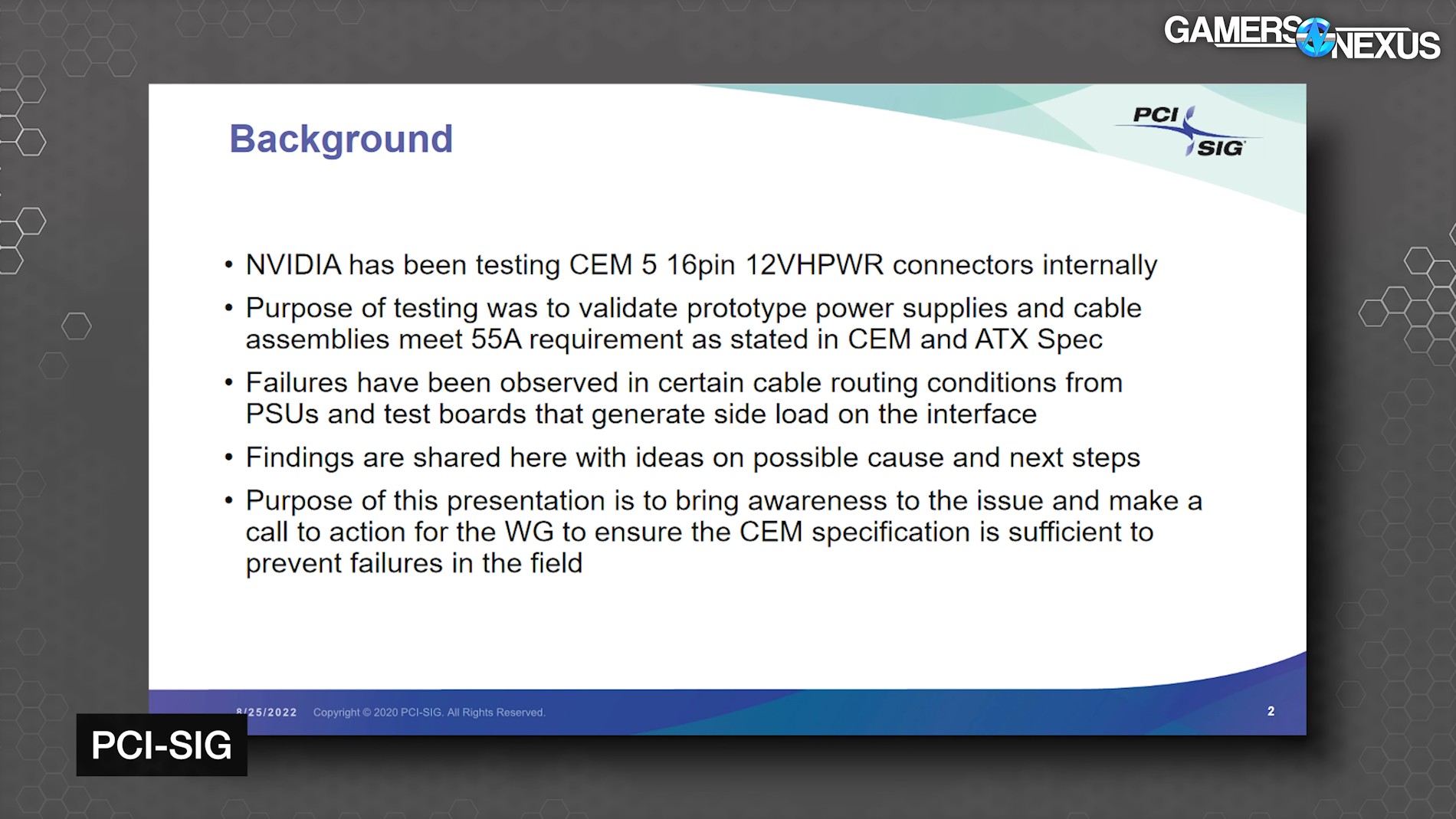

Not quite! The connector was created by PCI-SIG at the behest of Nvidia. That's important to remember. On its own, PCI-SIG almost certainly wouldn't have made this connector. Nvidia even pre-empted PCI-SIG's version with its own 12-pin connector two years ago. It's exactly the same, just without the sense pins, and, funnily enough, it might actually be safer: there's reason to suspect the four extra sense pins are part of what's causing people to install the connector incorrectly. If the true root cause is user error (not proved yet, but possible), then that points to a faulty design. People haven't been experiencing melting 8-pin and 12-pin connectors, so what has changed to cause problems now?

I'm also still quite concerned about connector longevity, since I swap GPUs in my test beds, often multiple times per day. That's why I have to use the adapters! If I didn't, I'd need a native 16-pin connector (I have one), and I'd likely be plugging and unplugging it more than 500 times a year. The 12-pin and 16-pin connectors just won't last under that sort of use, whereas history shows that the 8-pin connectors, being larger and more robust, manage just fine.