Caveats all around, as it will depend on the conductivity of the materials and such. There's no perfect conductor of either heat or electricity at the temperatures a PC runs at, so, to be pedantic, it depends on a few key elements:

- A CPU that runs hotter keeps more heat within itself, which makes its electrical properties a tad worse (more loss), so you can make the point that the hotter a CPU runs, the "less efficient" it is overall. The extremes are LN2 cooling for world records on one end and, on the other, extremely hot CPUs using much more power than actually needed (be it from more voltage applied or more amps to compensate) in order to hit certain target speeds. You can cross-check this with GPUs, as they've been exposed to this for longer.

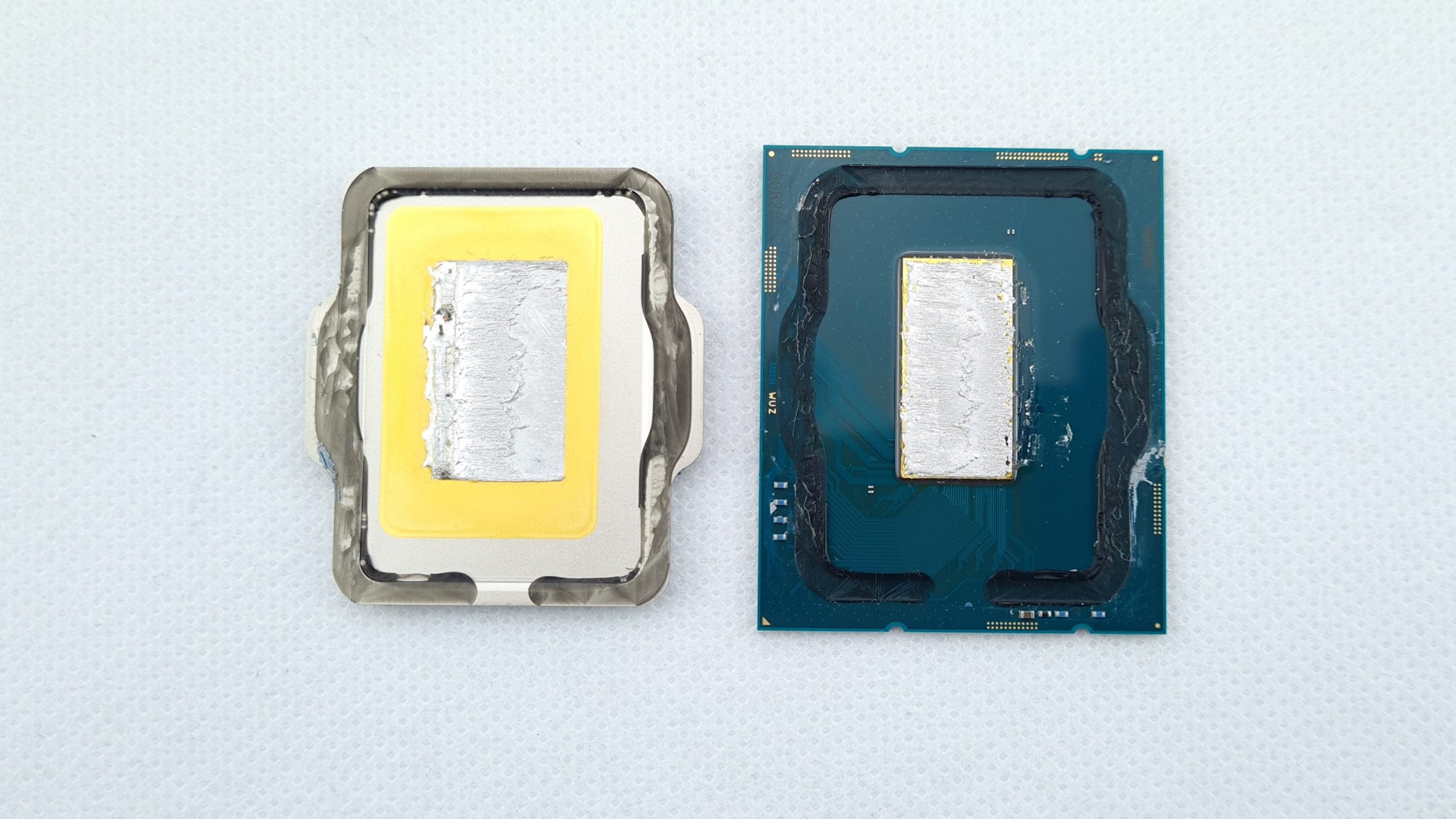

- In a thermally constrained environment, how you manage each "jump" from one material to another will largely determine how much heat you can move in total. More jumps, more loss (or, in this case, less transfer). So by delidding and using direct die contact, you take the IHS, and the chunk of transfer it costs you, out of the equation.

- Contact area. This affects Intel more than AMD (at least, no one has reported issues with AMD yet), and removing the IHS lets you reach, ideally, close to 100% contact area over the components that actually need their heat moved away. Until now, AMD CPUs ran at lower power, so moving that heat away wasn't a big problem, but now they've more than doubled the power, and all of it needs to be moved fast.

- Thermal capacity. This is about heat saturation of components. When something (metal) becomes heat saturated, it becomes a terrible conductor of, well, anything. This comes down to what the IHS is made of and, let's be real, it's probably very cheap stuff, otherwise they'd be using gold or copper. That means it'll saturate almost immediately and start moving less heat across than it would at lower operating temps. This sounds strange, but that's how a lot of materials behave. If AMD says "95°C is OK", I'd imagine they've done their homework and decided the IHS material's optimal operating temp for heat transfer is around the 90°C mark. Well, I doubt it.
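The "jumps" and contact-area points can be illustrated with a simple 1-D series thermal-resistance model: each layer has resistance R = t / (k·A), layers in series add, and the die sits at ambient plus power times total resistance. This is a minimal sketch only; every thickness, conductivity, area and the cooler's K/W figure below is a hypothetical placeholder, not a measured value for any real CPU, IHS or cooler.

```python
# Minimal 1-D series thermal-resistance sketch.
# ALL numbers are hypothetical placeholders, not measured CPU/IHS/cooler values.


def layer_resistance(thickness_m: float, k_w_per_mk: float, area_m2: float) -> float:
    """Conduction resistance of a flat layer, R = t / (k * A), in K/W."""
    return thickness_m / (k_w_per_mk * area_m2)


def die_temp(power_w: float, resistances_k_per_w: list[float], ambient_c: float) -> float:
    """Resistances in series add, so T_die = T_ambient + P * sum(R)."""
    return ambient_c + power_w * sum(resistances_k_per_w)


AREA = 1e-4  # 100 mm² contact area (hypothetical)

solder = layer_resistance(100e-6, 50.0, AREA)  # die-to-IHS TIM (placeholder k)
ihs = layer_resistance(3e-3, 390.0, AREA)      # IHS layer (placeholder k)
paste = layer_resistance(50e-6, 5.0, AREA)     # thermal paste under the cooler
cooler = 0.1                                   # cooler-to-air resistance, K/W

lidded = [solder, ihs, paste, cooler]          # four "jumps"
delidded = [paste, cooler]                     # direct die contact: two "jumps"

print(f"lidded:   {die_temp(250, lidded, 25):.1f} °C")
print(f"delidded: {die_temp(250, delidded, 25):.1f} °C")

# Contact area: halving the paste layer's area doubles its resistance.
print(layer_resistance(50e-6, 5.0, AREA / 2) / paste)
```

This 1-D model ignores lateral heat spreading inside the IHS (which in reality lets the paste above it work over a larger area), so it overstates the gain from delidding; it only shows the direction of the effect, not its size.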

All in all, a CPU that uses 250W won't necessarily send that same amount of heat to the HSF/cooler, because in AMD's current design it keeps some of it back, heating the motherboard and other components around it, which I do not like. I'm not sure what the split would be, but let's go with the good ol' 80/20: 80% of that energy is transferred as heat to the case/ambient and 20% stays behind, circulating and heating up other stuff. If you delid the CPU, that ratio improves: more heat goes to the ambient and less stays at the motherboard/CPU level, which also improves power efficiency.
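The split is simple arithmetic: all of the power the package draws ends up as heat, and the ratio only decides where that heat goes. A minimal sketch, where 80/20 is the rough guess from the paragraph above and the 90/10 "delidded" ratio is purely hypothetical:

```python
def split_heat(package_power_w: float, to_cooler_fraction: float) -> tuple[float, float]:
    """Return (watts sent to the cooler, watts left around the socket).

    All package power ends up as heat; the fraction only decides where it goes.
    """
    if not 0.0 <= to_cooler_fraction <= 1.0:
        raise ValueError("to_cooler_fraction must be between 0 and 1")
    to_cooler = package_power_w * to_cooler_fraction
    return to_cooler, package_power_w - to_cooler


# 80/20 is a rough guess; 90/10 for a delidded CPU is a hypothetical value.
for label, fraction in [("lidded (80/20 guess)", 0.8), ("delidded (90/10, hypothetical)", 0.9)]:
    cooler_w, board_w = split_heat(250, fraction)
    print(f"{label}: {cooler_w:.0f} W to cooler, {board_w:.0f} W stays behind")
```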

My dislike of AMD's "95°C is fine" take isn't one-dimensional. It has implications, practical ones, that I do not like. It looks like this won't be as much of an issue for the mid-range, but I'm still wondering if AMD can do better there. I would not mind them breaking cooler compatibility if it meant improving heat transfer enough to get rid of that 10°C difference.

I'd love to see more testing on how these higher temps affect other components, especially in more constrained environments, but most review sites and testers use open cases or setups where heat buildup is less of an issue. That is, obviously, not the case for 99% of people out there. I don't even want to imagine OEM machines with Ryzen 7 in them and how they'll perform. They'll probably have to enforce the "ECO" modes, the same way OEMs enforce the 65W/125W modes on Intel chips.

Regards.