The M1 Ultra is a 5nm SoC that packs the power of two M1 Max chips.

Apple M1 Ultra SoC Cranks Mac Performance with 20-Core CPU, 64-core GPU


I don't care how good it is if it comes with a ridiculous premium and macOS. Make these good, power-efficient CPUs, pair them with NVIDIA laptop GPUs, and make a great gaming laptop with Windows 10.

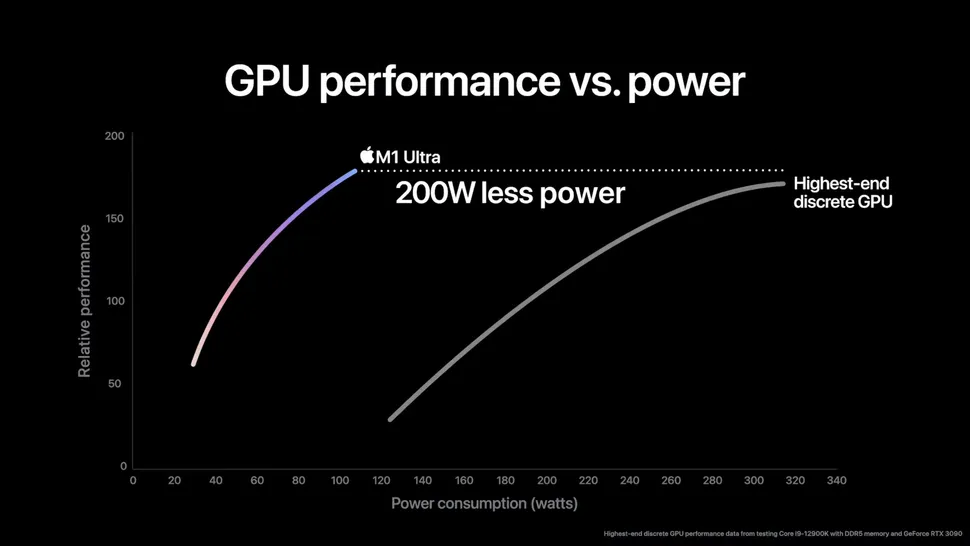

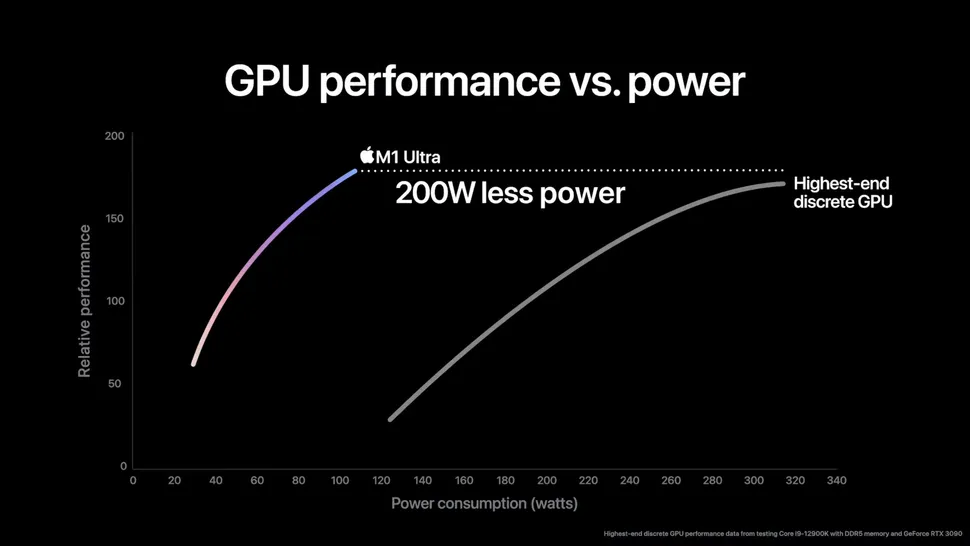

> Kind of hard to say how good it is when they don't give any information on how much power it uses.

It's on the x-axis of this chart.

> Are you that scared of macOS and other Unix/Linux-based operating systems? These days the OSes are all basically the same from a feature point of view, with just a different GUI slapped on top. Other than games, Windows really doesn't offer anything over the other operating systems. I haven't seen a productivity/developer tool made only for Windows in about a decade.

I would argue that on the Linux side, it depends. For one thing, I really don't like the default app management and distribution system of most Linux-based OSes. I tried installing a specific version of Python, and it took me 6-7 steps to get to the point where I could finally type "python" at the command line and get going. On Windows? Download the installer, install, done.

> Apple people will buy anything, regardless of what the charts or technical details show 🤣

If you're locked into the Apple ecosystem for software reasons, what other options do you have? It doesn't matter what the charts show; you still have to buy from the limited number of configurations Apple offers.

> Testing was conducted by Apple in February 2022 using preproduction Mac Studio systems with Apple M1 Max, 10-core CPU and 32-core GPU, and preproduction Mac Studio systems with Apple M1 Ultra, 20-core CPU and 64-core GPU. Performance was measured using select industry‑standard benchmarks. Popular discrete GPU performance data tested from Core i9-12900K with DDR5 memory and GeForce RTX 3060 Ti. Highest-end discrete GPU performance data tested from Core i9-12900K with DDR5 memory and GeForce RTX 3090. Performance tests are conducted using specific computer systems and reflect the approximate performance of Mac Studio.

If I'm reading this correctly, they compared the M1 Ultra GPU to the M1 Max GPU in one line and the RTX 3090 against an RTX 3060 Ti on the other.

> CPU. Plus GPU. Plus 128 gigs of memory. 114 billion transistors. One chip?

$5,800 for that configuration with a 1TB SSD. You can build a pretty stout PC for that amount. Are a smaller case and lower power usage worth over $2,000? Not to most people.

If this delivers then let Apple bask in the well-earned glory.

> Are you that scared of macOS and other Unix/Linux-based operating systems? These days the OSes are all basically the same from a feature point of view, with just a different GUI slapped on top. Other than games, Windows really doesn't offer anything over the other operating systems. I haven't seen a productivity/developer tool made only for Windows in about a decade.

I have a MacBook for photo and video editing, and you are right that most productivity apps are available for both. But macOS is slim on games. I, and many others, heavily prefer Windows for various reasons.

> Even Intel would be ashamed of the amount of nonsense in those slides.
>
> "Our integrated graphics are faster than a 3090 while drawing 100 watts!"
>
> "Oh wow! What are they faster at?"
>
> "You know... things. <_< "
>
> No doubt they could be faster at certain workloads that use specific baked-in hardware features, for example if their chip has hardware support for encoding a particular video format while another chip lacks that support and must fall back on general-purpose hardware. But I really doubt we're going to see overall graphics performance anywhere remotely close to a 3090 from that chip.

Agreed. The M1 SoC has the Neural Engine (an AI accelerator) and an image processor on the SoC itself. The load they are benchmarking could be an image or AI load. It could also be a load that simply favors an SoC over a discrete GPU.


As for the OSes all being basically the same: if you strip it down to the kernel-level stuff, then yeah, I'd agree that for the most part, Windows and UNIX have enough similarities that arguing anything is purely academic.

> Brew or apt-get python. Done.

Yes, for whatever's on the repo. But if I need a specific version of Python, then I have to go through a lot of steps to get it installed.